Editor’s Note: This post was originally published on October 23, 2018. Zoom recently started using WebRTC’s DataChannels so we have added some new details at the end in the DataChannels section.

Zoom has a web client that allows a participant to join meetings without downloading their app. Chris Koehncke was excited to see how this worked (watch him at the upcoming KrankyGeek event!) so we gave it a try. It worked, removing the download barrier. The quality was acceptable and we had a good chat for half an hour.

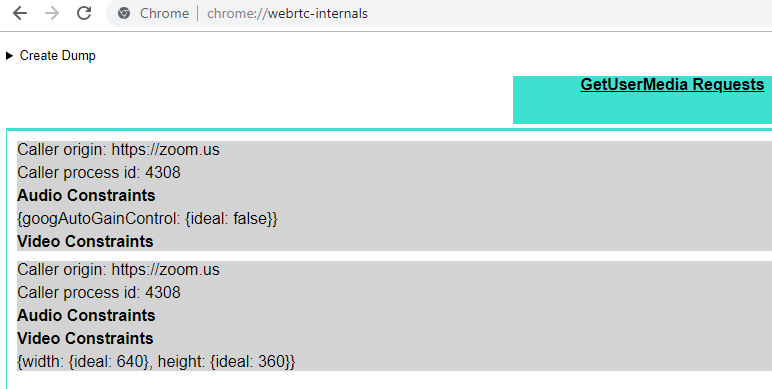

Opening chrome://webrtc-internals showed only getUserMedia being used for accessing camera and microphone but no RTCPeerConnection like a WebRTC call should have. This got me very interested – how are they making calls without WebRTC?

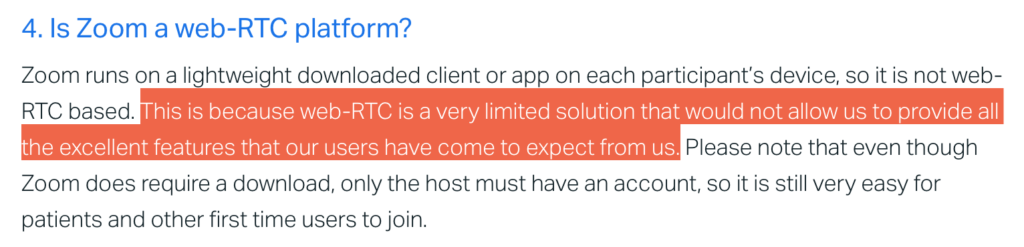

Why don’t they use WebRTC?

The relationship between Zoom and WebRTC is a difficult one as shown in this statement from their website:

The Jitsi folks just did a comparison of the quality recently in response to that. Tsahi Levent-Levi had some useful comments on that as well.

So let’s take a quick look at the “excellent features” under quite interesting circumstances — running in Chrome.

The Zoom Web client

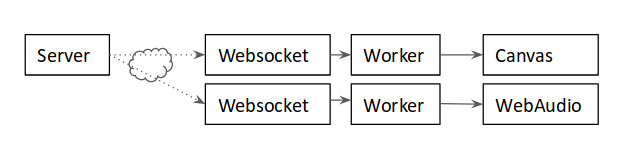

Chrome’s network developer tools quickly showed two things:

- WebSockets are used for data transfer

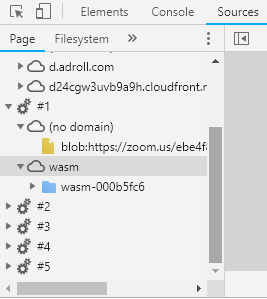

- there are workers loading WebAssembly (wasm) files

The WebAssembly file names quickly led to a GitHub repository where those files, along with some of the other JavaScript components, are hosted. The files are mostly the same as the ones used in production.

Media over WebSockets

The overall design is quite interesting. It uses WebSockets to transfer the media which is certainly not an optimal choice. It is similar to using TURN/TCP in WebRTC — it has a quality impact and will not work well in quite a number of cases. The general problem of doing realtime media over TCP is that packet loss can lead to resends and increased delay. Tsahi described this over at TestRTC a while ago, showing the impact on bitrate and other things.
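To make the head-of-line blocking problem concrete, here is a toy model (not a measurement of Zoom's client). It assumes packets are sent at a fixed interval, exactly one packet is lost once, and the reliable transport needs a fixed extra delay to resend it. The function name and all numbers are made up for illustration.

```javascript
// Toy model of one lost packet on a reliable, ordered transport (TCP/WebSocket)
// versus an unreliable one. All parameters are illustrative assumptions.
function deliveryTimes({ count, intervalMs, oneWayDelayMs, lostIndex, retransmitDelayMs }) {
  const ordered = [];   // delivery time per packet on the reliable, in-order transport
  const unordered = []; // delivery time per packet on the unreliable transport (null = lost)
  let lastDelivered = 0;
  for (let i = 0; i < count; i++) {
    const arrival = i * intervalMs + oneWayDelayMs;
    const lost = i === lostIndex;
    // The reliable transport resends the lost packet, so it arrives late...
    const reliableArrival = lost ? arrival + retransmitDelayMs : arrival;
    // ...and every packet behind it waits, because delivery must stay in order.
    lastDelivered = Math.max(lastDelivered, reliableArrival);
    ordered.push(lastDelivered);
    unordered.push(lost ? null : arrival);
  }
  return { ordered, unordered };
}

const holDemo = deliveryTimes({
  count: 5, intervalMs: 20, oneWayDelayMs: 50, lostIndex: 1, retransmitDelayMs: 200,
});
console.log(holDemo.ordered);   // [50, 270, 270, 270, 270]
console.log(holDemo.unordered); // [50, null, 90, 110, 130]
```

In this model the reliable transport delays every packet behind the lost one, while the unreliable transport drops a single packet and keeps the rest on time. A real-time decoder can usually conceal one missing packet more easily than a 200 ms stall.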

The primary advantage of using media over WebSockets is that it might pass firewalls where even TURN/TCP and TURN/TLS could be blocked. And it certainly avoids the issue of WebRTC TURN connections not getting past authenticated proxies. That was a long-standing issue in Chrome’s WebRTC implementation that was only resolved last year.

Data received on the WebSockets goes into a WebAssembly (WASM) based decoder. Audio is fed to an AudioWorklet in browsers that support that. From there the decoded audio is played using the WebAudio “magic” destination node.

Video is painted to a canvas. This is surprisingly smooth and the quality is quite high.

In the other direction, WebAudio captures the audio from the getUserMedia call, which is sent to a WebAssembly encoder worker and then delivered via WebSocket. Video capture happens with a resolution of 640×360 and, unsurprisingly, is grabbed from a canvas before being sent to the WebAssembly encoder.

The WASM files seem to contain the same encoders and decoders as Zoom’s native client, meaning the gateway doesn’t have to do transcoding. Instead it is probably little more than a websocket-to-RTP relay, similar to a TURN server. The encoded video is somewhat pixelated at times and Mr. Kranky even complained about staircase artifacts. While the CPU usage of the encoder is rather high (at 640×360 resolution) this might not matter as the user will simply blame Chrome and use the native client the next time.

H.264

Delivering the media engine as WebAssembly is quite interesting, as it allows for supporting codecs not supported by Chrome/WebRTC. This is not entirely novel: FFmpeg compiled with emscripten has been done many times before, and emscripten seems to have been used here as well. Delivering the encoded bytes via WebSockets allowed inspecting their content using Chrome’s excellent debugging tools and showed an H264 payload with an RTP header and some framing:

    02000000
    9062ae85bb9c9d7801000401bede0004124000003588b8021302135000000000
    1c800000016764001eac1b1a68280bde54000000 ...

To my surprise, the Network Abstraction Layer Unit (NALU) did not indicate H264-SVC.
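The dump above can be decoded by hand, and a small parser makes the fields easier to see. This sketch assumes that the second line of the dump is an RTP header as defined in RFC 3550 and that the third line is H.264 data. That is a reading of the dump, not something Zoom documents. The helper names are hypothetical.

```javascript
// Convert a hex string such as "9062ae85" into bytes.
function hexToBytes(hex) {
  const bytes = new Uint8Array(hex.length / 2);
  for (let i = 0; i < bytes.length; i++) {
    bytes[i] = parseInt(hex.slice(i * 2, i * 2 + 2), 16);
  }
  return bytes;
}

// Parse the fixed RTP header (RFC 3550, section 5.1) and, if the X bit is set,
// the header extension that follows it (section 5.3.1).
function parseRtpHeader(bytes) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const header = {
    version: bytes[0] >> 6,
    padding: (bytes[0] & 0x20) !== 0,
    extension: (bytes[0] & 0x10) !== 0,
    csrcCount: bytes[0] & 0x0f,
    marker: (bytes[1] & 0x80) !== 0,
    payloadType: bytes[1] & 0x7f,
    sequenceNumber: view.getUint16(2),
    timestamp: view.getUint32(4),
    ssrc: view.getUint32(8),
  };
  let offset = 12 + 4 * header.csrcCount;
  if (header.extension) {
    header.extensionProfile = view.getUint16(offset);
    header.extensionWords = view.getUint16(offset + 2); // length in 32-bit words
    offset += 4 + 4 * header.extensionWords;
  }
  header.headerLength = offset;
  return header;
}

// The NAL unit type is the low five bits of the first byte of a NAL unit.
function nalUnitType(byte) {
  return byte & 0x1f;
}

const rtp = parseRtpHeader(
  hexToBytes('9062ae85bb9c9d7801000401bede0004124000003588b8021302135000000000')
);
console.log(rtp.version, rtp.payloadType, rtp.extensionProfile.toString(16), rtp.headerLength);
// 2 98 'bede' 32
```

Read this way, the second line is exactly one RTP header: 12 fixed bytes plus a 20-byte header extension using the 0xbede one-byte extension profile. In the third line, `nalUnitType(0x1c)` is 28 and `nalUnitType(0x67)` is 7. Neither is 14, 15 or 20, the NAL unit types that H.264 reserves for SVC, which matches the observation above.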

Comparison to WebRTC

As a summary, let us compare where the Zoom Web client differs from the WebRTC standard (either the W3C specification or the various IETF drafts):

| Feature | Zoom Web client | WebRTC/RTCWeb Specifications |
| --- | --- | --- |
| Encryption | plain RTP over secure Websocket | DTLS-SRTP |
| data channels | n/a? | SCTP-based |
| ICE | n/a for Websockets | RFC 5245 (RFC 8445) |
| Audio codec | unknown (yet) | Opus |
| Multiple streams | not observed (yet) | Chrome implements the spec finally |
| Simulcast | not observed in the web client | extension spec |

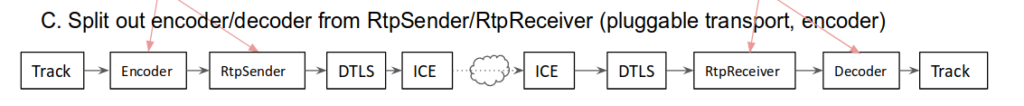

WebRTC, Next Version

Even though WebRTC 1.0 is far from done (and most developers are still using something that is dubbed the “legacy API”) there is a lot of discussion about the “next version”.

The overall design of the Zoom web client strongly reminded me of what Google’s Peter Thatcher presented as a proposal for WebRTC NV at the Working Group’s face-to-face meeting in Stockholm earlier this year. See the slides (starting on page 26).

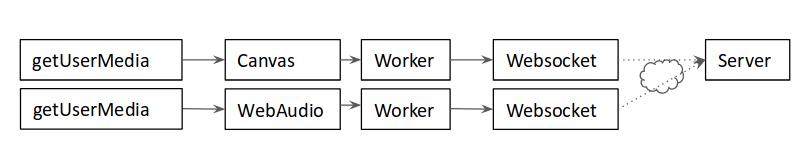

If we were to rebuild WebRTC in 2018, we might have taken a similar approach to separate the components. Basically taking these steps:

- compile webrtc.org encoders/decoders to wasm

- connect decoders with canvas and WebAudio for “playout”

- connect encoders with getUserMedia for input

- send encoded media via (unreliable) datachannels for transport

- connect RTCDataChannel feedback metrics to audio/video encoder somehow

The approach is visualized on one of the slides from the working group’s meeting materials:

That proposal has some very obvious technical advantages over the Zoom approach. For instance, using RTCDataChannels for transferring the data gives much better congestion control properties than WebSockets, in particular if there is packet loss.

The big advantage of the design is that it would be possible to separate the encoder and decoder (as well as related things like the RTP packetization) from the browser, allowing custom versions. The main problem is finding a good way to get the data processing off the main thread in a high-performance way, including hardware acceleration. This has been one of the big challenges in Chrome in the early days and I remember many complaints about the sandbox making things difficult. Zoom seems to work ok but we only tried a 1:1 chat and the typical WebRTC app is a bit more demanding than that. Reusing building blocks like MediaStreamTrack for data transfer from and to workers would also be preferable to fiddling with Canvas elements and WebAudio.

However, as that approach would have allowed building Hangouts in Chrome without having to open source the underlying media engine I am very glad WebAssembly was not a thing in 2011 when WebRTC was conceived. So thank you Google for open sourcing webrtc.org under a three clause BSD license.

Update September 2019: WebRTC DataChannel

As Mozilla’s Nils Ohlmeier pointed out, Zoom switched to using WebRTC DataChannels for transferring media:

Looks like @zoom_us has switched it's web client from web sockets to #WebRTC data channels. Performance a lot better compared to their old web client. pic.twitter.com/SQhP9XhHXP

— Nils Ohlmeier (@nilsohlmeier) September 5, 2019

chrome://webrtc-internals tells us a bit more about how this works. See a dump here which can be imported with my tool here.

No STUN/TURN = fallback to WebSockets

Looking at the RTCPeerConnection configuration the most notable thing is that iceServers is configured as an empty array which means no STUN or TURN servers are used:

This makes sense as TCP fallback is most likely still provided by the previous WebSocket implementation and no TURN infrastructure is required just for the web client. Indeed, when UDP is blocked the client falls back to WebSockets and tries to establish a new RTCPeerConnection every ten seconds.

Standard PeerConnection setup but with SDP munging

There are two PeerConnections, each of which creates an unreliable DataChannel, one labelled ZoomWebclientAudioDataChannel, the other labelled ZoomWebclientVideoDataChannel. Using two connections for this isn’t necessary but was probably easier to fit into the existing WebSocket architecture.
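A minimal sketch of that setup follows. It assumes that “unreliable” here means unordered delivery without retransmissions, since the dump does not tell us which channel options Zoom actually passes. The `createMediaChannel` helper is hypothetical. The constructor is passed in as a parameter so that the sketch also runs outside a browser.

```javascript
// `PeerConnection` is injected; in a page you would pass the global RTCPeerConnection.
function createMediaChannel(PeerConnection, label) {
  // Empty iceServers: no STUN or TURN servers are used.
  const pc = new PeerConnection({ iceServers: [] });
  // Assumption: unordered, no retransmissions. Zoom's actual options are not known.
  const channel = pc.createDataChannel(label, { ordered: false, maxRetransmits: 0 });
  return { pc, channel };
}

// In a browser, one connection per media type, as observed in the dump:
// const audio = createMediaChannel(RTCPeerConnection, 'ZoomWebclientAudioDataChannel');
// const video = createMediaChannel(RTCPeerConnection, 'ZoomWebclientVideoDataChannel');
```

Both channels could equally be created on a single RTCPeerConnection by calling `createDataChannel` twice, which would avoid a second ICE and DTLS handshake.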

After that, createOffer is called followed by setLocalDescription. While this is pretty standard, there is an interesting thing going on here: the a=ice-ufrag line is changed before setLocalDescription is called, replacing Chrome’s rather short username fragment (ufrag) with a lengthy uuid. This is called SDP munging and is generally frowned upon due to potential interoperability issues. Surprisingly, Firefox allows this, which means more work for Nils and his Mozilla team. We’ll discuss what the purpose of this might be below.
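The munging step itself is just a string replacement on the offer. The sketch below shows the general technique, not Zoom's actual code. The `mungeUfrag` and `createMungedOffer` names are hypothetical, and the replacement ufrag is whatever identifier the application wants to use.

```javascript
// Replace every a=ice-ufrag line in an SDP blob with the given ufrag.
function mungeUfrag(sdp, newUfrag) {
  // The m flag lets ^ and $ match at each line; "." stops before \r and \n,
  // so the original line endings are preserved.
  return sdp.replace(/^a=ice-ufrag:.*$/gm, () => 'a=ice-ufrag:' + newUfrag);
}

// Apply the munged SDP between createOffer and setLocalDescription.
async function createMungedOffer(pc, newUfrag) {
  const offer = await pc.createOffer();
  const munged = { type: offer.type, sdp: mungeUfrag(offer.sdp, newUfrag) };
  await pc.setLocalDescription(munged);
  return munged;
}
```

Whether a browser accepts a modified ufrag in setLocalDescription is up to the implementation, which is exactly the interoperability risk mentioned above.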

Simple server setup

Next we see the answer from the server. It uses ice-lite, which is common for servers as it is easy to implement, and contains a single host candidate. That candidate is then also added via addIceCandidate, which is a bit superfluous but explains the double occurrence in Nils’ screenshot. The answer also specifies a=setup:passive, which means the browser is acting as the DTLS client, probably to reduce the server complexity.
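These properties can be read straight from the answer SDP. The sketch below pulls them out of a hand-written, hypothetical answer. The address is from a documentation range and only the port and attribute names come from the dump. `summarizeAnswer` is an illustrative helper, not part of any WebRTC API.

```javascript
// Extract the ICE and DTLS properties discussed above from an SDP answer.
function summarizeAnswer(sdp) {
  const lines = sdp.split(/\r?\n/);
  const setupLine = lines.find((line) => line.startsWith('a=setup:'));
  return {
    iceLite: lines.includes('a=ice-lite'),
    // "passive" means the answerer waits for the DTLS ClientHello.
    setup: setupLine ? setupLine.slice('a=setup:'.length) : null,
    candidates: lines
      .filter((line) => line.startsWith('a=candidate:'))
      .map((line) => {
        // foundation component transport priority address port "typ" type
        const parts = line.slice('a=candidate:'.length).split(' ');
        return {
          transport: parts[2].toLowerCase(),
          address: parts[4],
          port: Number(parts[5]),
          type: parts[7],
        };
      }),
  };
}

// Hypothetical answer; only the attribute names and port 8801 mirror the dump.
const exampleAnswer = [
  'v=0',
  'a=ice-lite',
  'm=application 8801 UDP/DTLS/SCTP webrtc-datachannel',
  'a=setup:passive',
  'a=candidate:1 1 udp 2130706431 203.0.113.5 8801 typ host',
  '',
].join('\r\n');
console.log(summarizeAnswer(exampleAnswer));
```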

Quite noticeable is that we see the same server-side port 8801 in both Nils’ screenshot and the dump we gathered. This is no coincidence: Zoom’s native client runs on the same port. This means that all UDP packets have to be demultiplexed into sessions, which is typically done by creating an association between the incoming UDP packets and the ufrag of the STUN requests from those packets. This is probably also the reason for munging the ufrag. This is a bit silly: demultiplexing works just as well with just the server-side ufrag.
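To illustrate that last point, here is a sketch of demultiplexing on a shared UDP port using only the server-side ufrag. It relies on the ICE rule (RFC 8445) that the USERNAME attribute of a STUN binding request is the receiver's ufrag, a colon, then the sender's ufrag. The class and session objects are hypothetical, and STUN parsing and integrity checks are left out.

```javascript
// Map packets arriving on one UDP port to sessions, keyed by the server ufrag.
class SessionDemuxer {
  constructor() {
    this.byServerUfrag = new Map(); // server ufrag -> session
    this.byRemote = new Map();      // "ip:port" -> session
  }

  // The server picks a unique ufrag for each session when it creates its answer.
  addSession(serverUfrag, session) {
    this.byServerUfrag.set(serverUfrag, session);
  }

  // Called for STUN binding requests; `username` is the decoded USERNAME attribute,
  // which for a request sent by the browser is "<server ufrag>:<browser ufrag>".
  onStunRequest(remote, username) {
    const serverUfrag = username.split(':')[0];
    const session = this.byServerUfrag.get(serverUfrag) || null;
    if (session) this.byRemote.set(remote, session);
    return session;
  }

  // Called for every other packet (DTLS and the SCTP data inside it).
  lookup(remote) {
    return this.byRemote.get(remote) || null;
  }
}
```

With this approach the browser's ufrag never needs to be known in advance, so the offer can be left unmodified.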

Traffic inspection does not reveal anything new

Inspecting the traffic is a bit more complicated. Since it’s JavaScript, one can use the prototype override approach used by the WebRTC-Externals extension to snoop on any call to RTCDataChannel.prototype.send. Unsurprisingly, the payload of the packets is the same as the one sent via WebSockets.
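The prototype override technique looks roughly like this. It is a generic sketch rather than the extension's actual code. `snoopSend` is a hypothetical helper that takes the prototype as a parameter so it can be tried outside a browser.

```javascript
// Wrap proto.send so that every call is reported to onSend before being forwarded.
function snoopSend(proto, onSend) {
  const originalSend = proto.send;
  proto.send = function (data) {
    onSend(this, data); // `this` is the channel the page is sending on
    return originalSend.apply(this, arguments);
  };
  // Return a function that restores the original method.
  return function restore() {
    proto.send = originalSend;
  };
}

// In a browser, before the page creates its channels:
// snoopSend(RTCDataChannel.prototype, (channel, data) => {
//   console.log(channel.label, data);
// });
```

The override has to be installed before the application calls send, which is why an extension's content script is a convenient place for it.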

This update to the Zoom web client will, as Nils pointed out, most likely improve the quality quite a bit, since it was previously limited by the transfer via WebSockets over TCP. While the client is now using WebRTC, it continues to avoid using the WebRTC media stack.

{“author”: “Philipp Hancke“}

Thanks for write-up. Did zoom write their h264 codec? In order to use emscripten they would probably need the source for a codec, right? I know the broadway decoder has been out for a few years, but I think that only works with baseline streams.

I think the reason they use RTCDataChannel is that they want to decode the media on their own. Hence, they can use their (proprietary) decoder compiled to wasm.

As of April 2023 Zoom appears to have reworked their web client again, this time to use Web Audio. This was noticed by the Chrome/Chromium community when the built-in Live Captions no longer worked for Zoom. https://bugs.chromium.org/p/chromium/issues/detail?id=1435138

Since this page was so informative back in 2018/2019, thought you might like to know as a followup blog post would be very interesting.

Thanks Myk! It would be interesting to do a deep dive on Zoom’s web client again.