It has been a few years since the WebRTC codec wars ended in a detente. H.264 has been around for more than 15 years, so it is easy to gloss over the many intricacies that make it work. Renowned hackathon star, live-coder, and |pipe| CTO Tim Panton was working on a drone project where he needed […]

raspberry pi

Part 2: Building an AIY Vision Kit Web Server with UV4L

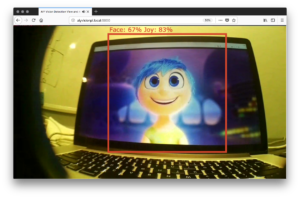

In part 1 of this set, I showed how one can use UV4L with the AIY Vision Kit to send the camera stream and any of the default annotations to any point on the Web with WebRTC. In this post I will build on this by showing how to send image inference data over a WebRTC […]

AIY Vision Kit Part 1: TensorFlow Computer Vision on a Raspberry Pi Zero

A couple of years ago I did a TADHack where I envisioned a cheap, low-powered camera that could run complex computer vision and stream remotely when needed. After considering what it would take to build something like this myself, I waited patiently for this tech to come. Today with Google’s new AIY Vision Kit, we are […]

SDP: The worst of all worlds or why compromise can be a bad idea (Tim Panton)

As WebRTC implementations and field trials evolve, field experience is telling us that a number of open issues still stand in the way of making this technology deployable in the real world, and that we would probably do some things differently if we started all over again. As an example, see the recent W3C discussion What […]