I am working on a personal Chrome Extension project where I need a way to convert a video file – like your standard mp4 – into a media stream, all within the browser. Adding a file as a src to a Video Element is easy enough. How hard could it be to convert a video […]

Guide

Real-Time Video Processing with WebCodecs and Streams: Processing Pipelines (Part 1)

WebRTC used to be about capturing some media and sending it from Point A to Point B. Machine learning has changed this. Now it is common to use ML to analyze and manipulate media in real time for things like virtual backgrounds, augmented reality, noise suppression, intelligent cropping, and much more. To better accommodate this […]

Calculating True End-to-End RTT (Balázs Kreith)

Balázs Kreith of the open-source WebRTC monitoring project ObserveRTC shows how to calculate WebRTC latency – aka Round Trip Time (RTT) – in p2p scenarios and end-to-end across one or more SFUs. WebRTC’s getStats provides relatively easy access to RTT values, but using those values in a real-world environment to get accurate results is more difficult. He provides a step-by-step guide using some simple Docker examples that compute end-to-end RTT with a single SFU and in cascaded SFU environments.
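As a rough illustration (not ObserveRTC's code), the sketch below reads the current RTT of the selected ICE candidate pair from `getStats()` output and approximates end-to-end RTT by summing per-hop RTTs (client to SFU, SFU to SFU, SFU to client). Two simplifications are assumed: summing hops ignores SFU processing delay, and picking the pair with `state === "succeeded"` and `nominated === true` is only a heuristic for "selected". The SFU-side hop values would have to come from the SFU's own stats. `StatsEntry`, `selectedPairRtt`, and `endToEndRtt` are hypothetical names.

```typescript
// Minimal, hypothetical subset of the getStats() entry fields this sketch reads.
interface StatsEntry {
  type: string;
  state?: string;
  nominated?: boolean;
  currentRoundTripTime?: number; // seconds, reported on "candidate-pair" entries
}

// Heuristic: return the RTT (seconds) of the first succeeded, nominated candidate pair.
function selectedPairRtt(stats: Iterable<StatsEntry>): number | undefined {
  for (const s of stats) {
    if (
      s.type === "candidate-pair" &&
      s.state === "succeeded" &&
      s.nominated === true &&
      typeof s.currentRoundTripTime === "number"
    ) {
      return s.currentRoundTripTime;
    }
  }
  return undefined;
}

// Simplified model (assumption): end-to-end RTT is the sum of per-hop RTTs,
// ignoring any processing time inside the SFUs.
function endToEndRtt(hopRttsSeconds: number[]): number {
  return hopRttsSeconds.reduce((sum, rtt) => sum + rtt, 0);
}

// Browser usage (not run here):
//   const report = await pc.getStats();
//   const clientToSfu = selectedPairRtt(report.values() as Iterable<StatsEntry>);
```

For a cascaded path such as client A → SFU 1 → SFU 2 → client B, you would pass three hop values, e.g. `endToEndRtt([aToSfu1, sfu1ToSfu2, sfu2ToB])`, where those variables are placeholders for measurements taken on each leg.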

The Ultimate Guide to Jitsi Meet and JaaS

A full review and guide to all of the Jitsi Meet-related projects, services, and development options including self-install, using meet.jit.si, 8×8.vc, Jitsi as a Service (JaaS), the External iFrame API, lib-jitsi-meet, and the Jitsi React libraries among others.

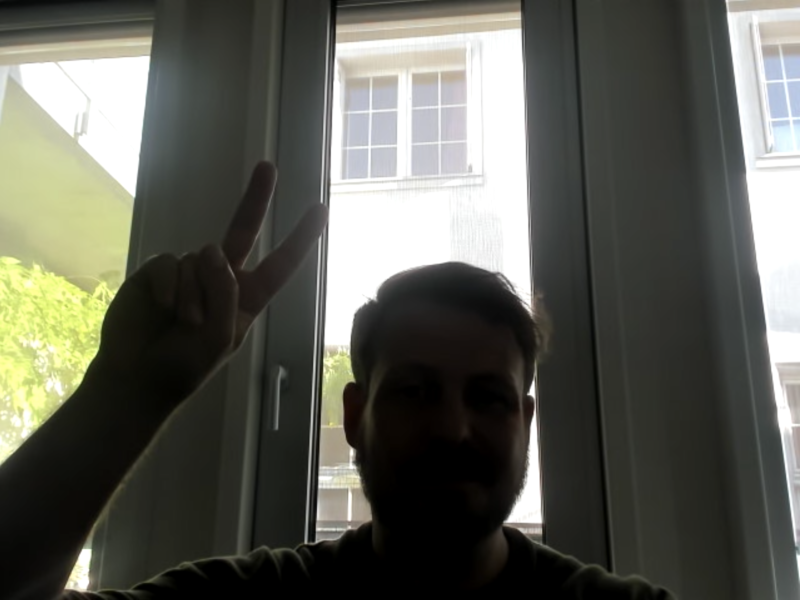

Fix Bad Lighting with JavaScript Webcam Exposure Controls (Sebastian Schmid)

Step-by-step guide on how to fix bad webcam lighting in your WebRTC app with standard JavaScript APIs for camera exposure, or natively with UVC drivers.
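One way to drive exposure from JavaScript is through the constraints defined in the W3C MediaStream Image Capture spec (`exposureMode`, `exposureTime`), which only some browsers and cameras expose. The sketch below is not taken from the article: it checks `getCapabilities()`-style output for manual mode and clamps a desired exposure time into the reported range before building an `applyConstraints()` argument. `ExposureCaps`, `clampToRange`, and `manualExposureConstraints` are hypothetical names, and the exposure value is assumed to be in whatever units the camera reports through its capabilities.

```typescript
// Hypothetical subset of the capabilities returned by MediaStreamTrack.getCapabilities().
interface RangeCap { min: number; max: number; step?: number; }
interface ExposureCaps {
  exposureMode?: string[];
  exposureTime?: RangeCap;
}

// Clamp a value into [min, max] and snap it to the capability's step, if one is reported.
function clampToRange(value: number, range: RangeCap): number {
  const clamped = Math.min(range.max, Math.max(range.min, value));
  if (!range.step) return clamped;
  const steps = Math.round((clamped - range.min) / range.step);
  return Math.min(range.max, range.min + steps * range.step);
}

// Build an applyConstraints() argument requesting manual exposure,
// or return null if the camera does not advertise manual exposure support.
function manualExposureConstraints(
  caps: ExposureCaps,
  desiredExposureTime: number,
): { advanced: Array<{ exposureMode: string; exposureTime: number }> } | null {
  if (!caps.exposureMode?.includes("manual") || !caps.exposureTime) return null;
  return {
    advanced: [
      { exposureMode: "manual", exposureTime: clampToRange(desiredExposureTime, caps.exposureTime) },
    ],
  };
}

// Browser usage (not run here):
//   const [track] = (await navigator.mediaDevices.getUserMedia({ video: true })).getVideoTracks();
//   const c = manualExposureConstraints(track.getCapabilities() as ExposureCaps, 300);
//   if (c) await track.applyConstraints(c as MediaTrackConstraints);
```

Returning `null` rather than throwing leaves the caller free to fall back to the camera's automatic exposure when manual control isn't available.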