How to use the AWS API Gateway WebSocket API functionality with Lambda functions to implement a serverless WebRTC signaling architecture

signaling

The Minimum Viable SDP

One evening last week, I was nerd-sniped by a question Max Ogden asked: That is quite an interesting question. I somewhat dislike using Session Description Protocol (SDP) in the signaling protocol anyway and prefer nice JSON objects for the API and ugly XML blobs on the wire to the ugly SDP blobs used by […]
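Carrying SDP inside JSON objects for an application API is straightforward because SDP is line-oriented. The sketch below assumes only the generic SDP syntax from RFC 4566: one `<type>=<value>` pair per line, session-level lines first, and a new media section at each `m=` line. The function names `sdp_to_json` and `json_to_sdp` and the JSON layout are hypothetical illustrations, not the approach taken in the post.

```python
import json


def sdp_to_json(sdp: str) -> dict:
    """Split an SDP blob into session-level lines and per-m= media sections."""
    session: list[list[str]] = []
    media: list[dict] = []
    target = session  # lines belong to the session until the first m= line
    for line in sdp.splitlines():
        if not line:
            continue  # tolerate a trailing CRLF or blank lines
        key, sep, value = line.partition("=")
        if not sep or len(key) != 1:
            raise ValueError(f"malformed SDP line: {line!r}")
        if key == "m":
            section = {"m": value, "lines": []}
            media.append(section)
            target = section["lines"]  # subsequent lines describe this media
        else:
            target.append([key, value])
    return {"session": session, "media": media}


def json_to_sdp(obj: dict) -> str:
    """Rebuild the SDP text (CRLF line endings) from the JSON layout above."""
    out = [f"{k}={v}" for k, v in obj["session"]]
    for section in obj["media"]:
        out.append(f"m={section['m']}")
        out.extend(f"{k}={v}" for k, v in section["lines"])
    return "\r\n".join(out) + "\r\n"


if __name__ == "__main__":
    sample = (
        "v=0\r\no=- 0 0 IN IP4 127.0.0.1\r\ns=-\r\nt=0 0\r\n"
        "m=audio 9 UDP/TLS/RTP/SAVPF 111\r\na=rtpmap:111 opus/48000/2\r\n"
    )
    print(json.dumps(sdp_to_json(sample), indent=2))
```

The JSON form keeps line order, so converting back yields the same SDP text that a browser's remote-description API expects.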

Project WONDER: showing WebRTC NNI does not need SIP

As discussed in previous posts, WebRTC standards do not specify a signaling protocol. In general, this decision is positive because it gives developers the freedom to select (or invent) the protocol that best suits a particular WebRTC application's needs. It can also reduce time to market, since standards-compliance tasks are minimized. WebRTC media and […]
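Because the standards leave signaling open, an application can define its own. The sketch below shows one such application-defined protocol, assuming JSON messages with hypothetical `type`, `from`, `to`, `sdp`, and `candidate` fields. The message types mirror the offer/answer and ICE candidate exchange that WebRTC peers need, but the field names and the in-memory `relay` helper are illustrative, not taken from the post.

```python
import json

# Hypothetical message types and the fields each one must carry.
REQUIRED_FIELDS = {
    "offer": {"from", "to", "sdp"},
    "answer": {"from", "to", "sdp"},
    "candidate": {"from", "to", "candidate"},
    "bye": {"from", "to"},
}


def validate(msg: object) -> None:
    """Reject messages with an unknown type or missing required fields."""
    if not isinstance(msg, dict) or not isinstance(msg.get("type"), str):
        raise ValueError("message must be an object with a string 'type'")
    required = REQUIRED_FIELDS.get(msg["type"])
    if required is None:
        raise ValueError(f"unknown message type: {msg['type']!r}")
    missing = required - msg.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")


def encode(msg_type: str, sender: str, recipient: str, **payload) -> str:
    """Build and serialize a message ('from' is a Python keyword, hence sender)."""
    msg = {"type": msg_type, "from": sender, "to": recipient, **payload}
    validate(msg)
    return json.dumps(msg)


def decode(text: str) -> dict:
    msg = json.loads(text)
    validate(msg)
    return msg


def relay(text: str, mailboxes: dict[str, list[str]]) -> None:
    """Forward a message by its 'to' field; the SDP payload is never parsed."""
    msg = decode(text)
    mailboxes.setdefault(msg["to"], []).append(text)
```

The relay only inspects the routing fields, which is why a signaling server can stay agnostic to the media negotiation it carries.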

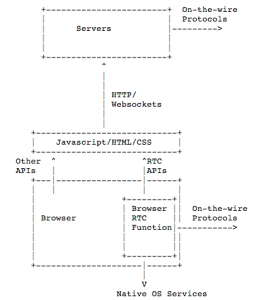

Signalling Options for WebRTC Applications

As I described in the standardization post, the model used in WebRTC for real-time, browser-based applications does not envision that the browser will contain all the functions it would need to act as a telephone or video conferencing unit. Instead, it specifies that the browser will contain the functions needed to run a Web application which would […]