Google recently announced their intent to merge Google Duo and Google Meet into one application. That, together with Gustavo Garcia’s recent blog post on how Google Meet uses WebRTC, made Philipp and Gustavo wonder what that means for the WebRTC bits of both applications. Hence we grabbed webrtc-internals dumps from both applications to do a back-to-back comparison. While there are a few Googly commonalities, the WebRTC usage of both applications is surprisingly different, as we’ll see.

Meet vs. Duo TL;DR Summary

| | Google Meet | Google Duo |
|---|---|---|
| PeerConnections | Single PeerConnection | Single PeerConnection |
| ICE / TURN | No STUN or TURN servers, ICE-TCP and nonstandard SSLTCP candidates; uses port 443 for TCP/TLS | No STUN or TURN servers, ICE-TCP and nonstandard SSLTCP candidates; uses port 443 for TCP/TLS |
| SRTP encryption | SRTP with newer AEAD_AES_256_GCM cipher suite | SRTP with old AES_CM_128_HMAC_SHA1_80 cipher suite |
| End-to-end encryption | No end-to-end encryption yet | End-to-end encryption using WebRTC Insertable Streams |
| Audio capture | Additional constraints but with default values | No constraints passed |
| Audio transmission | Opus with inband-fec and dtx. More audio codecs are supported in the SDP | Opus with inband-fec and no dtx is the only codec that is supported |
| Video capture | 720p at 24 fps | 360p at 30 fps, then 720p at 30 fps, with facingMode “user” constraint |
| Video transmission | VP9 SVC enabled through SDP munging and VP8 simulcast used for screen sharing | VP8 simulcast enabled through the addTransceiver API |
| DataChannels | 4 DataChannels, reliable and unreliable | 3 reliable DataChannels |
| RTP header extensions | AV1 dependency descriptor and video-layer-allocation extension | Non-standard generic frame descriptor extension similar to the AV1 DD |
| RTCP | transport-wide-cc bandwidth estimation, NACK/RTX retransmissions, RRTR XR packets | transport-wide-cc bandwidth estimation, NACK/RTX retransmissions |

Note: the scope of this post doesn’t include info about the native mobile versions of these apps. Duo is supposed to have relevant improvements in terms of connectivity, more efficient video codecs for battery saving and proprietary ML based audio codecs for reliability and support of low bandwidth networks but we did not verify that as part of this research.

PeerConnection

Both Meet and Duo use a single RTCPeerConnection for outgoing and incoming video streams. Historically, Duo started with unified-plan enabled by default while Google Meet took until mid-2021 to switch the SDP format.

The connections have slight configuration differences:

| Meet | Duo |
|---|---|
| Configuration: { iceServers: [], iceTransportPolicy: all, bundlePolicy: max-bundle, rtcpMuxPolicy: require, iceCandidatePoolSize: 0, sdpSemantics: "unified-plan", extmapAllowMixed: true } Legacy (chrome) constraints: { advanced: [{googScreencastMinBitrate: {exact: 100}}] } | Configuration: { iceServers: [], iceTransportPolicy: all, bundlePolicy: max-bundle, rtcpMuxPolicy: require, iceCandidatePoolSize: 0, sdpSemantics: "unified-plan", encodedInsertableStreams: true, extmapAllowMixed: true } Legacy (chrome) constraints: {} |

Duo sets the encodedInsertableStreams flag to true (which is required to get end-to-end encryption with insertable streams). Meet sets a legacy constraint to configure a minimum bitrate for screencasts (even though this connection is not used for screen sharing as we will see later). This isn’t surprising as Meet supports screen sharing while Duo does not. Note however that this constraint is going away.
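To make the difference between the two configurations explicit, here is a minimal sketch that compares them as plain objects. The `PcConfig` type and the `diffConfigKeys` helper are hypothetical names introduced for illustration; the values are copied from the table above, and the legacy constraints are left out.

```typescript
// Plain-object stand-in for the RTCPeerConnection configuration, so the
// sketch runs outside a browser. PcConfig is a hypothetical local type.
type PcConfig = Record<string, unknown>;

const meetConfig: PcConfig = {
  iceServers: [],
  iceTransportPolicy: "all",
  bundlePolicy: "max-bundle",
  rtcpMuxPolicy: "require",
  iceCandidatePoolSize: 0,
  sdpSemantics: "unified-plan",
  extmapAllowMixed: true,
};

// Duo's configuration as dumped: the same values plus encodedInsertableStreams.
const duoConfig: PcConfig = {
  ...meetConfig,
  encodedInsertableStreams: true,
};

// Return the sorted list of keys whose values differ between two configurations.
function diffConfigKeys(a: PcConfig, b: PcConfig): string[] {
  const keys = Object.keys(a).concat(Object.keys(b));
  return keys
    .filter((k, i) => keys.indexOf(k) === i) // de-duplicate
    .filter((k) => JSON.stringify(a[k]) !== JSON.stringify(b[k]))
    .sort();
}
```

Called as `diffConfigKeys(meetConfig, duoConfig)`, the helper reports `encodedInsertableStreams` as the only differing key.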

Things start to differ after that, however. The first thing Duo does is to create an offer with three audio m-lines and a data channel. It creates an offer, calls setLocalDescription and then continues to add two video m-lines. This is actually violating the specification (which Chrome still allows) since you cannot set a local description in the have-local-offer state. Firefox forbids this; however, Duo does not support Firefox, so this was probably never noticed. The answer is an ice-lite answer with candidates listed in the first m-line.

Meet adds audio and video (via the addTransceiver API these days) and then creates a simpler offer with just a single audio and video m-line at first. Then it adds three receive-only audio m-lines. ICE candidates are added via addIceCandidate instead of being listed in the SDP.

ICE / TURN connectivity

Neither Meet nor Duo configures any STUN or TURN servers – which is expected since both servers are easily reachable and support ICE-TCP and the nonstandard ssltcp variant.

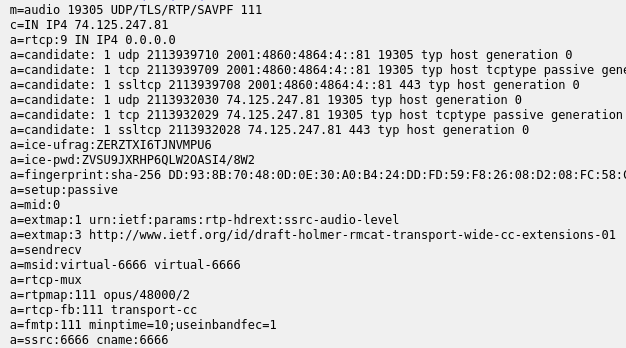

They do differ in where they put the ICE candidates – Duo puts them directly into the SDP of the answer:

a=candidate: 1 udp 2113939710 2001:4860:4864:4::81 19305 typ host generation 0

a=candidate: 1 tcp 2113939709 2001:4860:4864:4::81 19305 typ host tcptype passive generation 0

a=candidate: 1 ssltcp 2113939708 2001:4860:4864:4::81 443 typ host generation 0

a=candidate: 1 udp 2113932030 74.125.247.81 19305 typ host generation 0

a=candidate: 1 tcp 2113932029 74.125.247.81 19305 typ host tcptype passive generation 0

a=candidate: 1 ssltcp 2113932028 74.125.247.81 443 typ host generation 0

We see UDP candidates for both IPv6 and IPv4 on port 19305 as well as ICE-TCP (on port 19305) and ssltcp (on port 443) candidates which allow for TCP connectivity in cases where UDP is blocked (in particular corporate networks).
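For readers who want to inspect such candidates programmatically, the following is a rough sketch of a candidate-line parser. `ParsedCandidate` and `parseCandidateLine` are hypothetical names. As printed in this post the lines have a space right after `a=candidate:`, and the sketch treats that as an empty foundation, which is an assumption about the dump rather than something we verified.

```typescript
// Hypothetical structure for one parsed a=candidate line.
interface ParsedCandidate {
  foundation: string;
  component: number;
  transport: string; // "udp", "tcp" or the nonstandard "ssltcp"
  priority: number;
  address: string;
  port: number;
  type: string; // e.g. "host"
  extras: Record<string, string>; // trailing key/value pairs such as tcptype
}

function parseCandidateLine(line: string): ParsedCandidate {
  const prefix = "a=candidate:";
  if (line.indexOf(prefix) !== 0) {
    throw new Error("not a candidate line: " + line);
  }
  const rest = line.slice(prefix.length);
  const tokens = rest.trim().split(/\s+/);
  // Assumption: a space directly after the colon means the foundation is empty.
  const foundation = rest.charAt(0) === " " ? "" : (tokens.shift() as string);
  // Remaining layout: component transport priority address port "typ" type [key value]...
  if (tokens.length < 7 || tokens[5] !== "typ") {
    throw new Error("unexpected candidate layout: " + line);
  }
  const extras: Record<string, string> = {};
  for (let i = 7; i + 1 < tokens.length; i += 2) {
    extras[tokens[i]] = tokens[i + 1];
  }
  return {
    foundation,
    component: Number(tokens[0]),
    transport: tokens[1],
    priority: Number(tokens[2]),
    address: tokens[3],
    port: Number(tokens[4]),
    type: tokens[6],
    extras,
  };
}
```

Filtering the parsed list on `transport !== "udp"` then gives the TCP fallbacks (ICE-TCP on port 19305 and ssltcp on port 443).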

Meet sends the same types of candidates using addIceCandidate and in a slightly different order which suggests different server implementations. In addition, Meet also sends a UDP candidate for port 3478 which is the “standard” port used by many STUN servers. From our notes it is a relatively recent addition and may suggest that this port is often not blocked in enterprise environments that want to enable VoIP.

SSL-TCP candidates

The ssltcp candidates are a variant of ICE-TCP candidates that also send a “fake” SSL handshake which may fool some proxies into thinking this is a TLS connection. Whether this is still effective is unclear, given that it uses a deprecated TLS variant. Using port 443, on the other hand, is pretty much common practice.

getUserMedia audio constraints

Duo and Meet differ quite a bit with their getUserMedia audio constraints. Duo calls getUserMedia with both audio and video (twice) and only specifies audio: true.

| Meet | Duo |
|---|---|
| {deviceId: {exact: ["fbb1641ed4a296addf6969b8197d7143ab503cd4dfc96072b07a2d9ceab8eca3"]}, advanced: [{googEchoCancellation: {exact: true}}, {googEchoCancellation: {exact: true}}, {autoGainControl: {exact: true}}, {noiseSuppression: {exact: true}}, {googHighpassFilter: {exact: true}}, {googAudioMirroring: {exact: true}}]} | true (combined with video capture, see the next section) |

Meet specifies a bunch of “goog” constraints. The impact of those constraints is very low (probably none) given that most of them are set to the default values used by browser WebRTC implementations.

Audio coding adjustments

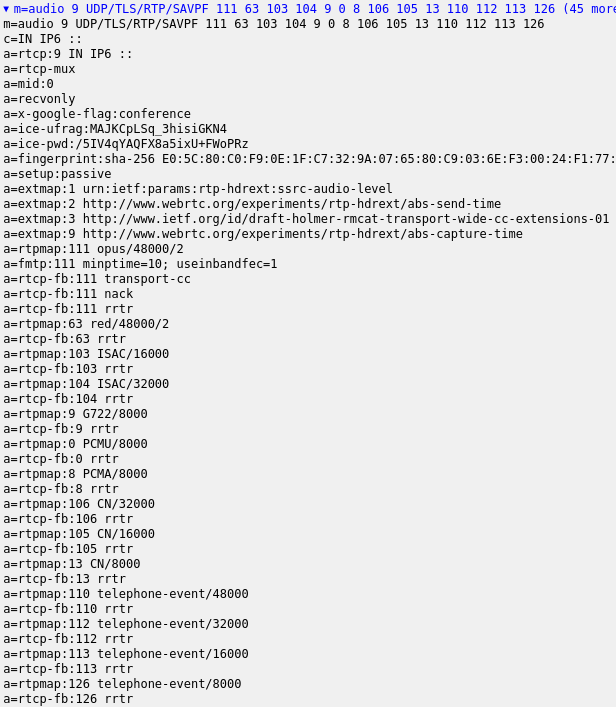

The SDP audio answers from the servers also differ considerably.

Duo answer:

Duo only responds with Opus as the audio codec, specifying the usage of inband FEC and using transport-cc for bandwidth estimation. It also uses the audio level header extension. Given Duo’s focus on end-to-end encryption, we’re not sure we would recommend sending the audio level over the network without encryption. This is however quite useful for active speaker selection in the SFU. So far there are no known attacks that reconstruct the audio from the levels.

The Meet answer is longer:

In addition to Opus we see all other audio codecs supported by libwebrtc, including audio/red. We doubt those are actually supported though.

Opus parameters

In terms of actual Opus audio encoding, we see both applications enable useinbandfec to provide a certain degree of robustness against packet loss. Other than this, Google Meet enables “dtx” support in Opus (in a later setRemoteDescription call); Duo doesn’t.
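These Opus options show up as parameters on the `a=fmtp` line of the SDP, where RFC 7587 names them `useinbandfec` and `usedtx`. The sketch below checks for both; `parseFmtp` and `opusFeatures` are hypothetical helpers, and the payload type 111 in the usage note is a made-up example, not a line captured from either application.

```typescript
// Parse "a=fmtp:<payload type> key=value;key=value" into a key/value map.
function parseFmtp(line: string): Record<string, string> {
  const params: Record<string, string> = {};
  const space = line.indexOf(" ");
  if (line.indexOf("a=fmtp:") !== 0 || space < 0) {
    return params; // not an fmtp line, or no parameters present
  }
  for (const part of line.slice(space + 1).split(";")) {
    const [key, value] = part.trim().split("=");
    if (key) {
      params[key] = value === undefined ? "" : value;
    }
  }
  return params;
}

// Report whether inband FEC and DTX are enabled on an Opus fmtp line.
function opusFeatures(fmtpLine: string): { fec: boolean; dtx: boolean } {
  const params = parseFmtp(fmtpLine);
  return {
    fec: params["useinbandfec"] === "1",
    dtx: params["usedtx"] === "1",
  };
}
```

With an illustrative line such as `a=fmtp:111 minptime=10;useinbandfec=1;usedtx=1`, `opusFeatures` reports both FEC and DTX as enabled; without the `usedtx=1` part only FEC is reported.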

Three audio stream sources

Both implementations make use of three “virtual” SSRCs. Since Chrome only renders the three loudest audio sources (a not widely known restriction that influences system design), it looks like both systems do rewriting of audio SSRCs and only forward the three loudest speakers.
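We cannot see the server side, but a loudest-speaker selection of this kind could look roughly like the sketch below. All names (`SpeakerLevel`, `assignAudioSlots`) are hypothetical, and the slot-keeping policy is our assumption, not observed behavior. Levels follow the RFC 6464 convention, where 0 is the loudest and 127 is silence.

```typescript
// One participant's current audio level (RFC 6464: 0 = loudest, 127 = silence).
interface SpeakerLevel {
  participantId: string;
  level: number;
}

// Decide which participant feeds each of the virtual audio slots (SSRCs).
// Speakers that remain among the loudest keep their slot, so the receiver
// does not see the same voice jump between SSRCs.
function assignAudioSlots(
  previous: (string | null)[],
  levels: SpeakerLevel[],
  slotCount: number = 3
): (string | null)[] {
  const loudest = levels
    .slice()
    .sort((a, b) => a.level - b.level)
    .slice(0, slotCount)
    .map((s) => s.participantId);

  // First pass: keep existing assignments that are still among the loudest.
  const slots: (string | null)[] = [];
  for (let i = 0; i < slotCount; i++) {
    const prev = i < previous.length ? previous[i] : null;
    slots.push(prev !== null && loudest.indexOf(prev) >= 0 ? prev : null);
  }

  // Second pass: put newly loud speakers into the freed slots.
  const newcomers = loudest.filter((id) => slots.indexOf(id) < 0);
  for (let i = 0; i < slotCount && newcomers.length > 0; i++) {
    if (slots[i] === null) {
      slots[i] = newcomers.shift() as string;
    }
  }
  return slots;
}
```

An SFU following this idea would rewrite the SSRC (plus sequence numbers and timestamps) of each selected speaker's packets to that of the assigned slot before forwarding them.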

getUserMedia video constraints

The video getUserMedia constraints also differ quite a bit:

| Meet | Duo |
|---|---|
| { deviceId: {exact: ["b8fa4f11cda3094f85e15d8e5396b20f35a32164c06e5c9418bffab85113f2e1"]}, advanced: [ {frameRate: {min: 24}}, {height: {min: 720}}, {width: {min: 1280}}, {frameRate: {max: 24}}, {width: {max: 1280}}, {height: {max: 720}}, {aspectRatio: {exact: 1.77778}} ] } | { width: {ideal: 360}, height: {ideal: 640}, frameRate: {ideal: 30}, facingMode: {ideal: ["user"]} } |

Duo requests 640×360 (and later 1280×720) at 30fps with a facingMode constraint to get the user-facing camera. Duo comes from the mobile world where this constraint is necessary more often.

Meet splits its audio and video getUserMedia requests. It only requests a frameRate of 24 and is a bit more specific when asking for first 720p and then 640p:

We still wonder why the 24fps restriction is in place – a frame rate first standardized for sound films in 1926.

Video coding

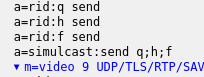

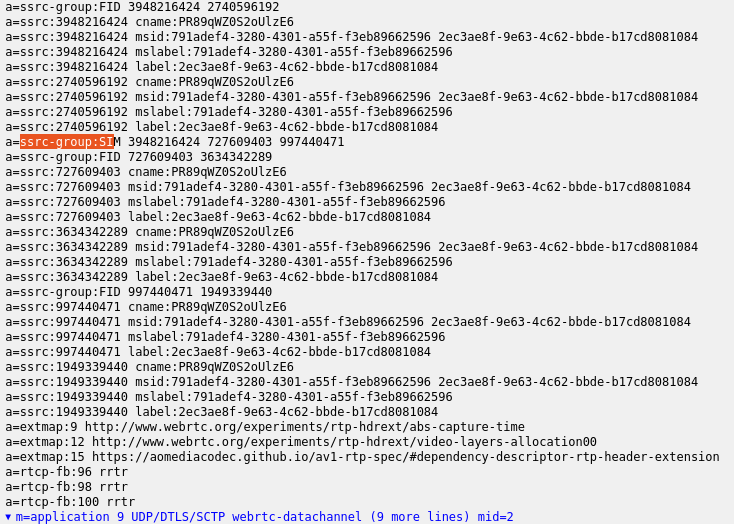

Duo is using the addTransceiver API and the so-called “spec simulcast” which uses the mid and rid header extensions shown in this SDP that enables VP8 simulcast:

VP8 vs. VP9

Google Meet has traditionally been using SDP munging to enable VP8 simulcast. This continues to be the case but with an additional twist:

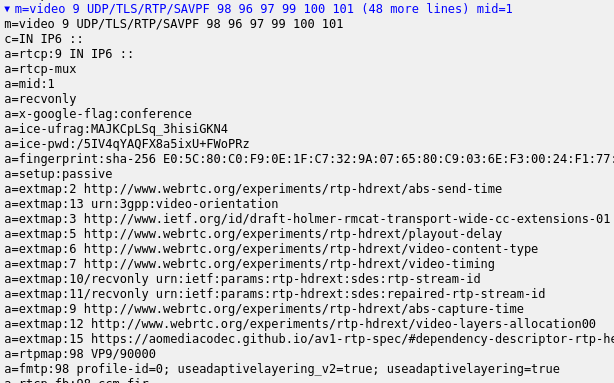

The answer from the server lists the RTP payload type 98 first:

This is for VP9 instead of VP8 (and uses a useadaptivelayering flag which is nowhere to be found in the WebRTC or Chrome code). As Iñaki Baz Castillo pointed out on twitter (in a sadly deleted thread) back in 2019, this actually enables VP9 SVC without the need for the field trial (which never rolled out). Work on the actual specification for WebRTC-SVC is actually progressing nicely (with a lot of delay). It seems the lack of this API did not stop Meet from using it. Obviously, given that VP9 SVC is using a single SSRC, munging in three SSRCs for VP9 SVC makes no sense at all.

Simulcast

In the case of Google Meet the a=x-google-flag:conference attribute is added to all the negotiated channels. This is required to enable simulcast via SDP munging (as seen in Fippo’s Simulcast Playground) but it is not needed anymore in case of Google Duo given that simulcast is enabled using the standard addTransceiver API.
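For comparison, the API-based approach needs no munging at all: the simulcast layers are described in the `sendEncodings` passed to addTransceiver. The sketch below only builds that init object as plain data so it runs outside a browser. The local types, the `simulcastInit` helper and the rid names `r0`..`r2` are our assumptions, not the values Duo uses.

```typescript
// Local stand-ins for the relevant parts of RTCRtpEncodingParameters and
// RTCRtpTransceiverInit, so the sketch does not depend on browser typings.
interface EncodingSketch {
  rid: string;
  scaleResolutionDownBy: number;
  active: boolean;
}

interface TransceiverInitSketch {
  direction: "sendonly" | "sendrecv";
  sendEncodings: EncodingSketch[];
}

// Build a simulcast init with `layers` encodings, lowest resolution first.
// Each lower layer halves width and height again.
function simulcastInit(layers: number): TransceiverInitSketch {
  const sendEncodings: EncodingSketch[] = [];
  for (let i = 0; i < layers; i++) {
    sendEncodings.push({
      rid: "r" + i, // hypothetical rid naming
      scaleResolutionDownBy: Math.pow(2, layers - 1 - i),
      active: true,
    });
  }
  return { direction: "sendonly", sendEncodings };
}

// In a browser this would be used as:
//   pc.addTransceiver(videoTrack, simulcastInit(3));
```

For a 1280×720 capture, three layers built this way correspond to 320×180, 640×360 and 1280×720.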

Screensharing

While Google Duo does not support screen sharing, we still find Google Meet’s approach to screen sharing worth mentioning. It uses a different peerconnection, probably for reasons discussed by Tsahi Levent-Levi and Philipp in this fiddle of the month.

The initial connection setup is the same as for the video connection (it even negotiates the three virtual audio ssrcs again which does not make much sense to us). However, the answer from the server is preferring VP8 as codec. Using this together with VP8 simulcast and the x-google-conference flag triggers a special screen sharing mode which is mostly focused on providing different frame rates and not different resolutions (which is a risky thing for screen sharing as the resolution matters much more there).

DataChannels

Looking at the usage of RTCDataChannel we can see that both applications make use of them.

Google Meet creates 4 data channels named dataSendChannel, collections, audioprocessor and captions. The first two are created as reliable transports and the other two as unreliable ones. Duo creates 3 data channels named gcWeb, karma and collections. Most of them don’t show traffic. It is not easy to know what they are for, but we can see a few messages in the collections data channel and a lot of messages in the audioprocessor channel when multiple participants are in the call. Google Meet uses the audioprocessor channel to provide active speaker / audio level information, given that the audio is multiplexed into three audio tracks as described above in the Audio section.
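The reliable/unreliable distinction is set through the options passed to createDataChannel. The sketch below shows one common way to express it. The exact options Meet passes are not reproduced here, so the `ordered` and `maxRetransmits` values are assumptions, and `ChannelInitSketch` and `channelInit` are hypothetical names.

```typescript
// Local stand-in for the relevant fields of RTCDataChannelInit.
interface ChannelInitSketch {
  ordered: boolean;
  maxRetransmits?: number;
}

// Reliable: ordered, unlimited retransmissions (the defaults).
// Unreliable: unordered and never retransmitted (assumed values).
function channelInit(reliable: boolean): ChannelInitSketch {
  return reliable ? { ordered: true } : { ordered: false, maxRetransmits: 0 };
}

// Channel labels as seen in Meet, mapped to the assumed options.
const meetChannelSketch: Record<string, ChannelInitSketch> = {
  dataSendChannel: channelInit(true),
  collections: channelInit(true),
  audioprocessor: channelInit(false),
  captions: channelInit(false),
};

// In a browser each entry would be created with:
//   pc.createDataChannel(label, meetChannelSketch[label]);
```

An unreliable channel fits data such as audio levels, where a late message is worthless and retransmitting it would only add delay.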

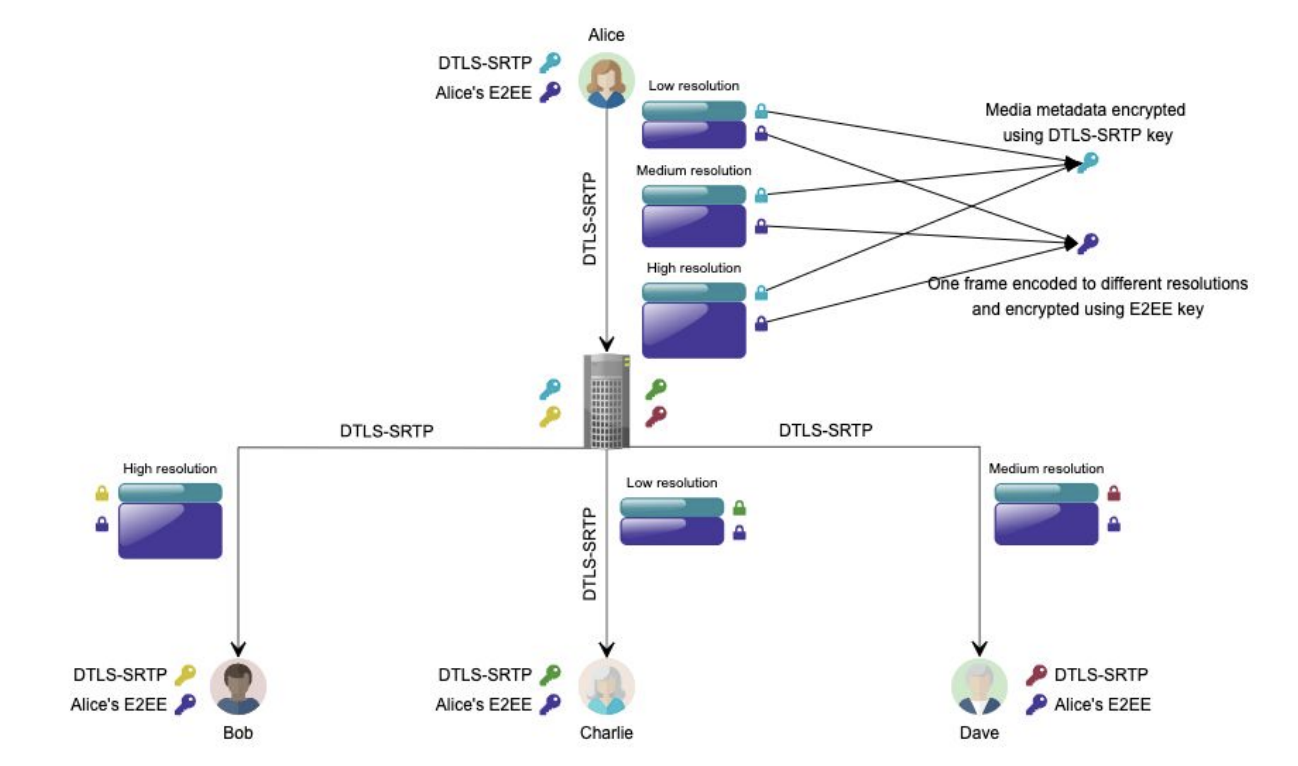

Security

Both Google Meet and Duo support the standard WebRTC encryption based on DTLS for key negotiation and DataChannel encryption, and SRTP for media encryption.

One small difference is the SRTP crypto suite used for the encryption. While Google Meet uses the more modern and efficient AEAD_AES_256_GCM cipher (added in mid-2020 in Chrome and late 2021 in Safari), Google Duo is still using the traditional AES_CM_128_HMAC_SHA1_80 cipher.

The biggest difference in terms of security is the support for End-to-End encryption in Google Duo. Frame encryption explained here was initially implemented for mobile and then included in the web application making use of the insertable streams API from WebRTC.

This has been previously explained in webrtcHacks and it is also done in other applications like Jitsi.
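To give an idea of where such frame encryption hooks in, here is a toy per-frame transform. It is emphatically not Duo's scheme: XOR with a repeating key is a placeholder for a real cipher, and `xorFramePayload` and the `clearBytes` parameter are hypothetical. Leaving a few leading bytes untouched mirrors what some demos do so that the codec header stays readable.

```typescript
// Toy transform for one encoded frame: bytes before `clearBytes` are copied
// unchanged, the rest is XORed with a repeating key. XOR is NOT encryption;
// it only marks the place where a real cipher would run.
function xorFramePayload(
  frame: Uint8Array,
  key: Uint8Array,
  clearBytes: number
): Uint8Array {
  if (key.length === 0) {
    throw new Error("key must not be empty");
  }
  const out = new Uint8Array(frame.length);
  for (let i = 0; i < frame.length; i++) {
    out[i] =
      i < clearBytes ? frame[i] : frame[i] ^ key[(i - clearBytes) % key.length];
  }
  return out;
}

// In Chrome, with encodedInsertableStreams set to true, a function like this
// would run inside a TransformStream placed between the readable and writable
// returned by sender.createEncodedStreams(), operating on each frame's data.
```

Because XOR is its own inverse, the receiving side applies the same function with the same key to recover the original frame.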

RTP header extensions

Even if both applications use standard RTP & RTCP for audio and video transmissions – like most of the WebRTC video conferencing applications – there are some differences in terms of the RTP header extensions used to convey additional information. They also have differences in the RTCP messages supported for media control.

There are many header extensions that are common for both Meet and Duo. That includes the abs-send-time, video-orientation, audio-levels, transport-wide-cc and sdes header extensions.

However Google Meet includes many more extensions related to timing and buffering:

a=extmap:5 http://www.webrtc.org/experiments/rtp-hdrext/playout-delay

a=extmap:6 http://www.webrtc.org/experiments/rtp-hdrext/video-content-type

a=extmap:7 http://www.webrtc.org/experiments/rtp-hdrext/video-timing

a=extmap:9 http://www.webrtc.org/experiments/rtp-hdrext/abs-capture-time

Note that the video-content-type extension is an in-band description of whether the media comes from the camera or from a screen sharing source, which allows specific optimizations on the receiver side.

And Meet also includes an extension related to frame marking that is specified by AV1 but can be used for other codecs as well:

a=extmap:15 https://aomediacodec.github.io/av1-rtp-spec/#dependency-descriptor-rtp-header-extension

In the case of Google Duo, this is handled in a different way, with a nonstandard extension (which even lacks documentation) called the generic-frame-descriptor instead of the AV1 dependency descriptor:

a=extmap:7 http://www.webrtc.org/experiments/rtp-hdrext/generic-frame-descriptor-00

In addition to that Google Meet also uses a nonstandard extension called “video layer allocation”:

a=extmap:12 http://www.webrtc.org/experiments/rtp-hdrext/video-layers-allocation00

This is (kinda) specified here. It is set in the remote description (with an id of a not-used extension from the offer). This is actually a very interesting extension for SFUs since it solves two problems:

- When a simulcast or SVC layer is no longer sent due to bandwidth restrictions, this is communicated to the server. This allows the SFU to act faster and switch clients to a lower layer.

- It informs the server about the encoder target bitrates for each layer. This is more accurate than the server measuring this from the incoming packets. It also improves layer selection.
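How an SFU might use this information can be sketched as follows. The `LayerAllocation` shape and `selectLayer` are hypothetical and much simpler than the actual header extension format; the point is only that the decision can rely on the announced target bitrates and active flags instead of on measurements.

```typescript
// Simplified view of what the sender announces for each layer.
interface LayerAllocation {
  layerId: number;
  active: boolean; // false once the sender stops producing this layer
  targetBitrateBps: number;
}

// Pick the highest-bitrate active layer that fits the receiver's bandwidth.
// Returns null if no active layer fits.
function selectLayer(
  layers: LayerAllocation[],
  availableBps: number
): LayerAllocation | null {
  let best: LayerAllocation | null = null;
  for (const layer of layers) {
    if (!layer.active || layer.targetBitrateBps > availableBps) {
      continue;
    }
    if (best === null || layer.targetBitrateBps > best.targetBitrateBps) {
      best = layer;
    }
  }
  return best;
}
```

When the sender drops its top layer, the next allocation marks it inactive and the same call immediately falls back to the next layer down, without waiting for the missing packets to be noticed.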

RTCP

In terms of RTCP there are no big differences: both applications use transport-wide congestion control messages for bandwidth estimation, NACKs with RTX for video retransmissions and PLIs for keyframe requests. The only difference found so far is the use of extended reports in Google Meet in the form of RRTR messages, which make it possible to estimate the round-trip time from the receiver’s point of view instead of the sender’s. See this WebRTC samples pull request for some more information on how this enables calculation of time offsets.
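The receiver-side round-trip time calculation comes from RFC 3611: the receiver sends an RRTR block carrying its NTP timestamp, and the sender answers with a DLRR block that echoes the middle 32 bits of that timestamp (LRR) together with the delay since it was received (DLRR, in units of 1/65536 seconds). A minimal sketch of the arithmetic, with a hypothetical function name and not taken from Meet's code:

```typescript
// All inputs are 32-bit values in units of 1/65536 seconds:
//   nowNtpMid32      - middle 32 bits of the NTP time when the DLRR block arrives
//   lastRr           - LRR field: middle 32 bits of the echoed RRTR timestamp
//   delaySinceLastRr - DLRR field: time the sender held the report before replying
function rttFromDlrrMs(
  nowNtpMid32: number,
  lastRr: number,
  delaySinceLastRr: number
): number {
  // ">>> 0" keeps the subtraction correct across 32-bit wraparound.
  const rttUnits = (nowNtpMid32 - lastRr - delaySinceLastRr) >>> 0;
  return (rttUnits * 1000) / 65536;
}
```

For example, `rttFromDlrrMs(0x00020000, 0x00010000, 0x00008000)` evaluates to 500, since 0x8000 units of 1/65536 s is half a second.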

Conclusions

As an app, Duo started out as a newer, more focused successor to Meet and that’s reflected in its implementation. Parts, like the use of three virtual tracks, are somewhat unique to Google, and are implemented in a similar way in both apps. The use of ICE with the absence of TURN servers is exactly the same. However, other parts of it are completely different – in particular the use of the WebRTC APIs like addTransceiver with simulcast. Duo’s later market entry meant it was able to leverage stable standards with unified-plan and “spec-simulcast” from the beginning. Duo also introduced innovative features for WebRTC, like end-to-end encryption.

Google Meet was comparatively slow to others in adopting new WebRTC features that came to the specification – to the extent of seriously delaying them (like WebRTC-SVC for which there is no product need as the feature is already enabled through a hack). Despite its spec compliance challenges, Meet has seen a tremendous amount of incremental improvements since early 2020. Examples include the video layer allocation extension, using GCM cipher suites for SRTP, a transition from the legacy addStream API towards addTransceiver (via addTrack) and of course the big transition from plan-b to unified-plan. Meet actually looks ahead in terms of encoding with the VP9 SVC support and RTP based features (rrtr, red, timing hdrext..). Meet is also a more feature rich product, which helps to push its WebRTC implementation.

Fippo kicked off webrtcHacks’ blackbox reverse-engineering series back in 2014 with How does Hangouts use WebRTC? Hangouts has evolved into Google Meet, where it continues to be a great reference implementation to examine. We were first able to easily look at Duo’s webrtc-internals when it introduced multi-party calling and web support two years ago. Reflecting back after our analysis this week, it feels like Duo has been in maintenance mode while Meet continues to push features and performance optimizations needed to compete during the pandemic.

Continued maintenance of two distinct stacks for the same purpose is not a healthy thing to do for an engineering organization. Philipp bets that the Meet stack will be the one that survives but we’ll see how this develops over the course of the next year.

{"authors": ["Gustavo Garcia", "Philipp (fippo) Hancke"]}

How does using 3 audio tracks and rewriting SSRCs affect audio/video sync (lip-sync)?

Hi,

Great post, giving a real insight into how Google Meet works. I have been studying the webrtc-internals logs for Google Meet and noticed that all invites and re-invites are sent as one concatenated SDP in a single shot from the browser client. I have tried the same in our POC SFU video conferencing, but the packets get fragmented, which causes issues and instability on the client side. Is this approach correct, or is my understanding of concatenated SDP wrong?

Thanks

I hope that you can re-test google meet now that google duo has been replaced by meet. The developers said that they incorporated lots of the technology from duo into meet so there’s a decent chance that there have been improvements since you made this blog post.

It is not a full analysis, but Fippo just did a mini-analysis of Meet here: https://webrtchacks.com/the-hidden-av1-gift-in-google-meet/