It has been a few years since the WebRTC codec wars ended in a detente. H.264 has been around for more than 15 years so it is easy to gloss over the many intricacies that make it work.

Renowned hackathon star, live-coder, and |pipe| CTO Tim Panton was working on a drone project where he needed a light-weight H.264 stack for WebRTC, so he decided to build one. This is certainly not an exercise I would recommend for most, but Tim shows it can be an enlightening experience if not an easy one. In this post, Tim walks us through his step-by-step discovery as he just tries to get video to work. Check it out for an enjoyable alternative to reading through RFC specs for an intro on H.264!

{“editor”: “chad hart“}

I’ve been doing WebRTC since before it was a thing and VoIP for a few years before that, so I know my way around RTP and real time media. Or so I thought…

In all that time it just so happened that I’d never really looked at video technology. There was always someone else on hand who’d done it before, so I remained blissfully ignorant and spent my time learning about SCTP and the WebRTC data channel instead.

Then a side project came up, sending H.264 video from a drone over WebRTC. How hard could it be?

TLDR;

Voice over RTP != Video over RTP

Why H.264 and not VP8?

That’s what the drone in question generated. Transcoding to VP8 was way, way beyond the capabilities of my hobbyist hardware (a Beaglebone or Raspberry Pi). Since I was part of the crew that brokered the compromise to support both H.264 and VP8 in WebRTC I figured I should take advantage and use the H.264 decoders that are available in all good WebRTC endpoints.

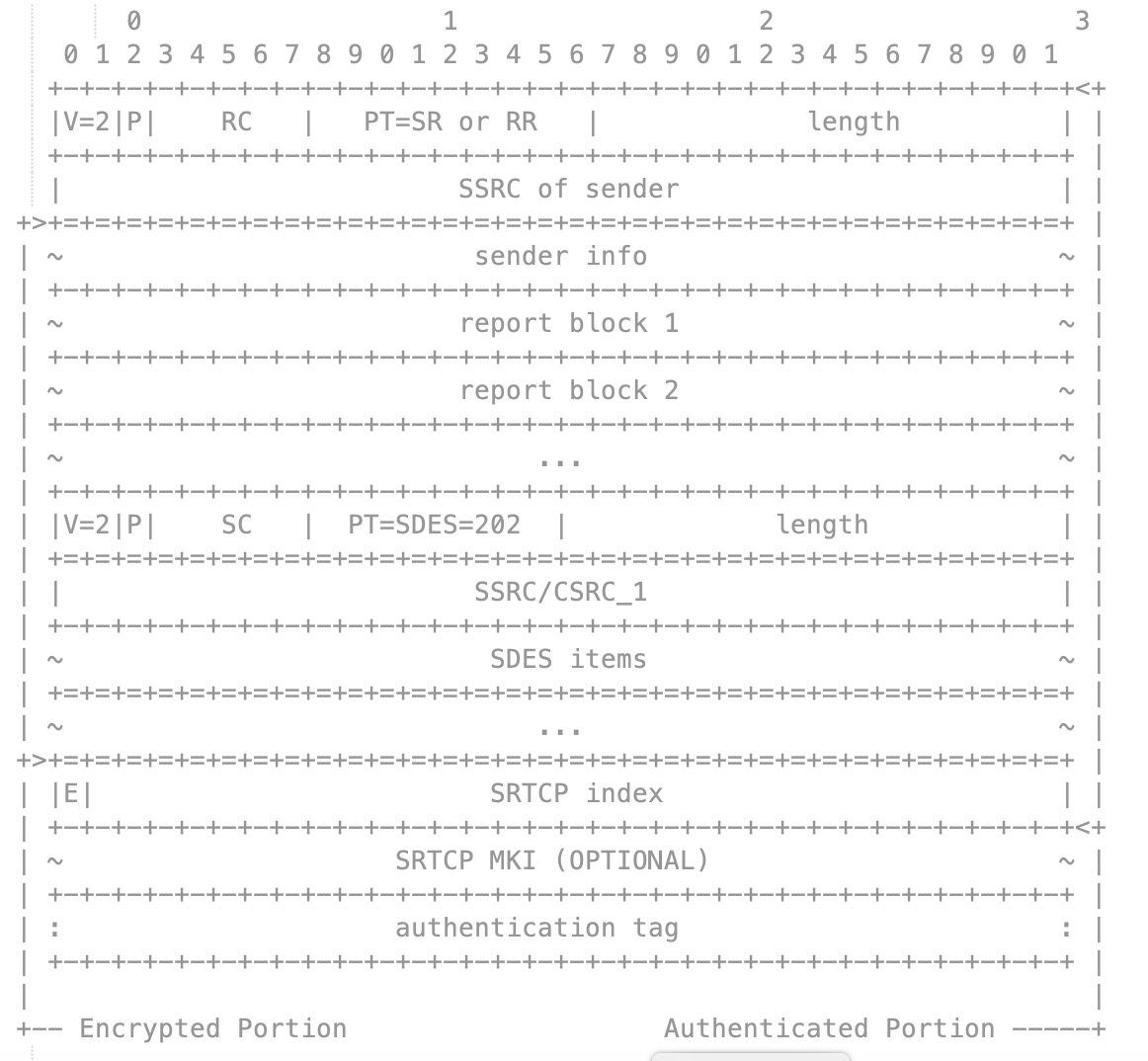

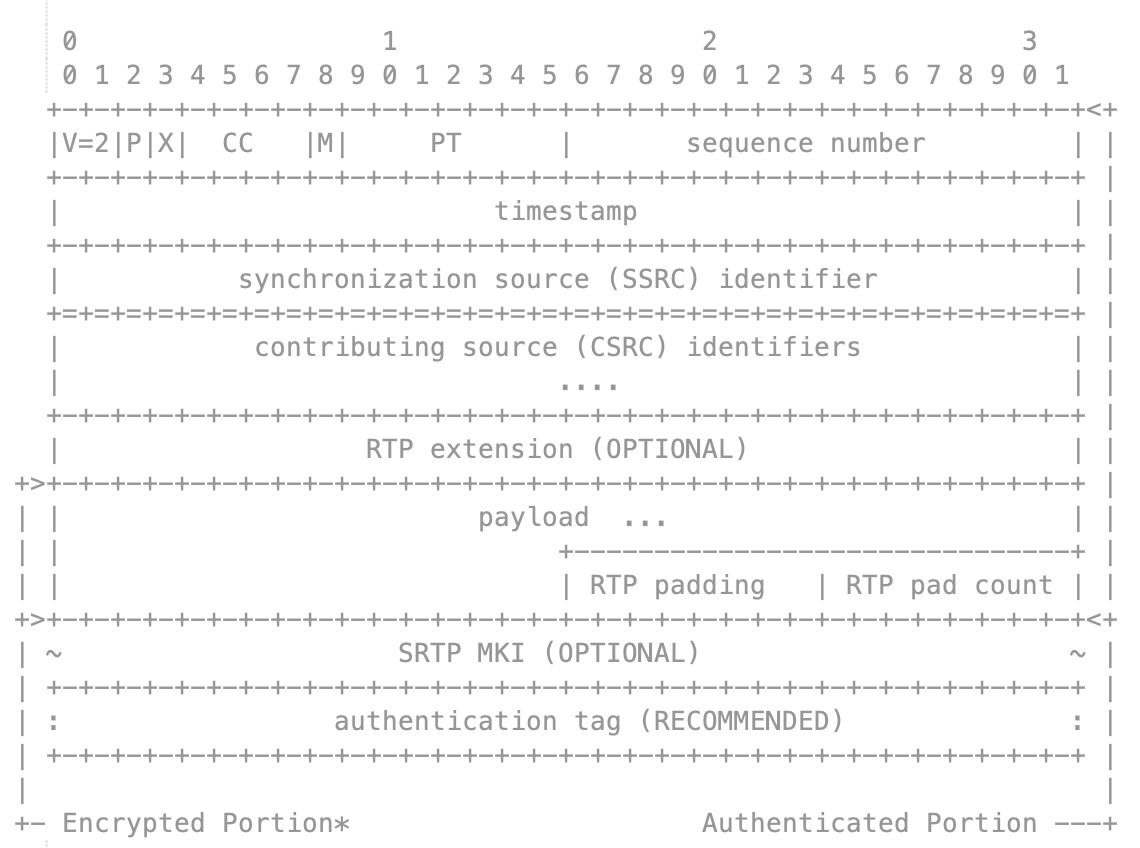

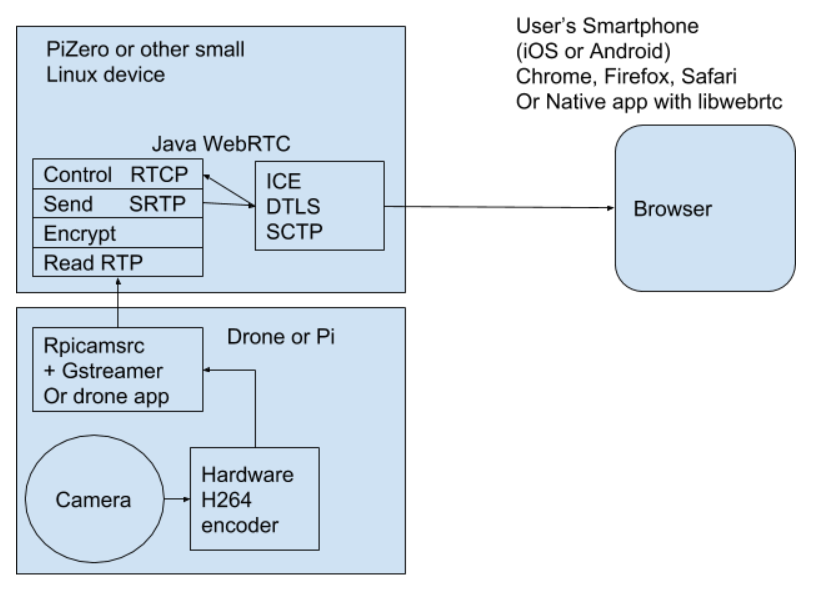

So I brushed the dust off the open source srtplight library (which I’d written as part of the Phono JavaScript project long ago) and plugged it into |pipe|’s WebRTC stack. I wrote a class that would read RTP packets, encrypt them using DTLS-SRTP then forward them over the path that ICE had selected. I knew that the ICE/DTLS-SRTP bit worked because I’d already used that to provide audio from our WebRTC doorbell PoC.

Why use Java?

The fact that it takes 20GB to build the 1 million lines of libWebRTC put me off the C/C++ route. This is more than I wanted to deal with for my little project.

How many lines of code are in Google’s WebRTC implementation (https://t.co/JZRtXX5Mcz)? As of the end of 2018, it consists of 1.21M lines of code (up from 1.08M in 2017); for a comparison, this is 3x as much code as the Space Shuttle software.

— Justin Uberti (@juberti) January 10, 2019

Besides, I had all the bits I needed in Java already. In fact Java is a good choice for this sort of thing – arguably this is exactly what Oak – Java’s precursor – was invented for.

The honed JVM makes it portable and performant on many architectures. On ARM the encryption used in DTLS-SRTP (AES) is mapped directly to a hardware accelerated instruction, meaning that even the smallest Raspberry Pi can encrypt multiple video streams.

The multi-threading is ideal for this sort of networking task.

Last but not least the JVM’s memory management and the compiler’s strong type checking mean that my code is relatively immune to buffer overruns and other memory attacks from inbound packets. (Oh and a shout out to Maven, which makes all other build systems look absurdly bad).

Getting video to work

To get started, I did the usual faffing to get the SDP offer/answer working. It took a while, but in the end Chrome accepted my SDP and showed packets were arriving.

No video though.

I dug some more and noticed that the packets were a bit smaller than I expected.

More digging showed that I’d kept the buffers on the RTP classes small because those classes were originally designed to be used in a constrained environment (J2ME). Big enough for a 20 ms G.711 packet, but much smaller than the Maximum Transmission Unit (MTU) – so our H.264 packets were getting truncated. I fixed that in srtplight here.
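To put rough numbers on that mismatch, here is a small back-of-the-envelope sketch. It is not taken from srtplight: the class name is made up for illustration, and the 1500-byte Ethernet MTU and IPv4 header size are assumptions about a typical network.

```java
final class RtpBufferSizes {
    static final int RTP_HEADER_BYTES = 12;       // fixed RTP header, no CSRCs or extensions
    static final int G711_SAMPLE_RATE_HZ = 8000;  // G.711 produces one byte per sample
    static final int ETHERNET_MTU_BYTES = 1500;   // assumed typical MTU
    static final int IPV4_HEADER_BYTES = 20;      // assumed: IPv4 without options
    static final int UDP_HEADER_BYTES = 8;

    /** Size of one unencrypted G.711 RTP packet carrying packetMillis of audio. */
    static int g711PacketBytes(int packetMillis) {
        int payload = G711_SAMPLE_RATE_HZ * packetMillis / 1000;
        return RTP_HEADER_BYTES + payload;
    }

    /** Largest UDP payload that fits in one unfragmented IP packet. */
    static int maxUdpPayloadBytes() {
        return ETHERNET_MTU_BYTES - IPV4_HEADER_BYTES - UDP_HEADER_BYTES;
    }

    public static void main(String[] args) {
        System.out.println("20 ms G.711 RTP packet: " + g711PacketBytes(20) + " bytes");
        System.out.println("Largest UDP payload:    " + maxUdpPayloadBytes() + " bytes");
    }
}
```

Under those assumptions a 20 ms G.711 packet needs 172 bytes, while a video packetizer will happily fill most of the 1472 bytes available, so a buffer sized for audio cuts off the bulk of each video packet.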

Still no video.

Looking at Chrome’s chrome://webrtc-internals page I was getting plenty of bytes, but not a single decoded frame. Argh.

I had an inkling that this might be because I wasn’t replying to the RTCP packets that Chrome was sending (or indeed originating any RTCP packets of my own).

RTCP is used to control RTP media channels and report statistics. Chrome also uses RTCP extensions to estimate the available bandwidth. Since this was one-way video to the browser I had assumed that RTCP wasn’t needed. Now I was starting to wonder…

So I wrote some minimal RTCP classes to add to the SRTP implementation.
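For a feel of what "minimal RTCP" can mean, the sketch below builds a bare sender report (packet type 200) with no reception report blocks. It is an illustration of the wire format only, not the classes that went into the SRTP implementation; the class and parameter names are hypothetical, and a real stack would also protect the result with SRTCP before sending.

```java
import java.nio.ByteBuffer;

final class SenderReportSketch {
    // Seconds between the NTP epoch (1900) and the Unix epoch (1970).
    private static final long NTP_UNIX_OFFSET_SECONDS = 2_208_988_800L;

    /** Builds a 28-byte RTCP sender report with zero reception report blocks. */
    static byte[] build(int ssrc, long unixMillis, int rtpTimestamp,
                        int packetCount, int octetCount) {
        long ntpSeconds = unixMillis / 1000 + NTP_UNIX_OFFSET_SECONDS;
        long ntpFraction = ((unixMillis % 1000) << 32) / 1000; // fraction of a second in 1/2^32 units

        ByteBuffer buf = ByteBuffer.allocate(28);  // network byte order by default
        buf.put((byte) 0x80);          // V=2, P=0, report count=0
        buf.put((byte) 200);           // packet type: sender report
        buf.putShort((short) 6);       // length in 32-bit words, minus one
        buf.putInt(ssrc);              // SSRC of the sender
        buf.putInt((int) ntpSeconds);  // NTP timestamp, most significant word
        buf.putInt((int) ntpFraction); // NTP timestamp, least significant word
        buf.putInt(rtpTimestamp);      // RTP timestamp matching the NTP time above
        buf.putInt(packetCount);       // packets sent so far
        buf.putInt(octetCount);        // payload octets sent so far
        return buf.array();
    }
}
```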

Still no video.

Reverse Engineering H.264 via Wireshark

Mark bits

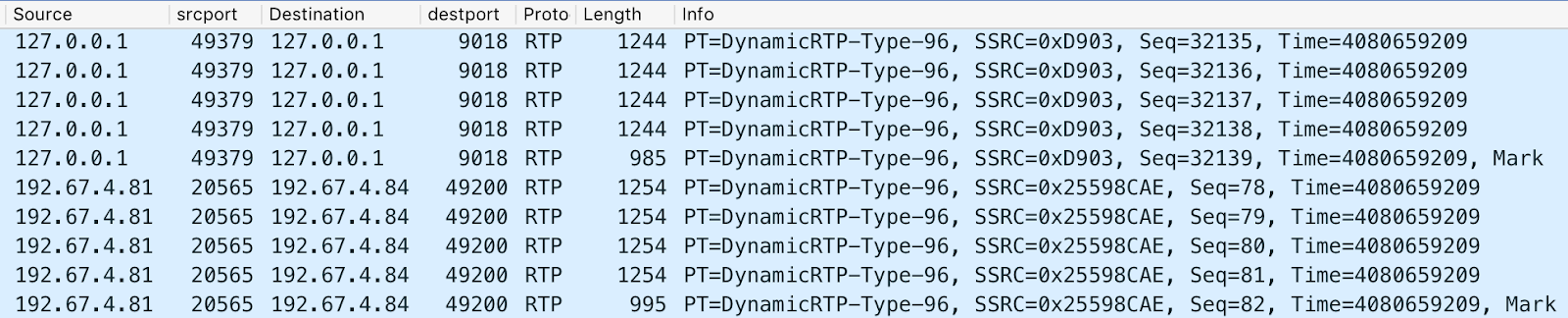

I fired up Wireshark and captured the in and outbound packets to try and see what was wrong. After many hours of staring at the screen I finally noticed…. the mark bit was set on some of the inbound packets, but not on any of the outbound ones.

At this point I should have gone off and read the RFC on H.264 packetization (especially section 5.1). It would have saved me a lot of time. I didn’t. I did distantly remember the mark bit was used by DTMF to signal that this was the end of a group of (redundant) DTMF packets.

I adjusted the code to ensure that the mark bit was faithfully carried across from in to out.
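For readers who have not stared at the header in Wireshark, this is roughly where the mark bit lives. The sketch parses the 12-byte fixed RTP header; the class name is hypothetical and it ignores CSRC lists and header extensions, so treat it as a reading aid rather than the srtplight parser.

```java
import java.nio.ByteBuffer;

final class RtpHeaderView {
    final boolean marker;      // the "mark bit": top bit of the second byte
    final int payloadType;     // 7 bits
    final int sequenceNumber;  // 16 bits, unsigned
    final long timestamp;      // 32 bits, unsigned
    final long ssrc;           // 32 bits, unsigned

    private RtpHeaderView(boolean marker, int payloadType, int sequenceNumber,
                          long timestamp, long ssrc) {
        this.marker = marker;
        this.payloadType = payloadType;
        this.sequenceNumber = sequenceNumber;
        this.timestamp = timestamp;
        this.ssrc = ssrc;
    }

    /** Parses the fixed RTP header at the start of an unencrypted packet. */
    static RtpHeaderView parse(byte[] packet) {
        if (packet.length < 12) {
            throw new IllegalArgumentException("shorter than an RTP header");
        }
        ByteBuffer buf = ByteBuffer.wrap(packet);  // network byte order by default
        int b0 = buf.get(0) & 0xFF;
        if ((b0 >> 6) != 2) {
            throw new IllegalArgumentException("not RTP version 2");
        }
        int b1 = buf.get(1) & 0xFF;
        return new RtpHeaderView(
                (b1 & 0x80) != 0,
                b1 & 0x7F,
                buf.getShort(2) & 0xFFFF,
                buf.getInt(4) & 0xFFFFFFFFL,
                buf.getInt(8) & 0xFFFFFFFFL);
    }
}
```

A relay that rebuilds outbound headers from scratch has to copy `marker` across explicitly, otherwise it silently becomes 0 on every outbound packet.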

Yay. Video. Sometimes, for a frame or 2, then nothing.

Timestamps

Back to Wireshark. I compared the inbound and outbound packets again. I noticed that the timestamps of the inbound packets were grouped: 5 to 10 packets would have the same timestamp, the final one having the mark bit set. The outbound ones carried the current time of sending as their timestamp, i.e. it increased with every packet.

If I’d read the RFC I would have known….

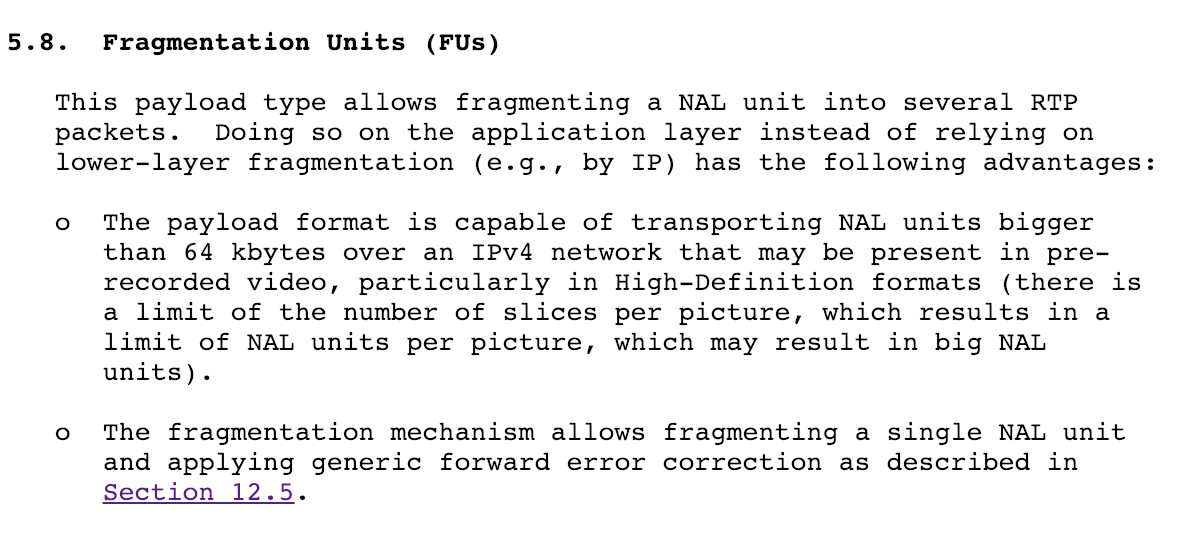

So here’s the thing: H.264 (or any video codec) creates frames that are much bigger than the MTU of a UDP network. So the RTP packetizer splits the frame up into packets and gives all the packets associated with a frame the same time stamp, but incrementing sequence numbers, tagging the last one with the mark bit.
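The splitting described above can be sketched as follows, using the FU-A fragmentation format from the H.264 RTP payload RFC (RFC 6184). This is an illustration of the sender side, which in this project lived in the drone rather than in my code; the class name, constructor parameters and payload budget are assumptions.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

final class FuAPacketizer {
    private static final int FU_A_TYPE = 28;  // FU-A NAL unit type in RFC 6184

    private final int payloadType;
    private final int ssrc;
    private final int maxPayloadBytes;        // RTP payload budget per packet (assumed)
    private int sequenceNumber;

    FuAPacketizer(int payloadType, int ssrc, int firstSequenceNumber, int maxPayloadBytes) {
        if (maxPayloadBytes < 3) throw new IllegalArgumentException("payload budget too small");
        this.payloadType = payloadType;
        this.ssrc = ssrc;
        this.sequenceNumber = firstSequenceNumber & 0xFFFF;
        this.maxPayloadBytes = maxPayloadBytes;
    }

    /** Splits one NAL unit (header byte first, no start code) into RTP packets. */
    List<byte[]> packetize(byte[] nal, int timestamp, boolean lastNalOfFrame) {
        List<byte[]> packets = new ArrayList<>();
        if (nal.length <= maxPayloadBytes) {
            // Small NAL units travel whole, as a "single NAL unit" packet.
            packets.add(rtpPacket(timestamp, lastNalOfFrame, nal, 0, nal.length));
            return packets;
        }
        int nalHeader = nal[0] & 0xFF;
        byte fuIndicator = (byte) ((nalHeader & 0xE0) | FU_A_TYPE); // keep the F and NRI bits
        int nalType = nalHeader & 0x1F;
        int chunk = maxPayloadBytes - 2;      // room left after FU indicator + FU header
        for (int offset = 1; offset < nal.length; offset += chunk) {
            int len = Math.min(chunk, nal.length - offset);
            boolean first = offset == 1;
            boolean last = offset + len == nal.length;
            byte fuHeader = (byte) ((first ? 0x80 : 0) | (last ? 0x40 : 0) | nalType);
            // Same timestamp on every fragment; mark bit only on the frame's final packet.
            packets.add(rtpPacket(timestamp, last && lastNalOfFrame, nal, offset, len,
                    fuIndicator, fuHeader));
        }
        return packets;
    }

    private byte[] rtpPacket(int timestamp, boolean marker, byte[] src, int offset, int len,
                             byte... prefix) {
        ByteBuffer buf = ByteBuffer.allocate(12 + prefix.length + len);
        buf.put((byte) 0x80);                                        // V=2, no padding/extension
        buf.put((byte) ((marker ? 0x80 : 0) | (payloadType & 0x7F)));
        buf.putShort((short) sequenceNumber);
        buf.putInt(timestamp);
        buf.putInt(ssrc);
        buf.put(prefix);
        buf.put(src, offset, len);
        sequenceNumber = (sequenceNumber + 1) & 0xFFFF;              // wraps at 16 bits
        return buf.array();
    }
}
```

The original NAL header byte is not sent in the fragments: its top three bits move into the FU indicator and its type into the FU header, so the receiver can rebuild it.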

FU MTU

You might be wondering why the encoder doesn’t just send packets that are bigger than the MTU and let the IP level handle the fragmentation. When I finally got around to reading the RFC I found the following section about Fragmentation Units (FUs):

I originally wrote the srtplight code to send audio from a local microphone. It generated its own timestamps on outgoing packets.

So I fixed that, to faithfully copy the timestamp from in to out…

More Video, better video, almost usable video, except when it wasn’t.

Key frames

I looked at the sequence numbers arriving at the receive end to see if any packets were being dropped. WebRTC-internals and Wireshark said no, but the video told a different story.

At this point I went off down a rathole of H.264 encoder modes. I found that sending key frames more often would revive the stalled video.

Unlike audio codecs, not all frames are of equal importance with video. Most frames only describe differences in the image – those can’t be rendered unless all the previous frames have been decoded. The exceptions are key frames – these contain a complete (if blurry) picture and function as the basis for subsequent frames to build upon. (This is an unbelievably gross oversimplification of what H.264 actually does, but from a packet’s eye view it will do). So getting a key frame allows a confused decoder to start over.

This didn’t explain why it was confused in the first place. Looking some more at the Wireshark capture I realised that some frames had missing packets on the inbound side, even though none did on the outbound. Which made no sense until I remembered that srtplight by default creates the sequence numbers (because that’s what we needed with the microphone.) So if an inbound packet from the drone was dropped or misordered, srtplight would give out the wrong sequence numbers from then on. This caused the re-assembled H.264 frame to contain nonsense with fragments missing or in the wrong order.

So I fixed that.
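Put together, the three fixes amount to treating the inbound header as the source of truth. A minimal sketch of that idea, assuming the relay only needs to swap in the payload type and SSRC negotiated with the browser (the class name and that assumption are mine, not taken from srtplight):

```java
import java.nio.ByteBuffer;

final class RtpRelayRewriter {
    private final int outboundPayloadType;  // payload type negotiated in the browser's SDP (assumed)
    private final int outboundSsrc;         // SSRC advertised to the browser (assumed)

    RtpRelayRewriter(int outboundPayloadType, int outboundSsrc) {
        this.outboundPayloadType = outboundPayloadType;
        this.outboundSsrc = outboundSsrc;
    }

    /**
     * Returns a copy of the inbound packet with only the payload type and SSRC
     * replaced. Mark bit, sequence number and timestamp pass through untouched,
     * so gaps and reordering on the inbound side stay visible to the receiver.
     */
    byte[] rewrite(byte[] inbound) {
        if (inbound.length < 12) {
            throw new IllegalArgumentException("shorter than an RTP header");
        }
        byte[] out = inbound.clone();
        out[1] = (byte) ((inbound[1] & 0x80) | (outboundPayloadType & 0x7F)); // keep the mark bit
        ByteBuffer.wrap(out).putInt(8, outboundSsrc);
        return out;
    }
}
```

The rewritten packet would then go through SRTP encryption as before.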

AND HEY! Video – useable video! Good enough to drive droids with.

Demented SFU

Time for some refinements.

It would be nice if more than one user could watch a given camera – like a local pilot and remote observer for example. Normally browsers just open a new camera instance and assume that the OS will do the right thing. The platform I was looking at by this point was the Raspberry Pi Zero. This has a hardware H.264 encoder that is only capable of creating a single encoded stream at a time.

So I wrote some code that took a single inbound packet and sent it over multiple WebRTC connections to multiple viewers.

This worked ok, but a new joiner wouldn’t see any video until a new keyframe had arrived (which might be several seconds). So I discussed this with some real WebRTC gurus – (you know who you are) who helped me understand that by this point I was writing something that looked like a demented SFU. They said a real SFU will stash the most recent keyframe and then play it out to a new joiner so that they get some video immediately.

For example, here’s what Meetecho/Janus does:

```c
/* H.264 depay */
int jump = 0;
uint8_t fragment = *buffer & 0x1F;
uint8_t nal = *(buffer+1) & 0x1F;
uint8_t start_bit = *(buffer+1) & 0x80;
if(fragment == 28 || fragment == 29)
	JANUS_LOG(LOG_HUGE, "Fragment=%d, NAL=%d, Start=%d (len=%d, frameLen=%d)\n", fragment, nal, start_bit, len, frameLen);
else
	JANUS_LOG(LOG_HUGE, "Fragment=%d (len=%d, frameLen=%d)\n", fragment, len, frameLen);
if(fragment == 5 ||
		((fragment == 28 || fragment == 29) && nal == 5 && start_bit == 128)) {
	JANUS_LOG(LOG_VERB, "(seq=%"SCNu16", ts=%"SCNu64") Key frame\n", tmp->seq, tmp->ts);
	keyFrame = 1;
	/* Is this the first keyframe we find? */
	if(!keyframe_found) {
		keyframe_found = TRUE;
		JANUS_LOG(LOG_INFO, "First keyframe: %"SCNu64"\n", tmp->ts-list->ts);
	}
}
```

I implemented this and it works pretty well.
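A Java rendering of the same idea might look like the sketch below. It applies the Janus-style check to each packet and keeps the packets of the most recent keyframe, using the mark bit as the end-of-frame signal. The names are hypothetical and it makes simplifying assumptions: packets are unencrypted, arrive in order, carry no CSRC list or header extension, and parameter sets sent in separate frames or aggregation packets are not handled.

```java
import java.util.ArrayList;
import java.util.List;

final class KeyframeCache {
    private static final int RTP_HEADER_BYTES = 12;  // assumes no CSRCs or header extension

    private List<byte[]> current = new ArrayList<>();
    private boolean currentIsKeyframe;
    private List<byte[]> lastKeyframe = new ArrayList<>();

    /** True if this packet is an IDR slice, or the first FU fragment of one. */
    static boolean startsKeyframe(byte[] rtp) {
        if (rtp.length < RTP_HEADER_BYTES + 2) return false;
        int type = rtp[RTP_HEADER_BYTES] & 0x1F;
        if (type == 5) return true;                   // single NAL unit packet, IDR slice
        if (type == 28 || type == 29) {               // FU-A or FU-B fragment
            int fuHeader = rtp[RTP_HEADER_BYTES + 1] & 0xFF;
            return (fuHeader & 0x1F) == 5 && (fuHeader & 0x80) != 0;
        }
        return false;
    }

    /** Feed every inbound video packet through here, in arrival order. */
    void onPacket(byte[] rtp) {
        if (rtp.length < RTP_HEADER_BYTES) return;    // not a usable RTP packet
        if (startsKeyframe(rtp)) currentIsKeyframe = true;
        current.add(rtp.clone());
        boolean marker = (rtp[1] & 0x80) != 0;        // mark bit closes the frame
        if (marker) {
            if (currentIsKeyframe) lastKeyframe = current;
            current = new ArrayList<>();
            currentIsKeyframe = false;
        }
    }

    /** Packets of the most recent complete keyframe, empty if none seen yet. */
    List<byte[]> latestKeyframe() {
        return lastKeyframe;
    }
}
```

When a new viewer joins, the packets from `latestKeyframe()` can be played out to that viewer before the live packets that follow.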

Almost there…

The final refinement was to respond to some RTCP messages that Chrome sends when it thinks it has lost or corrupted a keyframe. I use this to trigger sending an old (cached) keyframe.
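The messages in question are payload-specific feedback packets: Picture Loss Indication (PLI) and Full Intra Request (FIR), both RTCP packet type 206. A hedged sketch of spotting them in an already-decrypted compound RTCP packet follows; the class name is hypothetical and it does not check which media SSRC the request refers to.

```java
final class KeyframeRequestDetector {
    private static final int PT_PAYLOAD_SPECIFIC_FB = 206;  // PSFB, RFC 4585
    private static final int FMT_PLI = 1;                   // Picture Loss Indication
    private static final int FMT_FIR = 4;                   // Full Intra Request, RFC 5104

    /** Walks a compound RTCP packet and reports whether it contains a PLI or FIR. */
    static boolean requestsKeyframe(byte[] rtcp) {
        int offset = 0;
        while (offset + 4 <= rtcp.length) {
            int version = (rtcp[offset] & 0xC0) >> 6;
            if (version != 2) return false;                  // not RTCP, stop parsing
            int fmt = rtcp[offset] & 0x1F;                   // feedback message type
            int packetType = rtcp[offset + 1] & 0xFF;
            int lengthWords = ((rtcp[offset + 2] & 0xFF) << 8) | (rtcp[offset + 3] & 0xFF);
            if (packetType == PT_PAYLOAD_SPECIFIC_FB && (fmt == FMT_PLI || fmt == FMT_FIR)) {
                return true;
            }
            offset += (lengthWords + 1) * 4;                 // length excludes the first word
        }
        return false;
    }
}
```

A true result is the cue to replay the cached keyframe to that viewer.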

Given the limitations of the hardware encoder on the Raspberry Pi this is about the best I can do, although I still need to understand what some of the weirder optional RTCP extensions do, for situations where I can ask the encoder to do things like regenerate frames.

A portable, lightweight H.264 WebRTC stack

So now we have a portable, lightweight WebRTC stack that can send H.264 video (and audio) from the camera of a piZero to multiple WebRTC browser recipients. This is a thing I have wanted to be able to do for literally years. Large parts of this stack are open source (see links above) – but the authentication and orchestration parts are closed source. You can experiment with the fruits of this at https://github.com/pipe/webcam.

Lessons-learned

- Just because your SRTP stack has carried lots of audio, don’t assume it will work with video. Video is different.

- READ THE RELEVANT RFCs before you start!

- RTP is ill suited to Video – especially over a lossy medium. This is in contrast to audio, where with something like the Opus codec the loss of a single packet will be covered up by the codec’s Forward Error Correction. No glitch will be heard and subsequent packets won’t be impacted.

- Dropping a single H.264 video packet means that a whole frame (up to 10 packets) is unusable and will cause visible artifacts.

- Dropping a single packet from a keyframe means that the video will stall until the frame is re-sent or a new one arrives.

- Side projects can turn into the real thing.

{“author”,”Tim Panton”}

Regarding the mark bit, I understand that you forgot to keep the mark bit value when relaying a video packet, so it was always set to 0 in outbound. Is that correct?

Yep, in classic VoIP audio the mark bit only gets used in some variants of DTMF (inband) – so my audio-heritage phono stack didn’t pass it up to the layers above or allow setting it on non DTMF packets.

I enjoyed your post Tim, nice write up and deep details. People working in this level of detail may also run into way more than 10 packets per frame when they move to HD etc, those can be 60+ packets; our team has had a lot of fun in that arena.

Thanks, that’s good (if scary) info about HD

Great article Tim! Read like a thriller. 🙂

Utterly impressive! (I have worked with MJPEG and MPEG-encoding 20 years ago, and still have respect for how bitfiddly hard this stuff is)

A while ago I made a simple node app to stream low latency video to a browser, and was able to get below 100ms delay (depends on the bw, encoder settings etc). I took a slightly different approach. I used regular websocket as transfer and used the awesome Broadway decoder and player (uses webgl and optimized android h264 decoder compiled to wasm). The result was quite impressive. You can check the lib and example here https://github.com/matijagaspar/ws-avc-player. Downside is that it only supports baseline profile.

Matija – that’s quite impressive that you got down to 100ms.

Going the webRTC route is a little more complex, but you also get NAT traversal (i.e. both the device and the receiver can be behind different NATs) and the H264 decode uses hardware decoders if they are available, which saves on smartphone battery life.

Since I wrote this post, I’ve been working to add support for bandwidth estimation – Google has done a terrific job, within a few seconds the RTCP messages give you a surprisingly accurate estimate of the available P2P bandwidth so you can adjust the encoder params.

So overall webRTC is probably worth the extra effort in quite a few situations.

Yes! You are very right, and it’s a valid point. So I will try to play around with webrtc some more. My initial reasoning for going over ws was that: webrtc can be quite a pain and I honestly did not know that h264 was supported by webrtc, I assumed only vp8/9.

Damn good article (I know I’m late to the party).

Thanks for going into so much detail about your process.

I’m testing my homebrew (mostly C) SFU on a raspi4 too!

I too naively thought I could just make an SFU by multiplexing streams and being relatively naïve about the RTP content… *derp*

I’m seeing promising results with 2(hd) senders and 4 receivers:

https://weephone.domain17.net

https://github.com/justinb01981/tiny-webrtc-gw

But I’m hitting the limits of what I have access to in order to measure how well it scales… 😉

Thanks for your kind words.

Good luck with the SFU – it is hard work getting an SFU to be performant.

I did a talk this year about how to test SFUs – which might help:

How to test a webRTC service on your own….

Basically you can use cloud lambdas as fake users to test your app.

Thanks, I’ve heard others are using lambdas as well. I’ll dig into that article.

👨💻