Early last week, a friend at Google reached out to me asking:

Does Meet do anything weird with scalabilityMode?

Apparently, I am the go-to when it comes to Google Meet behaving weirdly :). Well, I do have a decade of history observing Meet’s implementation, so this makes some sense!

It turned out that this was actually about Microsoft Edge behaving in weird ways when it came to showing the scalabilityMode statistics property in Chromium’s webrtc-internals page (and for context, I work for Microsoft so I know a bit more about Edge and WebRTC). But at that point, it was too late since I had already spun up Chrome Canary and Edge to take a look at Google Meet. This turned out to be interesting enough for a blog post as I found an unexpected surprise – AV1!

Since this is my first post in a while, I will round out my Google Meet AV1 investigation with some related comments on VP9 SVC and talk about AV1 screen-sharing advantages too.

AV1 Clues in webrtc-internals

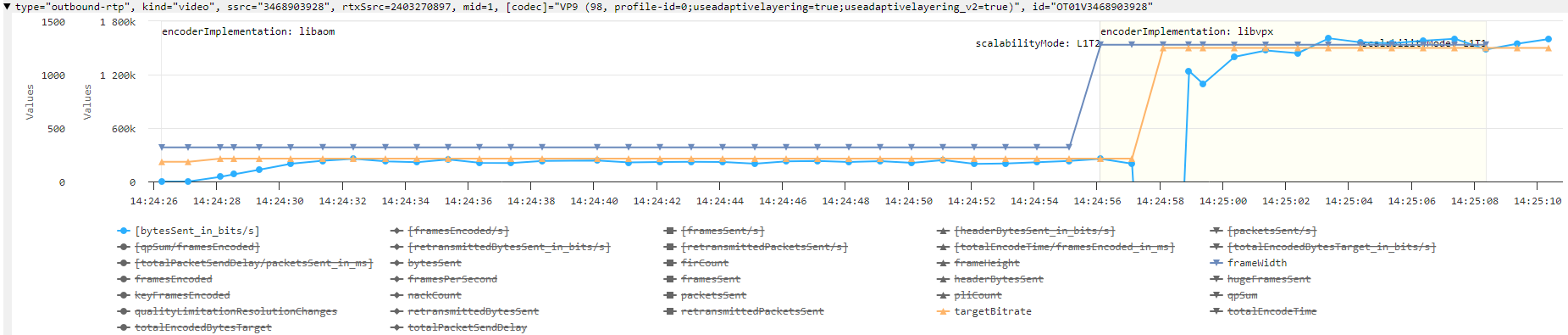

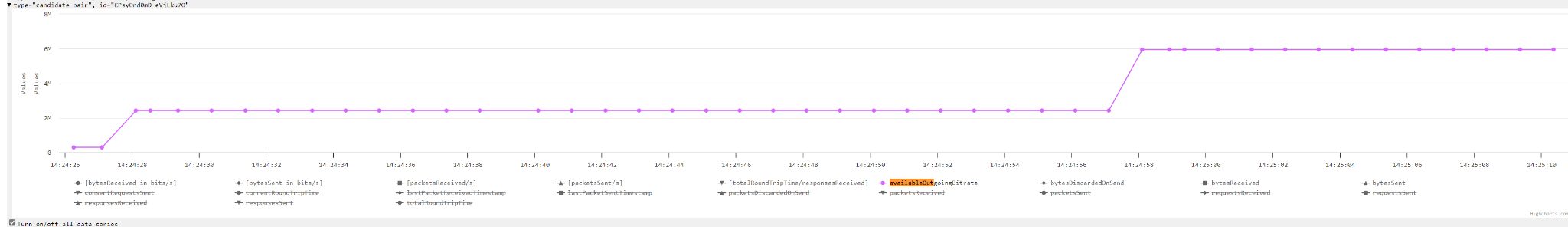

This is the first webrtc-internals dump I grabbed (you can download the raw dump in case you want to draw your own conclusions):

What caught my attention before actually reading anything (I’ll get to that below) were the many spikes in the graph. One potential reason for these spikes is that many of the getStats counters reset when the encoder gets reinitialized, for whatever reason, which creates the sawtooth-like pattern.

The bug causing this has been bothering me since I first reported it during my time at Tokbox back in December 2015. It remains unfixed eight years later:

getStats: switching the video codec resets the packetsSent/bytesSent counters on a ssrc report

https://bugs.chromium.org/p/webrtc/issues/detail?id=5361

While a lot of things changed during that time, this issue has stayed with us. This is not unexpected. Fixing things requires time and effort and those are very scarce resources. Or to put it bluntly: unless a problem is a problem for a developer who gets paid to fix it, don’t expect much progress.
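Until that is fixed, anything that derives a bitrate from these counters has to cope with a counter that jumps backwards. Here is a minimal sketch of how a graphing tool might do that, assuming samples taken from consecutive `outbound-rtp` reports. The `CounterSample` type and both function names are made up for illustration and are not how webrtc-internals itself does it:

```typescript
// One sample of an outbound-rtp report: `timestamp` is in milliseconds and
// `bytesSent` is the cumulative counter reported by getStats.
interface CounterSample {
  timestamp: number;
  bytesSent: number;
}

// Bitrate in bits per second between two samples. Returns null when the
// counter went backwards (e.g. after an encoder reset) or no time has passed,
// so that the caller can leave a gap instead of plotting a negative spike.
function bitrateBetween(prev: CounterSample, curr: CounterSample): number | null {
  const elapsedMs = curr.timestamp - prev.timestamp;
  const deltaBytes = curr.bytesSent - prev.bytesSent;
  if (elapsedMs <= 0 || deltaBytes < 0) {
    return null;
  }
  return (deltaBytes * 8 * 1000) / elapsedMs;
}

// Turns a list of samples into a bitrate series with one entry per interval.
function bitrateSeries(samples: CounterSample[]): Array<number | null> {
  const series: Array<number | null> = [];
  for (let i = 1; i < samples.length; i++) {
    series.push(bitrateBetween(samples[i - 1], samples[i]));
  }
  return series;
}
```

Dropping the interval is the simplest choice; it loses one data point per reset but never invents traffic that was not sent.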

Now, reading the text at the top, we see that the encoder implementation is libaom, which means this call was encoding video using AV1. It is no secret that Google is betting on AV1 and working on improving it in the context of WebRTC. But so far most of this usage has been in Google Duo (Meta has been deploying it in Messenger too), whereas this was Google Meet, the “Chrome WebRTC flagship application”, using it. So this deserves a closer look!

Digging Deeper on how Meet is using AV1

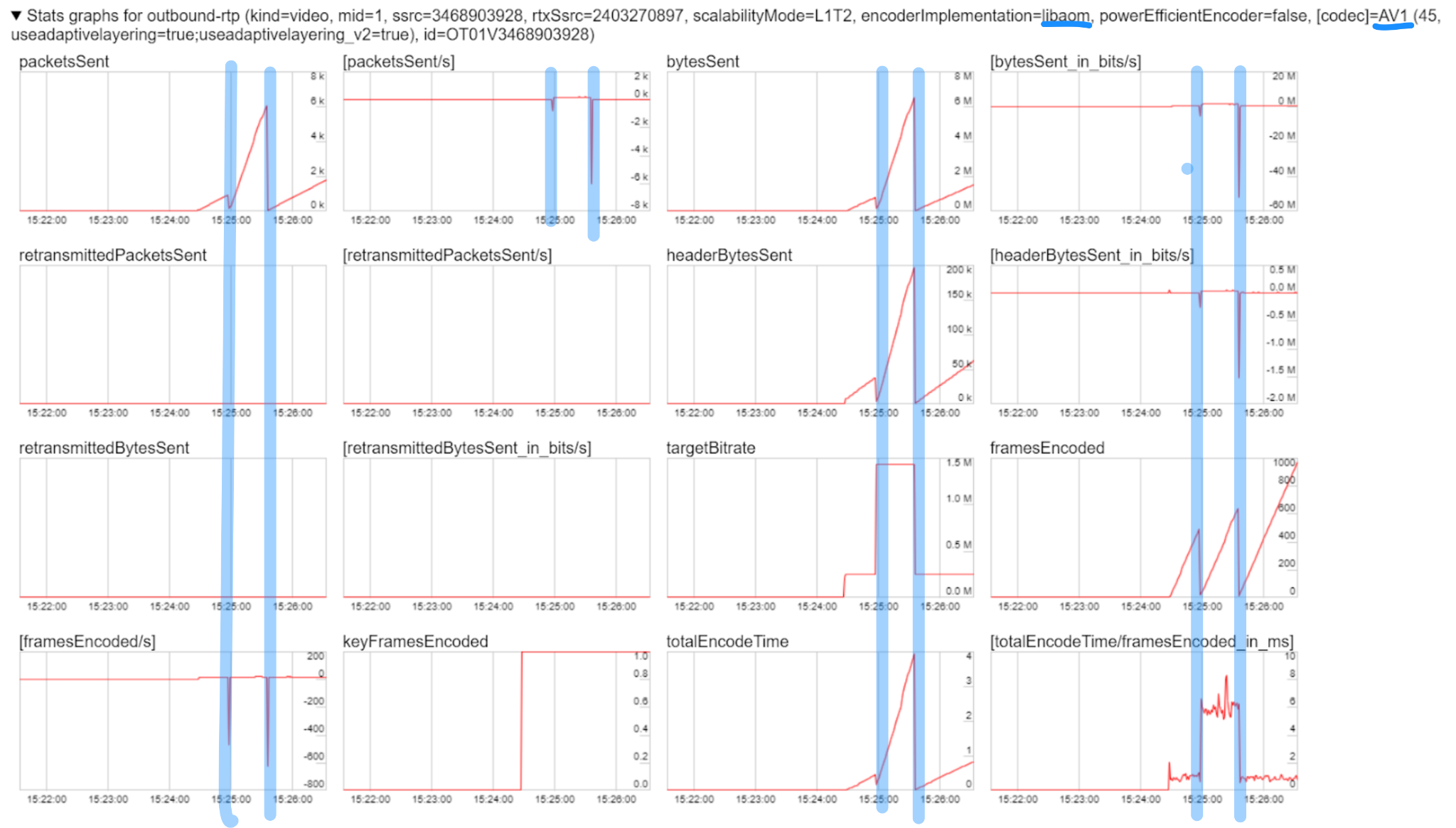

Reading raw JSON dumps from webrtc-internals is rather cumbersome, but fortunately, I already made webrtc-dump-importer for that. I made some updates to that tool to visualize the encoderImplementation, decoderImplementation and scalabilityMode properties from getStats.
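If you would rather look at the same properties in a live call, here is a rough sketch of pulling them out of a list of getStats entries. The property names (`encoderImplementation`, `scalabilityMode`, `frameWidth`, `frameHeight`) are the ones getStats exposes on `outbound-rtp` entries; the `StatsEntry` and `EncoderSummary` types and the function name are made up for this example and have nothing to do with how the dump-importer is written:

```typescript
// Subset of the fields found on getStats entries; only the ones used here.
interface StatsEntry {
  type: string;
  kind?: string;
  encoderImplementation?: string;
  scalabilityMode?: string;
  frameWidth?: number;
  frameHeight?: number;
}

// Hypothetical summary shape, one per outgoing video stream.
interface EncoderSummary {
  implementation: string;
  scalabilityMode: string;
  resolution: string;
}

// Keeps only the outgoing video entries and reduces them to the fields
// discussed in this post. Missing values are reported as "unknown".
function summarizeVideoEncoders(entries: StatsEntry[]): EncoderSummary[] {
  const summaries: EncoderSummary[] = [];
  for (const entry of entries) {
    if (entry.type !== "outbound-rtp" || entry.kind !== "video") {
      continue;
    }
    const hasSize = entry.frameWidth !== undefined && entry.frameHeight !== undefined;
    summaries.push({
      implementation: entry.encoderImplementation ?? "unknown",
      scalabilityMode: entry.scalabilityMode ?? "unknown",
      resolution: hasSize ? `${entry.frameWidth}x${entry.frameHeight}` : "unknown",
    });
  }
  return summaries;
}
```

In a browser, the input could come from something like `Array.from((await pc.getStats()).values())`, where `pc` is your RTCPeerConnection.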

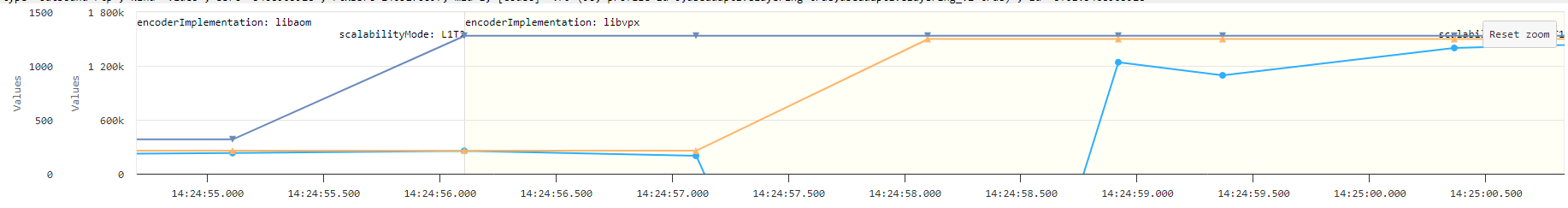

Overall, the call is split into three stages:

- the first, where I join without anyone else being there,

- the second, when the codec is switched back to VP9 and

- the phase that follows.

This is shown below:

So what exactly are we seeing in the screenshot? It seems I was lucky and tested at a time when Google was running an experiment with AV1, since I can no longer reproduce this behavior. I was testing in Chrome Canary, which has better support for seamless codec switching; that may have contributed to my being considered part of an experimental rollout.

The most important data series are the actual bitrate, the target bitrate, and the frame width & height. Let us look at the different stages in more detail:

AV1 was used during the “pre-call” period when I was alone waiting to open the second tab. During that time, 320×180 frames are sent to the server. The scalability mode is L1T2, i.e. AV1 with a single spatial layer and two temporal layers. Sending data to the server makes some sense to warm up the transport and get a good bandwidth estimate, but I don’t like the privacy implications of sending a user stream before actually joining a call. I called this out to folks on the Hangouts team a decade ago already, but to no avail. Most likely the incoming traffic caused by this does not generate any real monetary cost either.

You need to send some RTP packets to get a bandwidth estimate. It doesn’t really matter what codec you use. Using AV1 during that pre-call period serves the purpose of sending RTP data to the server, getting a proper bandwidth estimate of 2.5 Mbps even from the initial low-resolution AV1 data:

For Google Meet, a secondary purpose likely holds more significance: it puts the AV1-related code paths into action in a production environment. Additionally, it provides Google with insights into CPU usage and the extent of AV1 support. Remember, for a service like Google Meet, the standards for deploying something in a “production” environment are exceptionally high. This lets them try AV1 without actually showing any video from it.

As soon as the second user joins (even if that user also supports the AV1 codec), the encoder implementation changes to libvpx, the codec changes to VP9 and the scalability mode becomes L1T1.

The bitrate goes negative due to the bug first mentioned above and is not shown. Surprisingly, the target bitrate changes a second after the resolution, which might be a bug.
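The stages described above can also be found mechanically by watching for changes in the encoder implementation and scalability mode over time. A small sketch of that idea follows; the `EncoderSample` and `Stage` types and the function name are hypothetical, and this is not taken from the dump-importer:

```typescript
// One observation of the outgoing video stream at a point in time.
interface EncoderSample {
  timestamp: number;
  encoderImplementation: string;
  scalabilityMode: string;
}

// A stretch of the call during which implementation and mode stayed the same.
interface Stage {
  start: number;
  end: number;
  encoderImplementation: string;
  scalabilityMode: string;
}

// Walks the samples in order and starts a new stage whenever the encoder
// implementation or the scalability mode differs from the previous sample.
function splitIntoStages(samples: EncoderSample[]): Stage[] {
  const stages: Stage[] = [];
  for (const sample of samples) {
    const last = stages[stages.length - 1];
    if (
      last !== undefined &&
      last.encoderImplementation === sample.encoderImplementation &&
      last.scalabilityMode === sample.scalabilityMode
    ) {
      last.end = sample.timestamp;
    } else {
      stages.push({
        start: sample.timestamp,
        end: sample.timestamp,
        encoderImplementation: sample.encoderImplementation,
        scalabilityMode: sample.scalabilityMode,
      });
    }
  }
  return stages;
}
```

The samples are assumed to be sorted by timestamp, which is how they come out of periodic getStats polling.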

Using AV1 during the pre-call phase is a great testing strategy and just how I would roll out a new codec to production. AV1 landed in libWebRTC in April 2020. Since then, it has undergone quite some tuning, which was nicely summarized in an rtc@scale session in 2022.

Such a long time between landing the codec implementation and putting it into production is not unusual. We have seen a similar timeline for VP9 SVC, which shipped in Chrome in late 2014 or early 2015, and we still discussed SVC as something “not there yet without flags” in 2017. Google fooled everyone on this one by enabling VP9 k-SVC by abusing the codepath for VP8 simulcast SDP munging, which is still how Google Meet uses it in production.

VP9 k-SVC

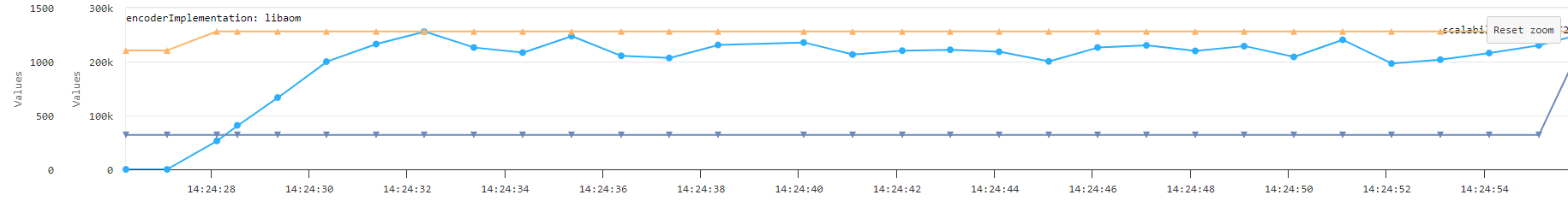

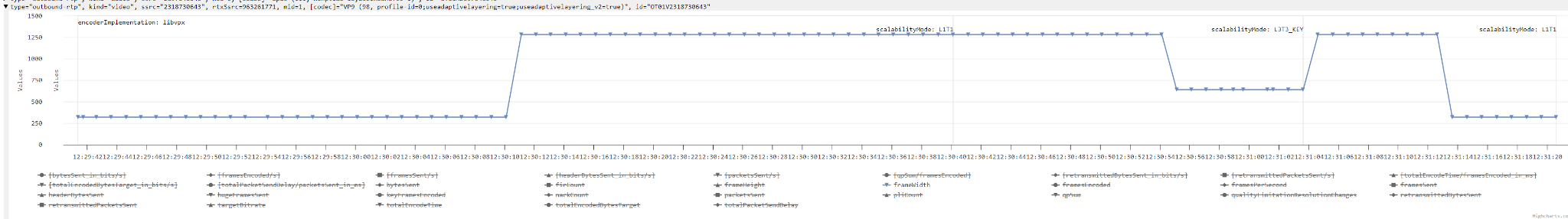

Video codecs in WebRTC are complicated. As further tests have shown, Google Meet still uses VP9 Scalable Video Coding (SVC, i.e. L3T3_KEY and similar modes) in production, which is surprising given the amount of effort that went into hardware-accelerated VP9 simulcast. As a byproduct of looking at the AV1 changes, the dump-importer now makes it clearer how Google Meet is turning SVC on and off on the fly depending on the number of participants in the call:

In another dump, we see L1T1 (i.e. no SVC) being used with VP9 during the pre-call warm-up followed by VP9 L1T1 with high resolution after the second participant joined and then switching to L3T3_KEY. The reason to optimize the behavior here is that VP9 SVC currently forces a fallback to software decoding since the hardware decoder is unable to decode it. While fixing that may be possible, the fixes have not yet landed or been verified.
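If the mode names look cryptic: the digit after `L` is the number of spatial layers, the digit after `T` is the number of temporal layers, and the `_KEY` suffix is the “k” in k-SVC, meaning that inter-layer prediction is only used on key frames. Here is a small sketch of a parser for the modes mentioned in this post. The function and type names are made up, and it deliberately ignores some of the other variants from the WebRTC-SVC specification (such as the `S` modes):

```typescript
// Hypothetical result type for the parser below.
interface ScalabilityInfo {
  spatialLayers: number;
  temporalLayers: number;
  // True for the "_KEY" variants (k-SVC).
  keyFrameOnlyPrediction: boolean;
  // False only for a single spatial and a single temporal layer (L1T1).
  hasScalability: boolean;
}

// Parses modes such as "L1T1", "L1T2" or "L3T3_KEY". An optional "h" after
// the temporal layer count (1.5:1 spatial ratio) is accepted but not reported.
// Returns null for anything it does not recognize.
function parseScalabilityMode(mode: string): ScalabilityInfo | null {
  const match = /^L(\d)T(\d)(h)?(_KEY)?$/.exec(mode);
  if (match === null) {
    return null;
  }
  const spatialLayers = Number(match[1]);
  const temporalLayers = Number(match[2]);
  return {
    spatialLayers,
    temporalLayers,
    keyFrameOnlyPrediction: match[4] !== undefined,
    hasScalability: spatialLayers > 1 || temporalLayers > 1,
  };
}
```

With this, `L1T1` comes out as “no scalability”, `L1T2` as temporal scalability only, and `L3T3_KEY` as three spatial and three temporal layers in k-SVC mode.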

AV1 screen content coding is better

However, webcam video was not where I expected AV1 to show up in Google Meet first. While Meet uses VP9 and SVC for webcam content, screen sharing (or rather tab sharing which is more privacy friendly) continues to use VP8 simulcast on a separate RTCPeerConnection. AV1 would have been a much more natural codec to use here since it has a feature called “screen content coding” which does some tricks to reduce the bitrate drastically. libWebRTC has been enabling this particular mode for MediaStreamTracks coming from screen sharing (or with a contentHint set to “detail”) for a while now.

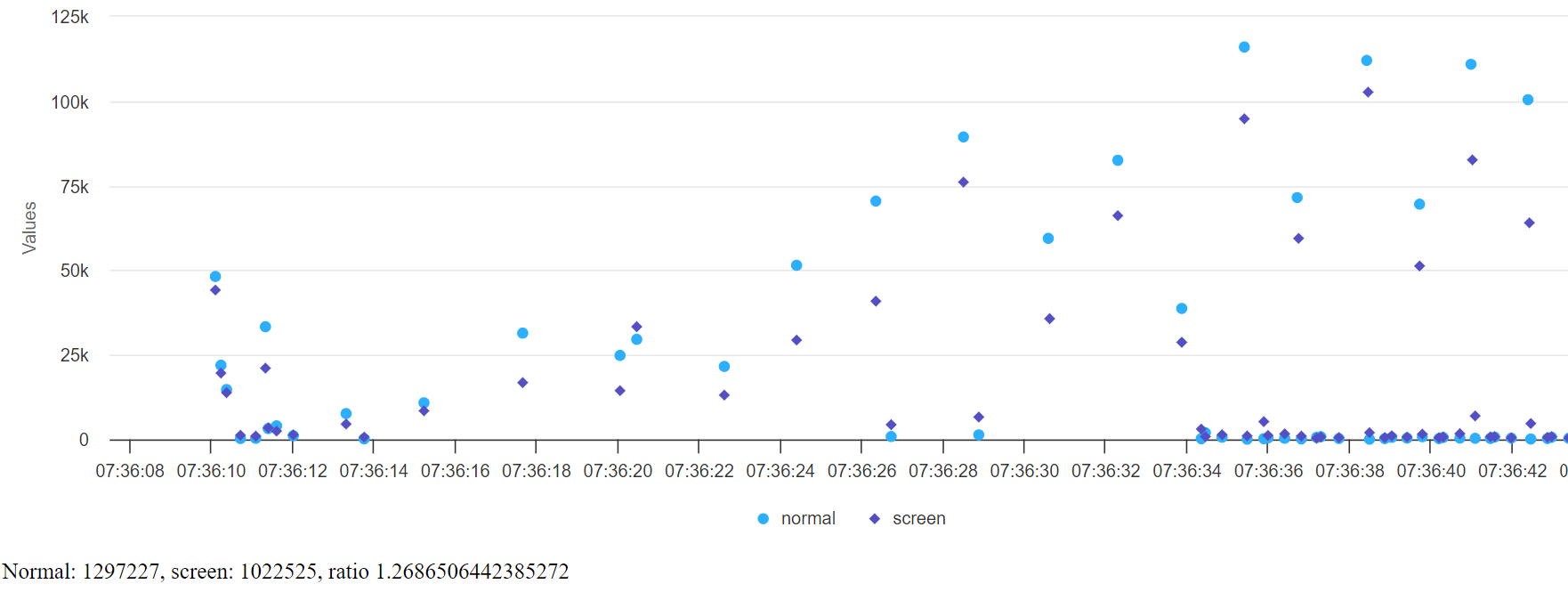

Earlier this year I tried porting that feature to WebCodecs to get some consistency in the different video encoding mechanisms supported by Chrome. That ended up being implemented in a different way than I expected. It is now controlled by the contentHint attribute. The results are quite impressive, and WebCodecs makes them easy to visualize. Note: comparing this in WebRTC is much harder since we don’t have easy access to frame sizes and the encoder bitrate is driven by bandwidth estimation.

The screenshot above is using Chrome Canary with a jsfiddle running two AV1 encoders in parallel with the same content – a shared tab with slides that are being paged through slowly. The result is a 25%+ reduction in the frame sizes and hence bitrate. This is even more important in a multiparty scenario since an SFU has to distribute the large frames that come as spikes on every change of the current slide to many participants. The reduction in frame size helps a lot here.
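For those who want to repeat the comparison, here is a rough sketch of its two non-browser parts: building a pair of otherwise identical encoder configurations and computing the reduction from the collected frame sizes. This is not the code from the jsfiddle. The codec string `av01.0.04M.08`, the use of `"detail"` as the hint value (mirroring the MediaStreamTrack hint mentioned earlier) and all names below are assumptions made for illustration:

```typescript
// Minimal stand-in for the parts of a WebCodecs VideoEncoderConfig used here.
interface EncoderConfigSketch {
  codec: string;
  width: number;
  height: number;
  contentHint?: string;
}

// Two configurations that differ only in the content hint, so that any
// difference in output size can be attributed to it.
// Assumption: "detail" is the hint value that turns on screen content coding.
function av1ConfigPair(
  width: number,
  height: number
): { plain: EncoderConfigSketch; hinted: EncoderConfigSketch } {
  const plain: EncoderConfigSketch = { codec: "av01.0.04M.08", width, height };
  const hinted: EncoderConfigSketch = { ...plain, contentHint: "detail" };
  return { plain, hinted };
}

// Sum of encoded frame sizes in bytes.
function totalBytes(frameSizes: number[]): number {
  return frameSizes.reduce((sum, size) => sum + size, 0);
}

// Percentage by which the hinted encoder undercuts the plain one.
// Returns 0 when the plain encoder produced no data at all.
function reductionPercent(plainSizes: number[], hintedSizes: number[]): number {
  const plainTotal = totalBytes(plainSizes);
  if (plainTotal === 0) {
    return 0;
  }
  return ((plainTotal - totalBytes(hintedSizes)) / plainTotal) * 100;
}
```

In the browser, each configuration would be passed to the `configure()` method of its own `VideoEncoder`, and the frame sizes would be collected from the `byteLength` of every `EncodedVideoChunk` handed to the output callback.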

Expect more AV1 to come in 2024

AV1 is becoming mature enough to be production-ready quite fast. For example, improved hardware codec support for AV1 just landed in Chrome M120. 2024 is going to be exciting.

{“author”: “Philipp Hancke“}
