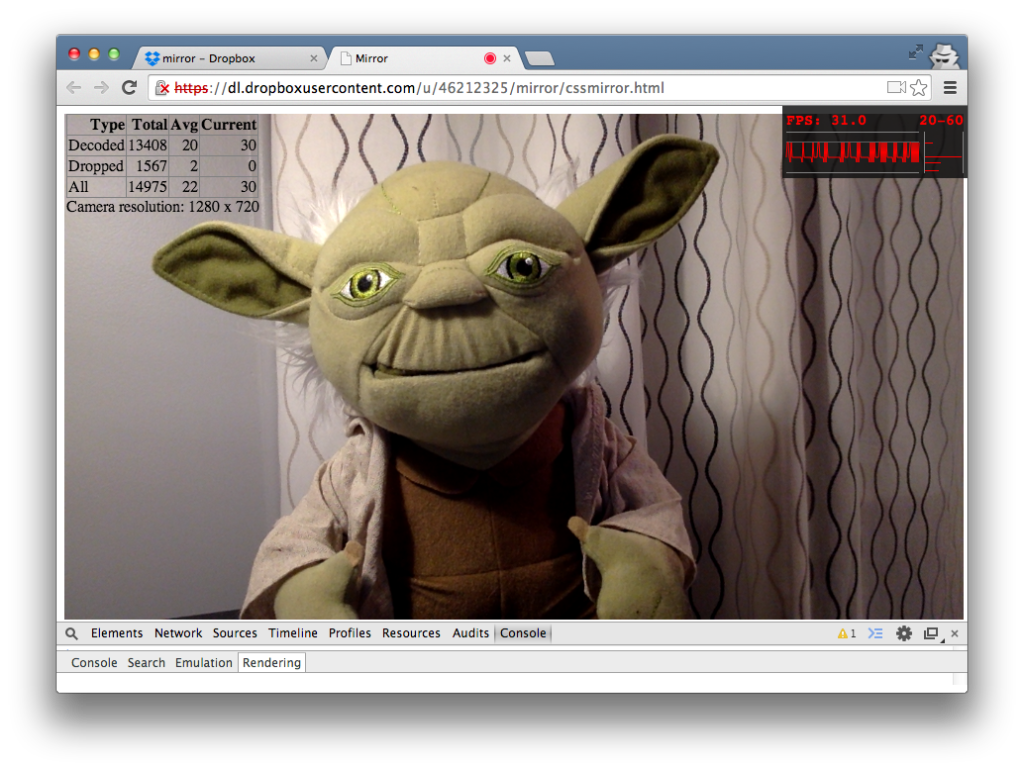

Let’s have some more fun with getUserMedia by creating a simple mirror application and determining its frame rate.

If your user is going to send their video, it is a general best practice to let them see what they are sending. To do this you simply route the local video stream captured by getUserMedia to a <video> element in the DOM. That is pretty easy, but the catch is that the video you see is not mirrored. When you are looking at yourself you expect to see a mirror image; when you don’t, it feels off and leads to a poor user experience. That is why everyone mirrors their local video, so let’s do that.

Before we dive in, let me also give my usual warning: this is mostly a case study in what not to do, for n00bs and webdev-posers like me. There are likely better ways to do things, and I document some of them. Along the way I did discover how to get the actual frame rate coming in from your camera, found some good built-in tools for viewing Chrome’s rendering rate, and made a few interesting observations on frame rates in general.

Make a Mirror with the HTML5 Canvas

Warning: this is not the best way to make a mirror in most cases. Skip to the CSS3 transforms section if you are just looking for a quick solution.

I did some searches and looked at some of my own code from the Baby Monitor, and this looked straightforward. I found a bunch of examples but no real explanations of how they worked. Since I didn’t know much about using the canvas, I thought I would use this as a learning experience.

Basics

Let’s start out with some basic stuff. You can view the code on github, view the result here, or play interactively on jsfiddle.

The first step is to create a <canvas> in the DOM. I gave mine an id of “mirror”:

```html
<canvas id="mirror"></canvas>
```

Then I used jQuery to select the canvas into a variable and create a context variable from it:

```javascript
//use vars so we only call jQuery selectors once
var mirrorCanvas = $('#mirror')[0];
//reuse the element we already selected instead of querying '#mirror' again
var canvasContext = mirrorCanvas.getContext('2d');
```

Context? The word context is thrown around too much these days, but in the HTML5 canvas world, the getContext method returns a built-in object (a CanvasRenderingContext2D when you ask for '2d') that includes the methods and properties for creating and editing images.

From there it’s just a few commands to mirror your image:

```javascript
canvasContext.translate(area.offsetHeight * vidRatio, 0); //move the drawing cursor to the right edge
canvasContext.scale(-1, 1); //flip the image horizontally
```

I am going to use the scale command to negatively scale the X-axis with scale(-1,1). Before we draw, we need to tell the canvas where to start. Since we are drawing backwards due to the negative scaling, we need to start in the upper right corner, which is what the translate call does. In my case I wanted the image to scale with the height of a “content” div I created, which I mapped to an area variable with jQuery (area.offsetHeight). I also wanted to use whatever native resolution the camera returned, so I created a vidRatio variable that computes the aspect ratio from the video's actual videoWidth and videoHeight. Multiplying the height of my area by the aspect ratio gives a naturally scaled width.
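To make that geometry concrete, here is a small sketch of the arithmetic, not the post's own code: `canvasSize` and `mirrorPoint` are hypothetical helper names. With `translate(width, 0)` followed by `scale(-1, 1)`, a drawing coordinate (x, y) ends up at (width - x, y), so the left edge of the video lands on the right edge of the canvas.

```javascript
// Hypothetical helper: width/height of the canvas when the height follows
// the container (area.offsetHeight in the post) and the width follows the
// camera's native aspect ratio (vidRatio in the post).
function canvasSize(areaHeight, videoWidth, videoHeight) {
  const vidRatio = videoWidth / videoHeight; // e.g. 640 / 480 = 4:3
  return { width: areaHeight * vidRatio, height: areaHeight, vidRatio };
}

// Hypothetical helper: where a point drawn at (x, y) ends up after
// ctx.translate(width, 0) followed by ctx.scale(-1, 1).
// The scale flips x to -x, then the translate shifts it by +width.
function mirrorPoint(x, y, width) {
  return { x: width - x, y };
}
```

So the image's x = 0 column is painted at the canvas's right edge, and its x = width column at the left edge.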

Then you draw the image. You don’t want to draw it only once; you want to redraw it many times per second, so you use the setInterval function with a predefined rate. The rate is the delay in milliseconds between draws, i.e. the inverse of the FPS (1000 / FPS), as shown here:

```javascript
//redraw the current video frame onto the (mirrored) canvas every `rate` ms
window.setInterval(function () {
    canvasContext.drawImage(video, 0, 0, area.offsetHeight * vidRatio, area.offsetHeight);
}, rate);
```
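Since setInterval expects a delay rather than a frame rate, it can help to keep the conversion in one place. The sketch below is an assumption about structure, not the post's code: `intervalForFps` and `startDrawLoop` are hypothetical names, and the optional `timer` argument only exists so the loop can be exercised outside a browser.

```javascript
// Hypothetical helper: setInterval takes a delay in milliseconds,
// which is the inverse of the target frame rate.
function intervalForFps(fps) {
  if (!(fps > 0)) throw new RangeError("fps must be a positive number");
  return 1000 / fps; // e.g. 30 FPS -> ~33.3 ms between draws
}

// Hypothetical wrapper: drawFrame would be the function that calls
// canvasContext.drawImage(...). `timer` defaults to the global object
// (window in a browser) and can be swapped for a fake in tests.
function startDrawLoop(drawFrame, fps, timer = globalThis) {
  const id = timer.setInterval(drawFrame, intervalForFps(fps));
  return () => timer.clearInterval(id); // call this to stop drawing
}
```

In the page, `drawFrame` would be the anonymous function above, and the returned stop function is a convenient hook for turning the canvas mirror off.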

I will talk about the rate in a moment. This got me a mirrored video. From here I made a number of tweaks to automatically resize the canvas when the window size changed and to toggle mirroring on and off.
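The resize and toggle tweaks are not shown here, so the following is only a sketch of one way to do them, with hypothetical names (`applyMirror`, `resizeCanvas`). It relies on two standard canvas behaviors: `setTransform(1, 0, 0, 1, 0, 0)` resets the context to the identity transform, and assigning `canvas.width` or `canvas.height` clears the canvas and resets the context state, including any transform, so the flip has to be re-applied after every resize.

```javascript
// Hypothetical helper: (re)apply or remove the mirror on a 2D context.
// Resetting to the identity first means repeated calls never stack flips.
function applyMirror(ctx, width, mirrored) {
  ctx.setTransform(1, 0, 0, 1, 0, 0); // identity: no translate, no flip
  if (mirrored) {
    ctx.translate(width, 0); // move the origin to the right edge
    ctx.scale(-1, 1);        // flip the x-axis
  }
}

// Hypothetical resize handler: setting the canvas size resets the context
// (transform included), so the mirror is re-applied afterwards.
function resizeCanvas(canvas, ctx, areaHeight, vidRatio, mirrored) {
  canvas.height = areaHeight;
  canvas.width = Math.round(areaHeight * vidRatio); // canvas sizes are integers
  applyMirror(ctx, canvas.width, mirrored);
}
```

A window resize listener would call `resizeCanvas(mirrorCanvas, canvasContext, area.offsetHeight, vidRatio, isMirrored)`, where `isMirrored` is a hypothetical flag flipped by the toggle.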

Since I was adjusting the canvas dimensions based on the native size of the video that was returned, it was important that I get this size. I tied a getDimensions function to the video element's playing event. That did not always work: sometimes the dimensions were not populated right away, particularly on my older camera. To overcome this I built an additional setInterval function to wait until the dimensions were populated before starting the canvas writes:

```javascript
//Sometimes need to wait for dimensions to show
function getDimensions(){
    var timer = 10;
    var waitForDimensions = window.setInterval(function(){
        //see if the video dimensions are available
        if(video.videoWidth*video.videoHeight>0) {
            clearInterval(waitForDimensions);
            vidRatio = video.videoWidth/video.videoHeight;
            console.log("after waiting " + timer + " ms, actual video size is: " +
                video.videoWidth + " x " + video.videoHeight);
            startCanvas();
            //start calculating stats once the video is started
            calculateStats();
        }
        //If not, wait another 10ms
        else {
            //console.log("No video dimensions after " + timer + " ms");
            timer+=10;
        }
    }, 10);
}
```
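The polling above never gives up if a camera never reports its size. A minimal promise-based variant with a timeout could look like this; `whenDimensionsReady`, `stepMs`, and `maxMs` are hypothetical names and defaults, not from the original code. As in the original, the waited time is counted in polling steps, so it is approximate.

```javascript
// Hypothetical promise-based version of the polling above: resolve once the
// element reports non-zero videoWidth/videoHeight, reject after ~maxMs.
function whenDimensionsReady(video, stepMs = 10, maxMs = 5000) {
  return new Promise((resolve, reject) => {
    let waited = 0; // approximate: counts polling steps, not wall-clock time
    const poll = setInterval(() => {
      if (video.videoWidth * video.videoHeight > 0) {
        clearInterval(poll);
        resolve({
          width: video.videoWidth,
          height: video.videoHeight,
          vidRatio: video.videoWidth / video.videoHeight,
          waitedMs: waited,
        });
      } else if (waited >= maxMs) {
        clearInterval(poll); // stop polling instead of spinning forever
        reject(new Error("no video dimensions after " + waited + " ms"));
      } else {
        waited += stepMs;
      }
    }, stepMs);
  });
}
```

From the playing handler this would be used as `whenDimensionsReady(video).then(function (d) { vidRatio = d.vidRatio; startCanvas(); calculateStats(); });`, with a `catch` to report cameras that never deliver a size.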

This seemed to resolve the issue and provided some interesting data on how long it takes some cameras to load.

Frame Rates

So what is the right frame rate to update the canvas? Several examples I came across that used the canvas method above updated at 30 Frames Per Second (FPS). This seems reasonable, but what frame rate was my camera producing? What if my camera isn’t doing 30 FPS? Why do a CPU-intensive canvas draw more often than the camera delivers new frames? This question set me out on a long journey.

To start out, I found a way to measure the frame rate. Unfortunately there is no built-in property in the video element or stream with this information (none that I could find at least), but I did come across a discussion and example in the chromium group on this here. Here is my modified version of the media_stat_perf.html example mentioned there:

```javascript
function calculateStats() {
    //see if webkit stats are available; exit once if they aren't
    //(check the type, not truthiness: the counter can legitimately be 0)
    if (typeof video.webkitDecodedFrameCount === "undefined") {
        console.log("Video FPS calcs not supported");
        return;
    }

    var decodedFrames = 0,
        droppedFrames = 0,
        startTime = new Date().getTime(),
        initialTime = new Date().getTime();

    window.setInterval(function () {
        var currentTime = new Date().getTime();
        var deltaTime = (currentTime - startTime) / 1000;
        var totalTime = (currentTime - initialTime) / 1000;
        startTime = currentTime;

        // Calculate decoded frames per sec.
        var currentDecodedFPS = (video.webkitDecodedFrameCount - decodedFrames) / deltaTime;
        var decodedFPSavg = video.webkitDecodedFrameCount / totalTime;
        decodedFrames = video.webkitDecodedFrameCount;

        // Calculate dropped frames per sec.
        var currentDroppedFPS = (video.webkitDroppedFrameCount - droppedFrames) / deltaTime;
        var droppedFPSavg = video.webkitDroppedFrameCount / totalTime;
        droppedFrames = video.webkitDroppedFrameCount;

        //write the results to a table
        $("#stats")[0].innerHTML =
            "<table><tr><th>Type</th><th>Total</th><th>Avg</th><th>Current</th></tr>" +
            "<tr><td>Decoded</td><td>" + decodedFrames + "</td><td>" + decodedFPSavg.toFixed() +
                "</td><td>" + currentDecodedFPS.toFixed() + "</td></tr>" +
            "<tr><td>Dropped</td><td>" + droppedFrames + "</td><td>" + droppedFPSavg.toFixed() +
                "</td><td>" + currentDroppedFPS.toFixed() + "</td></tr>" +
            "<tr><td>All</td><td>" + (decodedFrames + droppedFrames) + "</td><td>" +
                (decodedFPSavg + droppedFPSavg).toFixed() + "</td><td>" +
                (currentDecodedFPS + currentDroppedFPS).toFixed() + "</td></tr></table>" +
            "Camera resolution: " + video.videoWidth + " x " + video.videoHeight;
    }, 1000);
}
```

This function only works in the Chrome and Opera implementations (the versions I tried are listed below). It reads the video element's webkitDecodedFrameCount and webkitDroppedFrameCount properties and uses a custom timer to estimate frame rates from them. I played around with this on several cameras & browsers. The frame rates it returned varied a lot, sometimes within the same session.
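The core arithmetic in calculateStats() is a difference of cumulative frame counters divided by the elapsed time. Pulled out into a pure function (a hypothetical `frameRates` helper, taking plain sample objects instead of reading the video element), it can be checked without a camera:

```javascript
// Hypothetical helper mirroring calculateStats(): each sample holds the
// cumulative decoded/dropped counters (e.g. webkitDecodedFrameCount and
// webkitDroppedFrameCount) and the time they were read, in ms.
function frameRates(prev, curr) {
  const deltaSec = (curr.timeMs - prev.timeMs) / 1000;
  if (deltaSec <= 0) throw new RangeError("samples must be increasing in time");
  const decodedFps = (curr.decoded - prev.decoded) / deltaSec;
  const droppedFps = (curr.dropped - prev.dropped) / deltaSec;
  // "All" in the stats table: every frame the camera delivered
  return { decodedFps, droppedFps, totalFps: decodedFps + droppedFps };
}
```

Passing the very first sample as `prev` instead of the previous one gives the running averages in the table's Avg column.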

Here are some highlights:

- This doesn’t work in Firefox (30.0)

- It does work on Opera (22.0)

- Chrome (35.0) appears to adjust the framerate based on CPU load

- Chrome may adjust the framerate based on battery information: one night I was able to get Chrome to switch between 15 and 30 FPS just by removing and inserting my power cord, but it hasn’t worked since (I’m not sure whether my browser auto-updated)

- Adjusting the window/canvas size would sometimes alter the frame rate

- I got framerates between 8 and 30 FPS across devices

- Chrome has some good rendering tools for checking the framerate the browser is actually writing at (see here for those)

The canvas can suck up a lot of CPU

I found that writing a large canvas like this can eat up a lot of CPU. Getting exact CPU measurements is difficult with all the automated optimizations that occur in the background, but in a few simple tests the canvas writes alone took roughly 10% of the CPU on my Galaxy S5. I almost always noticed a performance difference between the stream shown in the <video> element and the mirrored stream in the <canvas>. This was very apparent when Chrome’s FPS tool indicated the rendered frame rate was lower than the native frame rate of the video, though again this is tough to pin down precisely because of the automated optimizations that adjust it.

Mirror the easy way with CSS3 transforms

Mirroring any element is easy with CSS transforms; all you need is:

```css
transform: scale(-1, 1); /* For Firefox (& IE) */
-webkit-transform: scale(-1, 1); /* for Chrome & Opera (& Safari) */
```

That’s it. No need to create & manipulate a canvas element. Better performance and 100 fewer lines of code.
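If you want the same on/off toggle with the CSS approach, a minimal sketch is to set the transform from JavaScript. `setMirrored` is a hypothetical helper; it writes both the standard and the `-webkit-` property, matching the two CSS lines above.

```javascript
// Hypothetical helper: toggle the CSS mirror on any element, e.g. the local
// <video>. An empty string removes the inline transform again.
function setMirrored(element, mirrored) {
  const value = mirrored ? "scale(-1, 1)" : "";
  element.style.transform = value;       // standard property
  element.style.webkitTransform = value; // prefixed form for older WebKit/Blink
}
```

For example, `setMirrored(document.querySelector("video"), true)`. Like the CSS, this only changes how the element is rendered locally; the stream sent to the other side is not flipped.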

I discovered this after having so much CPU performance trouble with the canvas approach. I assumed there had to be a better way, so I went back to the AppRTC sample in the core WebRTC code to see how it was done there.

You can grab the code on github, view the result here, or play with it interactively on jsfiddle.

Take-aways

Actually, looking through my previous experiments, I found I had already cut & pasted examples that used the CSS3 transform. If I had paid attention, this would have been a shorter post.

The canvas is still needed if you want to manipulate the image beyond what basic CSS3 transforms can do. If you do use the canvas method, it is best to keep it in a smaller window so it doesn’t drag down performance.

I also learned that frame rates, even those coming directly from your camera, are NOT very deterministic. Don’t assume an FPS value, and don’t depend on it being constant, even when the video is local.

{“author”: “chad“}

Updated on 7/26/2014 with links to github repos and github.io hosting.
