I wanted to add local recording to my own Jitsi Meet instance. The feature wasn’t built in the way I wanted, so I set out on a hack to build something simple. That led me down the road to discovering that:

- getDisplayMedia for screen capture has many quirks,
- mediaRecorder for media recording has some of its own unexpected limitations, and
- Adding your own HTML/JavaScript to Jitsi Meet is pretty simple

Read on for plenty of details and some reference code. My result is located in this repo.

Editor’s note: see the comments section for some very relevant commentary and caveats from Jan-Ivar at Mozilla.

The Problem

I built a Jitsi Meet server a few months ago with the intention of updating my Build your own phone company with WebRTC and a weekend post. There are a billion posts/videos on how to set up Jitsi Meet, and I don’t have anything new or interesting to add to the technosphere there. However, one feature I really wanted to implement is recording. I often do demos, and recording the session is useful for others and for future reference. On the surface, my requirements here are simple – record my audio and the audio and video of the Jitsi Meet session on demand and save the file locally. This sounds like a simple feature to add, but…

Jitsi Cloud Recording Challenges

Jitsi has a module called Jibri used for recording. Jibri is more complicated to install and configure than the base Jitsi Meet, but you can struggle through it in hours or less if you’re familiar with the underlying system. Jibri loads a headless browser that acts as a silent participant in the call, grabbing the audio and saving it to disk. This approach is fairly resource intensive, which forced me to upgrade my server from $5/mo to $20/mo. It also only handles a single recording at a time. If you want to record multiple sessions you can set it up to launch multiple Docker containers, which starts getting complex. On top of that, you also need to build a mechanism to transfer the files someplace after they are recorded or set up the Dropbox integration. I really just wanted a quick way to record a session and share it afterwards and this was all getting very complex. Time to look for a simpler way.

Local Recording

Another approach is to just record locally. Local recording is more secure by nature as you are not leaving unencrypted media on a server somewhere. It is also less resource intensive since you are using your local computer to save media it is already receiving vs. adding a new element in the cloud. Jitsi actually has an option for this, but that only includes the audio. I need to record whatever I am looking at on the screen too. So I set out on a hack to add local screen recording.

How to get the Media

The lazy way to do this is to hack together some audio sources for QuickTime recording or just use one of the WebRTC-based recording APIs or browser extensions, but that wouldn’t make for much of a post. Nor is it something that will work universally for all users who join over the web. I had previously made an audio recorder that overloads the createPeerConnection API, grabs all the tracks across multiple connections, and saves all the audio to file. This actually worked with Jitsi Meet’s multiple audio connections. I could have set up some canvas that would take the screen and save that too, but I found a much simpler way with getDisplayMedia.

getDisplayMedia review

The getDisplayMedia API for sharing Desktop media was introduced a while ago, which we covered here. The good news is all the major browsers implement getDisplayMedia. The bad news is these implementations are all different, and it could have an impact on the user experience. Let’s take a look.

Note: when I refer to Edge below, I am using the new Chromium-based Edge.

Test code

I thought this would be an easy API to evaluate, but I should know better. To help gather some data I wrote some code to help call getDisplayMedia and test parameters. You can see that on GitHub here or run it on my site here.

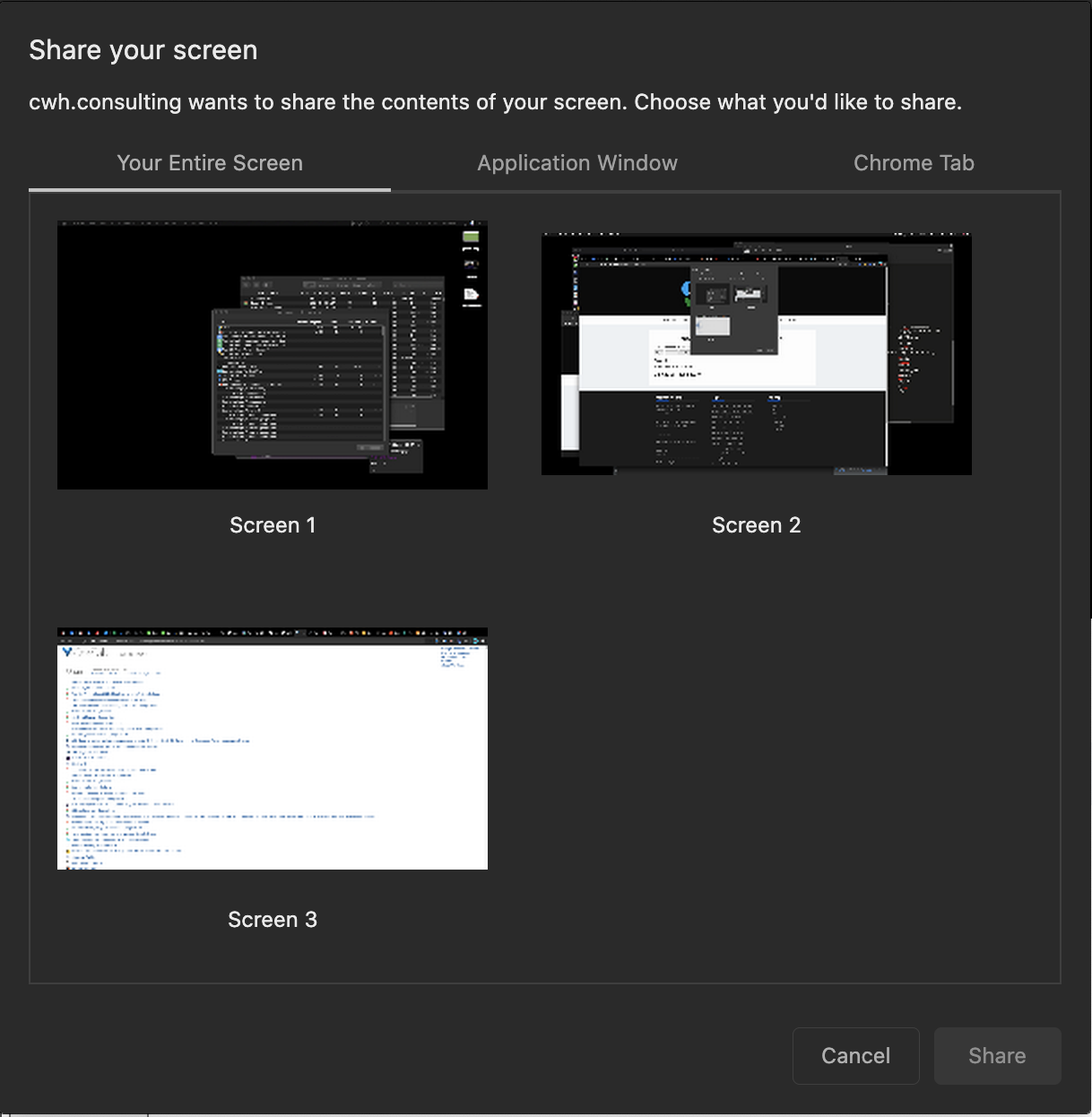

Screen Share Pickers

There are big differences in the recording picker options. Chrome and Edge allow choosing among any full display, application window, or browser tab. Firefox excludes the browser tab option. Safari has no picker and only lets you choose the current display.

You can see the UI differences below:

Chrome

Version: 84

Selection options: Display, Window, Tab

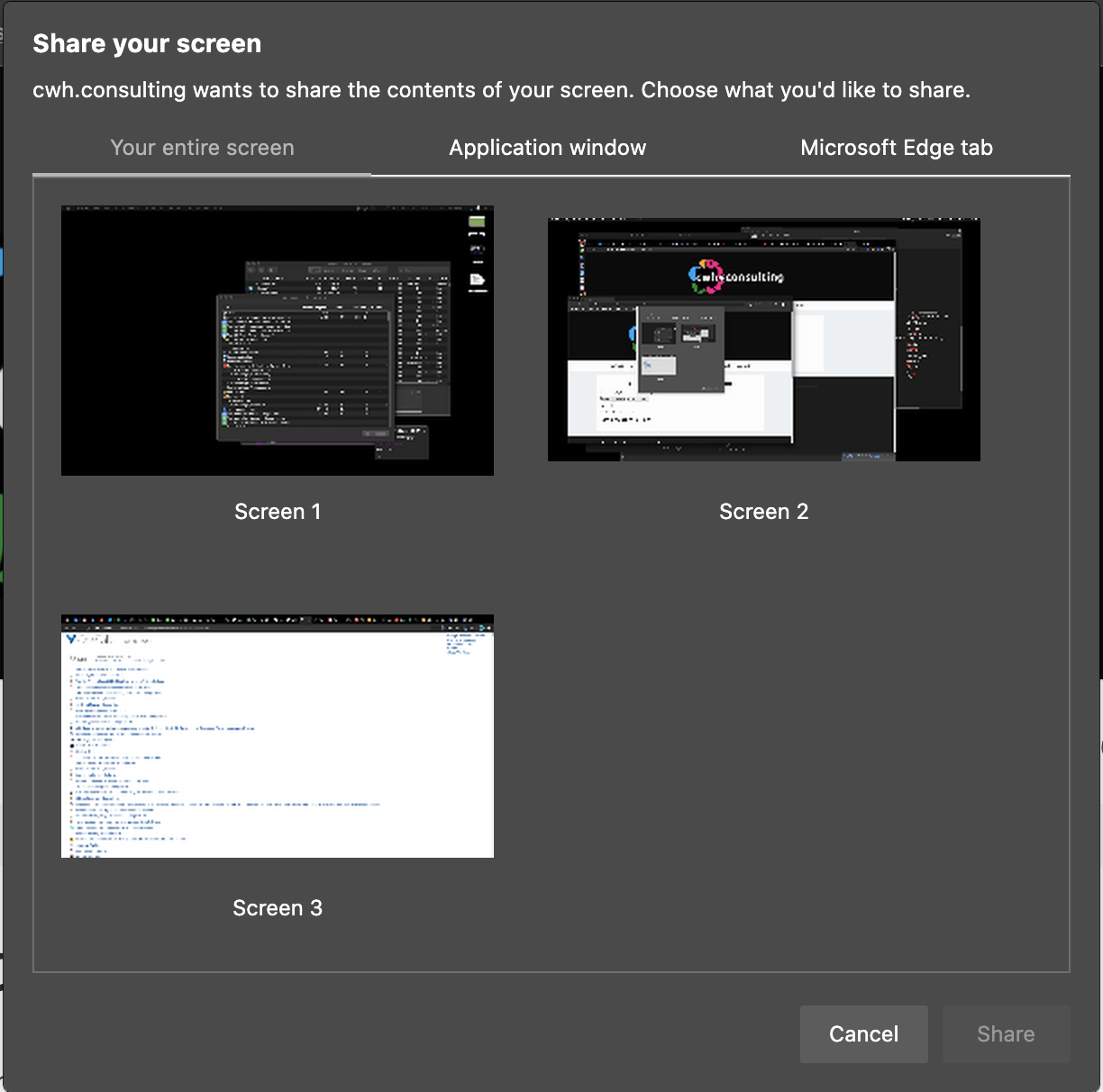

Edge

Version: 84

Selection options: Display, Window, Tab

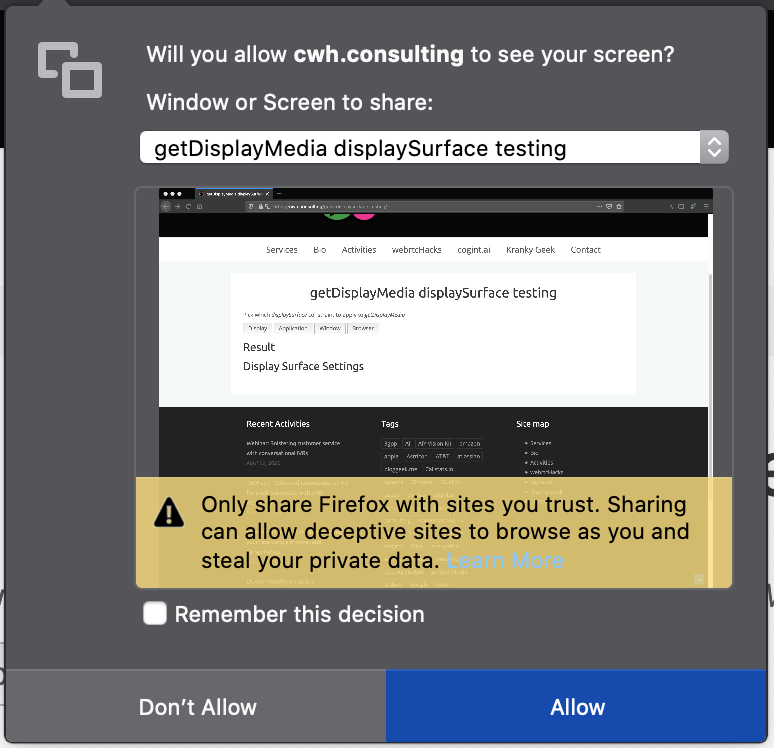

Firefox

Version: 77

Selection options: Display, Window

Safari

Version: 13.1

Selection options: Current display

Chrome and Edge display a blue highlight box around the inside of the window frame to indicate the tab is being shared.

displaySurface selection constraints are useless

The getDisplayMedia API includes a displaySurface option for choosing between desktop display, window, application, or browser tab. In my case I really only care about recording my Jitsi Meet tab. I wanted to see if I could simplify the user interface by limiting the selection options. That seems like it should be easy to do with constraints.

However, the spec says:

> The user agent MUST let the end-user choose which display surface to share out of all available choices every time, and MUST NOT use constraints to limit that choice.

In fact, unlike getUserMedia :

> Constraints serve a different purpose in getDisplayMedia than they do in getUserMedia. They do not aid discovery, instead they are applied only after user-selection. (source)

This means in practice these constraints mean nothing. See the spec for more, but essentially the constraints aren’t allowed to do anything so there isn’t much point in using them. You also can’t enumerateDevices() on display surfaces or look for devicechange events either.

(As an aside: Perhaps these limitations are why Google Hangouts still uses its own extension mechanism instead of getDisplayMedia).

Note on Framerates

Like getUserMedia you can apply video resolution and frame rate constraints. The video resolution will just resize the video after capture. You can also reduce the frame rate if you want to reduce some cycles. In fact, you will see many WebRTC video conferencing services that send a screenshare reduce this to 10 or less depending on the content shared to minimize CPU usage.
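To make the frame rate point concrete, here is a minimal sketch of a constraints object that caps the capture frame rate. The helper name buildDisplayConstraints and its option names are illustrative assumptions, not part of any API; only the returned object is what getDisplayMedia receives.

```javascript
// Hypothetical helper (not from the post's repo): build getDisplayMedia
// constraints that cap the frame rate and, optionally, the resolution.
function buildDisplayConstraints({ maxFrameRate = 10, maxWidth, maxHeight } = {}) {
  const video = { frameRate: { max: maxFrameRate } };
  if (maxWidth) video.width = { max: maxWidth };
  if (maxHeight) video.height = { max: maxHeight };
  return { video };
}

// Browser-only usage, e.g. inside a click handler:
//   const stream = await navigator.mediaDevices.getDisplayMedia(
//     buildDisplayConstraints({ maxFrameRate: 10 }));
//   console.log(stream.getVideoTracks()[0].getSettings().frameRate);
```

Only max values are used here because these constraints are applied after the user has picked a surface, so they act as a cap rather than a filter.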

User Gesture Requirements

Firefox and Safari require a user gesture, like a button click, before you can access getDisplayMedia. Chrome and Edge do not.

Try it yourself by pasting this into the JavaScript console:

    navigator.mediaDevices.getDisplayMedia().then(console.log).catch(console.error);
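For code that should behave the same in every browser, the safe pattern is to start the capture from inside a click handler. The following is a minimal sketch; shareOnClick, onStream, and onError are hypothetical names, and the mediaDevices parameter exists only so the function can be exercised outside a browser.

```javascript
// Sketch: only call getDisplayMedia from inside a user gesture handler.
// `button` is any element with addEventListener. `mediaDevices` falls back
// to navigator.mediaDevices when it is not supplied.
function shareOnClick(button, onStream, onError = console.error, mediaDevices) {
  button.addEventListener('click', () => {
    const devices = mediaDevices || navigator.mediaDevices;
    // Called synchronously inside the handler so the gesture still counts.
    devices.getDisplayMedia({ video: true }).then(onStream).catch(onError);
  });
}

// Browser usage:
//   shareOnClick(document.querySelector('#share'), stream => {
//     document.querySelector('video').srcObject = stream;
//   });
```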

iFrame Permissions

After trying to do my tests in codepen, I discovered there are restrictions on iFrames. Firefox and Safari won’t work in an iFrame without a special allow permission and these permissions are different.

Firefox requires <iframe allow="display-capture"> . Safari requires <iframe allow="display"> .

Chrome and Edge do not have any special iFrame requirements.

Track Content Differences

Perhaps unsurprisingly at this point, there are differences in the information returned by each track when calling getSettings:

| Setting | Chrome | Edge | Firefox | Safari |
|---|---|---|---|---|
| aspectRatio | 1.7777777777777777 | 1.7777777777777777 | - | - |
| deviceId | web-contents-media-stream://1698:769 | web-contents-media-stream://9:1 | - | - |
| frameRate | 30 | 30 | 30 | 30 |
| height | 1080 | 1692 | 2100 | 0 |
| width | 1920 | 3008 | 3360 | 0 |
| resizeMode | crop-and-scale | crop-and-scale | - | - |
| cursor | motion | motion | - | - |
| displaySurface | browser | browser | - | - |
| logicalSurface | true | true | - | - |
| videoKind | - | color | - | - |

Chrome and Edge are identical as usual except Edge adds a videoKind option. I am not sure what this is for. Firefox gives minimal video information. Safari only provides a valid frameRate; its height and width come back as 0.
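Given these differences, any code that reads getSettings() on a display track should treat every field as optional. Here is a minimal sketch of that idea; summarizeDisplayTrack is a hypothetical helper name, and the fallback values are my own choice.

```javascript
// Sketch: read getSettings() defensively, since browsers fill in different fields.
function summarizeDisplayTrack(track) {
  const s = track.getSettings();
  return {
    // e.g. 'browser' for a tab where reported; missing in Firefox and Safari above
    surface: s.displaySurface || 'unknown',
    frameRate: s.frameRate,
    // Safari reported 0 x 0 in the tests above, so treat that as "not known"
    hasDimensions: Boolean(s.width && s.height),
  };
}

// Browser usage:
//   const [track] = stream.getVideoTracks();
//   console.log(summarizeDisplayTrack(track));
```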

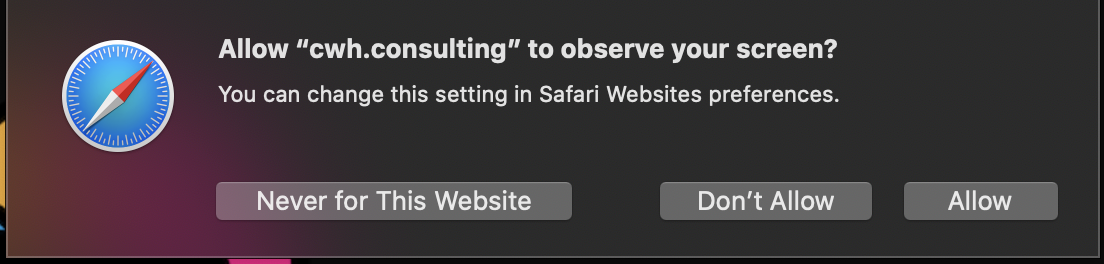

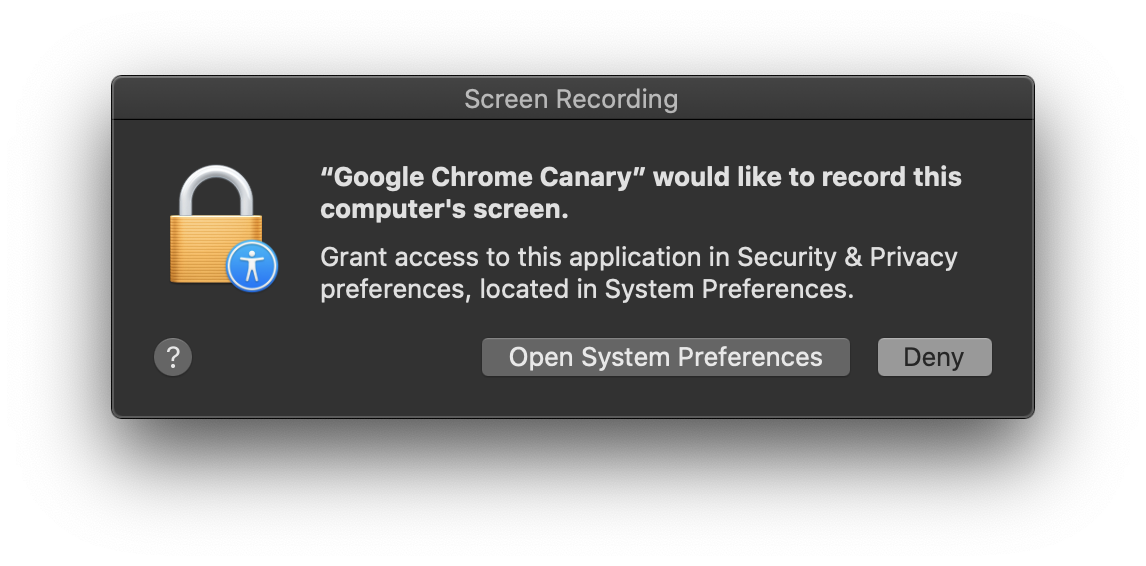

MacOS Catalina Permissions

Don’t forget MacOS Catalina introduced new screen recording permissions at the OS level. This means you need to grant Screen Recording access to the application.

Unfortunately this setting only takes effect after you reset the application, which is very inconvenient if you are in the middle of a meeting and need to present something quickly:

If you are implementing getDisplayMedia for the first time you might want to warn your Mac users of this.

Mobile support

Mobile support doesn’t exist. getDisplayMedia doesn’t work in Android or iOS for any browser.

getDisplayMedia with Audio

Getting the audio of the various participants is challenging, but can be accomplished by overloading the peerConnection and intercepting the streams. However, getting access to the system audio – for say capturing the audio of a video or shared application – is not possible using this method. Fortunately the getDisplayMedia spec does allow for the capture of system audio. This was not published in any of the discuss-webrtc PSAs that I saw, but Chrome introduced this capability last year.

Audio parameter

The audio capture parameter looks just like it does for getUserMedia . Audio is easy to capture by adding an audio: true parameter:

navigator.mediaDevices.getDisplayMedia({ video: true, audio: true });

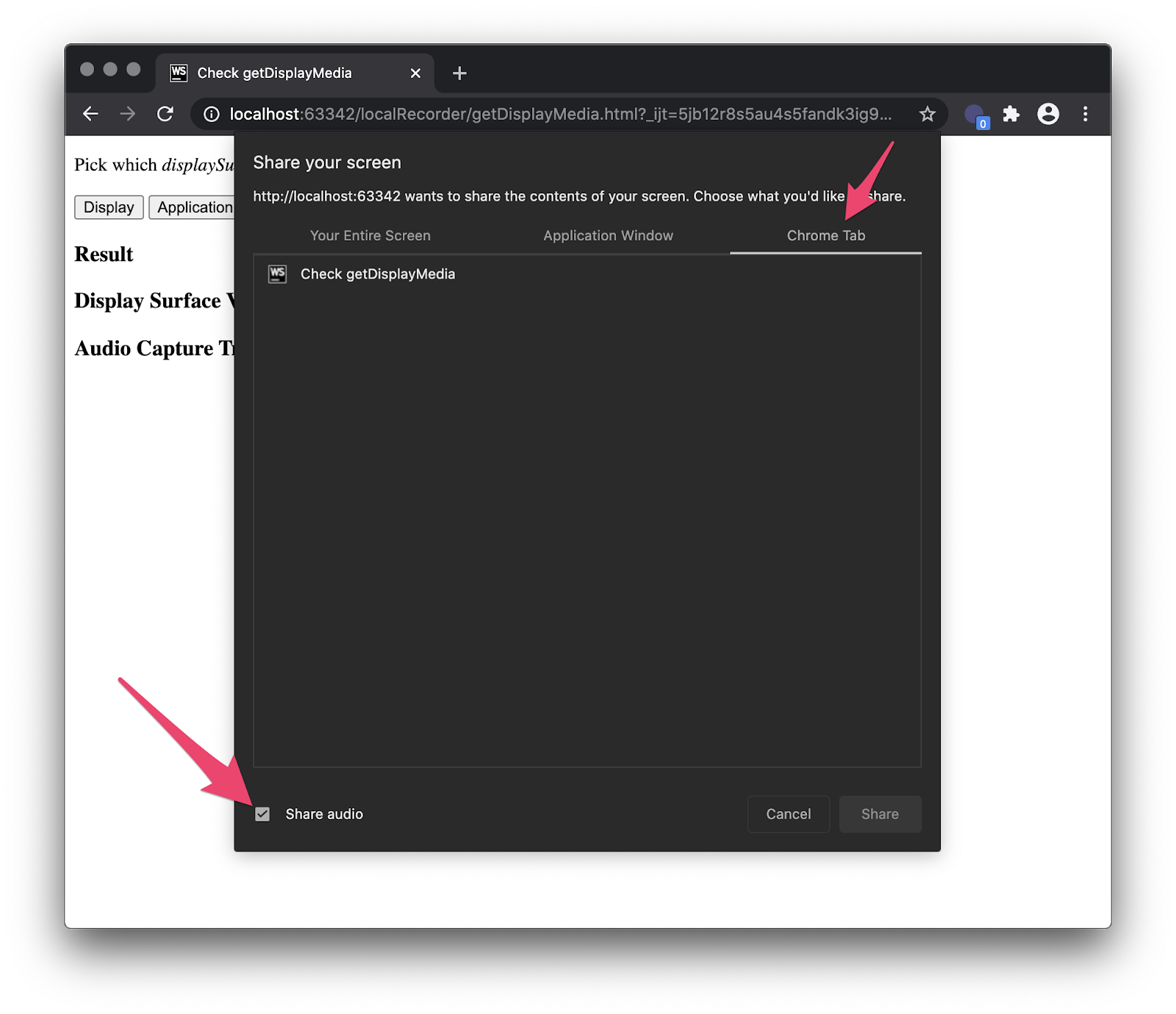

On MacOS, you will see a Share audio option when you select Chrome Tab:

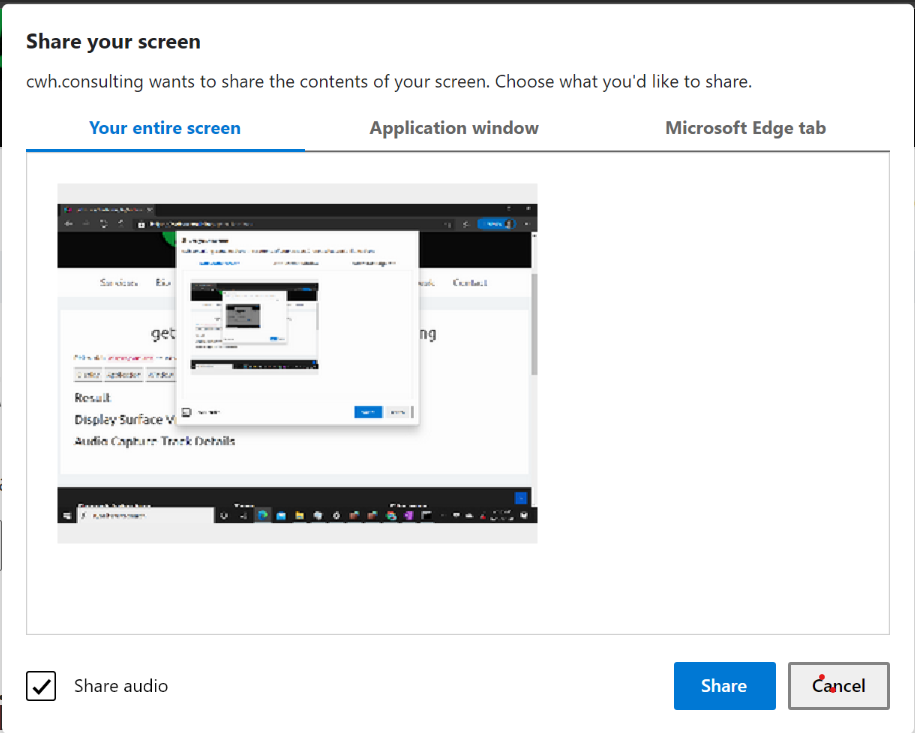

On Windows this shows when you select “Your entire screen” or a tab:

Quirks in getDisplayMedia Audio Capture

Audio capture with getDisplayMedia is fairly limited and does not provide a consistent user experience.

Different Operating Systems; different behaviors

As shown above, audio capture is only available when sharing a tab on MacOS. On Windows it is available for the whole screen or a tab. On Linux it is only available for tabs. Audio capture is not available for a specific application or window on any of these operating systems.

Browser support

Only Chrome and Edge support audio capture, so browser support for display plus audio capture is also poor.

Inconsistent constraint checks

navigator.mediaDevices.getDisplayMedia({audio:true}).then(console.log).catch(console.error); results in a TypeError: Failed to execute 'getDisplayMedia' on 'MediaDevices': Audio only requests are not supported .

Changing the parameters to {video: true, audio: true} works, but if the user does not select an audio source there is no warning or rejection. If your app expects audio, then you need to check for it by counting the audio tracks:

    navigator.mediaDevices.getDisplayMedia({video: true, audio:true}).then(s=>s.getAudioTracks().length>0).catch(console.error);
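The check above can be wrapped so that a missing audio track is treated as an error rather than silently ignored. This is a minimal sketch under my own assumptions: captureDisplayWithAudio is a hypothetical name, the error message is illustrative, and mediaDevices is passed in (use navigator.mediaDevices in a page).

```javascript
// Sketch: request screen + audio and fail loudly if the user did not share audio.
async function captureDisplayWithAudio(mediaDevices) {
  const stream = await mediaDevices.getDisplayMedia({ video: true, audio: true });
  if (stream.getAudioTracks().length === 0) {
    // Release the capture so the browser's sharing indicator goes away.
    stream.getTracks().forEach(track => track.stop());
    throw new Error('No audio track: the audio sharing option was not selected');
  }
  return stream;
}

// Browser usage (inside a click handler):
//   captureDisplayWithAudio(navigator.mediaDevices)
//     .then(stream => console.log('got audio', stream.getAudioTracks()))
//     .catch(err => alert(err.message));
```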

Audio parameters

Calling getSettings() on the audio track output looks like this:

    autoGainControl: true
    channelCount: 1
    deviceId: web-contents-media-stream://37:4
    echoCancellation: true
    latency: 0.01
    noiseSuppression: true
    sampleRate: 48000
    sampleSize: 16

I was not able to adjust the sampleSize by adjusting the parameters. Using various values, I was able to change the sampleRate to either 44100 or 48000 with some quick tests. These happen to be the default rates for PCM and Opus audio, the mandated audio codecs supported by WebRTC. I could also turn off the autoGainControl, echoCancellation, and noiseSuppression. I did not have a good way to test if these actually do anything when off.
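As a rough illustration of the experiment described above, the sketch below builds the audio constraints with processing turned off and then compares them with what the track reports. Both function names are hypothetical, and whether a browser honors any given field is up to the browser.

```javascript
// Sketch: ask for display audio with the voice-processing steps turned off.
function buildRawAudioDisplayConstraints(sampleRate = 48000) {
  return {
    video: true,
    audio: {
      autoGainControl: false,
      echoCancellation: false,
      noiseSuppression: false,
      sampleRate,
    },
  };
}

// Compare what was requested against what the track's getSettings() reports
// and return the names of the settings that did not stick.
function unmetAudioSettings(requested, settings) {
  return Object.keys(requested).filter(key => settings[key] !== requested[key]);
}

// Browser usage:
//   const constraints = buildRawAudioDisplayConstraints(44100);
//   const stream = await navigator.mediaDevices.getDisplayMedia(constraints);
//   const [audio] = stream.getAudioTracks();
//   if (audio) console.log(unmetAudioSettings(constraints.audio, audio.getSettings()));
```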

Note turning off echoCancellation did result in 2 tracks returned. There is a lot more that could be tested here with the audio capture devices, but that was beyond the scope of my evaluation this time.

Recording media with MediaRecorder

MediaRecorder API review

The mediaRecorder API makes it super easy to record a stream. Just:

    const recorder = new MediaRecorder(recorderStream, {mimeType: 'video/webm'});
    recorder.start();

Then we just listen for new data, and save it to an array somewhere:

    recorder.ondataavailable = e => {
        if (e.data && e.data.size > 0) {
            recordingData.push(e.data);
        }
    };

After that we can playback that stream or save it to disk. See MDN’s mediaRecorder API guide for more details or my sample for examples on playback and saving to disk.
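Saving to disk usually means joining the collected chunks into one Blob and triggering a download. Below is a minimal sketch of that step, not the code from my sample; the function names, the default file name, and the one second cleanup delay are all assumptions.

```javascript
// Sketch: turn the recorded chunks into one Blob.
function recordingToBlob(chunks, mimeType = 'video/webm') {
  return new Blob(chunks, { type: mimeType });
}

// Browser-only: create a temporary link and click it to start the download.
function downloadBlob(blob, filename = 'recording.webm') {
  const url = URL.createObjectURL(blob);
  const link = document.createElement('a');
  link.href = url;
  link.download = filename;
  document.body.appendChild(link);
  link.click();
  link.remove();
  // Free the object URL once the download has had a chance to start.
  setTimeout(() => URL.revokeObjectURL(url), 1000);
}

// Typical wiring in a page:
//   recorder.onstop = () => downloadBlob(recordingToBlob(recordingData));
//   recorder.stop();
```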

Note on recording timeslice parameter (i.e. – recorder.start(100) ) – don’t do it without a good reason! See why this was changed in the WebRTC repo official sample in this issue.

mediaRecorder browser differences

I have several different streams I want to record:

- The local getUserMedia stream so I can hear what I am saying

- The system audio to capture what everyone else is saying and whatever I am playing

- The screen capture

So just add a couple of audio tracks and the one video track to a single stream and send that to the mediaRecorder, right? Well, no – as explained in this Chromium doc, mediaRecorder will only record a single track the way it is implemented in Chrome today.

Firefox does support stereo recording.

Safari doesn’t support mediaRecorder at all. There is however a neat polyfill that adds that support.
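Because support varies, it helps to probe before constructing a recorder. MediaRecorder.isTypeSupported is a real static method; the helper name and the candidate list in this sketch are assumptions you should adjust.

```javascript
// Sketch: pick the first container/codec string the browser can record.
// Returns null when nothing matches or MediaRecorder does not exist.
function pickRecorderMimeType(
  candidates = ['video/webm;codecs=vp9,opus', 'video/webm;codecs=vp8,opus', 'video/webm'],
  isSupported = type =>
    typeof MediaRecorder !== 'undefined' && MediaRecorder.isTypeSupported(type),
) {
  return candidates.find(isSupported) || null;
}

// Browser usage:
//   const mimeType = pickRecorderMimeType();
//   if (!mimeType) console.warn('MediaRecorder is not usable here');
//   else recorder = new MediaRecorder(recorderStream, { mimeType });
```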

WebAudio Mixing to overcome Chrome MediaRecorder issues

Chrome will only record one track at a time. To overcome this we can:

1. Save the individual files and use something like ffmpeg to save a multi-channel stream

2. Use WebAudio to mix multiple audio streams into one and then send that audio stream to the recorder.

Option 2 is much easier. The code here is simple: input 2 streams, mix the audio tracks, and add all the video tracks (mediaRecorder will ignore the 2nd video track if that is included):

    function mixer(stream1, stream2) {
        const ctx = new AudioContext();
        const dest = ctx.createMediaStreamDestination();

        // Route each stream's audio (if it has any) into the shared destination
        if (stream1.getAudioTracks().length > 0)
            ctx.createMediaStreamSource(stream1).connect(dest);

        if (stream2.getAudioTracks().length > 0)
            ctx.createMediaStreamSource(stream2).connect(dest);

        // One mixed audio track plus every video track from both inputs
        let tracks = dest.stream.getTracks();
        tracks = tracks.concat(stream1.getVideoTracks()).concat(stream2.getVideoTracks());

        return new MediaStream(tracks);
    }
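Putting the pieces together, the overall flow looks roughly like the sketch below. It is not the exact code from the repo: startLocalRecording is a hypothetical name, and the dependencies are passed in as parameters purely so the flow can be exercised outside a browser. I request the screen first so that call stays inside the user gesture.

```javascript
// Sketch of the recording flow. In a page you would call, from a click handler:
//   startLocalRecording({
//     mediaDevices: navigator.mediaDevices, mix: mixer, Recorder: MediaRecorder });
async function startLocalRecording({ mediaDevices, mix, Recorder }) {
  // Screen (plus tab/system audio if the user shares it), then the microphone.
  const displayStream = await mediaDevices.getDisplayMedia({ video: true, audio: true });
  const micStream = await mediaDevices.getUserMedia({ audio: true, video: false });

  // Collapse both audio sources into one track for the recorder.
  const recorderStream = mix(micStream, displayStream);

  const chunks = [];
  const recorder = new Recorder(recorderStream, { mimeType: 'video/webm' });
  recorder.ondataavailable = e => {
    if (e.data && e.data.size > 0) chunks.push(e.data);
  };
  recorder.start(); // no timeslice, per the note above
  return { recorder, chunks };
}
```

Calling recorder.stop() later flushes the final chunk into chunks, which can then be played back or saved.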

You can also check out Muaz Khan’s MultiStreamMixer for something a little more robust that handles video source mixing too.

Adding the code to Jitsi

The next challenge was adding this code to Jitsi. You could load this code in your own page and then load in Jitsi in an iFrame or even use the Jitsi iFrame API to autoload the iFrame into a designated element. However, that would require some additional work to direct people to the proper room. I wanted to continue just sharing a link like https://meet.your.tld/room without more work. It is possible to do this without building the Jitsi source code – here’s how.

Using Jitsi Meet’s static files

Jitsi actually serves static files to load its various web elements. In the Debian install, these files are located at /usr/share/jitsi-meet/. Examining /usr/share/jitsi-meet/index.html, one can see Jitsi Meet inserts modules using <!--#include virtual="yourfile.html" -->:

    <script><!--#include virtual="/config.js" --></script><!-- adapt to your needs, i.e. set hosts and bosh path -->
    <!--#include virtual="connection_optimization/connection_optimization.html" -->
    <script src="libs/do_external_connect.min.js?v=1"></script>
    <script><!--#include virtual="/interface_config.js" --></script>
    <script><!--#include virtual="/logging_config.js" --></script>
    <script src="libs/lib-jitsi-meet.min.js?v=4025"></script>
    <script src="libs/app.bundle.min.js?v=4025"></script>
    <!--#include virtual="title.html" -->
    <!--#include virtual="plugin.head.html" -->
    <!--#include virtual="static/welcomePageAdditionalContent.html" -->
    <!--#include virtual="static/settingsToolbarAdditionalContent.html" -->
    </head>

We simply need to insert our content in the body section like so:

    <body>
        <!--#include virtual="body.html" -->
        <div id="react"></div>
        <!--#include virtual="static/recorder.html" -->
    </body>

The code

Files placed in that static directory will be served, so you then need to get the recorder files there. I broke the local recording functionality out into 2 files:

- HTML with some buttons and ugly CSS and

- JavaScript file for all the logic discussed above with some basic playback and save controls.

The repo also includes a bash script which will copy these files and insert the recorder into Jitsi Meet’s index.html .
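To illustrate what the insertion step amounts to, here is a small JavaScript sketch that adds the include line before the closing body tag. It mirrors the idea only and is not the bash script from the repo; the function and constant names are hypothetical.

```javascript
// Sketch: add the recorder include just before </body>, once.
const RECORDER_INCLUDE = '<!--#include virtual="static/recorder.html" -->';

function insertRecorderInclude(indexHtml) {
  if (indexHtml.includes(RECORDER_INCLUDE)) return indexHtml; // already patched
  const closeBody = indexHtml.lastIndexOf('</body>');
  if (closeBody === -1) throw new Error('no </body> tag found');
  return indexHtml.slice(0, closeBody) + RECORDER_INCLUDE + '\n' + indexHtml.slice(closeBody);
}

// Node usage (run with enough privileges to write the file; back it up first):
//   const fs = require('fs');
//   const file = '/usr/share/jitsi-meet/index.html';
//   fs.writeFileSync(file, insertRecorderInclude(fs.readFileSync(file, 'utf8')));
```

Because the function returns the input unchanged when the include is already present, running it twice is harmless.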

The full repo is https://github.com/webrtcHacks/jitsiLocalRecorder.

Not Just Jitsi

This approach will actually work to record anything as long as you load it. It doesn’t need to be Jitsi. You can see a demo of this on my site here.

This is a mediocre hack

This works for me, but it is kind of ugly – both in the literal sense since my CSS sucks – and more generally because there are many limitations to this approach:

- Bad screen picker UI – The getDisplayMedia with audio picker is very limited. I can remember how to navigate the picker to find the tiny audio checkbox, but that is not something you can ask your average user.

- No audio device selection – I call getUserMedia without specifying a media device, so it might not be the same one I use in the Jitsi Meet session. This could be rectified by adding a media device selection UI element, using one of the Jitsi APIs, or by overloading getUserMedia.

- No recording notification – in many places you may have a legal obligation to tell others you are recording them. The minimalistic GUI only acts locally. Unlike the built-in Jitsi Meet recording tools, my hack has none of this. I experimented with using the lib-jitsi-meet API to add a new recording participant to the call with success, but this has the downside of forcing the bridge out of peer-to-peer mode (p2p4121) and then consuming more resources.
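For the audio device selection limitation, the building blocks are enumerateDevices and a deviceId constraint. The sketch below shows one possible shape, which the recorder does not currently implement; the function names are hypothetical and mediaDevices is passed in (navigator.mediaDevices in a page).

```javascript
// Sketch: list microphones so a UI can offer a choice.
// Note: device labels are empty until the page has been granted media permission.
async function listMicrophones(mediaDevices) {
  const devices = await mediaDevices.enumerateDevices();
  return devices.filter(d => d.kind === 'audioinput');
}

// Build getUserMedia constraints that pin one microphone,
// falling back to the browser default when none was chosen.
function micConstraints(deviceId) {
  return { audio: deviceId ? { deviceId: { exact: deviceId } } : true, video: false };
}

// Browser usage:
//   const mics = await listMicrophones(navigator.mediaDevices);
//   const mic = await navigator.mediaDevices.getUserMedia(micConstraints(mics[0].deviceId));
```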

So, I was able to accomplish my goal but this probably is not going to be widely applicable. At least I learned something along the way!

{“author”: “chad hart“}

Jan-Ivar over at Mozilla had a number of great comments I will review and address in an update. Here they are for readers who see this before I make that update:

Under “iFrame Permissions” you say “Safari requires iframe allow="display"” but for me, Safari 13.1 on macOS seems to support “display-capture” which btw is to spec.

(Safari on iOS is another matter; no support even on ipads.)

“API includes a displaySurface option for choosing between desktop … displaySurface selection constraints are useless … essentially the constraints are allowed to do anything”

Yes, they’re read-only effectively, and were almost removed to avoid confusion. However, they do let JS know what category of surface the user chose, using track.getSettings(), so not 100% useless even though perhaps it’s close 😉.

“Firefox and Safari require a user gesture”

“Firefox, Safari, and the spec require a user gesture” — might be helpful to readers as to what changes to expect here 😉

“there are differences in the information returned by each track”

FWIW constraints are source-specific, and there’s an explicit list in the screen-capture spec of the only constraints that should work there. E.g. I believe all of the audio constraints you mention are specific to microphones, so I wouldn’t expect them to stick around in Chrome.

“Inconsistent constraint checks … Audio only requests are not supported”

True, though the error is a Chrome bug. In Firefox (even though it doesn’t support audio yet) as well as the spec, {video} defaults to true in this spec, which means calling navigator.mediaDevices.getDisplayMedia() without arguments should get you video, and {audio: true} = {video: true, audio: true}.

I also wanted to mention the “limitations” are intentional and not likely to be lifted: sharing a web surface exposes users to unique security attacks that are hard to explain even to experts (circumventing the same-origin policy). See this blog for details.

Oh and thanks for covering the macOS permission stuff. FWIW on the “Unfortunately this setting only takes effect after you reset the application” it seems to work for me even when I don’t, but YMMV.

i tried to use this solution, but participants audio is not recording, can u please help me to know the issue

Thanks for your posting.

I have followed your steps but after download, I cannot play the video file.

But when I click play button, it plays well.

What should I do? please help me.

Thanks for helpful posting.

I have tried follow steps as you mentioned. After download video file, and I could not play it. It seems video file break down. but When I click play button, it plays as well,

What should I do? please help me.

hi Chad Hart,

nice article, and its work on jitsi.but i did’t want capture entire screen display, just video large only $(‘video#largeVideo’) but also audio. could you give me insight.

Hi Chad,

I have tried your code with self hosted jitsi. After recording the voice form mic is coming but the voice of speakers is not coming in the saved webm file. I am using google chrome on windows to access the vc. Is there any permission to be given from browser?

Dear Chad,

Using the above code I am not able to capture speaker voice of participants,I am using Chrome on windows to access the self hosted jitsi application where your code is integrated

For everyone who is having trouble with the Jitsi Local Recorder: I am planning to update the project this week to fix some bugs. Please hold tight.

In the future, please file your bugs as issues here: https://github.com/webrtcHacks/jitsiLocalRecorder

Of course pull requests with fixes are always welcome!

Is it possible to save the file as mp4 video format?

How would it be?

The native JavaScript browser APIs don’t let you save direct to mp4. You could use something like ffmpeg.wasm in the browser with JavaScript or go to an external service for that conversion like AWS Elastic Transcoder.

Improved local recording solution. The audio of the conference participants is recorded and mixed from the jitsi streams. Also does not require permission to record local audio – audio is taken from the audio stream of a user-selected microphone. Hence video and audio recording works in all popular browsers. There is also no problem with new joined participants during recording. Enjoy –

https://github.com/TALRACE/JitsiLocalScreenRecorder