I’ve had an ongoing annoyance with screen sharing while presenting. You go into your video conferencing app, say hello to everyone, and then start sharing your deck which is in another tab or window. After that, what do you look at? The presentation? But I want to see and interact with the audience. Some tools give you a preview of what you are sharing, but you still need to jump back and forth to the deck to advance the slides. It seems like there should be a better way… and now there is with Capture Handle.

Capture Handle is a new API in origin trial for use with screen sharing (getDisplayMedia) that lets a screen sharing app identify and coordinate with the tab the user selects.

Elad Alon is a Googler involved with the webrtc.org team. He put together the Capture Handle spec draft and is pushing it through the W3C standardization process.

In this post, Elad introduces Capture Handle with examples showing how to prevent the “hall of mirrors” effect and how to fix the slide-advancement problem I described above. Capture Handle has many other uses too. More details are below in the post.

{“editor”: “chad hart“}

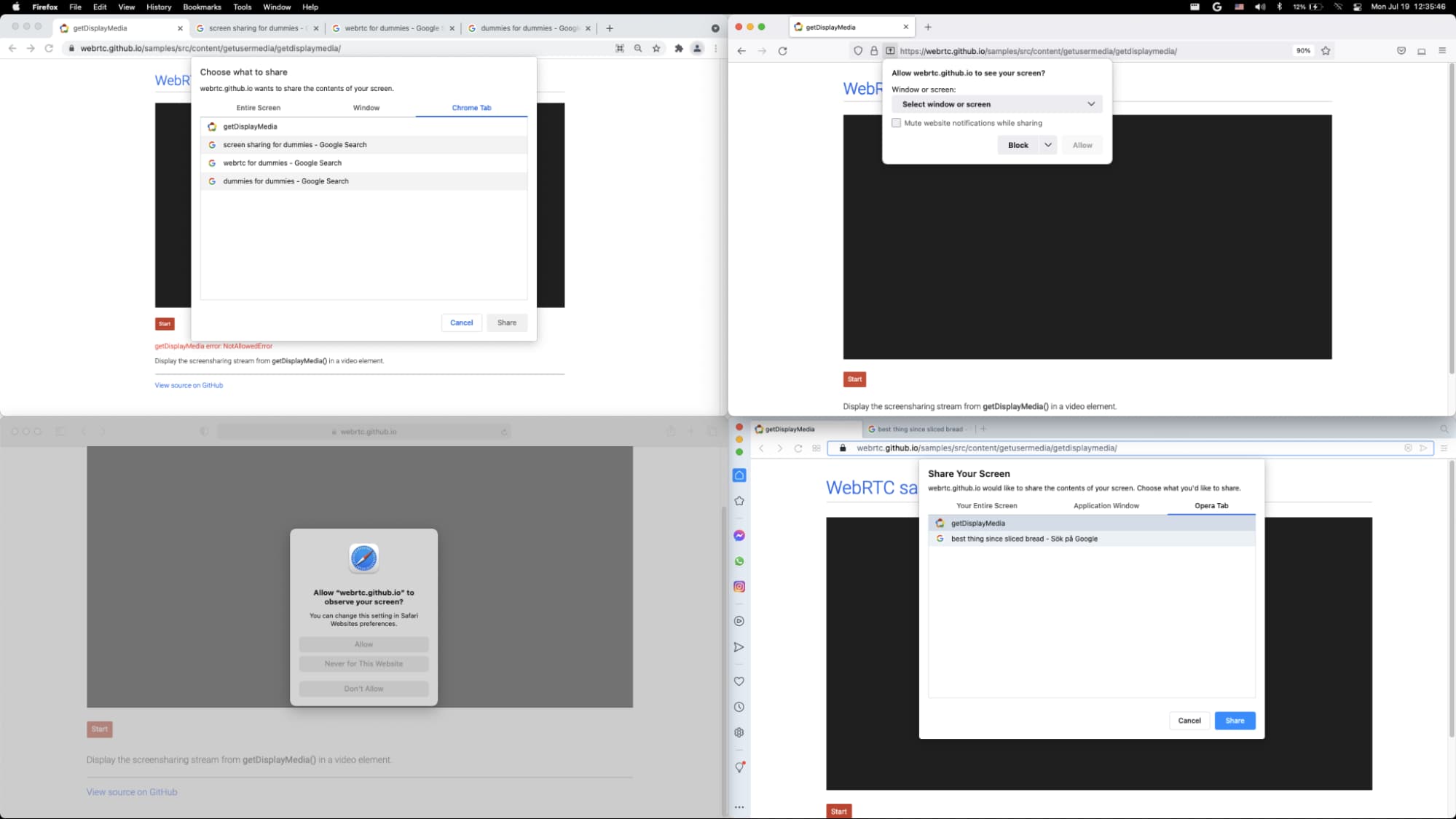

Screen-sharing has become a more ubiquitous part of users’ lives over the past year, for reasons that need not be mentioned, for fear of this video being demonetized. For Web-based products, this is typically achieved via a call to getDisplayMedia. Once getDisplayMedia is invoked, each browser presents the user with its own flavor of media picker.

Currently, the API allows the capture of three different display surface options – screen, window, or tab. The choice lies in the hands of the user. (This choice may be limited by the user’s choice of browser, as Firefox does not yet support tab-capture, and Safari only offers full-screen-capture.)

getDisplayMedia returns a Promise. When the user makes their choice, the Promise is resolved and the application can start using the returned MediaStream, which is guaranteed to have one video track, and possibly also an audio track. But what else can the application readily discover about the captured surface? And why would it care? Let’s tackle both questions.

Identifying the captured display-surface

Previously, the application could only distinguish between screen/window/tab capture.

- This is vacuously true for Safari, as only screen capture is supported.

- Chrome allows querying the captured surface through MediaTrackSettings.displaySurface.

- Firefox exposes the title of captured windows as MediaStreamTrack.label. Screen-capture assigns a special string, such as “Primary Monitor”.
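The browser differences above can be folded into one best-effort helper. This is only a minimal sketch, not part of any spec: `describeCapturedSurface` is a hypothetical name, and it relies only on `MediaStreamTrack.getSettings().displaySurface` and `MediaStreamTrack.label`, either of which may be missing or empty depending on the browser.

```javascript
// Hypothetical helper: best-effort description of what a display-capture
// track is showing, without using Capture Handle.
function describeCapturedSurface(track) {
  const settings =
    typeof track.getSettings === "function" ? track.getSettings() : {};
  return {
    // "monitor", "window" or "browser" (a tab) where supported, else null.
    surface: settings.displaySurface || null,
    // Human-readable label; its content is browser-specific.
    label: track.label || null,
  };
}
```

In a page this would be called as `describeCapturedSurface(stream.getVideoTracks()[0])`. Neither field says which web application is in the captured tab, which is the gap the next section addresses.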

So there is no standard way of truly identifying a captured web application. Was the captured tab a YouTube video? A presentation? If a presentation – which app? How can the capturing and captured applications collaborate if they cannot even identify each other? Should they resort to steganography by embedding a QR code?

Enter Capture Handle. Let’s look at a couple of examples to illustrate how Capture Handle works…

Detecting self-capture

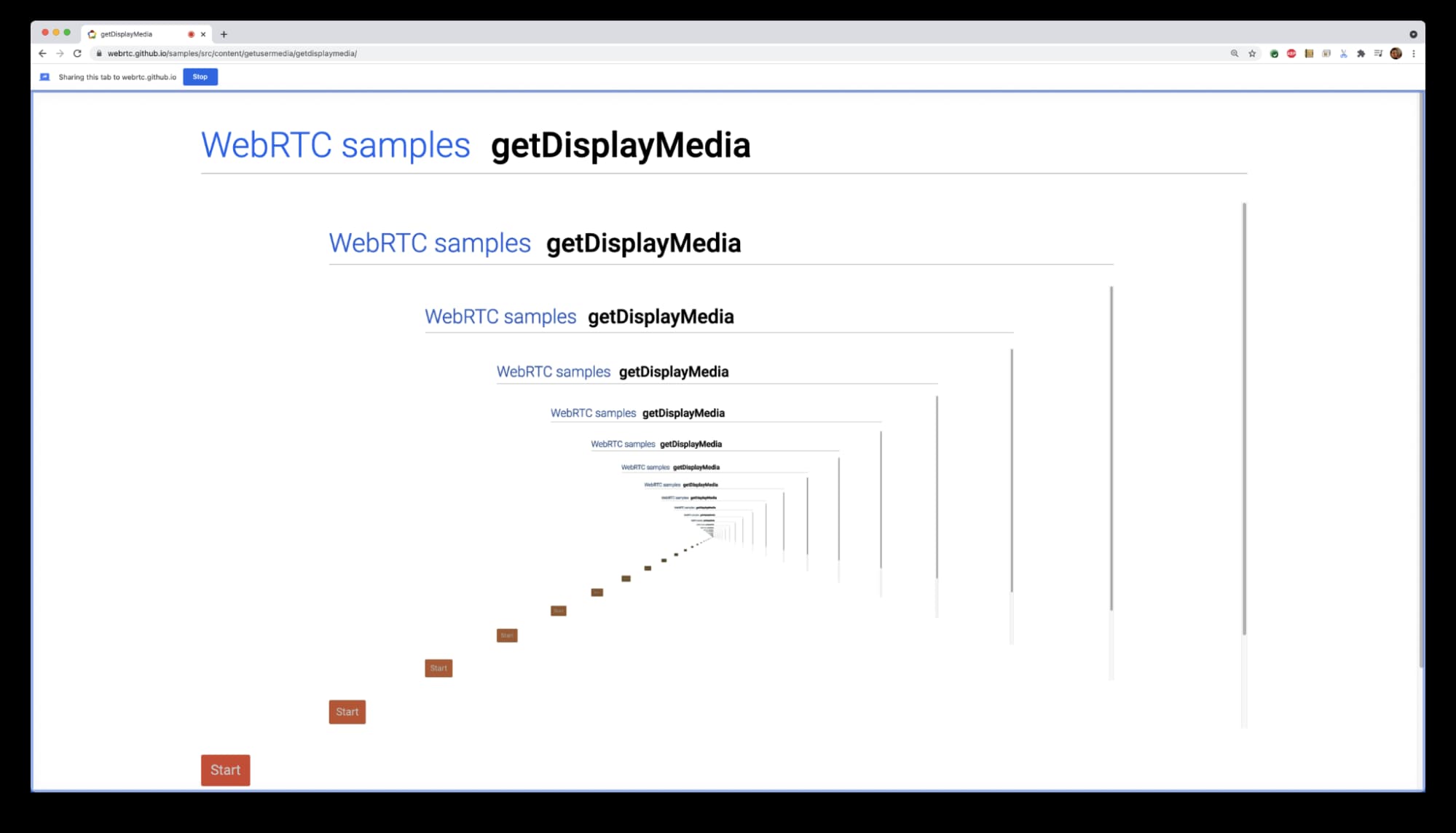

We can start with the minimal example for Capture Handle – a single tab (where two were expected by the app). Consider an application that presents the captured video back to the local user. Self-capture would lead to a hall-of-mirrors effect.

If the web application could detect this, it could avoid replaying the video back to the local user, avoiding this nauseating effect.

```javascript
const TOTALLY_RANDOM_ID = "42"; // Or actually randomize...
const ownId = TOTALLY_RANDOM_ID;

// Set the capture handle of the web application.
navigator.mediaDevices.setCaptureHandleConfig(
  { handle: ownId, permittedOrigins: ["*"] }
);

// ...

const stream = await navigator.mediaDevices.getDisplayMedia();
const [track] = stream.getVideoTracks();

// Check to see if the captured tab has the same handle.
const isSelfCapture =
  track.getCaptureHandle &&
  track.getCaptureHandle() &&
  track.getCaptureHandle().handle == ownId;

// Then mitigate the hall-of-mirrors as you see fit,
// e.g. hide the element using CSS.
```

In this case, it is easy for the web application to set an ID on the current tab. The value returned by getCaptureHandle().handle can then be compared against that ID to check whether the user selected the application's own tab in the screen share picker.

You can see a full demo of this concept here.

Bootstrapping collaboration

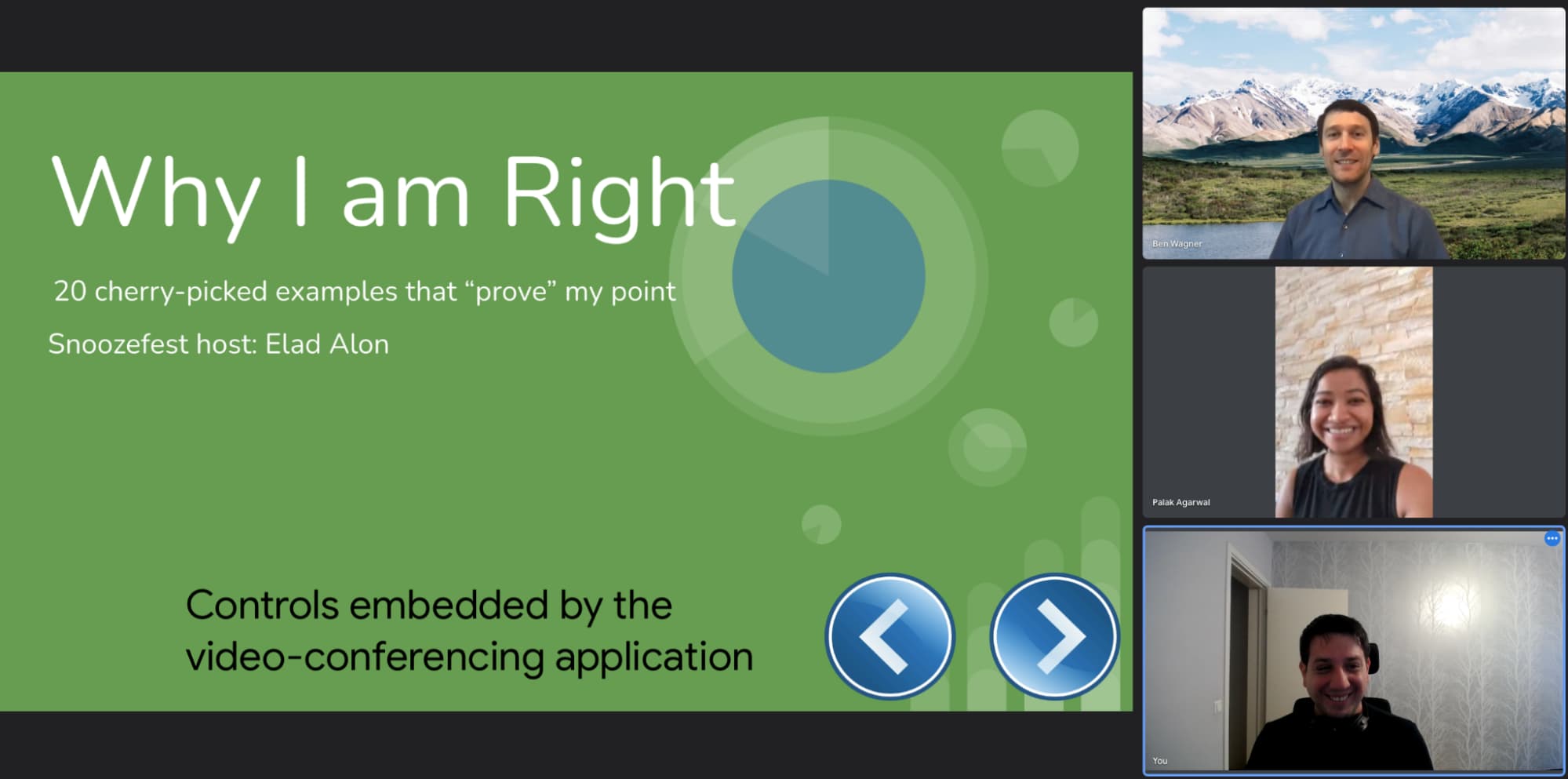

If an application can identify the application it is capturing, it can collaborate with it. Messages can be exchanged between them using any existing method and can carry semantic meaning of any kind. For example, a VC application could remotely control slide-decks.

A demo is available here. Be forewarned that it was produced by a C++ developer, not a web developer, and it shows – I know. The TL;DR is that the captured application sets a handle, and the capturing application extracts the session ID from it. Imagine a slide deck web application called Slides 3000:

```javascript
////////////////////////////////////////
// Captured application (Slides 3000) //
////////////////////////////////////////

function getLoonySessionId() {
  // ...
  // Returns some ID which is meaningful using loonyAPI.
}

function onPageLoaded() {
  // Expose and add info as you like, including the origin.
  // ...
  navigator.mediaDevices.setCaptureHandleConfig({
    exposeOrigin: true,
    handle: JSON.stringify({
      description: "See slides-3000.com for our API.",
      protocol: "loonyAPI",
      version: "1.983",
      sessionId: getLoonySessionId(),
    }),
    permittedOrigins: ["*"],
  });
  // ...
}
```

and a video calling web application called VC-MAX:

```javascript
////////////////////////////////////
// Capturing application (VC-MAX) //
////////////////////////////////////

async function startCapture() {
  // ...
  const stream = await navigator.mediaDevices.getDisplayMedia();
  const [track] = stream.getVideoTracks();
  if (track.getCaptureHandle) { // Feature detection.
    // Subscribe to notifications of the capture-handle changing.
    track.oncapturehandlechange = (event) => {
      onNewCaptureHandle(event.captureHandle());
    };
    // Read the current capture-handle.
    onNewCaptureHandle(track.getCaptureHandle());
  }
  // ...
}

function onNewCaptureHandle(captureHandle) {
  // The handle is null when the captured tab has not set one.
  // An exposed origin is a full origin string, including the scheme.
  if (captureHandle && captureHandle.origin == "https://slides-3000.com") {
    const parsed = JSON.parse(captureHandle.handle);
    onNewSlides3000Session(parsed.protocol, parsed.version, parsed.sessionId);
  }
}

function onNewSlides3000Session(protocol, version, sessionId) {
  if (protocol != "loonyAPI" || version > "2.02") {
    return;
  }
  // Exposes prev/next buttons to the user. When clicked, these send
  // a message to some REST API, where |sessionId| indicates that the
  // message has to be relayed to the Slides 3000 session in question.
  ExposeSlides3000Controls(sessionId);
}
```
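Because the handle is an arbitrary string set by another application, the capturer may want to parse it defensively. The sketch below is one way to do that, under stated assumptions: `parseSlidesHandle` and `isVersionAtMost` are hypothetical helpers, the handle layout is the loonyAPI JSON from the example above, and versions are assumed to be dotted numbers compared component by component. (The string comparison in the example above is lexicographic, so it would treat "10.0" as older than "2.02".)

```javascript
// Hypothetical helper: true if dotted version `a` is <= `b`, comparing
// each dot-separated component as a number ("1.983" -> [1, 983]).
function isVersionAtMost(a, b) {
  const pa = String(a).split(".").map(Number);
  const pb = String(b).split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const x = pa[i] || 0;
    const y = pb[i] || 0;
    if (x !== y) return x < y;
  }
  return true;
}

// Hypothetical helper: returns { version, sessionId } for a usable
// Slides 3000 handle, or null for anything else.
function parseSlidesHandle(captureHandle, expectedOrigin) {
  if (!captureHandle || captureHandle.origin !== expectedOrigin) return null;
  let parsed;
  try {
    parsed = JSON.parse(captureHandle.handle);
  } catch (e) {
    return null; // Not JSON, so not the format we expect.
  }
  if (!parsed || parsed.protocol !== "loonyAPI") return null;
  if (parsed.sessionId == null) return null;
  if (!isVersionAtMost(parsed.version, "2.02")) return null;
  return { version: parsed.version, sessionId: parsed.sessionId };
}
```

A capturer could call `parseSlidesHandle(track.getCaptureHandle(), "https://slides-3000.com")` and only show remote controls when the result is not null.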

Choose your own signaling

Capture Handle provides identification, not communication. How messages are exchanged between the two tabs is out of scope. One could imagine BroadcastChannel being used, or shared cloud infrastructure, i.e. the signaling server (in a wider sense than usually used by WebRTC). All reasonable means of communication share one property – they require an ID of the captured application to be known to the capturer – and this is what Capture Handle provides.

This gives flexibility to the developer in how they want to implement their application. For example, what if the user has multiple slide deck tabs open – should they all be instructed to flip to the next slide? Inconceivable!
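As one illustration of the BroadcastChannel option, the sketch below addresses each command to a single session so that other open decks ignore it. Everything named here is an assumption made for illustration: the channel name `slides-3000-control`, the message shape, and a `deck` object with `next()` and `previous()` methods. Note also that BroadcastChannel only connects pages on the same origin, so this variant fits the case where the capturing and captured applications share an origin; otherwise something like a server relay is needed.

```javascript
const CONTROL_CHANNEL = "slides-3000-control"; // Hypothetical channel name.

// Capturing side: build a command addressed to one session.
function makeCommand(sessionId, action) {
  return { sessionId, action };
}

// Captured side: apply a command only if it targets this tab's session.
// Returns true if the command was handled.
function handleCommand(message, ownSessionId, deck) {
  if (!message || message.sessionId !== ownSessionId) return false;
  if (message.action === "next") deck.next();
  else if (message.action === "previous") deck.previous();
  else return false;
  return true;
}

// Browser wiring, captured side. Wrapped in a function so nothing runs
// on load; call channel.close() on the result when the deck is closed.
function listenForCommands(ownSessionId, deck) {
  const channel = new BroadcastChannel(CONTROL_CHANNEL);
  channel.onmessage = (event) => handleCommand(event.data, ownSessionId, deck);
  return channel;
}

// Browser wiring, capturing side. Returns a function such as
// send("next") bound to the session ID read from the capture handle.
function createCommandSender(sessionId) {
  const channel = new BroadcastChannel(CONTROL_CHANNEL);
  return (action) => channel.postMessage(makeCommand(sessionId, action));
}
```

Because every deck tab compares the incoming `sessionId` with its own, only the captured deck advances, even if several decks are listening on the same channel.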

Using Capture Handle to optimize capture parameters

Consider two users – one user is sharing a 1080p trailer with their friends, suggesting they should co-watch the movie. The other user is presenting a mostly static document to their coworkers. Both are using the same video calling application to share the other tab. Should the same frame rate and resolution be used in both capture scenarios?

Future mechanisms for robustly hinting at the content type might be advisable, but as a stop-gap measure, Capture Handle allows one to check whether the tab’s origin is a known video-serving site, known productivity suite, etc. Applications can then set the frame-rate and resolution properties as well as the contentHint.
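A rough sketch of that stop-gap follows. The origin lists, the frame-rate numbers, and the helper names `pickCaptureProfile` and `applyCaptureProfile` are all illustrative assumptions; the only platform features used are `MediaStreamTrack.contentHint` and `MediaStreamTrack.applyConstraints()`. Keep in mind that the origin is only available when the captured application opts in with `exposeOrigin: true`.

```javascript
// Hypothetical origin lists; a real application would maintain its own.
const VIDEO_ORIGINS = new Set(["https://www.youtube.com"]);
const DOCUMENT_ORIGINS = new Set(["https://docs.google.com"]);

// Choose a content hint and constraints from the captured tab's origin.
function pickCaptureProfile(captureHandle) {
  const origin = captureHandle && captureHandle.origin;
  if (VIDEO_ORIGINS.has(origin)) {
    // Favor smooth motion for video content.
    return { contentHint: "motion", constraints: { frameRate: 30 } };
  }
  if (DOCUMENT_ORIGINS.has(origin)) {
    // Favor sharpness over frame rate for mostly static documents.
    return { contentHint: "detail", constraints: { frameRate: 5 } };
  }
  // Unknown or unexposed origin: keep the browser defaults.
  return { contentHint: "", constraints: {} };
}

// Browser wiring: apply the chosen profile to a captured video track.
async function applyCaptureProfile(track) {
  const handle = track.getCaptureHandle ? track.getCaptureHandle() : null;
  const profile = pickCaptureProfile(handle);
  track.contentHint = profile.contentHint;
  await track.applyConstraints(profile.constraints);
  return profile;
}
```

Calling `applyCaptureProfile(track)` again from an `oncapturehandlechange` handler would keep the settings in step when the captured tab navigates elsewhere.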

Can I use Capture Handle now?

Capture Handle is in origin trial in Chrome starting with M92.

There are two minor changes in the API between M92 and M93:

| Change | M92 | M93+ |
|---|---|---|
| API | track.getSettings().captureHandle | track.getCaptureHandle() |
| Events | event.captureHandle | event.captureHandle() |
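An application that needs to run on both versions during the origin trial could hide the difference behind two small helpers. This is a sketch based only on the table above; `readCaptureHandle` and `readCaptureHandleFromEvent` are hypothetical names.

```javascript
// Read the capture handle from a track on either M92 or M93+.
function readCaptureHandle(track) {
  if (typeof track.getCaptureHandle === "function") {
    return track.getCaptureHandle() || null; // M93+
  }
  const settings =
    typeof track.getSettings === "function" ? track.getSettings() : {};
  return settings.captureHandle || null; // M92
}

// Read the capture handle from a capturehandlechange event.
function readCaptureHandleFromEvent(event) {
  const value =
    typeof event.captureHandle === "function"
      ? event.captureHandle() // M93+: a method
      : event.captureHandle; // M92: a property
  return value || null;
}
```

Both helpers return `null` when no handle is available, so callers only need one check.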

No additional changes are currently planned. Should they occur, they will be visible in the git history. However, an extension is planned that would expand this API to also work with window-level capture.

Chrome is gathering public web developer support to convince other browser vendors of the importance of this feature. If you find this feature useful, please leave origin trial feedback, comment on this WICG thread, or comment below.

{“author”: ”Elad Alon”}

Hi,

Do you have any recommendations on how to draw a frame around the shared screen?

It can be done by detecting the device/screen ID and then marking it in a transparent window, but that seems like too much work.