Conference calling is a multi-billion dollar industry that is mostly powered by expensive, high-powered conferencing servers. Now you can replicate much of this functionality for free with a modern browser using the combination of WebRTC and WebAudio.

As with video, multi-party audio can use a few architectures:

- Full mesh – each client sends their audio to every other client; the individual streams are then combined locally before they come out of your speaker

- Mixed with a conferencing server acting as a Multipoint Control Unit (MCU) – the MCU mixes the incoming streams and sends a single mixed stream to each client

- Routed with a conferencing server in a Selective Forwarding Unit (SFU) mode – each client sends a single stream to the server where it is replicated and sent to the others

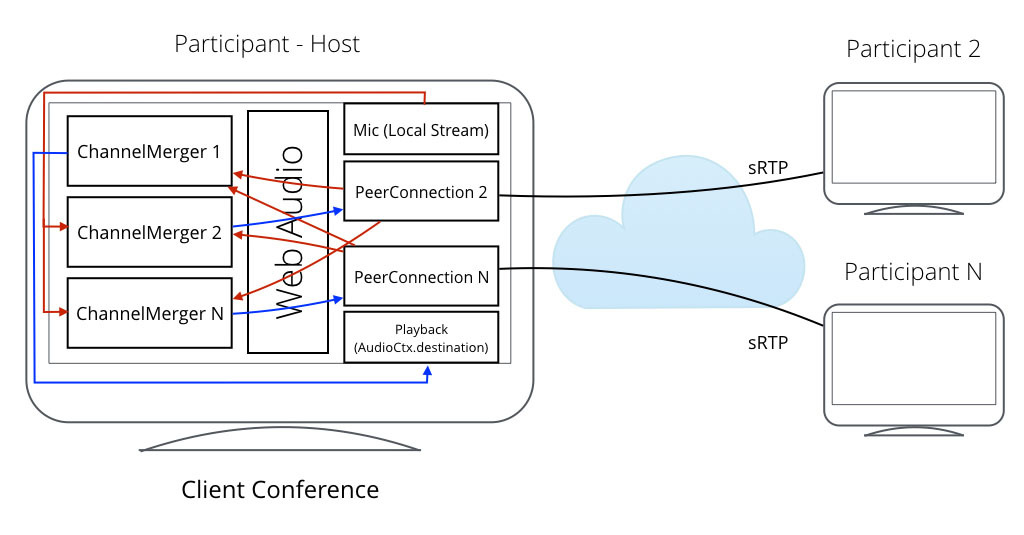

The approach described here is a fourth type: client-side mixing, where one of the clients acts as the mixing server. This provides the server-less benefits of mesh conferencing without its excessive bandwidth usage and stream-management challenges.
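To make the bandwidth trade-off concrete, here is a small sketch (not part of the original post) that counts how many audio streams one client sends and receives in an `n`-person conference under each architecture. The function name `streamsPerClient` and the architecture labels are hypothetical, and it counts streams only, not bitrate.

```javascript
// Hypothetical helper: audio streams sent/received by ONE client in an
// n-participant conference, for each architecture described above.
function streamsPerClient(architecture, n, isHost) {
  if (!Number.isInteger(n) || n < 2) {
    throw new RangeError('a conference needs at least 2 participants');
  }
  switch (architecture) {
    case 'mesh':
      // send my audio to every other client, receive every other client's audio
      return { send: n - 1, receive: n - 1 };
    case 'mcu':
      // send one stream to the server, receive one server-mixed stream
      return { send: 1, receive: 1 };
    case 'sfu':
      // send one stream to the server, receive a forwarded copy of each other stream
      return { send: 1, receive: n - 1 };
    case 'client-mixed':
      // the host acts like an MCU: it receives every guest's audio and sends each
      // guest its own mix; every guest behaves like an MCU client
      return isHost ? { send: n - 1, receive: n - 1 } : { send: 1, receive: 1 };
    default:
      throw new Error('unknown architecture: ' + architecture);
  }
}

// Example: a 6-participant call
['mesh', 'mcu', 'sfu'].forEach(function (arch) {
  console.log(arch, streamsPerClient(arch, 6));
});
console.log('client-mixed host', streamsPerClient('client-mixed', 6, true));
console.log('client-mixed guest', streamsPerClient('client-mixed', 6, false));
```

In the client-mixed case only the host pays a mesh-like cost; every other participant sends and receives a single stream, just as with an MCU.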

Previous webrtcHacks contributor and VoxImplant CEO Alexey Aylarov put together a demo showing this technique. See the description of his work and sample code below.

{"editor": "Chad Hart"}

For regular readers of webrtcHacks, I guess there is no need for a special introduction to WebRTC and WebAudio. WebRTC helps us build web apps with real-time communication capabilities, while WebAudio is used to process audio in a browser using a JavaScript API. Until recently it wasn’t possible to combine the two technologies because of browser issues and limitations, but it looks like the situation has changed. Previously, simple combinations of these APIs let developers do things like visualize microphone activity by piping getUserMedia audio into WebAudio. Recent changes mean we can start building much more complex apps that once required specialized server-side software. The one I’m going to describe here is called “Poor man’s conferencing”.

Traditionally, conferencing has been performed by a centralized server (see here for a discussion of some of these architectures). Actually, I suspect we have been using locally mixed conferences in Skype for a long time: since Skype started as a pure P2P service, it had to mix conference audio on the client side. This approach has a number of advantages, but the main one is that it is free.

Audio mixing is done on the host machine – usually the first caller – and it’s the most complex part of the service.

Recent versions of Chrome and Firefox make this scenario possible by leveraging Web Audio and WebRTC.

RTCPeerConnection

We use the RTCPeerConnection class to make calls and transmit MediaStreams. Despite WebRTC’s shift to working with the newer MediaStreamTrack objects, Web Audio works (and will continue to work) with MediaStream objects. So let’s start with something simple:

```javascript
// We got a MediaStream from the mic; this code plays it back via Web Audio
function gotStream(stream) {
  // Web Audio: create a context (older browsers used the webkit prefix)
  window.AudioContext = window.AudioContext || window.webkitAudioContext;
  var audioContext = new AudioContext();
  // Web Audio works with audio nodes that can be connected to each other
  var mediaStreamSource = audioContext.createMediaStreamSource(stream);
  // Play our stream back through the speakers
  mediaStreamSource.connect(audioContext.destination);
}

// navigator.mediaDevices.getUserMedia() replaces the deprecated, callback-based
// navigator.getUserMedia / navigator.webkitGetUserMedia
navigator.mediaDevices.getUserMedia({ audio: true })
  .then(gotStream)
  .catch(function (err) {
    console.error('getUserMedia failed:', err);
  });
```
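The post works with MediaStream objects throughout. If your PeerConnection code uses the newer track-based API (`ontrack` and `addTrack`) instead, a minimal sketch of bridging the two could look like this. It assumes that wrapping a remote track with `new MediaStream([track])` is acceptable input for `createMediaStreamSource()`; the helper names `remoteTrackToSource` and `sendMixTrack`, and `mixDestination` in the usage comment, are hypothetical.

```javascript
// Hypothetical helpers bridging the track-based RTCPeerConnection API
// (ontrack / addTrack) and Web Audio, which consumes MediaStream objects.

// Wrap an incoming remote audio track in a MediaStream so that
// AudioContext.createMediaStreamSource() can use it.
function remoteTrackToSource(audioContext, track) {
  var stream = new MediaStream([track]);
  return audioContext.createMediaStreamSource(stream);
}

// Send the output of a MediaStreamAudioDestinationNode over a PeerConnection
// with addTrack(); returns the RTCRtpSender for each audio track.
function sendMixTrack(pc, destinationNode) {
  var mixStream = destinationNode.stream;
  return mixStream.getAudioTracks().map(function (track) {
    return pc.addTrack(track, mixStream);
  });
}

// Usage in the browser (audioContext, pc and mixDestination assumed to exist):
// pc.ontrack = function (event) {
//   if (event.track.kind === 'audio') {
//     remoteTrackToSource(audioContext, event.track).connect(mixDestination);
//   }
// };
```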

To combine different streams into a single combined stream, we will need to use ChannelMergerNode to implement our mixer. If the conference has N participants, then we will need N instances of ChannelMergerNode, since each participant should receive the mix of the others (not including their own audio).

This looks like:

```javascript
window.AudioContext = window.AudioContext || window.webkitAudioContext;
var audioContext = new AudioContext();

// local_stream, participant1_stream ... participantN_stream and pc come from the
// surrounding app: the host's mic, the guests' remote streams, and the PC to participant1
var mediaStreamSource = audioContext.createMediaStreamSource(local_stream),
    participant1 = audioContext.createMediaStreamSource(participant1_stream), // not in its own mix
    participantN = audioContext.createMediaStreamSource(participantN_stream);

// Mixer: two inputs give a stereo (2-channel) output
var merger = audioContext.createChannelMerger(2);

// Send the mix to a MediaStream, which then needs to be attached to the PC
var destination_participant1 = audioContext.createMediaStreamDestination();

mediaStreamSource.connect(merger, 0, 0); // send the local stream to the mixer (left)
participantN.connect(merger, 0, 0);      // add all other participants to the mix (left)
mediaStreamSource.connect(merger, 0, 1); // FF supports stereo (right)
participantN.connect(merger, 0, 1);      // FF supports stereo (right)

// Send the mix to destination_participant1
merger.connect(destination_participant1);

// Add the mixed track to the PC for participant1 (the original code used the deprecated
// pc.addStream(); if an earlier mix was sent, remove or replace it first)
destination_participant1.stream.getAudioTracks().forEach(function (track) {
  pc.addTrack(track, destination_participant1.stream);
});
```

It doesn’t look too complicated and works well for Chrome->Chrome (host), Firefox->Chrome (host), and Firefox->Firefox (host), but when the mix is sent from Chrome to Firefox something goes wrong and you can’t hear the whole mix. I haven’t been able to figure out the issue so far; if you can, your comments are welcome 🙂

EDIT (24 May 2016): It appears there is a much easier and better way to mix without using the ChannelMerger node. We can just connect the participants’ streams to the MediaStreamDestination node, and Web Audio will mix them anyway, since it sums all inputs connected to the same node. Thank you Andreas Pehrson for the suggestion!

Here is the updated code:

```javascript
window.AudioContext = window.AudioContext || window.webkitAudioContext;
var audioContext = new AudioContext();

// local_stream, participant1_stream ... participantN_stream and pc come from the surrounding app
var mediaStreamSource = audioContext.createMediaStreamSource(local_stream),
    participant1 = audioContext.createMediaStreamSource(participant1_stream), // not in its own mix
    participantN = audioContext.createMediaStreamSource(participantN_stream);

// Send the mix to a MediaStream, which then needs to be attached to the PC
var destination_participant1 = audioContext.createMediaStreamDestination();

// Web Audio sums every input connected to the same node, so no merger is needed
mediaStreamSource.connect(destination_participant1); // send the local stream to the mix
participantN.connect(destination_participant1);      // add all other participants to the mix

// Add the mixed track to the PC for participant1 (the original code used the deprecated
// pc.addStream(); if an earlier mix was sent, remove or replace it first)
destination_participant1.stream.getAudioTracks().forEach(function (track) {
  pc.addTrack(track, destination_participant1.stream);
});
```
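The code above only builds the mix for participant1. Below is a sketch of how a host might generalize it to every guest and keep the mixes current as people join or leave. It is an illustration under assumptions, not the demo’s code: the `MixMinusHost` class and its methods are hypothetical. It relies on the fact that a MediaStreamAudioDestinationNode keeps the same output track while nodes are connected to or disconnected from it, so existing guests should start hearing a newcomer without a new offer/answer exchange.

```javascript
// Hypothetical host-side "mix-minus" mixer: every guest gets a mix of the
// host's mic plus all OTHER guests, never their own audio.
class MixMinusHost {
  constructor(audioContext, localStream) {
    this.ctx = audioContext;
    this.localSource = audioContext.createMediaStreamSource(localStream);
    this.guests = new Map(); // guest id -> { source, destination }
  }

  // Call when a guest's remote stream arrives. Returns the MediaStream the host
  // should send back to that guest (e.g. via pc.addTrack).
  addGuest(id, remoteStream) {
    const source = this.ctx.createMediaStreamSource(remoteStream);
    const destination = this.ctx.createMediaStreamDestination();

    source.connect(this.ctx.destination);  // the host hears every guest locally
    this.localSource.connect(destination); // the newcomer hears the host
    for (const other of this.guests.values()) {
      other.source.connect(destination);   // the newcomer hears existing guests
      source.connect(other.destination);   // existing guests hear the newcomer
    }
    this.guests.set(id, { source, destination });
    return destination.stream;
  }

  // Call when a guest leaves; closing their PeerConnection is left to the caller.
  removeGuest(id) {
    const guest = this.guests.get(id);
    if (!guest) return;
    this.guests.delete(id);
    guest.source.disconnect(); // stop feeding the speakers and other guests' mixes
    this.localSource.disconnect(guest.destination);
    for (const other of this.guests.values()) {
      other.source.disconnect(guest.destination);
    }
  }
}
```

Because the per-guest destination nodes are created once and only their inputs change, this pattern keeps signaling limited to one PeerConnection per guest.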

Demos

You can try the demo here (+github). It uses our VoxImplant platform for P2P calls and signaling. There is no real magic in the signaling: just set up a PeerConnection between each participant and the host. This is much simpler than the mesh conferencing scenario, where every participant needs a PeerConnection to every other participant.
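A quick way to see why the host-centered star is simpler to signal is to count the PeerConnections each design needs in total. This is a hypothetical helper for illustration, not part of the VoxImplant demo.

```javascript
// Hypothetical helper: total PeerConnections needed for an n-person call.
function peerConnectionCount(n) {
  return {
    // host-mixed star: the host holds one connection to each guest
    star: n - 1,
    // full mesh: one connection per pair of participants
    mesh: (n * (n - 1)) / 2,
  };
}

console.log(peerConnectionCount(6)); // { star: 5, mesh: 15 }
```

The star grows linearly with the number of participants, while the mesh grows quadratically.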

Considerations

This approach does have some limitations. Don’t expect to hold very large conferences. The browser’s Web Audio engine is not optimized to mix dozens to thousands of calls like some server-side conferencing engines. However, I have used it with up to 6 participants without issue on my laptop. I would not expect it to perform as well on mobile. Also, any extra load on the host participant, like starting other applications, or network limitations, such as streaming a movie, will impact the entire conference. These variables are usually much easier to control in a server-side environment.

Still, if you don’t want to pay anything to run a conferencing server, you can’t beat client-side JavaScript.

{"author": "Alexey Aylarov"}

Nice!

Is it possible to mix multiple incoming audio streams using this technique in an SFU model and play them via a single AUDIO control?

This way we would be able to control (e.g. set volume) all the audio streams just by one audio control.

Yes, it should work w/o problem – just attach media streams from PCs to the destination media stream and attach it to audioContext
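For a single volume control, one option (a sketch, not from the post) is to route every remote stream through one shared GainNode before the speakers, so a single gain value scales the whole mix. The names `createMasterVolume` and `setVolume` are hypothetical; the streams are assumed to come from your PeerConnections.

```javascript
// Hypothetical sketch: play several remote streams through one shared volume knob.
function createMasterVolume(audioContext, remoteStreams) {
  const masterGain = audioContext.createGain();
  masterGain.connect(audioContext.destination);

  // Web Audio sums everything connected to masterGain into one mix
  const sources = remoteStreams.map(function (stream) {
    const source = audioContext.createMediaStreamSource(stream);
    source.connect(masterGain);
    return source;
  });

  return {
    sources: sources,
    gainNode: masterGain,
    // volume: 0 = silent, 1 = unchanged, values above 1 amplify
    setVolume: function (volume) {
      masterGain.gain.value = volume;
    },
  };
}
```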

Hi there, great post.

My question is: can I test the demo with just one public IP?

I tried to test the demo from two operating systems, my current Windows 10 system and a virtual machine, but I get this notification: “Online Users: Nobody is online at the moment.”

Thanks for your support.

Regarding the sample code provided, is that supposed to be in the onstream function of the host (the host’s browser acting as the server)? Also, my current WebRTC setup uses the updated ‘tracks’ approach; do I need to use the deprecated ‘streams’ approach to get this to work?

Is the idea that as soon as the host receives a stream from a newly connected peer, it is added to the mixer, which all peers already in the mixer will automatically receive? Or do I need to recreate everyone’s stream (including audio from all mics except the receiving peer’s) and send it back out, causing another negotiation event?

Sorry, I am new to both the WebRTC and Web Audio APIs.

Is it possible to do a two-way mp3 audio streaming using this approach? I assume that mp3 to opus conversion should be made somehow without a noticeable delay…

Converting opus to mp3 in the browser should be possible with something like ffmpeg.wasm, but I suspect the latency would be noticeable.