tl;dr: WebMediaPlayers are kinda expensive. Make sure you understand how you use them. Set srcObject to null before removing elements from the DOM…

So we had an intervention in Chrome 92. If you tried to have more than 75 audio and video elements playing, some of them threw this error instead of playing:

[Intervention] Blocked attempt to create a WebMediaPlayer as there are too many WebMediaPlayers already in existence. See crbug.com/1144736#c27

75 sounds like a lot, but there are many scenarios where you would have had issues even without 75+ media elements playing. For example, if you didn’t destroy the elements in a particular way, Chrome would not release the resource. In addition, this could happen with a mere 38 participants in a call if you use separate audio and video elements for each stream (which is relatively common), since that adds up to 76 elements. Some applications also stop the camera to mute it. In that case, a single video element can get into a state where audio is not played, since a video frame is required.
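The 38-participant figure follows from simple arithmetic, which can be sketched as below. This is only an illustration: `exceedsPlayerLimit` is a hypothetical helper, not a Chrome or WebRTC API, and it assumes every attached audio or video element holds exactly one WebMediaPlayer. The default limit of 75 is the Chrome 92 number quoted above.

```javascript
// Hypothetical helper for illustration; not part of any browser API.
// Assumption: one WebMediaPlayer per attached <audio>/<video> element.
function exceedsPlayerLimit(participants, elementsPerParticipant, limit = 75) {
  const playersNeeded = participants * elementsPerParticipant;
  // The intervention applied when there were "more than" `limit` players.
  return { playersNeeded, overLimit: playersNeeded > limit };
}

// Separate audio and video elements per participant.
console.log(exceedsPlayerLimit(38, 2)); // 76 players needed, over the limit
// One combined element per participant.
console.log(exceedsPlayerLimit(38, 1)); // 38 players needed, within the limit
```

Leaked players from participants who already left count against the same budget, so a long-running call can hit the limit with far fewer people.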

Tsahi Levent-Levi and I also discussed this in this month’s “WebRTC fiddle of the month”.

The change was reverted by raising the limit high enough that it is effectively disabled. However, the issue had already ended up in Chrome stable, and the revert only rolled out two weeks later, on August 3rd, as part of the stable refresh.

Leaking WebMediaPlayers

The intention behind the change was to reduce the number of out-of-memory issues from WebMediaPlayers. These were not detectable by JavaScript before. The user’s browser just crashed. Users would obviously not be happy about this and nobody had much visibility into the problem.

The pandemic led to much larger calls. Now the use of many media elements in video calls and the amount of memory required by WebMediaPlayers are more of an issue.

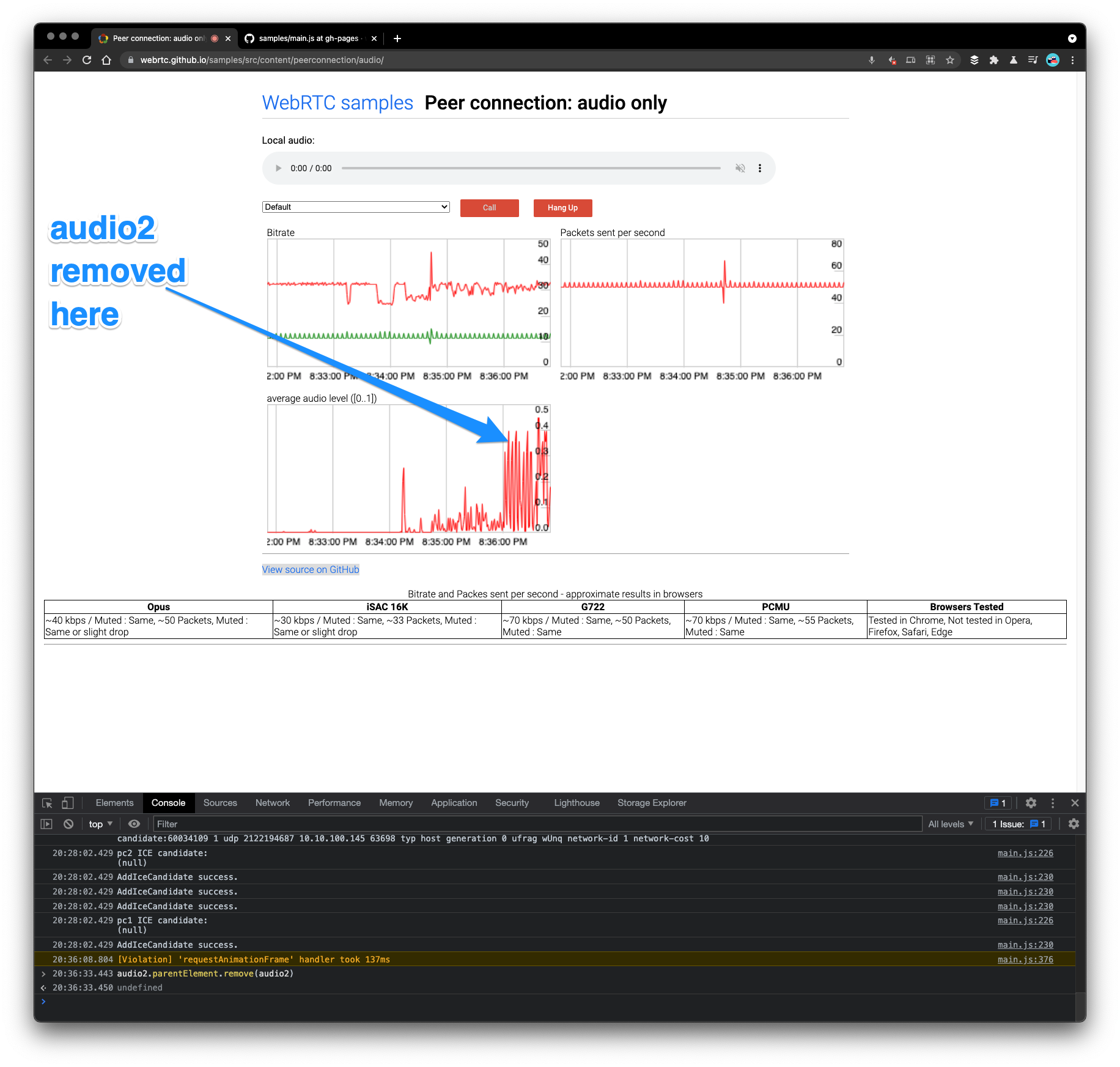

In fact, it is relatively easy to leak WebMediaPlayers. Let’s say a participant leaves your group call. You remove that participant’s element from the DOM. The track ends because the RTCPeerConnection gets closed soon after. You think everything is fine.

Let’s replicate what goes wrong here on the WebRTC audio sample page. This sample shows two audio-only peer connections sending audio to each other, with a getStats display on the sender. Click the “call” button and make some noise or play some music in the background so you can see the audio level. Now open the JavaScript console and remove audio2 from the DOM by calling audio2.remove().

Audio playout stops. But wait… audio level is still shown in the graph at the bottom. Audio level is only computed if there is a player. So this is not fully gone.

We have a memleak… (yes, we keep a reference to the object, so this might behave slightly differently). Note that closing the RTCPeerConnection ends the track but does not change the state of the player (which might be a useful thing for the browser to optimize).

As shown in the video below, setting audio2.srcObject = null destroys the player:

As a rule of thumb, set srcObject to null before removing the object from the DOM.
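A minimal sketch of that rule of thumb follows. `removeMediaElement` is a hypothetical helper name, not something the sample page or any library provides; it only relies on the standard `srcObject` property and `Element.remove()`.

```javascript
// Hypothetical helper; the name is illustrative only.
function removeMediaElement(element) {
  // Detach the MediaStream first. As described above, this is the step
  // that destroys the WebMediaPlayer behind the element.
  element.srcObject = null;
  // Only then take the element out of the DOM.
  element.remove();
}

// Usage on the audio sample page (run in the JavaScript console):
// removeMediaElement(audio2);
```

Wrapping both steps in one function makes it harder to forget the detach when a participant leaves.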

Inspection with chrome://media-internals

In chrome://media-internals (which is deprecated but still useful) the issue is easy to see:

- Removing the element from the DOM (at 00:00:06) puts the WebMediaPlayer into a kPause state.
- Detaching the srcObject destroys the player (at 00:00:15).

Note: you can find the render_id in chrome://webrtc-internals too.

Best practices for muting

For video-muted participants there is a relatively straightforward optimization:

- Whenever you hide a participant using CSS after a signaling message, detach the srcObject.
- Whenever you show a participant, attach the srcObject.

This is not very disruptive in terms of UX but probably saves quite some memory if you have a large number of video-muted participants. Ideally, the browser would optimize this itself, but doing so would combine unrelated properties like visibility and playout, so it might be hard to do.

Note that webkitDecodedFrameCount also increments on hidden elements.
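The hide/show optimization for video-muted participants could look roughly like the sketch below. `setParticipantVideoVisible` and its parameters are hypothetical names. The sketch assumes the participant's audio plays through a separate audio element (so detaching the video does not silence them) and that the video element has the `autoplay` attribute so playback resumes after re-attaching.

```javascript
// Hypothetical helper; names and parameters are illustrative only.
// Assumptions: audio uses a separate element, and `videoElement` has `autoplay`.
function setParticipantVideoVisible(videoElement, stream, visible) {
  if (visible) {
    // Re-attach only when needed so an already playing stream is not restarted.
    if (videoElement.srcObject !== stream) {
      videoElement.srcObject = stream;
    }
    videoElement.style.display = '';
  } else {
    // Hide with CSS, then detach so the WebMediaPlayer can be destroyed.
    videoElement.style.display = 'none';
    videoElement.srcObject = null;
  }
}

// Usage, e.g. from a signaling handler:
// setParticipantVideoVisible(participantVideo, participantStream, false);
```

The application still has to keep its own reference to the MediaStream while the element is hidden, since the element no longer holds one.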

Audio is much more difficult because of the UX involved. If you wait for the signaling message that someone unmuted to arrive before reattaching the srcObject, you will end up with clipping issues. One could use insertable streams, but they do not provide an audio level yet.

Test in Chrome Beta and Chrome Canary

This issue should have been caught in Chrome 92 Beta or Canary. It was not, and not for the first time.

As WebRTC application developers, it is your responsibility to test your application.

If you have an application that was broken for two weeks, that will hopefully show you the cost of not testing. This particular issue is hard to catch in automated tests due to the number of streams required. Using the Beta or Canary version of Chrome on a day-to-day basis in most calling scenarios means you can catch this early. Running periodic large-scale tests with a new beta two weeks before it is promoted to stable is probably a good idea too. You can find the schedule in the Chromium dashboard.

{“author”: “Philipp Hancke“}

Thanks, Philipp.

This is a great explainer.

As a result, do we need to use an object pool pattern for reusing audio and video elements? (Or is it not worth it?)

| reusing ‘audio’ and ‘video’ elements

From what I heard the “heavy” object here is the WebMediaPlayer, not the HTMLMediaElement so I don’t think having a pool for the latter is going to give you that much.