We have covered end-to-end encryption (E2EE) before: first back in 2020, when Zoom’s claims to do E2EE were demystified (not just by us; they later got fined $85m for this), followed by the quite exciting beta implementation of E2EE in Jitsi using Chromium’s Insertable Streams API. A bit later we had Matrix explain how their approach to E2EE works. Last, but not least, we saw Apple ship FaceTime to the web and looked at what it does under the hood.

All of this happened in 2020 and 2021. It is now 2024, so you might wonder what has happened since then.

The other reason for an update now is that Mozilla’s WebRTC team recently published a blog post on the topic of E2EE. I have some disagreements with that which boil down to:

- E2EE in all browsers in mid-2023 was too little too late; we wanted it in 2020

- The standardization completely ignored that web developers were totally ok with the 2020 state of things

- The “main thread” argument seems reasonable but is more nuanced than presented

- The format of the encoded data is not “random”, but well-defined (after I gave it a stern look)

- Blog posts and polyfills are not how you solve problems in WebRTC anymore

So here’s what I am going to do here:

- Review the adoption of E2EE in WebRTC implementations since its introduction

- Examine some interesting new usage

- Look at browser support and explore the standardization process that took place

- Explain some of the more recent features added to the specification like the mimeType attribute

- Discuss the need for (or lack of) interoperability and adapter shimming

Note that we are not talking about the built-in encryption you get automatically when you do a peer-to-peer call. E2EE in this context means maintaining encryption when middleboxes like Selective Forwarding Units (SFUs) are used, as is commonly the case in multi-party video conferencing services.

E2EE adoption and how things look at the beginning of 2024

We know that Google added the initial API to Chromium in early 2020 to bring its Duo application which featured E2EE to the web. We revisited the topic in mid-2022 but since then Duo has been taken over by Google Meet. Even the duo.google.com URL now redirects there. This does not bode well for adoption…

E2EE usage is low

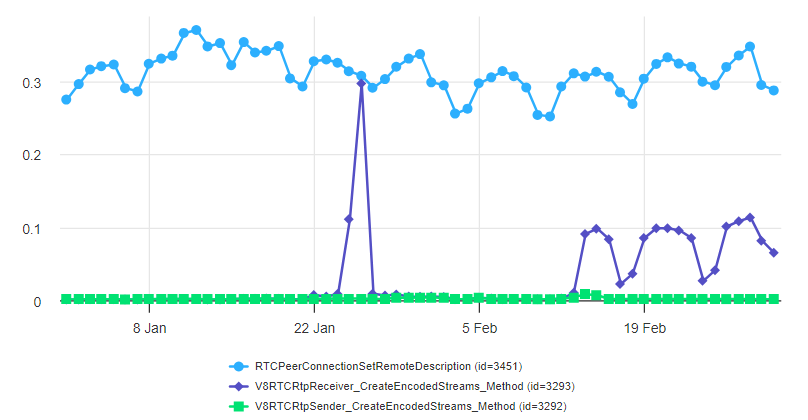

And indeed, the public Chromestatus data somewhat confirms low adoption:

We use the number of setRemoteDescription API calls as a baseline for “all connections” (the blue line) and compare it to the number of times the insertable streams APIs are used. Here we measure both the sender (the green line) and the receiver (the purple line). The latter happens in less than 1% of the total calls. This is not much.

Maybe E2EE on the web was not quite that important after all. Also note that this usage includes FaceTime on the Web which uses E2EE by default the last we checked. I have not heard much about that either…

But wait, what is that tiny little speck in the lower right corner? Let’s take a closer look and change the way we look at the data from windowing over 28 days (or four weeks) to per-day:

We see two things here:

- an odd spike on January 26th, 2024. The usage seems to go to almost 100% of our setRemoteDescription baseline. Looks like an accident/bug in the data collected.

- a fairly large increase in the usage of the receive-side API starting on February 14th. The send-side API usage increased a bit on that day but far less. The size is a third of our setRemoteDescription baseline.

The size of 33% means that something large is responsible. Google Meet is the usual suspect here, as we have seen before.

Google Meet doing a new experiment with the Insertable Streams API?

It was easy to inject some JS code and confirm this usage using a modified version of the good old WebRTC Externals extension. So far it looks like this is only feature detection on a video receiver.

It is hard to say what Google is planning to achieve with this. The API usage is not symmetric for sender and receiver, which rules out E2EE as a use-case. All of this happens to correlate with some ongoing work on how the API is enabled. Until now, it required an upfront opt-in for all senders and receivers (which is great for E2EE because it prevents sending unencrypted traffic by accident). The new work instead allows a specific sender or receiver to call the createEncodedStreams method.

This work is being tracked in this Chromium issue and was successfully launched in Chrome stable a few days ago (congratulations!)

End-to-end-encrypt WebRTC in all browsers?

If you have not already, read Mozilla’s blog post. Did you notice that in 2024 all browsers allow you to implement actual E2EE? Well, some updates at least! But let us take a look at when things shipped according to MDN:

- Chrome and by extension Edge and other Chromium-based browsers do not support the API (they shipped a different one in mid-2020)

- Safari shipped in 15.4 in March 2022

- Firefox shipped in late August 2023

Now we are in a bad situation where we have two somewhat incompatible APIs and it took way too long to get here in the first place.
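In practice, an application that wants to work across browsers has to probe for both APIs. The following is only a minimal sketch of such a probe: `detectEncodedTransformApi` and its `scope` parameter are hypothetical names made up for this illustration, while `RTCRtpScriptTransform` is the constructor from the specification and `createEncodedStreams` is the method from Chromium’s original API.

```javascript
// Sketch: report which encoded-transform API a browser-like global exposes.
// `scope` is a hypothetical stand-in for `window` or `globalThis`.
function detectEncodedTransformApi(scope) {
  // The API from the W3C specification (worker-based).
  const scriptTransform = typeof scope.RTCRtpScriptTransform === 'function';

  // Chromium's original API, a method on senders (and receivers).
  const senderProto = scope.RTCRtpSender && scope.RTCRtpSender.prototype;
  const encodedStreams = Boolean(senderProto) &&
    typeof senderProto.createEncodedStreams === 'function';

  return { scriptTransform, encodedStreams };
}

// In a page this would be called with `window`; outside a browser both
// flags are simply false.
console.log(detectEncodedTransformApi(globalThis));
```

Returning both flags instead of a single winner leaves the choice of which API to prefer to the application.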

The next paragraph of Mozilla’s post, about the “worker-first” API, fails to mention a few things:

> Chromium experimented early with shipping APIs for this. Unfortunately, the early APIs exposed the media pipeline on main-thread, subjecting it to risks of jank given the nature of the JavaScript event model.
>
> The Working Group learned from these experiments, and settled instead on a “worker-first” API, which means an API that is simpler to use in a worker than from main-thread.

Well, Chromium experimented and Google Duo shipped. In mid-2020 Chromium shipped an API that allowed both the main-thread model (which it seems is still in use there) as well as a worker approach. The worker approach had some performance issues which took until 2022 and needed external contributions to iron out but was adopted by Jitsi even before that (in a “beta” manner). The “offload stuff from the main thread” argument sounds great but is more tricky in practice.

There is a technical point in the debate but a lot about it is “standards politics”. This brings us to the next topic…

Consensus in the W3C WebRTC Working Group

There is an ongoing debate in the W3C working group about where the API for the “encoded transform” should be available. The specification currently only allows the transformation of frames to happen on a “worker” (i.e. the JavaScript equivalent of a thread). Chromium’s implementation supports operation in a worker but allows the transformation to be done on the “main thread” as well. A WebRTC sample shows how that works in detail (and does work in all browsers; well, after a small fix).

Saying that the working group “settled on a worker-first API” needs a bit more explanation of what the working group actually agreed on. There was a “call for consensus” which is a formal decision-making process described here.

There were a couple of responses, best viewed in the threaded version of the archive:

- an objection from Microsoft’s Bernard Aboba due to normatively referencing an IETF specification that still is not done (I think)

- support from Apple’s Youenn Fablet

- support from Mozilla’s Jan-Ivar Bruaroey (who wrote the recent blog post there on E2EE)

- conditional support from Google’s Harald Alvestrand

Formally this specification had “consensus” in 2021 but in particular Google noted two issues:

- Issue 64: Remove CreateEncodedStream variant API which led to the discussion about documenting what is called the “legacy API”.

- Issue 89: Generalize ScriptTransform constructor to allow main-thread processing

Two and a half years later we do not have any progress on either of these issues. Google’s pull request to “document the legacy API” which is the original API implemented in Chromium since 2020 has been left starving. This API is what Chromium has shipped for more than three years. It was quite sad to see the specification which started with a good amount of energy lose momentum like that.

Also, note that the specification is not “worker-first” but “worker-only”. That means it forbids using the API on the main thread. Chromium supports usage on the main thread and Google Meet actually uses it that way. However, Chromium also supports the worker approach.

So to be “spec-compliant”, Chromium would have to break existing functionality.

Editor Note: Contention has been part of every standardization process I have observed. WebRTC is no exception, even today in its mature state (search for ORTC or SDP here if you want historical drama). Sometimes this contention makes for a good story. It can also be boring and confusing unless you are an active part of the discussion. We try to keep our coverage interesting, but still accurate here. To that end, I asked W3C WebRTC Working Group Co-chair Jan-Ivar Bruaroey of Mozilla for his feedback. He had some issues with the characterizations above and shared some references which I have included here. On the topic of worker-first vs. worker-only, he asked that I call out the following from the Mozilla post:

He also commented that this is how Chromium supports workers today: you just need to move the stream from the main thread to the worker instead of from the worker to the main thread. You can learn more about transferable streams in the transferable streams explainer. The Chromium feature page for that is here. Mozilla’s page on Transferable objects shows that Readable Streams, Writable Streams, and Transform Streams are transferable everywhere but Safari today. He also added:

{“editor”, “chad hart“}

Specifying Payload Type

The Mozilla blog post provides a fiddle for E2EE. How the simplistic xor E2EE needs to encrypt the data is explained quite oddly. Trial and error is not required at all to figure out which bits need to be encrypted. We have a WebRTC sample for that which explains the gory details and references the specifications explaining why:

    // Do not encrypt the first couple of bytes of the payload. This allows
    // a middle to determine video keyframes or the opus mode being used.
    // For VP8 this is the content described in
    //   https://tools.ietf.org/html/rfc6386#section-9.1
    // which is 10 bytes for key frames and 3 bytes for delta frames.
    // For opus (where encodedFrame.type is not set) this is the TOC byte from
    //   https://tools.ietf.org/html/rfc6716#section-3.1
    // TODO: make this work for other codecs.
    //
    // It makes the (encrypted) video and audio much more fun to watch and listen to
    // as the decoder does not immediately throw a fatal error.

That code has a TODO suggesting that the (xor-)”encryption” needs more work depending on the format of the data, i.e. what codec is used. This has been an area I worked on so let me try to explain how that went.
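To make the comment above concrete, here is a rough sketch of what such an xor transform could look like. It is not the code from the WebRTC sample: `UNENCRYPTED_BYTES` and `xorPayload` are names invented for this illustration, and the byte counts are the ones quoted in the comment (10 and 3 for VP8 key and delta frames, 1 for the opus TOC byte). Xor with a repeating key is of course not real encryption.

```javascript
// Bytes to leave in the clear, keyed by encodedFrame.type. For opus the
// type is not set, so the lookup uses the property name "undefined".
const UNENCRYPTED_BYTES = { key: 10, delta: 3, undefined: 1 };

// Hypothetical helper: xor everything after the clear-text header with a
// repeating key. Applying it twice with the same key restores the input.
function xorPayload(data, frameType, key) {
  const offset = UNENCRYPTED_BYTES[frameType];
  if (offset === undefined) {
    throw new Error(`Unknown frame type: ${frameType}`);
  }
  const input = new Uint8Array(data);
  const output = new Uint8Array(input.length);
  for (let i = 0; i < input.length; i++) {
    output[i] = i < offset
      ? input[i] // header bytes stay readable for middleboxes
      : input[i] ^ key[(i - offset) % key.length];
  }
  return output.buffer;
}
```

In a transform, the function would be applied to `encodedFrame.data` with `encodedFrame.type` as the second argument before the frame is enqueued again.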

Looking at the payload type (added in early 2022) allows a developer to determine that format but requires access to the SDP to look up the payload type and the codec it is associated with. This is possible but clunky as it requires parsing the SDP. It is particularly clunky in workers, where you would also need to obtain the SDP and keep that information in sync.
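To give an idea of the clunkiness, this is roughly what the lookup involves. The sketch below builds a map from payload type to codec out of the `a=rtpmap` lines of an SDP blob; `payloadTypeToCodec` is a hypothetical helper name, and the sketch assumes payload types are unique across the whole SDP, which is typical when everything is bundled but not guaranteed in general.

```javascript
// Hypothetical helper: map RTP payload types to codec names by scanning
// the a=rtpmap lines, e.g. "a=rtpmap:96 VP8/90000".
function payloadTypeToCodec(sdp) {
  const codecs = new Map();
  for (const line of sdp.split(/\r?\n/)) {
    const match = /^a=rtpmap:(\d+) ([^/]+)\/(\d+)/.exec(line);
    if (match) {
      codecs.set(Number(match[1]), {
        codec: match[2],
        clockRate: Number(match[3]),
      });
    }
  }
  return codecs;
}

// A made-up, heavily shortened SDP fragment for illustration.
const exampleSdp = [
  'm=audio 9 UDP/TLS/RTP/SAVPF 111',
  'a=rtpmap:111 opus/48000/2',
  'm=video 9 UDP/TLS/RTP/SAVPF 96',
  'a=rtpmap:96 VP8/90000',
].join('\r\n');

console.log(payloadTypeToCodec(exampleSdp).get(96).codec);
```

The resulting map would then have to be shipped to the worker and refreshed after every renegotiation, so that the payload type from the frame metadata can be resolved there.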

The actual solution here was to specify the data format. This is what I did in two changes to the specification:

- PR 140: add mimeType to metadata (which took from July 2022 until October 2023 to get merged…)

- PR 212: Describe “data” attribute (which depended on the earlier PR)

Together this allows an application to determine the actual type of the data and the format it is in which affects how encryption needs to work. Firefox still needs to implement this attribute.
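With the mimeType in the metadata, the decision about how many bytes to leave unencrypted no longer has to be guessed from the frame type alone. A possible sketch, assuming mimeType values of the form `video/VP8` and `audio/opus` and using the byte counts from the sample comment quoted earlier (`clearBytesFor` is a hypothetical helper, not part of any API):

```javascript
// Hypothetical helper: number of leading bytes to leave in the clear,
// chosen by the codec's mimeType instead of by guessing from frame.type.
function clearBytesFor(mimeType, frameType) {
  switch (mimeType.toLowerCase()) {
    case 'video/vp8':
      // VP8 payload header: 10 bytes for key frames, 3 for delta frames.
      return frameType === 'key' ? 10 : 3;
    case 'audio/opus':
      // The opus TOC byte.
      return 1;
    default:
      // Refuse to guess for codecs whose format has not been looked at.
      throw new Error(`No clear-text header rule for ${mimeType}`);
  }
}
```

Throwing for unknown codecs is a deliberate choice in this sketch: silently picking an offset for a format nobody checked is how frames end up either undecodable or less protected than intended.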

To me, it is not clear how the W3C specification had “consensus” while failing to describe the format of the data that it allows manipulating.

The video analyzer fiddle, the main thread use case and a bad polyfill

Towards the end of Mozilla’s blog post, the polyfill is used to update one of the WebRTC samples that collects some statistics on the video frames.

This is easier on the main thread where the sample can manipulate the DOM directly. Great but… this is a sample. Its purpose is to demonstrate the actual API; where the statistics are gathered and displayed is not that important. The PR mostly makes sense but the use of a polyfill does not.

Such a polyfill would allow developers to write the same code in all browsers that somehow support an E2EE API. This is generally in line with what the adapter.js polyfill has done since the early days of WebRTC. This polyfill was even proposed for inclusion into adapter.js (which remains widely downloaded). However, as its maintainer, I opposed the idea as I do not think that surprising developers on a topic as sensitive as E2EE is a good idea.

Summary

E2EE was very exciting in 2020. Deploying this in a way that would lead to large-scale adoption turned out to be complicated. It is unlikely that “works in all browsers” was the only thing holding it back. Most high-volume multi-party meeting apps have features like recording and transcription that break E2EE. As the data shows, E2EE ended up being not that important. Google seems to have found another use case for the API, even though that use case remains unclear for now.

Mozilla’s blog post presents a very one-sided view of the debate, still ongoing in the W3C WebRTC Working Group, about where the API should be available. This argumentation style is something that I have observed in that working group far too often and it wastes a lot of my energy and motivation. I have consequently decided to take a leave of absence in its stronger form: leaving the working group 🖐️🎤

{“author”: “Philipp Hancke“}

Thank you to Jan-Ivar Bruaroey for his review and feedback.

The results could have a significant bias because they come from browser statistics. For messaging apps like Threema and Signal, E2EE for SFU-based calls is considered mandatory but they don’t appear in browser statistics.

It’s true what fippo says though – the non-standard API was sufficient for the E2EE use case. I’m still happy it got standardised, so support in Safari and Firefox is possible now. But, wow, that took way too long. Those who truly needed it nagged their users to install a native or Electron-based app by now.

The whole Worker discussion is unfortunate. There are a thousand ways to shoot yourself in the foot with Web APIs performance-wise but that doesn’t mean that those APIs are badly designed per se. I can’t comprehend the nannying that was applied there to prevent usage in the main thread. As a result the API is overly restrictive. For example, because of the construction that prevents usage in the main thread, the transformer cannot be sent over an arbitrary MessagePort which can be annoying for more complex Worker scenarios. Unaware users will always blame the browser vendor for janky UI/UX even though it’s the app/page developer’s fault. Really, there was nothing to win here.

Criticism aside, I still want to thank the WebRTC WG for their work.