One of the great things about WebRTC is that it is built right into the web platform. The web platform is generally great for WebRTC, but occasionally it can cause huge headaches when specific WebRTC needs do not exactly align with more general browser usage requirements. The latest example has to do with the autoplay of media, where sound suddenly went missing for many users. Former webrtcHacks guest author Dag-Inge Aas has been dealing with this firsthand. See below for his write-up on browser expectations around the playback of media, the recent Chrome 66+ changes, and some tips and tricks for working around these issues.

{“editor”: “chad hart“}

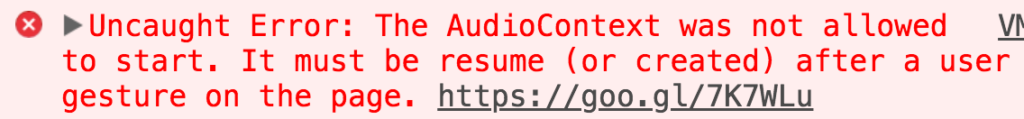

If you’re reading this, there’s a good chance you have encountered weird issues with your WebRTC application in Safari >=11 and Chrome >=66. The symptoms vary: your interface sounds (such as an incoming call sound) no longer play, your audio visualizer no longer works, or your WebRTC application plays no sound at all from remote peers.

Currently, this bug is impacting major WebRTC players, such as Jitsi, Tokbox, appear.in, Twilio, Webex and many, many more. Interestingly, it seems Google’s Meet and Chromebox for meetings are also affected.

The source of our woes: Autoplay policy changes. In this blogpost, I’ll tell you what they are and how they affect WebRTC, and how you can fix this in your application. But first, what are the changes?

What are the changes?

This whole story starts in 2007, when the iPhone, and subsequently iOS, was released. If you have worked with Safari for iOS in the past, you may have noticed that Safari has required a user gesture to play <audio> and <video> elements with sound. This requirement has in some ways been relaxed over the years, with iOS 10 allowing video elements to start playing automatically in a muted state. This causes some problems in WebRTC, as a <video> element is required to see and hear a MediaStream. It’s no use being able to automatically play a video element with no sound, because in a video call you need to hear the other party as well, without requiring the user to “click play”. However, Safari for iOS hasn’t been on most WebRTC developers’ minds, because WebRTC wasn’t supported on the platform until relatively recently, with iOS 11.

The first time I encountered this issue was while testing to see if my then recent implementation of a video call in Confrere worked on iOS. To my surprise, it didn’t, but I found I wasn’t alone. GitHub user kylemcdonald reported on webrtc-adapter that the getUserMedia sample did not work on iOS. The fix? Adding the newly created playsinline attribute to the video element allowed it to be played, with audio, on iOS. The details for WebRTC are unfortunately not in the original autoplay changes blog post from Safari, but they remedied that by publishing a blog post on WebRTC in Safari before release. It clearly states that the following applies to MediaStreams and audio playback:

- MediaStream-backed media will autoplay if the web page is already capturing.

- MediaStream-backed media will autoplay if the web page is already playing audio. A user gesture will still be required to initiate audio playback.

Now, there is no mention of playsinline in that document, but if you combine the two announcements, one should be able to figure out how to make your WebRTC application work on Safari for iOS.

Why is autoplay being restricted?

Initially, the focus was on avoiding substantial data costs for users. Back in 2007, data was expensive (and still is in most of the world), and few web pages were adapted for mobile. Also, autoplaying audio was, and still is, one of the most annoying things on the web. Requiring a user gesture before video could be played (and loaded) made sure that the user was aware that they were playing video and audio.

Then came the GIF. GIFs are just animated <img>s, so they did not require a user gesture to be loaded. However, they can be quite large, and therefore costly to our poor mobile users. Videos are more space efficient, but they required a user gesture to load, which was quite annoying, so pages continued to use GIFs. This all changed in iOS 10 when Safari allowed autoplaying videos in a muted state. Saving bandwidth was now a matter of allowing video, and discouraging the use of 3 minute long GIFs.

Autoplay restrictions are rolling out for desktop browsers

If you search for “how to stop autoplaying audio”, you will find quite a few hits. Recently, certain news outlets have figured that if they play REALLY LOUD audio upon page load, users will stay longer and click their ads. Of course, this is wrong, but for some reason, that doesn’t stop them from doing it. Due to this, desktop browsers are now following Safari’s example of disallowing audio playback. Most notable is Chrome, which rolled out new autoplay policies in Chrome 66.

Chrome adds a twist to the original model, though: the Media Engagement Index.

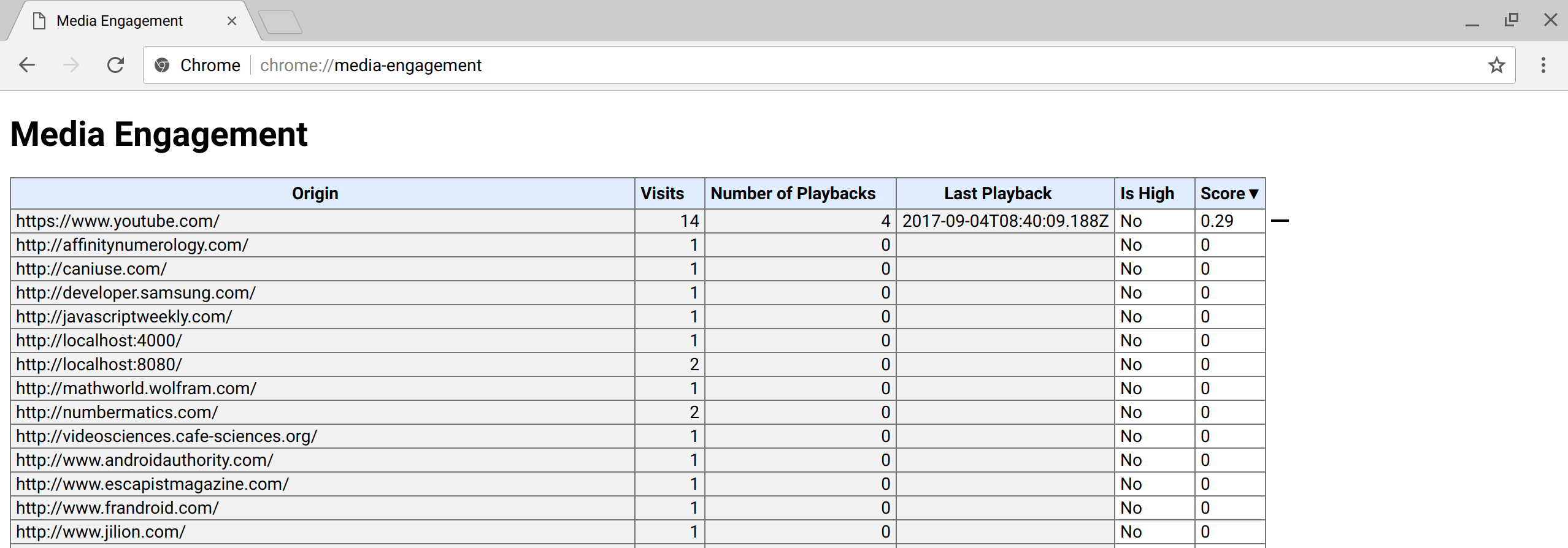

The Media Engagement Index (MEI)

The Media Engagement Index, or MEI for short, is a way for Chrome to gauge how likely you as a user are to want autoplaying audio on a page, based on your previous interactions with that web page. You can see what this looks like by going to chrome://media-engagement/. The MEI is calculated per user profile, and it carries over into incognito mode. That last bit makes it really hard for developers to test their pages with a zero MEI score, which would help uncover issues with autoplaying audio before hitting production. Does anybody want to guess what happens next?

It’s not just about <audio> and <video>

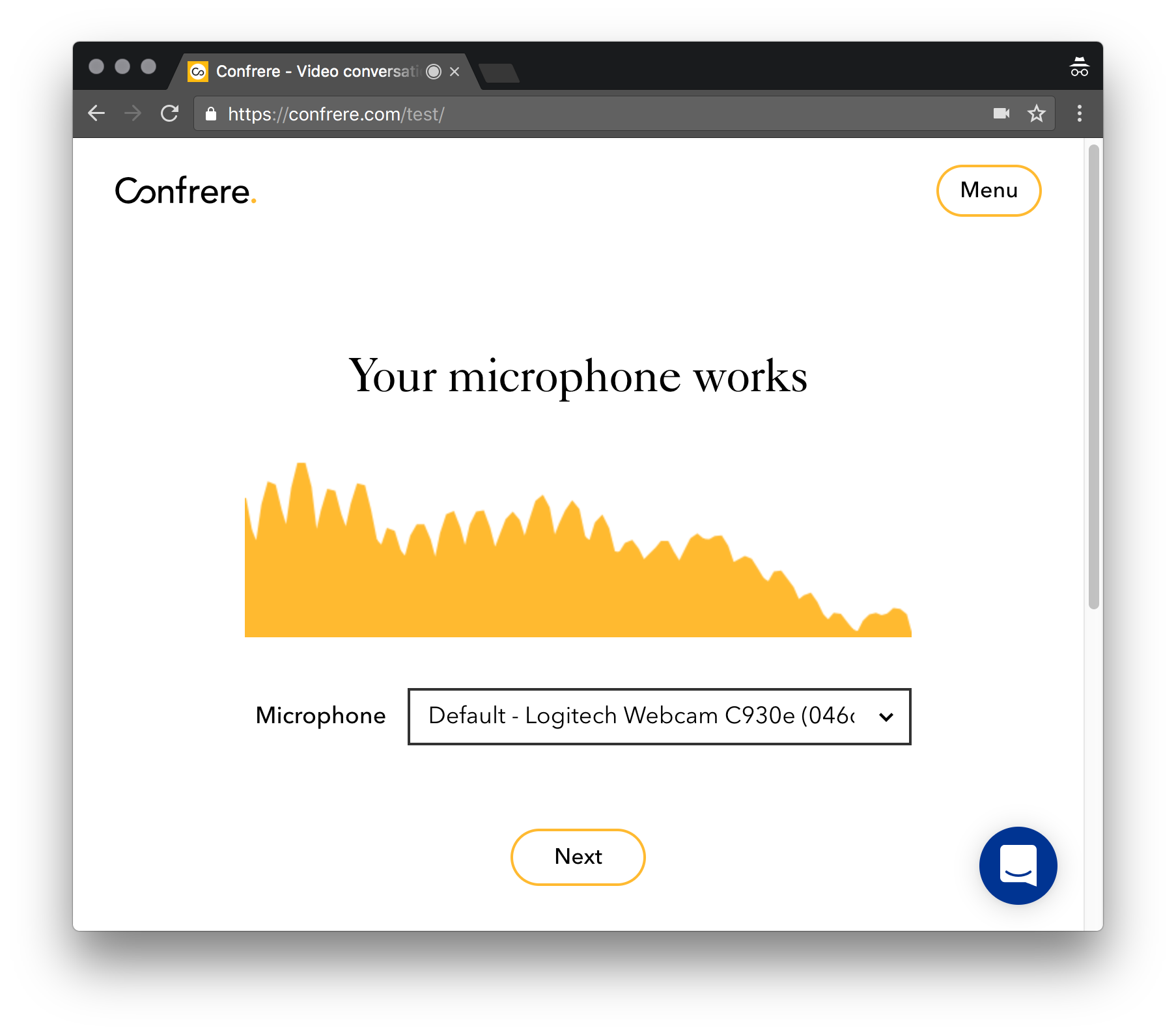

Now as it turns out, the new autoplay policy changes affect more than the <audio> and <video> tags. A common UX pattern in WebRTC is to give users feedback on microphone input volume. To do this, audio is analyzed using an AudioContext, which takes a MediaStream and outputs its waveform as buckets. No audio is played through the speakers here, but for some reason even analyzing the audio is blocked because an AudioContext, in theory, allows you to output the audio.
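To make the meter pattern concrete, here is a minimal sketch of it. It assumes the stream is routed through an AnalyserNode and read with getByteTimeDomainData(); the helper names rmsFromByteSamples and createVolumeMeter are made up for illustration and are not taken from any of the products mentioned here. Note that nothing is connected to the context's destination, so no sound would be played, yet in a context that the autoplay policy has left suspended you should expect the meter to stay flat.

```javascript
// Root-mean-square level of time-domain samples in the format produced by
// AnalyserNode.getByteTimeDomainData(): unsigned bytes where 128 is silence.
// Returns a value between 0 (silence) and 1 (full scale).
function rmsFromByteSamples(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (const sample of samples) {
    const centered = (sample - 128) / 128; // map 0..255 to roughly -1..1
    sumOfSquares += centered * centered;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Wires a microphone MediaStream into an AnalyserNode. Nothing is connected
// to audioContext.destination, so this graph never reaches the speakers.
// `audioContext` and `stream` are supplied by the caller (hypothetical helper).
function createVolumeMeter(audioContext, stream) {
  const source = audioContext.createMediaStreamSource(stream);
  const analyser = audioContext.createAnalyser();
  analyser.fftSize = 2048;
  source.connect(analyser);
  const samples = new Uint8Array(analyser.fftSize);
  return {
    // Call this from a timer or requestAnimationFrame to drive the UI.
    read() {
      analyser.getByteTimeDomainData(samples);
      return rmsFromByteSamples(samples);
    },
  };
}
```

In a page you would pass in an AudioContext you created and the MediaStream returned by getUserMedia, then call read() on each animation frame.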

This issue was first reported to the WebKit bug tracker in December, and a fix was merged into WebKit six days later. The fix? To allow AudioContext to work if the page is already capturing audio and video.

So why are you still reading this blog post? It turns out Chrome made the same mistake as Safari did. Even though this affects many WebRTC providers, Google has been relatively silent on this matter. There have been many attempts to get them to publish a PSA on the effects of autoplay on WebRTC, but this has not yet happened.

MEI scores messing with your testing

How did we get into this mess? Surely many developers must have tested their AudioContext code before this change made it into Chrome 66 stable, where it effectively hits every single user. This is where MEI hits you. You see, frequent interactions with a page give you a higher MEI score, meaning that developers who frequently test new releases of their own product are not likely to encounter the bug, as audio is allowed to be played and analyzed. Not even incognito mode helps you, as MEI is persisted. Only starting Chrome with a fresh user profile will surface the issue, a fact which is easy to forget for even seasoned Google QA people.
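If you want to script the "fresh user profile" step, one possible approach is to launch Chrome against a new, empty profile directory. The sketch below only builds the argument list. It relies on Chrome's --user-data-dir and --no-first-run switches, while the function name freshProfileChromeArgs and the example paths are hypothetical.

```javascript
// Builds command-line arguments that start Chrome with an empty profile, so
// no previously stored Media Engagement Index data applies to the page under
// test. `profileDir` must be a new, empty directory chosen by the caller.
function freshProfileChromeArgs(profileDir, url) {
  if (!profileDir) {
    throw new Error('profileDir is required');
  }
  return [
    `--user-data-dir=${profileDir}`, // keep this run away from your everyday profile
    '--no-first-run',                // skip the first-run dialogs
    url,
  ];
}
```

In a Node script, the directory could come from fs.mkdtempSync, and the resulting array could be handed to child_process.spawn together with the path to your Chrome binary, which differs per platform.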

What should browser vendors do?

Changes to core functionality on the web are difficult to do right. Chrome has put out numerous autoplay policy change notices, but none of them mention WebRTC or MediaStreams. The seemingly forgotten Permissions API has not been updated, so developers have no way to synchronously test if they need to prompt the user for a gesture. One suggestion is to allow AudioContext to output audio if the page is already capturing, as Safari has done, but this feels like a hack rather than a solution. It also doesn’t support other legitimate use cases for analyzing audio when getUserMedia is not involved.

One concrete solution for browser vendors is to allow media permissions to impact the media engagement index. If the user has granted perpetual access to user media, then one should probably assume that the web page is trusted enough to output audio as well with no user interaction, regardless of whether it’s capturing at the moment. After all, at that point the user trusts that you do not broadcast their microphone and camera to millions of users without their knowledge, so being able to play interface sounds is a minimal concern.

How to fix this in your application

Luckily, there are a couple of things you can do, depending on what you are trying to fix. These are the things we added at Confrere when we first ran into this issue while rolling out support for Safari for iOS.

add playsinline

To fix videos having no sound, add the playsinline attribute on your video element. This is well documented by now. It works in both Safari and Chrome, and has no adverse effects in other browsers.
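If you create your video elements from script, the fix might look like the sketch below. The helper name attachRemoteStream is hypothetical, and the snippet assumes you already have the remote MediaStream in hand, for example from a peer connection's track event.

```javascript
// Attaches a remote MediaStream to a <video> element so that it can start
// playing inline. `video` is an HTMLVideoElement, `stream` a MediaStream.
function attachRemoteStream(video, stream) {
  video.setAttribute('playsinline', ''); // the attribute this section is about
  video.autoplay = true;                 // start as soon as media arrives
  video.srcObject = stream;
  return video;
}
```

When the element lives in your markup instead, the equivalent is to write the playsinline and autoplay attributes directly on the video tag.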

user gestures

To fix your audio visualizer, just add a user gesture. We were lucky here because we had the luxury of being able to add multiple steps without user disruption in our onboarding flow to a video call. You might not be so lucky. Until Google fixes this, there is no workaround but to add a user gesture.
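One way to hook a gesture up to your visualizer is to resume the AudioContext from a click handler, sketched below. This is an illustration rather than the code we run at Confrere: the helper name resumeOnFirstClick is made up, and it assumes the context reports a state of "suspended" when it was created before any user interaction.

```javascript
// Resumes a suspended AudioContext the first time the user clicks `target`
// (for example a "Join call" button). Returns a function that removes the
// listener again if it is no longer needed.
function resumeOnFirstClick(audioContext, target) {
  const onClick = () => {
    target.removeEventListener('click', onClick);
    if (audioContext.state === 'suspended') {
      // resume() returns a promise; failures are swallowed here, and callers
      // can inspect audioContext.state if they need to know the outcome.
      audioContext.resume().catch(() => {});
    }
  };
  target.addEventListener('click', onClick);
  return () => target.removeEventListener('click', onClick);
}
```

Any step your users already click through on the way into a call is a natural place to attach this, so the gesture does not cost them an extra interaction.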

no fix for interface sounds

There is no workaround at the moment for fixing interface sounds. Some are experimenting with creating an AudioContext that is reused across the application which you pipe sounds through, but I haven’t tested this. In Safari it is a little better. As long as you are capturing, you can play sounds for incoming chat messages and calls, but you probably don’t want to have user media enabled all the time just to be able to get the user’s attention that there’s an incoming call.
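What you can do is notice when a sound was blocked and fall back to something silent, such as a visual notification. The sketch below relies on play() returning a promise that rejects with a NotAllowedError when the autoplay policy blocks playback. It does not bypass the policy, and the names playInterfaceSound and onBlocked are hypothetical.

```javascript
// Tries to play an interface sound and reports whether it actually started.
// `audio` is an HTMLAudioElement (or anything with a compatible play()).
// `onBlocked` is a hypothetical callback for a silent fallback, for example
// showing a visual notification for the incoming call.
function playInterfaceSound(audio, onBlocked) {
  let result;
  try {
    result = audio.play();
  } catch (error) {
    result = Promise.reject(error);
  }
  // Older browsers return undefined from play() instead of a promise.
  if (!result || typeof result.then !== 'function') {
    return Promise.resolve(true);
  }
  return result.then(
    () => true,
    (error) => {
      if (error && error.name === 'NotAllowedError') {
        onBlocked(error);
        return false;
      }
      throw error; // some other failure, such as a file that cannot be decoded
    }
  );
}
```

The returned promise resolves to true when playback started and to false when the browser blocked it, so calling code can decide how loudly to compensate in the UI.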

As you can see, there are a few things you can do to remedy this issue until there is a more long-term solution. And don’t forget to follow the bug for more updates.

{“author”: “Dag-Inge Aas“}

This spec might be changed again. Many users are complaining about it.

https://bugs.chromium.org/p/chromium/issues/detail?id=840866

iOS Safari only allows a single video element to play sound.

You can’t play the video elements of two conference participants with sound at the same time. It might be possible with a single audio element but I couldn’t make it work.

https://bugs.webkit.org/show_bug.cgi?id=176282#c4

@Ben: That’s weird, I’m not able to replicate that on Confrere. We’re running full mesh with multiple participants. I’ll follow up on that bug as well, very interesting!

Some updates from the Chrome Team: https://bugs.chromium.org/p/chromium/issues/detail?id=840866#c103

In my case, I have both playsinline and the user gesture working when establishing the call. However, when I toggle MediaStreamTrack.enabled off and on twice, the remote sound is lost.

Repository URL: https://github.com/Unrupt/unrupt-demo/blob/master/unrupt.js

Please note, we’re using AudioBufferNode, which stops receiving sound after unmuting for the second time. However, the sound is still being played through the MediaStream.

Here’s the GitHub issue: https://github.com/Unrupt/unrupt-demo/issues/11

https://github.com/versatica/mediasoup/issues/264#issuecomment-455262127

— quite a good hack to synchronously check if autoplay is blocked.