Next week the 87th IETF standardization meeting will take place in Berlin, Germany. Most of the sessions I’m planning to attend are related to SIP, Diameter and of course WebRTC. A week ago, just as I started preparing some material for the meeting, a customer called and asked me to provide a training session on WebRTC standardization and implementation status for their R&D team. While this is something I’m planning to do in the next month, I thought I could start my contribution to this blog by providing a brief introduction to WebRTC standards and describing what’s going on in each group. This introductory post is meant to provide a first overview and I’m planning to go into technical details in future blog entries.

Overview

As described on the IETF website, existing Web-based RTC implementations (e.g. Flash, Silverlight, Java) are proprietary and non-interoperable, as they require non-standard extensions or plugins to work. There is a desire to standardize the basis for such communication so that interoperable communication can be established between any compatible browsers. The goal is to enable innovation on top of a set of basic components. One core component is to enable real-time media like audio and video. A second goal is to enable data transfer directly between clients.

The RTCWEB group in the IETF and the WEBRTC group in W3C are specifying a browser-based client to support communication services. This standardization can be seen from two different perspectives: on one side, the W3C is defining a set of JavaScript APIs for Web-browsers so application developers can control the components. On the other side, the IETF is defining a new ‘protocol profile’ with a core set of functionalities (end-to-end security, codecs, extensions, etc.). It’s worth noting that this new profile can be used beyond web-browsers and, in fact, we’re seeing customers implementing it on mobile apps, set-top boxes and other devices.

IETF RTCWEB

Accordingly, the RTCWEB Working Group (WG) at the IETF is producing the architecture and requirements for the selection and profiling of the on-the-wire protocols. At the same time, it identifies state information and events that need to be exposed in the APIs as input to the W3C. The main deliverables of the IETF RTCWEB WG are summarized in the table below:

| Coverage Area | IETF Drafts | Description |
| --- | --- | --- |
| Use cases | draft-ietf-rtcweb-use-cases-and-requirements | Describes WebRTC use cases and derives a number of requirements from them |
| Security | draft-ietf-rtcweb-security | Analyzes the WebRTC threat model |
| | draft-ietf-rtcweb-security-arch | Defines an architecture which provides security within that threat model |
| Signaling negotiation and NAT traversal | draft-ietf-rtcweb-jsep | Describes the mechanisms for allowing a JavaScript application to fully control the signaling plane of a multimedia session via the interface specified in the W3C RTCPeerConnection API, and discusses how this relates to existing signaling protocols |
| Media transport | draft-ietf-rtcweb-rtp-usage | Describes the media transport aspects of the WebRTC framework, including how the Real-time Transport Protocol (RTP) is used in the WebRTC context and requirements for which RTP features, profiles, and extensions need to be supported |
| Audio codecs | draft-ietf-rtcweb-audio | Outlines the audio codec and processing requirements for WebRTC client applications and endpoint devices. Note that both Opus and G.711 are the minimum required to be implemented by any WebRTC compliant implementation. In addition, other documents are being discussed in order to recommend additional voice codecs for a number of use cases |
| | draft-marjou-rtcweb-audio-codecs-for-interop | Complementary proposal recommending AMR-WB, AMR and G.722 codecs to improve interoperability towards legacy networks |
| Video codecs | draft-alvestrand-rtcweb-vp8 | Recommends VP8 as a mandatory to implement video codec |
| | draft-burman-rtcweb-h264-proposal | Alternative proposal for H.264 |
| | draft-dbenham-webrtc-videomti | Alternative proposal for H.264 backed by Apple |
| Quality of Service markings | draft-ietf-rtcweb-qos (replaced by draft-dhesikan-tsvwg-rtcweb-qos) | Provides the recommended DSCP values for browsers to use for various classes of traffic |
| Data Stream transport for non-audio/video media | draft-ietf-rtcweb-data-channel | Provides an architectural overview of how the Stream Control Transmission Protocol (SCTP) is used in the WebRTC context as a generic transport service allowing web browsers to exchange generic data from peer to peer |
| | draft-ietf-rtcweb-data-protocol | Specifies a protocol for how the JS-layer DataChannel objects provide the data channels between the peers |

The most controversial areas above have to do with signaling and video codecs. Note that while WebRTC is “signaling agnostic”, you still need a standard way to let JavaScript control the signaling plane. draft-ietf-rtcweb-jsep references the SDP Offer/Answer (O/A) model as described in RFC 3264. This model is currently being revisited and is an ongoing point of controversy. On the video codec front there has been a heated battle over mandating VP8 vs. H.264. In my opinion, it started as a technical discussion but is now purely a patent-related issue.
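To make the offer/answer idea a bit more concrete, here is a minimal Python sketch of the answerer’s side of RFC 3264 for a single audio m= line: it keeps only the offered payload formats that it also supports, in the offerer’s order. The function names, the sample offer and the set of supported codecs are my own assumptions for illustration; a real JSEP implementation has to deal with much more (multiple m= lines, direction attributes, ICE, keying, etc.).

```python
import re
from typing import List, Set, Tuple

# Matches "a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]"
RTPMAP = re.compile(r"^a=rtpmap:(\d+) ([^/\s]+)/(\d+)")


def offered_codecs(sdp: str) -> List[Tuple[int, str]]:
    """Return (payload_type, codec_name) pairs in the order they appear."""
    codecs = []
    for line in sdp.splitlines():
        match = RTPMAP.match(line.strip())
        if match:
            codecs.append((int(match.group(1)), match.group(2)))
    return codecs


def answer_codecs(offer_sdp: str, supported: Set[str]) -> List[Tuple[int, str]]:
    """Keep the offered codecs the answerer supports, preserving offer order."""
    wanted = {name.lower() for name in supported}
    return [
        (pt, name)
        for pt, name in offered_codecs(offer_sdp)
        if name.lower() in wanted
    ]


# Hypothetical offer fragment (not taken from any real browser)
OFFER = (
    "m=audio 49170 RTP/SAVPF 111 0 8\r\n"
    "a=rtpmap:111 opus/48000/2\r\n"
    "a=rtpmap:0 PCMU/8000\r\n"
    "a=rtpmap:8 PCMA/8000\r\n"
)

if __name__ == "__main__":
    # An answerer that only supports Opus and G.711 mu-law
    print(answer_codecs(OFFER, {"opus", "PCMU"}))
```

Part of the ongoing controversy is precisely that the JavaScript application has to reason about blobs like `OFFER` rather than about typed objects.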

Another claimed controversy often cited by WebRTC’s critics is around Microsoft and Apple’s lack of support. I also often hear things like “Microsoft and Apple are not involved in WebRTC”. Well, it’s true not much is known about their product/technology plans, but you cannot say they are totally absent. Microsoft (i.e. Skype, Lync, IE teams) is a very active participant in the standardization process and has even proposed its own alternative specification (CU-RTC-Web). Some view this as evidence that Microsoft is against WebRTC, but Microsoft continues to be actively involved despite the fact that its recommendations were not immediately adopted. On the other side, Apple has not been very active in terms of contributions but it seems they’re closely following the work, as they have expressed their opinion in discussions like the one on video codecs.

In addition, there are a number of documents tackling other, related issues. From those, I’d like to mention draft-hutton-rtcweb-nat-firewall-considerations, which examines how WebRTC plays with NATs, Firewalls and HTTP proxies. I think this is a very interesting problem to be solved as I’m currently experiencing it in the field: a customer is running a WebRTC trial in a couple of corporate sites and they are suffering from HTTP-proxies (and restrictive firewalls) rejecting/blocking non-HTTP traffic. We developed a workaround for this but would rather implement a standard solution when ready, as TURN/STUN/ICE is insufficient for this scenario.

While most of the work is done primarily by using already defined protocols and functionalities, missing parts are being worked out in other IETF working groups (e.g. MMUSIC WG is working on Trickle ICE and a number of extensions required by WebRTC). At the same time, other working groups are producing specifications that are mostly meant to be implemented in a WebRTC context (e.g. SIPCORE WG is now completing the SIP over Websockets soon-to-be RFC, which already has a number of available implementations – Quobis QoffeeSIP, Versatica JsSIP, Doubango SIPML5, etc.).
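As a taste of what SIP over WebSockets looks like on the wire, the sketch below builds a bare SIP OPTIONS request the way a browser-based client might send it inside a WebSocket message. The SIP over WebSockets specification defines the `sip` WebSocket subprotocol and the `WS`/`WSS` Via transport values; everything else here (the function name, the URIs, the random `.invalid` sent-by host) is an assumption made for illustration and not taken from any of the implementations mentioned above.

```python
import uuid

# Subprotocol name a client offers in the Sec-WebSocket-Protocol header
WEBSOCKET_SUBPROTOCOL = "sip"


def build_options(target_uri: str, from_uri: str, secure: bool = True) -> str:
    """Build a minimal SIP OPTIONS request for transport over a WebSocket."""
    transport = "WSS" if secure else "WS"
    # Browsers have no reachable address, so a random .invalid host is used
    sent_by = uuid.uuid4().hex[:12] + ".invalid"
    branch = "z9hG4bK" + uuid.uuid4().hex[:10]
    lines = [
        f"OPTIONS {target_uri} SIP/2.0",
        f"Via: SIP/2.0/{transport} {sent_by};branch={branch}",
        "Max-Forwards: 70",
        f"To: <{target_uri}>",
        f"From: <{from_uri}>;tag={uuid.uuid4().hex[:8]}",
        f"Call-ID: {uuid.uuid4().hex}",
        "CSeq: 1 OPTIONS",
        "Content-Length: 0",
    ]
    # Header lines end with CRLF; an empty line terminates the header section
    return "\r\n".join(lines) + "\r\n\r\n"


if __name__ == "__main__":
    # Hypothetical URIs
    print(build_options("sip:alice@example.com", "sip:bob@example.com"))
```

Apart from the Via transport token, the message is plain SIP, which is why existing SIP infrastructure can be reached with fairly thin gateways.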

When it comes to next week’s face-to-face meeting, some of the notable agenda items include:

- Discussions on SDP O/A model and a potential way forward – draft-ivov-rtcweb-noplan, draft-jennings-rtcweb-plan, draft-roach-rtcweb-plan-a, draft-uberti-rtcweb-plan, draft-roach-mmusic-unified-plan (IPR disclosure) and draft-raymond-rtcweb-webrtc-js-obj-api-rationale are interesting documents, especially the last two

- Whether SDES should be part (and how) of WebRTC – note that current architecture only considers DTLS-SRTP.
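For readers not familiar with the SDES vs. DTLS-SRTP discussion, the difference is visible in the SDP itself: SDES carries the SRTP key inline in an `a=crypto` attribute, while DTLS-SRTP only advertises a certificate fingerprint in `a=fingerprint` and derives the keys from a DTLS handshake on the media path. The following Python sketch simply reports which of the two a given SDP advertises. The function name and the sample SDP fragments (including the truncated fingerprint and the key placeholder) are assumptions for illustration only.

```python
from typing import Set


def keying_methods(sdp: str) -> Set[str]:
    """Report which SRTP keying mechanisms an SDP blob advertises."""
    methods = set()
    for line in sdp.splitlines():
        line = line.strip()
        if line.startswith("a=crypto:"):
            # SDES: the key travels in the signaling itself
            methods.add("SDES")
        elif line.startswith("a=fingerprint:"):
            # DTLS-SRTP: only a certificate fingerprint is signaled
            methods.add("DTLS-SRTP")
    return methods


# Hypothetical fragments; fingerprint and key are placeholders, not real values
DTLS_OFFER = (
    "m=audio 49170 RTP/SAVPF 111\r\n"
    "a=fingerprint:sha-256 00:11:22\r\n"
)
SDES_OFFER = (
    "m=audio 49170 RTP/SAVP 0\r\n"
    "a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:PLACEHOLDER\r\n"
)

if __name__ == "__main__":
    print(keying_methods(DTLS_OFFER))
    print(keying_methods(SDES_OFFER))
```

The practical consequence is that with SDES anyone who can see the signaling can also decrypt the media, which is at the heart of the discussion.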

W3C WebRTC

As defined in its charter, the mission of the W3C WebRTC WG is to define client-side APIs to enable Real-Time Communications in Web-browsers. These APIs should enable building applications that can be run inside a browser, requiring no extra downloads or plugins, that allow communication between parties using audio, video and supplementary real-time communication, without having to use intervening servers (unless needed for firewall traversal, or for providing intermediary services). Enabling this requires client-side technologies like API functions to explore device capabilities, to capture media from local devices, for encoding and other processing of those media streams, for establishing direct peer-to-peer connections (including firewall/NAT traversal), for decoding and processing of those incoming streams, etc. The current editor’s draft defines a set of APIs based on some preliminary work done in the WHATWG. The API specification to get access to local media devices is being developed by the Media Capture Task Force. Overall, the document is not yet complete but some early experimental implementations are already available (mainly from Google and Mozilla – which happen to share part of the code, mostly related to the media processing). It’s in the W3C context where Microsoft submitted its alternative CU-RTC-Web proposal almost a year ago.

For the last month, the main topic of discussion in this group (incl. cross-posting emails with the IETF mailing lists) has been whether the SDP O/A model and the API in its current form provide the level of flexibility WebRTC use cases require. While some participants believe the current model is good enough to implement their applications, it seems that a growing number of folks believe it’s not. Note this is not the first time we have this discussion (see this email from Sep 2011) but it seems we now have on board a larger representation of the Web Development community. I’m not going to elaborate on this topic here as I believe it deserves a dedicated blog post (in the meantime you can check the How to Design a Good API and Why it Matters and Input from JS Developers on their experience with the WebRTC API) but it seems to me the discussion is leaning towards whether we should:

- Finish WebRTC 1.0, let industry adopt it (“done is better than perfect” – especially considering market windows of opportunity), and then fix things in 2.0 (yes, 2.0 might be constrained by backward compatibility), or

- Simply revisit the whole thing, which would probably delay 1.0 significantly but give the chance to provide a re-designed approach. By the way, note that both the IETF and the W3C are already delayed according to their original plans (to be fair this is something common in any standards/collaboration effort).

See here an interesting email from one of the W3C WebRTC chairs and Cisco’s and Google’s position on the topic published just a few hours ago. After an endless number of emails and given the current state of the discussion, I believe current API ‘opponents’ should put together a concrete API proposal and submit it to W3C – ideally it should allow the current API to be layered on top of it.

3GPP

As discussed, W3C and the IETF are defining the underlying technology to be used when implementing WebRTC-based services. From the feedback I’ve heard from customers and the activities I’m doing in the field, it seems initial use cases for WebRTC are either going to be “pure-web” or somehow involve call-center/UC infrastructures. However, while it might not be the most relevant case, I am also seeing a number of telcos willing to leverage this technology either to deliver brand new services or simply to extend their existing portfolio towards the web domain. In line with this, the 3GPP is currently discussing how to use WebRTC for IMS access. The work is called ‘Web Real Time Communication (WebRTC) access to IMS (IMS-WEBRTC)’ and, not surprisingly, the main companies behind this effort include:

| Alcatel-Lucent | Ericsson | NTT DoCoMo |

| AT&T | Huawei | Orange |

| Broadcom Corporation | Intel | Qualcomm |

| China Mobile | Nokia | Telecom Italia |

| China Telecom | Nokia Siemens Networks | T-Mobile |

| Deutsche Telekom | NTT | Verizon |

(disclaimer: I’m currently collaborating with some of these companies in WebRTC-related activities)

Like any other 3GPP effort, it involves several groups. On one hand, SA1 is focused on service requirements specification and has mostly completed its work. This includes things like the ability for WebRTC clients to reuse IMS credentials, interoperability between IMS and WebRTC endpoints, delivery of IMS services over WebRTC, and regulatory functions (e.g. Lawful interception) and charging. In my opinion the most interesting requirement is the ability for an IMS service provider to offer IMS services to users interacting with a 3rd party website – this means web developers could potentially develop applications using operators’ infrastructure. OK, this is not something new but WebRTC might bring some advantages when comparing it to previous proposals/efforts. In a couple of cases, operators even mentioned plans to offer things like “TURN-as-a-service” (possibly including Call Recording features, LI, etc.) for Over-the-Top players (you can find some thoughts on Telco and OTT here).

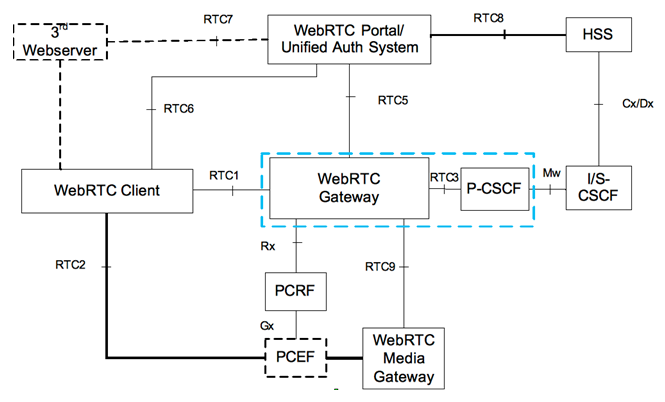

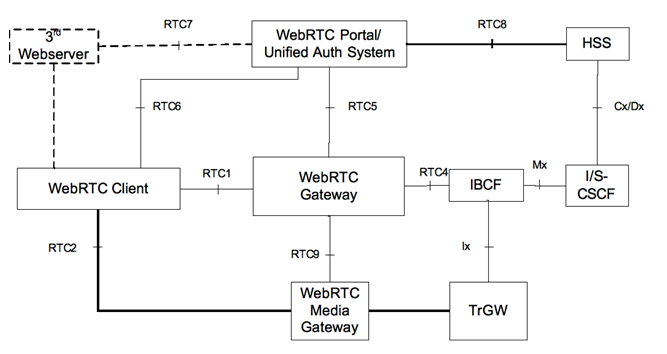

On the other hand, SA2 is currently focused on describing the high level architecture requirements for WebRTC access to IMS. As mentioned, in order for WebRTC clients to have access to 3GPP IMS, interoperability between IMS and the WebRTC client is needed. I did briefly introduce the general VoIP and WebRTC interoperability topic earlier this year in this presentation and I am now discussing the IMS specifics with an operator. Concretely, the SA2 objectives are to expand the IMS architecture and procedures; this includes signaling, media and authentication aspects. While this is work-in-progress and subject to discussion/modification, the diagrams below represent an initial approach for the resulting reference architecture:

High level architecture for IMS_WebRTC

High level architecture for IMS_WebRTC NNI Scenario

As can be seen, the following entities have been defined:

- WebRTC gateway: it’s the IMS signaling interfacing component to the WebRTC client and can be seen as a Signaling Gateway. Some of the functionalities include WebRTC to SIP/IMS signaling conversion, support for ICE procedures on SDP O/A negotiation, support for STUN keep-alive as defined in RFC 5389, communication with Policy and Charging Rules Function (PCRF) to authorize the bearer resources and manage QoS, communication with Web portal/Unified Auth System to verify user authorization, etc.

- WebRTC Media gateway: it does all the media plane adaptations and its functionality includes things like SRTP/RTP media conversion, flow mux/demux, conversion of non-audio/video media between DataChannel/WebSocket and MSRP, codec transcoding, support for ICE procedures on connectivity checks, etc.

- WebRTC portal/Unified Auth System: its functionality includes things like storing user related information (e.g. web identification, mapping between web identification and IMS identities – note it has an interface towards the HSS), user authentication, access control Token generation, or even communicating with third party servers to validate third party id’s. For the last couple of years I’ve seen service providers combining things like SIP and OAuth 2.0 and hence implementing a very basic version of what some folks call today ‘Identity Federation’. I think these kinds of features/services will gain popularity in the context of WebRTC, especially when users can reach each other using the Internet.
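To illustrate the SRTP/RTP conversion mentioned for the gateways above, here is a rough Python sketch of its signaling-side counterpart: the session description coming from the browser uses a secure RTP profile and carries keying attributes, while the one presented to the IMS side is assumed here to use plain `RTP/AVP`. The function name, the list of attributes to drop and the sample SDP are my own assumptions; a real gateway also rewrites addresses and ports, terminates ICE and actually decrypts/encrypts the media, none of which is shown.

```python
# Profiles a WebRTC client may offer (assumption: the IMS side expects RTP/AVP)
SECURE_PROFILES = ("RTP/SAVPF", "RTP/SAVP", "UDP/TLS/RTP/SAVPF")
# Attributes that only make sense when SRTP keying is negotiated end-to-end
SECURITY_ATTRS = ("a=fingerprint:", "a=setup:", "a=crypto:")


def to_plain_rtp(sdp: str) -> str:
    """Rewrite secure media profiles to RTP/AVP and drop SRTP keying attributes."""
    out = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            # m=<media> <port> <proto> <fmt> ...
            fields = line.split(" ")
            if len(fields) >= 4 and fields[2] in SECURE_PROFILES:
                fields[2] = "RTP/AVP"
            line = " ".join(fields)
        elif line.startswith(SECURITY_ATTRS):
            # Keying stops at the gateway, so it is not forwarded
            continue
        out.append(line)
    return "\r\n".join(out) + "\r\n"


# Hypothetical browser-side fragment; the fingerprint is a placeholder
BROWSER_SDP = (
    "m=audio 49170 RTP/SAVPF 111 0\r\n"
    "a=fingerprint:sha-256 00:11:22\r\n"
    "a=setup:actpass\r\n"
    "a=rtpmap:0 PCMU/8000\r\n"
)

if __name__ == "__main__":
    print(to_plain_rtp(BROWSER_SDP))
```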

Note that these new entities could be co-located with other IMS functions. In fact, there are many SBC vendors providing both WebRTC signaling and media gateways as part of their SBC offering. In some cases these SBCs are also running P-CSCF and other IMS functions.

Not only SA1 and SA2 but other groups within 3GPP will also be involved in the process. For instance, SA3 will take care of security aspects while charging aspects will be undertaken by SA5.

Beyond the standards organizations mentioned, some other industry forums are starting some WebRTC-related activities. As an example, the IMTC has recently created the WebRTC Interoperability Activity Group, the SIPForum is currently discussing the charter for its WebRTC Task Group and there are some ongoing discussions in the GSMA context. Related to the GSMA, I had the opportunity to discuss with some operators potential ways to deliver VoLTE/RCS services over WebRTC. I will share progress on this in future blog posts.

Want to learn a bit more about this? We’ll elaborate on some of the topics in future blog entries. In the meantime, Alan and Dan have recently published the second edition of their WebRTC book. You can also send me an email to [email protected] or follow me on Twitter at @victorpascual.

{“author”:”victor“}

Victor,

thanks for this very good overview.

There’s one thing I would like to comment on and that is the 3GPP architecture diagram.

Actually, 3GPP SA2 is in the phase of studying the architecture. The picture you have published does not represent current SA2 group consensus regarding the initial approach, but it is the input of one particular company into the study process.

Kind regards,

Uwe

Hi Uwe,

thanks for your comment! You’re absolutely right, this is just part of the study process. I should have stated it more clearly in my post.

An interesting topic is whether WebRTC access should be provided not only via P-CSCF but also include IBCF, and if so, whether it should be considered NNI as we (and regulators) understand it today. What’s your opinion?

By the way, referenced IETF Internet-Drafts not following the naming convention draft-ietf-… are individual documents and do not represent Working Group consensus.

Thanks again,

-Victor

I received several emails asking about WebRTC, IMS and 3GPP. Find here some draft (27 Jul 2013) documents: http://list.etsi.org/scripts/wa.exe?A2=ind1307d&L=3gpp_tsg_sa_wg2&T=0&P=39516

-Victor