If you are new to WebRTC then you have missed out on years of drama in the standards bodies over various issues like SDP and codecs. These standards dictate what vendors must implement, so they ultimately dictate the industry roadmap. To get a deep perspective on and appreciation of the issues, we like to ask Dan Burnett, a W3C editor, to comment on where we are with the standardization process. I caught up with Dan at this year’s IIT Real Time Communications Conference and had a more detailed Q&A with him shortly thereafter.

We asked Dan to comment on recent spec changes, ORTC, the next version of WebRTC, codecs, Apple, when the 1.0 spec might ever be finalized, and a whole lot more.

{“editor”, “chad hart“}

New Governance

webrtcHacks: Hi Dan. Can you describe some of the recent changes to the W3C WebRTC governance?

Dan: Yes. There was a long-running but productive discussion among the members of the WebRTC Working Group (WG), the ORTC Community Group (CG), and some of the members of the W3C Advisory Committee – which is the group that officially determines group charters.

As part of the charter renewal process, we decided that there would be one additional Chair of the WebRTC Working Group – Erik Lagerway of Hookflash, who was one of the initiators of ORTC. It was also decided that the WebRTC WG is the official group where all future standardization work in WebRTC will happen, meaning the ORTC work will gradually fold into that group.

Additionally, the group was chartered to work on another version beyond 1.0 – WebRTC Next Version or WebRTC-NV.

There are 2 requirements on that version:

- There is no requirement that new features introduced in the specification have an SDP equivalent

- WebRTC NV is not a replacement for WebRTC 1.0 – it is an extension. It is expected that all browsers that support WebRTC NV will support 1.0 functionality as well.

One other thing that has happened, which is not official but is probably good, is that Bernard Aboba from Microsoft has joined the WebRTC 1.0 editing team.

The Next Version

webrtcHacks: Yeah, Bernard mentioned that in the interview I did with him last week. Can you explain WebRTC NV? Why didn’t you just call it 2.0, or 1.1, or whatever?

Dan: I have been working on standards for a long time. I have seen groups spend ridiculous amounts of time deciding on a name for a specification. In this particular case a “1.1” sounds like a minor change from “1.0” while “2.0” sounds like a major change. Some people want a minor change. Some people want a major change. If enough people want different minor changes it will end up being a 2.0 anyway because of the number of changes. The goal was to avoid that disagreement now so that we can move forward.

webrtcHacks: So what is WebRTC NV then, beyond what you stated earlier about no SDP?

Dan: Nothing is officially decided but I expect that there will continue to be more low-level controls as in ORTC. This is complicated by the fact that new feature proposals are continuing to come in for 1.0. Many of these features are from ORTC.

In the Sapporo meeting coming up, Google will be sharing their idea for what should go into WebRTC-NV when we finally start working on it.

webrtcHacks: How do you see ORTC influencing the WebRTC spec? Is WebRTC-NV really just ORTC?

Dan: If I had to summarize WebRTC-NV I would say that it is the combination of WebRTC 1.0 and ORTC. It is a requirement that 1.0 applications continue to work in WebRTC-NV implementations. It is not required that ORTC applications work directly in WebRTC-NV.

I believe the ORTC community intends to modify ORTC as necessary to remain consistent with WebRTC as it evolves.

webrtcHacks: Is there an end date for ORTC then? When it is mostly merged with WebRTC-NV will it cease to exist?

Dan: I can’t speak for the ORTC group. I have not heard of an end date. You’ll have to ask one of the primary ORTC contributors.

Spec Changes

webrtcHacks: What are some of the changes made to the specs recently, particularly those that impact developers?

Dan: First I would like to give a little plug for my webrtcstandards.info site where I have been putting exactly that sort of information over the past few months. I will mention some things here, but you can get more details on that site.

webrtcHacks: OK, we’ll give you one plug (laughs)

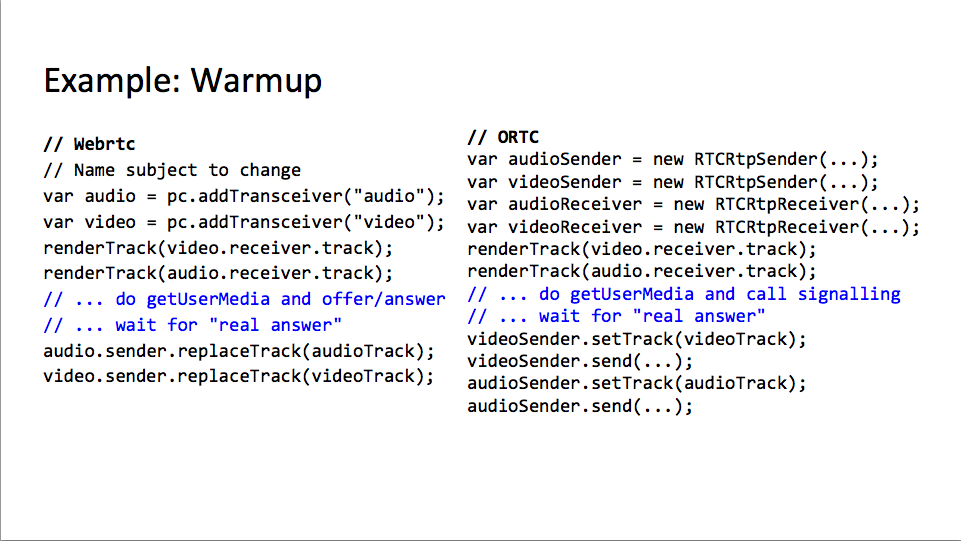

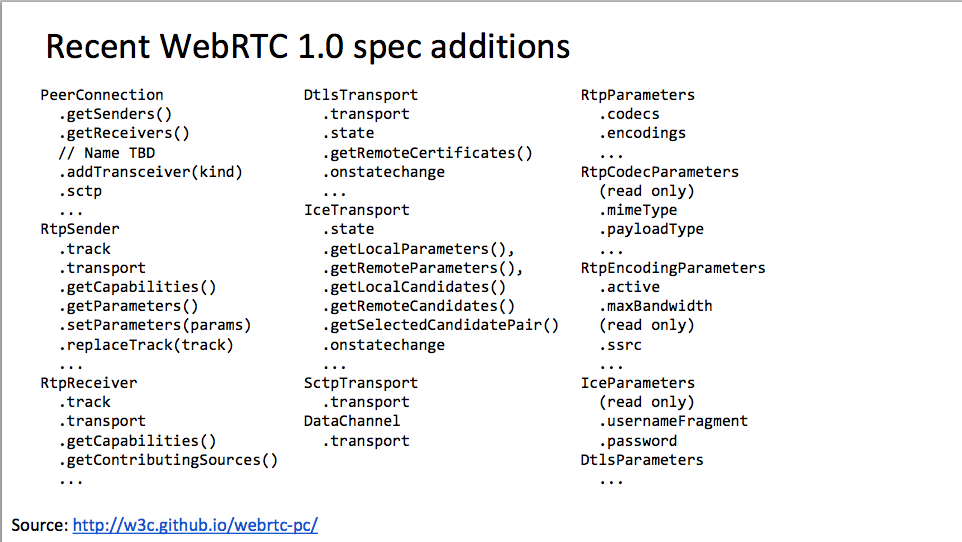

Dan: One of the biggest changes, and the one most relevant to what we were just talking about, is the introduction of RTCRtpSender and RTCRtpReceiver objects. These objects provide both information about and more direct control over how tracks are sent over a PeerConnection. Notice as part of this that we have moved from a stream-based API to a track-based API.

webrtcHacks: And what advantage does the track approach provide?

Dan: It turns out developers want to have more control over exactly how tracks are sent and received, for example being able to specify which codecs are to be used and the parameters used to configure those codecs. They should also be able to configure some transport properties on a per-track basis, such as FEC, retransmission, and bandwidth. Because of this it really didn’t make sense to talk about streams as the primary primitive being sent over a PeerConnection, since they are really just a collection of tracks.
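To make the stream-versus-track distinction concrete, here is a minimal TypeScript sketch. The `Track`, `Stream`, and `SendSettings` types, the `defaults` table, and the `planSends` function are all hypothetical names invented for illustration; they are not the actual WebRTC sender and receiver objects. The sketch only shows why per-track settings become natural once a stream is treated as a collection of tracks.

```typescript
// Hypothetical model, NOT the real WebRTC API: a stream is just a
// labelled collection of tracks.
interface Track {
  id: string;
  kind: "audio" | "video";
}

interface Stream {
  id: string;
  tracks: Track[];
}

// Hypothetical per-track settings of the kind mentioned in the interview
// (codec choice, FEC, retransmission, bandwidth).
interface SendSettings {
  codec: string;
  fec: boolean;
  retransmission: boolean;
  maxBitrateKbps: number;
}

// Assumed example defaults per track kind; the values are illustrative only.
const defaults: Record<Track["kind"], SendSettings> = {
  audio: { codec: "opus", fec: true, retransmission: false, maxBitrateKbps: 64 },
  video: { codec: "VP8", fec: false, retransmission: true, maxBitrateKbps: 1500 },
};

// Give every track in the stream its own settings, optionally overridden
// for individual track ids. The stream itself carries no send settings.
function planSends(
  stream: Stream,
  overrides: Record<string, Partial<SendSettings>> = {}
): Record<string, SendSettings> {
  const plan: Record<string, SendSettings> = {};
  for (const track of stream.tracks) {
    plan[track.id] = { ...defaults[track.kind], ...overrides[track.id] };
  }
  return plan;
}
```

Calling `planSends` with a stream holding one audio and one video track, plus an override such as `{ v1: { maxBitrateKbps: 500 } }`, would cap only the video track while the audio track keeps its defaults, which a stream-level setting could not express.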

webrtcHacks: So the others?

Dan: First, on the one we just mentioned – that was a foundational change, and we are going to see many other changes build on it later on. Now I’ll talk about the others that are not related to that.

One big change was that the APIs have been converted to use ECMAScript Promises. I think I mentioned this last year.

webrtcHacks: You did.

Dan: It has happened. It is now in the specifications.

Promises are now the recommended mechanism for WebRTC specifications and for web specifications in general for dealing with asynchronous function calls. Not so much for things that generate multiple events, but definitely for any single asynchronous function call.

This is part of the move of ECMAScript toward truly asynchronous function calls, as you can see if you look at some of the thoughts on future versions of ECMAScript.

The original callback-based APIs currently still exist but will eventually be deprecated. Developers should start using the Promise versions.
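For readers moving from the callback style to Promises, the TypeScript sketch below shows the general pattern of wrapping a success/failure callback pair in a Promise. `legacyCreateOffer` is a hypothetical stand-in, not a real browser function; it only mimics the two-callback shape of the older style, and it calls back immediately to keep the sketch self-contained, whereas a real API would call back later.

```typescript
type SuccessCallback<T> = (value: T) => void;
type FailureCallback = (error: Error) => void;

// Hypothetical stand-in for a legacy callback-based call. The returned
// string is a placeholder, not a real session description.
function legacyCreateOffer(
  onSuccess: SuccessCallback<string>,
  onFailure: FailureCallback,
  shouldFail: boolean = false
): void {
  if (shouldFail) {
    onFailure(new Error("offer failed"));
  } else {
    onSuccess("v=0 (placeholder offer)");
  }
}

// Promise wrapper: the success callback resolves the Promise and the
// failure callback rejects it, so callers can chain with then/catch
// instead of nesting callbacks.
function createOfferAsync(shouldFail: boolean = false): Promise<string> {
  return new Promise<string>((resolve, reject) => {
    legacyCreateOffer(resolve, reject, shouldFail);
  });
}
```

A caller would then write `createOfferAsync().then(useOffer).catch(reportError)`, where `useOffer` and `reportError` are whatever handlers the application supplies (hypothetical names here).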

webrtcHacks: I know media capture from the DOM is another one.

Dan: There has been good progress on capturing media directly from media elements such as audio, video and canvas. Developers have had to use hacks up to this point to be able to capture a canvas for example. Maybe they would take snapshots, but that is not the same as a realtime media stream as you would get from a getUserMedia call.

The major changes going into the specification soon are to try to reproduce the resulting media stream as faithfully as possible to what a user would experience from that element. For example, if the user is playing a video and pauses it and then resumes, the resulting stream should show the paused video for the amount of time it was paused and then resume again.

This seems to be what developers are most interested in.

webrtcHacks: Can you talk about some of the use cases that are being referenced around this feature?

Dan: A shared whiteboard is probably the best example, but there may be some instances for training purposes where you want to capture how the user has interacted with existing elements – video or audio.

webrtcHacks: What about screensharing?

Dan: There is good progress happening there as well on the specification. It still has some tricky issues in terms of what apps should be able to request to be shared and what users should have control over. An example of this is Microsoft PowerPoint – if a user has 3 PowerPoint documents open, say different presentations for different clients, they are likely to want to share only one of those presentations – one window of that application. That works great until they go into presentation mode, which as far as the computer is concerned is a different window. So is this a case where the user should decide or is this a case where the application should decide what is shared?

In general the WG believes that the user should have the control, but browsers may have to make special cases for known applications such as PowerPoint so that it just works.

webrtcHacks: How about simulcast?

Dan: At the Seattle meeting there were some strong opinions on how simulcast should work and some proposals. Each time we get to the details the discussions diverge rather than converge. We all want it but we do not agree on how it should be signaled.

Timelines

webrtcHacks: Now for an easier one. When will 1.0 be done?

(laughs)

Dan: I am tempted to give a similar answer as last year.

There are 2 primary specifications. The media capture specification is right now finishing up addressing the comments from its Last Call review which is the wide range review that is required in order to go forward. There aren’t any new features being requested by group members – it’s just cleaning up and fixing.

It probably will be stable within another 6 months.

webrtcHacks: Stable meaning not changing any more?

Dan: Yes – meaning no substantive changes. Only editorial fixes.

Now the WebRTC specification has the problem that new features keep coming in.

webrtcHacks: Just to clarify – Media Capture means the getUserMedia API, and when you say WebRTC, that means the RTCPeerConnection and DataChannel related APIs?

Dan: Yes.

These are features that have come from ORTC. At each meeting we have tried to finalize the list, but new proposals continue to creep in. Within 6 months we will know whether the chairs have been able to hold the line on the most recent list agreed to in Seattle.

webrtcHacks: So is this why it is taking so long?

Dan: Yes. The good news about it is that the features that are going in are the most requested ones from ORTC.

IP Leakage

webrtcHacks: The IP leakage issue was a hot topic on webrtcHacks and elsewhere. Many have labeled it a flaw; others say this behaviour was by design. Can you share the “standards” perspective on this topic and the considerations that were discussed?

Dan: The summary is this – there are 2 problems with IP leakage:

One kind is the leakage of public addresses that the user doesn’t want leaked. This can happen when a user is using a VPN and not all of the traffic is sent over the VPN – a so-called split tunnel VPN. This is an issue if the user doesn’t want their non-VPN public address to be revealed. This is not a WebRTC problem; this is a split tunnel VPN problem. That doesn’t mean that people don’t blame the browser vendors even though it’s not their fault (laughs).

Technically any application running on your machine could do the same thing if you’re running a split tunnel VPN. There are extensions to turn off WebRTC for people who are very concerned about this.

The other kind of leakage is leakage of your local IP address. The reason this concerns some people is that it can be used to map the topology of your local network, say within an enterprise. However, it turns out that applications can use an XMLHttpRequest to do the same thing. Despite that, the browser vendors are working on ways to turn off the reporting of these local addresses.

There will be more details coming up in an upcoming post on my site.
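As a rough illustration of the two kinds of addresses discussed above, the TypeScript sketch below classifies ICE candidate lines by their `typ` field: `host` candidates carry local interface addresses, while `srflx` (server-reflexive) candidates carry the public address seen by a STUN server. `parseCandidate` and `withoutHostCandidates` are hypothetical helper names, the addresses you would feed in are up to the caller, and this is only a sketch of the idea, not how any browser implements its privacy controls.

```typescript
interface ParsedCandidate {
  address: string;
  port: number;
  type: string; // "host", "srflx", "prflx" or "relay"
}

// Parse a candidate line of the form
//   candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
// An optional leading "a=" (as found in SDP) is tolerated.
function parseCandidate(line: string): ParsedCandidate | null {
  const parts = line.trim().replace(/^a=/, "").split(/\s+/);
  if (parts.length < 8) return null;
  if (parts[0].indexOf("candidate:") !== 0) return null;
  if (parts[6] !== "typ") return null;
  return { address: parts[4], port: Number(parts[5]), type: parts[7] };
}

// Keep only well-formed candidates that are not of type "host", i.e. drop
// the ones that advertise a local interface address directly.
// Caveat: the optional raddr field of other candidate types can also
// carry a local address, so this alone is not a complete privacy filter.
function withoutHostCandidates(lines: string[]): string[] {
  return lines.filter((line) => {
    const parsed = parseCandidate(line);
    return parsed !== null && parsed.type !== "host";
  });
}
```

Given one `typ host` line with a private address and one `typ srflx` line with a public address, `withoutHostCandidates` returns only the second, which corresponds to hiding the local address while still revealing the public one.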

What’s Apple Doing?

webrtcHacks: Now the only major browser vendor left is Apple. Can you comment on public participation by Apple?

Dan: It is clear that people from Apple continue to follow the work, but they still don’t contribute.

webrtcHacks: Do you know if they contribute to other WGs more actively?

Dan: Yes, Apple does contribute more actively in other WGs within W3C.

Codecs

webrtcHacks: Anything new with video codecs now that the market has had some time to react to the decision to include both VP8 & H.264 for browsers? How have VP9 vs. H.265 and the Alliance for Open Media (AOM) changed the discussion?

Dan: The gauntlet has been thrown down for the creation of free and open source video codecs. MPEG LA needs to take notice that the media producers and distributors are serious about coming up with lower cost alternatives. This pressure just continually increases. The AOM is a prime example of that.

webrtcHacks: Has the Alliance for Open Media come up in standards discussion? In the past I know there was discussion of just allowing software codecs that could be defined on the fly.

Dan: Codecs still need to be created. The discussions of VP8 vs. H.264 and VP9 vs. H.265 are not really technical discussions. They are all about intellectual property because of the cost of licensing the codecs. The issue is not being able to select a codec – the issue is having a codec that you want to choose.

One API change that has just gone in is being able to choose which of the browser-supported codecs to use.
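A minimal TypeScript sketch of what choosing among the browser-supported codecs can look like as plain logic: given the list of codecs a browser reports and an application's preference order, move the preferred ones to the front and leave the rest in their original order. The `Codec` type and `orderByPreference` function are hypothetical names for illustration, and any MIME-type strings passed in are example values rather than output from a particular browser.

```typescript
// Hypothetical, simplified description of a codec a browser supports.
interface Codec {
  mimeType: string; // for example "video/VP8"
  clockRate: number;
}

// Return a new list with preferred codecs first (in preference order),
// followed by all remaining codecs in their original order.
// MIME types are compared case-insensitively; the input is not modified.
function orderByPreference(supported: Codec[], preferred: string[]): Codec[] {
  const wanted = preferred.map((name) => name.toLowerCase());
  const rank = (codec: Codec): number => {
    const index = wanted.indexOf(codec.mimeType.toLowerCase());
    return index === -1 ? wanted.length : index;
  };
  return supported
    .map((codec, position) => ({ codec, position }))
    // Ties (including all non-preferred codecs) keep their original order.
    .sort((a, b) => rank(a.codec) - rank(b.codec) || a.position - b.position)
    .map((entry) => entry.codec);
}
```

The design choice here is to reorder rather than filter: codecs the application did not ask for stay available as fallbacks, so a preference never leaves the two sides without a common codec.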

Microsoft

webrtcHacks: Anything else to add?

Dan: I think we’re finally on a good track with respect to a path forward for ORTC and WebRTC, and thus the eventual inclusion of Microsoft as a true and complete WebRTC vendor. We just need the feature inflow from ORTC to stop right now to be able to declare victory and move on.

I think this is evidence that the industry really does want this to happen.

I spoke with a number of people who talk to HTML developer groups and they all agree that even today no more than 50% of the developers have heard of WebRTC – still! It is likely that one reason for that is for many developers a technology isn’t real until it is in Internet Explorer or its successor – Edge.

So having Microsoft fully engaged on a plan that we can all agree on now is a good thing for everyone.

{
  “Q&A”:{
    “interviewer”:“chad hart“,
    “interviewee”:“Dan Burnett“
  }
}

One of the problems I have with this “1.0” API is that it looks nowhere near what I have been working with for the last three years and what is currently implemented in browsers.

While I think that some of the changes (promises in particular) are great, and much as I understand the browser vendors’ motivation for adding the objects (satisfying their own technological needs?), I do not see much push from the developer community for the 1.0+objects. This looks like something that could be shimmed on top of real ORTC. But with access to ORTC I would go straight for the real thing…

Standards… I prefer code 🙂