Dealing with multi-party video infrastructure can be pretty daunting. The good news is that the technology, products, and standards that enable economical multiparty video in WebRTC have matured quite a bit in the past few years. One of the key underlying technologies enabling some of this change is called simulcast. Simulcast has been an occasional sub-topic here at webrtcHacks in the past, and it is time we gave it more dedicated attention.

To do that, we asked Oscar Divorra Escoda, Tokbox’s Senior Media Scientist and Media Cloud Engineering Lead, to walk us through it. Tokbox was one of the first to market with an SFU, and Oscar shares some of his learnings below.

{“editor”: “chad hart”}

As WebRTC matures, use cases beyond traditional telephony are becoming more prevalent. Instead of just person-to-person calling, video-telephony and enterprise conferencing, WebRTC is making it possible for any application to add realtime media communications, be it personal real time broadcasting, healthcare, dating, or dozens of others. The heterogeneous mixture of use cases, the growing means of network access, and the mind-boggling diversity of device endpoints pose a very interesting challenge to quality of experience. In this new WebRTC-enabled environment, media optimizations and efficient quality control become more important than ever.

Enter the SFU

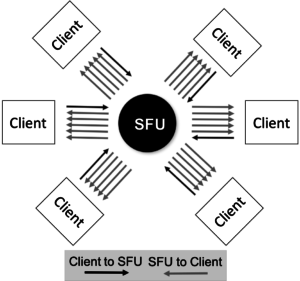

One of the most critical features for most multiparty use cases is the ability to adapt the quality of video for each participant, since each one has their own network conditions, devices, render sizes, etc. The traditional approach to this adaptation problem was transcoding within Multipoint Control Units (MCUs). However, that approach is computationally very expensive and would be a cost barrier to the emergence of many WebRTC uses. Thus, Selective Forwarding Units (SFUs) have become popular as a more economical solution for multi-party video routing.

The first generation of WebRTC SFUs were mostly unable to adapt video bitrate independently for different participants. In most cases SFUs were just forwarding RTCP feedback from all receivers to the sender. As a result, senders would adapt their bitrate to the worst receiver conditions (i.e. lowest estimated bandwidth and worst network QoS), and that single reduced-quality stream would then be forwarded to all participants.
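The effect of forwarding everyone's feedback to the sender can be sketched in a few lines of Python. This is only an illustration: the function name and the bandwidth figures are made up for the example, and a real sender reacts to RTCP feedback over time rather than to a static list.

```python
def sender_target_bitrate_kbps(receiver_estimates_kbps):
    """Bitrate a sender ends up at when every receiver's feedback reaches it.

    Illustrative assumption: the sender simply follows the lowest
    bandwidth estimate it hears about.
    """
    return min(receiver_estimates_kbps)


# Hypothetical per-receiver bandwidth estimates, in kbps.
estimates = {"alice": 2500, "bob": 1800, "carol": 300}

# Everyone receives a stream encoded for carol's connection.
print(sender_target_bitrate_kbps(estimates.values()))  # 300
```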

Making Video Encoding more Flexible

Typically there are three modalities to layer the quality of a video stream:

- resolution,

- frame-rate, and/or

- encoding quality (quantization).

In a multi-party call, rather than sending the same resolution, frame-rate, and encoding quality to every participant, a better approach is to send each participant a stream that varies independently along one or more of these 3 dimensions. Combining layered video encoding with SFU-based multiparty video routing, if done properly, allows that without the heavy cost of MCUs.

In this way, every participant can receive a gracefully degraded version of the original full quality stream depending on their needs and capabilities.

Simulcast

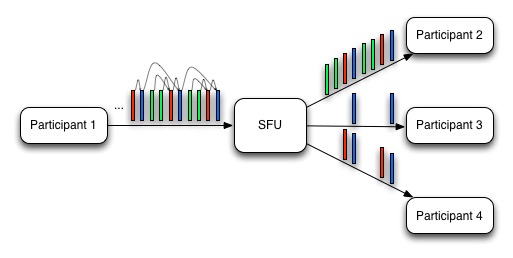

Simulcast describes the approach where you have different independent versions of the same stream with different resolutions sent simultaneously from the same endpoint. Typically, in simulcast/scalability-based platforms one encodes and transmits a set of qualities (resolutions + frame-rates) covering a range from a base minimal quality up to the highest necessary quality for the use case.

With all available streams, the SFU can then decide which quality (i.e. which packets) to forward to each participant, as shown in two examples in the next figures.
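A minimal sketch of that per-receiver decision, assuming the SFU already has a bandwidth estimate for each receiver. The layer names, resolutions, and bitrates below are hypothetical values chosen for the example, not figures from any particular implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulcastLayer:
    name: str
    width: int
    height: int
    bitrate_kbps: int


# Hypothetical simulcast ladder sent by one endpoint.
LAYERS = [
    SimulcastLayer("low", 320, 180, 150),
    SimulcastLayer("medium", 640, 360, 500),
    SimulcastLayer("high", 1280, 720, 1700),
]


def select_layer(available_kbps, layers=LAYERS):
    """Return the highest-bitrate layer that fits the receiver's bandwidth.

    Falls back to the lowest layer when nothing fits.
    """
    ordered = sorted(layers, key=lambda layer: layer.bitrate_kbps)
    chosen = ordered[0]
    for layer in ordered:
        if layer.bitrate_kbps <= available_kbps:
            chosen = layer
    return chosen


print(select_layer(2000).name)  # high
print(select_layer(600).name)   # medium
print(select_layer(100).name)   # low
```

Because the streams are independent, switching a receiver from one layer to another only changes which packets are forwarded; nothing is re-encoded.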

Scalable Video Coding

When qualities are not independent like in simulcast, but depend on each other (a higher quality requires the lower quality in order to decode), we call it, more generally, scalable video. Scalable video usually comes in the form of an embedded bitstream structured in layers, so that more or fewer of them can be selected and/or decoded. Depending on the number of dependent layers picked, one gets different qualities from it. If the stream includes multiple dependent layers that make different resolutions decodable, it is called spatial scalability. When the layers imply a reference dependence among frames that makes different frame rates decodable, this is known as temporal scalability. Finally, if the layers make it possible to decode more or fewer bits of the quantized data (e.g. the predictive residue), this is known as quality scalability.

Scalable Video Coding (SVC) is thus a stream where video information is structured in a way that allows all or part of it to be decoded. Akin to simulcast, the SFU can then decide which quality (i.e. which packets) from a scalable video stream to forward to each participant.

In the specific case of temporal scalability, the SFU can generate different qualities (frame-rates) and adapt to the CPU and/or network conditions of each participant as shown in the figure below. Indeed, quality selection has a direct consequence on the amount of information or bitrate finally sent to each endpoint.
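To make the temporal case concrete, here is a small sketch of how an SFU could thin out a stream by temporal layer. It assumes a three-layer structure in which frames cycle through temporal IDs 0, 2, 1, 2; that pattern and the 30 fps source are assumptions made for the example, and real encoders may use other patterns.

```python
# Assumed repeating temporal-layer pattern for a 3-layer stream.
TEMPORAL_PATTERN = [0, 2, 1, 2]


def temporal_id(frame_index):
    """Temporal layer ID of a frame under the assumed pattern."""
    return TEMPORAL_PATTERN[frame_index % len(TEMPORAL_PATTERN)]


def forward_frames(frame_indices, max_temporal_id):
    """Keep only frames a receiver limited to max_temporal_id can use.

    Frames in higher layers are never referenced by lower ones, so
    dropping them leaves a decodable stream at a lower frame rate.
    """
    return [i for i in frame_indices if temporal_id(i) <= max_temporal_id]


four_seconds = range(120)  # 4 seconds of a 30 fps source

print(len(forward_frames(four_seconds, 2)))  # 120 frames -> 30 fps
print(len(forward_frames(four_seconds, 1)))  # 60 frames  -> 15 fps
print(len(forward_frames(four_seconds, 0)))  # 30 frames  -> 7.5 fps
```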

Flexibility is Good

Layered video encoding schemes were designed to adapt the transmitted and/or decoded data from a stream or file seamlessly to the needs of each use case, without any kind of expensive transcoding. In the context of Real Time Communications, some of the main benefits of the SVC approaches are the following:

- Bandwidth optimization

  - Different bitrate levels from a layered video stream can be chosen to best fit each endpoint’s available bandwidth without transcoding

- CPU optimization

  - Decoding CPU usage can be optimized by controlling the bitrate

  - Decoding and rendering CPU usage can be optimized by controlling the maximum resolution and frames per second transmitted or decoded

- Energy optimization

  - Energy consumption (and battery life) in devices usually improves to a greater or lesser degree depending on which of the optimizations above are chosen

- Better fit of visualization with resource consumption

  - Why should we receive or decode full streams when only a small widget-sized render is necessary for a given use case?

  - One can choose the level of fidelity that best fits a use case along any or all of the available layering dimensions

- It enables smart SFUs to optimize video quality independently per receiver at a low computational cost

SFU Approaches for Simulcast and Scalability

There are different ways to implement simulcast and scalability. Probably, the first successful implementation in the market was the one used by Vidyo and Google Hangouts based on H.264/SVC, where scalability capabilities are fully built into the codec itself. In the early days of WebRTC some companies like AddLive were sending a sort of simulcast with multiple independent streams (high and low quality). Then, some years ago, Chrome added native support for simulcast combined with temporal scalability in WebRTC with VP8 and started to use it in Google Hangouts. At that point, other companies like TokBox figured out how to make use of it.

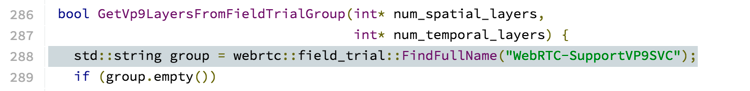

Nowadays you can see more and more people trying to use simulcast in both open source and commercial products. And for those curious, VP9-SVC support appeared recently in the WebRTC codebase under a field trial flag, so maybe we need to update this blog post soon.

Simulcast Isn’t Easy

To be able to benefit from simulcast/scalability support in your WebRTC platform you need at least two things:

- Have simulcast/scalability support in the browsers (or other WebRTC endpoints). In the VP8 simulcast case, this special support is only required on the sender side, which needs to send multiple versions of a video stream. With the proper SFU, the feature is 100% transparent for the receiver, which keeps receiving a single regular VP8 video stream.

- Build the piece of logic in your SFU that selects which quality/layer to forward to each receiver.

Browser & Device Support

Chrome

To enable simulcast/scalability support in Chrome, you have to munge the SDP Offer with the so-called SIM group to configure the number of resolutions to send (maximum of 3 if you are sending video in HD and a maximum of 1 for screen-sharing) and include a special flag in the SDP Answer (x-conference-flag) (see here and here for more information). One of the nicest parts of enabling simulcast in Chrome is that it automatically enables temporal scalability in VP8 as well (more info here).
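The offer-side part of that munging can be sketched roughly as follows. This is a simplified illustration, not Chrome's or any SFU's actual code: the function name and SSRC values are hypothetical, it assumes the video section is the last one in the SDP, and it ignores retransmission (RTX) SSRCs and the answer-side flag entirely.

```python
def add_sim_group(sdp, primary_ssrc, extra_ssrcs):
    """Append extra SSRCs and an `a=ssrc-group:SIM` line to an SDP offer.

    The attributes of the primary SSRC (cname, msid, ...) are copied to
    each extra SSRC so all simulcast streams belong to the same track.
    """
    all_ssrcs = [primary_ssrc, *extra_ssrcs]
    if len(all_ssrcs) > 3:
        raise ValueError("at most 3 simulcast resolutions are supported")

    lines = sdp.strip().splitlines()
    prefix = f"a=ssrc:{primary_ssrc} "
    primary_lines = [line for line in lines if line.startswith(prefix)]
    if not primary_lines:
        raise ValueError(f"SSRC {primary_ssrc} not found in SDP")

    for ssrc in extra_ssrcs:
        for line in primary_lines:
            lines.append(f"a=ssrc:{ssrc} " + line[len(prefix):])

    lines.append("a=ssrc-group:SIM " + " ".join(str(s) for s in all_ssrcs))
    return "\r\n".join(lines) + "\r\n"


# Hypothetical, heavily trimmed video section.
offer = (
    "m=video 9 UDP/TLS/RTP/SAVPF 100\r\n"
    "a=ssrc:1111 cname:abc\r\n"
    "a=ssrc:1111 msid:stream track\r\n"
)
print(add_sim_group(offer, 1111, [2222, 3333]))
```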

Firefox and standard methods

More recently, standards and Firefox are also adding simulcast support by means of a new protocol feature called RID. RID allows the identification of the different simulcast streams in RTP packets. ORTC and the latest WebRTC specification go much further, adding APIs to tune the parameters of the different simulcast qualities (WebRTC spec, ORTC spec).
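As a rough illustration of what RID-based signaling looks like at the SDP level, the sketch below builds the attribute lines for a sender offering several simulcast streams. The helper name and the RID labels are hypothetical, and the attribute syntax assumed here is the one from the IETF RID and SDP simulcast specifications; implementations based on earlier drafts may differ.

```python
def rid_simulcast_lines(rids):
    """Build SDP attribute lines announcing simulcast streams by RID.

    One `a=rid` line per stream, followed by an `a=simulcast` line
    listing them as alternatives sent at the same time.
    """
    lines = [f"a=rid:{rid} send" for rid in rids]
    lines.append("a=simulcast:send " + ";".join(rids))
    return lines


for line in rid_simulcast_lines(["h", "m", "l"]):
    print(line)
# a=rid:h send
# a=rid:m send
# a=rid:l send
# a=simulcast:send h;m;l
```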

Mobile

It is no secret that video simulcast/scalability has an overhead cost in bandwidth and CPU usage at the encoding side. This may be challenging for mobile devices, especially when it comes to encoding multiple resolutions. In these cases, exclusive use of temporal scalability is a very interesting alternative in order to reduce CPU, battery and network consumption.

SFU Considerations

In the SFU, there are many details one needs to figure out to make simulcast/scalability work properly while optimizing rate-control to maximize quality for every video receiver. These include:

- managing RTP packets from different quality sources,

- usage of RTP padding,

- rewriting timestamps,

- measuring network conditions,

- properly terminating RTCP loops, and

- handling accurate bandwidth estimation in the SFU, among others.
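To give a flavor of the packet management and timestamp rewriting items, here is a simplified sketch of how an SFU might splice packets from different simulcast sources into one continuous outgoing stream. The class and field names are hypothetical, and the sketch assumes in-order packets, a switch on a frame boundary, and a fixed timestamp gap of one frame at 30 fps on a 90 kHz clock; a production SFU has to handle loss, reordering, and keyframe requests as well.

```python
from dataclasses import dataclass


@dataclass
class RtpHeader:
    ssrc: int
    seq: int        # 16-bit sequence number
    timestamp: int  # 32-bit RTP timestamp


class StreamRewriter:
    """Presents packets from several simulcast SSRCs as one stream."""

    def __init__(self, out_ssrc):
        self.out_ssrc = out_ssrc
        self.current_ssrc = None
        self.seq_offset = 0
        self.ts_offset = 0
        self.last_out = None

    def rewrite(self, pkt, ts_gap=3000):
        if pkt.ssrc != self.current_ssrc:
            if self.last_out is not None:
                # On a source switch, continue right after the last
                # packet we sent, whatever the new source's numbering.
                self.seq_offset = (self.last_out.seq + 1 - pkt.seq) & 0xFFFF
                self.ts_offset = (
                    self.last_out.timestamp + ts_gap - pkt.timestamp
                ) & 0xFFFFFFFF
            self.current_ssrc = pkt.ssrc
        out = RtpHeader(
            ssrc=self.out_ssrc,
            seq=(pkt.seq + self.seq_offset) & 0xFFFF,
            timestamp=(pkt.timestamp + self.ts_offset) & 0xFFFFFFFF,
        )
        self.last_out = out
        return out


rewriter = StreamRewriter(out_ssrc=9999)
rewriter.rewrite(RtpHeader(1111, 100, 90000))
rewriter.rewrite(RtpHeader(1111, 101, 90000))
switched = rewriter.rewrite(RtpHeader(2222, 5000, 12345))
print(switched.seq, switched.timestamp)  # 102 93000
```

The receiver only ever sees SSRC 9999 with consecutive sequence numbers, which is what keeps the feature transparent on the receiving side.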

Regarding the decision engine in your SFU, quality/layer selection is typically based on the network conditions of every receiver (communicated to the SFU by means of RR and REMB RTCP packets). However, any other service- or application-specific logic can be added too, for example sending a higher video quality for the active speaker in a conference.
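One way such a decision engine could combine the two inputs is sketched below: a receiver's REMB estimate is treated as a budget shared by all the streams it subscribes to, and the active speaker is upgraded first. The layer names and bitrates are hypothetical, and the greedy strategy is just one possible policy, not a description of any specific SFU.

```python
# Hypothetical bitrate of each simulcast layer, in kbps.
LAYER_KBPS = {"low": 150, "medium": 500, "high": 1700}
LAYER_ORDER = ["low", "medium", "high"]


def allocate_layers(remb_kbps, senders, active_speaker):
    """Choose a layer per sender for one receiver, within its REMB budget.

    Everyone starts at the lowest layer (a real SFU might pause video
    if even that does not fit); upgrades go to the active speaker
    first, then to the others in list order.
    """
    allocation = {sender: "low" for sender in senders}
    used = LAYER_KBPS["low"] * len(senders)

    # False sorts before True, so the active speaker comes first.
    priority = sorted(senders, key=lambda sender: sender != active_speaker)
    for sender in priority:
        for layer in LAYER_ORDER[1:]:
            extra = LAYER_KBPS[layer] - LAYER_KBPS[allocation[sender]]
            if used + extra > remb_kbps:
                break
            allocation[sender] = layer
            used += extra
    return allocation


print(allocate_layers(2200, ["ana", "ben", "cy"], active_speaker="ben"))
# {'ana': 'low', 'ben': 'high', 'cy': 'low'}
```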

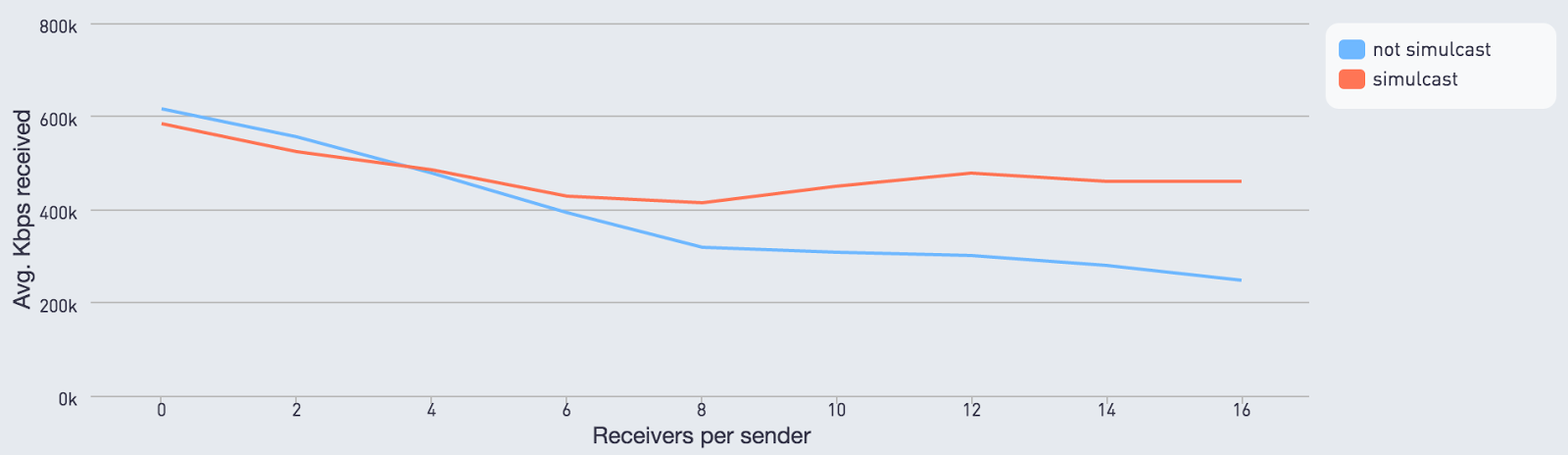

In our implementation the goal was to use simulcast to enable servers to maximize quality for every participant. We wanted to avoid each participant's bitrate being dragged down by other participants in the same session with less bandwidth available. Our expectation was that the improvements would be clearer the more receivers you have per sender.

Simulcast Performance

The improvement you get with simulcast depends a lot on the specific use case (type of devices, networks, resolutions, and number of receivers). The graph below depicts some bitrate data gathered from one of our public demo applications over the last few months. As you can see, the benefit of simulcast is higher the more people you have receiving the video streamed by a sender. This is because in SFU-based video distribution without simulcast, one ends up sending the video stream encoded for the bandwidth of the receiver with the least bandwidth, and the more participants you have the more likely it is to have one under bad network conditions.

By contrast, when using simulcast, the average bitrate received by endpoints is independent of the number of receivers. They can receive different qualities better adapted to each one’s network or HW capacity conditions without affecting each other. The general result is a higher overall average bitrate across the platform's multi-party streams. On the other hand, the overhead of simulcast makes it suboptimal for 1:1 calls and, depending on the use case, it may still not stand out statistically compared to a regular single stream for very small parties such as 3 participants (although it will still have a positive effect on those specific sessions where there is a participant with low resources). In any case, a key requirement for simulcast/scalability to operate properly and provide improvements is enough available upload bandwidth on senders.
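The last point can be made concrete with a back-of-the-envelope calculation. Assuming, purely for illustration, that each receiver independently has a probability p of being on a bad network, the chance that at least one of n receivers drags the session down is 1 - (1 - p)^n. The value p = 0.1 below is made up, not measured data.

```python
def prob_any_constrained(p, n):
    """Probability that at least one of n receivers is on a bad network.

    Assumes each receiver is constrained independently with probability p.
    """
    return 1 - (1 - p) ** n


for n in (1, 2, 5, 10):
    print(n, round(prob_any_constrained(0.1, n), 2))
# 1 0.1
# 2 0.19
# 5 0.41
# 10 0.65
```

Under these assumptions, a 10-receiver session without simulcast is degraded for everyone roughly two times out of three, even though each individual receiver is fine 90% of the time.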

SFUs are the future

It is clear that classic alternatives such as MCUs may have been more popular, and may be better known as a solution to adapt quality for each receiver. However, unless you have a service and business model where it makes sense to pay for the transcoding cost, and you are willing to accept some degradation in quality and delay, probably one of the best options you have to provide a good quality of experience to your users in multiparty sessions is with something similar to what is described in this post.

The authors would like to thank Philipp Hancke, Jose Carlos Pujol and Gustavo García for their comments and contributions.

{“author”: “Oscar Divorra”}

Is there any sample code for an SFU?

There are a few open source projects with SFU capabilities – search for the following on Github:

- Jitsi video bridge

- Janus

- Voluntas

Thanks for your great post! I wonder: if I enable simulcast, is the VP8 encoding also SVC?

If you enable simulcast with VP8 you automatically get temporal scalability (that is one of the two popular types of scalability). But people probably won’t call it SVC; for that you need to use VP9. Read the latest post about VP9 SVC on webrtcHacks.

Would you care to mention some of these modern SFUs, and perhaps an example of one being used somewhere?

Thank you,

Chad mentioned some open source SFUs supporting simulcast in one of the comments. For the commercial ones you can check the OpenTok platform, for example.

very helpful. Thank You.