It turns out people like their smartphone apps, so native mobile is pretty important. For WebRTC that usually means venturing outside of JavaScript into the world of C++/Swift for iOS and Java for Android. You can try hybrid applications (see our post on this), but many modern web applications use JavaScript frameworks like AngularJS, Backbone.js, Ember.js, or others, and those don’t always mesh well with these hybrid app environments.

Can you have it all? Facebook is trying with React, which includes the ReactJS framework and React Native for iOS, and now Android too. There has been a lot of positive fanfare around this new framework, but will it help WebRTC developers? To find out, I asked VoxImplant’s Alexey Aylarov to give us a walkthrough of using React Native for a native iOS app with WebRTC.

{“editor”: “chad hart“}

If you haven’t heard about ReactJS or React Native, I recommend checking them out. They already have a big influence on web development and have started to influence mobile app development, with React Native released for iOS and an Android version just released. It sounds familiar, doesn’t it? We’ve heard the same about WebRTC, since it changes the way web and mobile developers implement real-time communication in their apps. So what is React Native after all?

“React Native enables you to build world-class application experiences on native platforms using a consistent developer experience based on JavaScript and React. The focus of React Native is on developer efficiency across all the platforms you care about — learn once, write anywhere. Facebook uses React Native in multiple production apps and will continue investing in React Native.”

https://facebook.github.io/react-native/

I can simplify it to “one of the best ways for web/JavaScript developers to build native mobile apps using familiar tools like JavaScript, NodeJS, etc.” If you are connected to the WebRTC world (like me), the first idea that comes to mind when you play with React Native is “adding WebRTC there would be a big thing; how can I do it?” The React Native documentation then tells you that there is a way to create your own Native Modules:

“Sometimes an app needs access to platform API, and React Native doesn’t have a corresponding module yet. Maybe you want to reuse some existing Objective-C, Swift or C++ code without having to reimplement it in JavaScript, or write some high performance, multi-threaded code such as for image processing, a database, or any number of advanced extensions.”

That’s exactly what we needed! Our WebRTC module in this case is a low-level library that provides a high-level JavaScript API for React Native developers. Another good thing about React Native is that it’s an open source framework, so you can find a lot of the information you need on GitHub. That is very useful, since React Native is still very young and details about native module development are not easy to find. You can always reach out to folks on Twitter (yes, it works! Look for #reactnative or https://twitter.com/Vjeux) or join their IRC channel to ask your questions, but checking examples on GitHub is a good option.

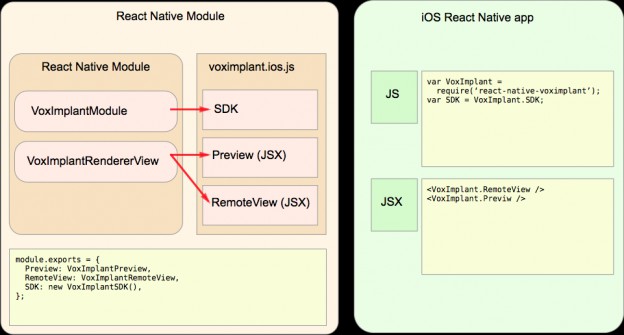

React Native’s module architecture

Native modules can have C/C++, Objective-C, and JavaScript code. This means you can put the native WebRTC libraries, signaling, and other libraries written in C/C++ in the low-level part of your module, implement video element rendering in Objective-C, and offer a JavaScript/JSX API to React Native developers.

Technically, low-level and high-level code is divided in the following way:

- you create an Objective-C class that implements React Native’s RCTBridgeModule protocol and

- use RCT_EXPORT_METHOD to let JavaScript code work with it.

In Objective-C you can interact with the OS and C/C++ libraries, and even create iOS widgets. Ready-to-use native modules can be distributed in a number of different ways, the easiest being an npm package.

WebRTC module API

We’ve been implementing a React Native module for our own platform, so we already knew which of our API functions we would expose to JavaScript. Creating a WebRTC module that is independent of signaling and can be used by any WebRTC developer is a much more complicated problem.

We can divide the process into a few parts:

Integration with WebRTC

Since WebRTC does not dictate how developers discover user names and exchange network connection information, this signaling can be done in multiple ways. Google’s WebRTC implementation is known as libwebrtc. It has a built-in library called libjingle that provides “signaling” functionality.

There are three ways libwebrtc can be used to establish communication:

- libjingle with built-in signaling

  This is the simplest option. Signaling is implemented inside libjingle using the XMPP protocol.

- Your own signaling

  This is more complicated, with signaling handled on the application side. You need to implement the exchange of SDP and ICE candidates yourself and pass the data to WebRTC. One popular method is to use a SIP library for signaling.

- Application-controlled RTC

  For the hardcore, you can avoid using signaling altogether 🙂 This means the application has to take care of all RTP session parameters: RTP/RTCP ports, audio/video codecs, codec parameters, etc. An example of this type of integration can be found in the WebRTC sources, in the WebRTCDemo app for Objective-C (src/talk/app/webrtc).
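With the second option, the application has to carry three kinds of payload between peers: an SDP offer, an SDP answer, and ICE candidates. Below is a minimal sketch of an envelope for those payloads. The `SignalingMessage` struct, the `encode`/`decode` helpers, the `callId` field, and the line-based wire format are all hypothetical illustrations; none of them are part of libwebrtc or of our module.

```cpp
#include <cassert>
#include <string>

// Hypothetical message kinds an application-level signaling channel carries.
enum class MessageType { Offer, Answer, Candidate };

struct SignalingMessage {
    MessageType type;
    std::string callId;   // application-chosen call identifier (assumption)
    std::string payload;  // SDP text, or a serialized ICE candidate
};

inline std::string typeName(MessageType t) {
    switch (t) {
        case MessageType::Offer:     return "offer";
        case MessageType::Answer:    return "answer";
        case MessageType::Candidate: return "candidate";
    }
    return "";
}

// Wire format (made up for this sketch): "<type> <callId>\n<payload>".
// The payload is kept verbatim because SDP is itself multi-line text.
inline std::string encode(const SignalingMessage& m) {
    // The header must stay on one line, so the call id may not contain '\n'.
    assert(m.callId.find('\n') == std::string::npos);
    return typeName(m.type) + " " + m.callId + "\n" + m.payload;
}

// Returns false and leaves `out` untouched if the text is not a valid message.
inline bool decode(const std::string& wire, SignalingMessage& out) {
    const std::string::size_type nl = wire.find('\n');
    if (nl == std::string::npos) return false;

    const std::string header = wire.substr(0, nl);
    const std::string::size_type sp = header.find(' ');
    if (sp == std::string::npos) return false;

    const std::string name = header.substr(0, sp);
    SignalingMessage parsed;
    if (name == "offer")          parsed.type = MessageType::Offer;
    else if (name == "answer")    parsed.type = MessageType::Answer;
    else if (name == "candidate") parsed.type = MessageType::Candidate;
    else return false;

    parsed.callId = header.substr(sp + 1);
    parsed.payload = wire.substr(nl + 1);
    out = parsed;
    return true;
}
```

The encoded string can travel over whatever transport the application already has (WebSocket, SIP, XMPP, and so on); WebRTC itself only needs the decoded SDP and candidate data handed back to the Peer Connection.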

Adding Signaling

We used the 2nd approach in our implementation. Here are some code examples for making/receiving calls (C++):

- First of all, create the Peer Connection factory:

  ```cpp
  peerConnectionFactory = webrtc::CreatePeerConnectionFactory(…);
  ```

- Then create the local stream (this is where we choose between a voice and a video call):

  ```cpp
  localStream = peerConnectionFactory->CreateLocalMediaStream(uniqueLabel);
  localStream->AddTrack(audioTrack);
  if (withVideo)
      localStream->AddTrack(videoTrack);
  ```

- Create the PeerConnection (set the STUN/TURN server list, if you are going to use one):

  ```cpp
  webrtc::PeerConnectionInterface::IceServers servers;
  // Connection-level events (including ICE candidates) arrive on a PeerConnectionObserver.
  webrtc::PeerConnectionObserver* peerConnectionObserver;
  peerConnection = peerConnectionFactory->CreatePeerConnection(servers, …., peerConnectionObserver);
  ```

- Add the local stream to the Peer Connection:

  ```cpp
  peerConnection->AddStream(localStream);
  ```

- Create the SDP:

  ```cpp
  webrtc::CreateSessionDescriptionObserver* sdpObserver;
  ```

  - For an outbound call:

    - Create the SDP offer:

      ```cpp
      peerConnection->CreateOffer(sdpObserver);
      ```

    - Wait for the SDP from the remote peer (via signaling) and pass it to the Peer Connection:

      ```cpp
      peerConnection->SetRemoteDescription(remoteSDP);
      ```

  - For an inbound call, we need to set the remote SDP before creating the local SDP:

    ```cpp
    peerConnection->SetRemoteDescription(remoteSDP);
    peerConnection->CreateAnswer(sdpObserver);
    ```

- Wait for events and send the SDP and ICE candidate info to the remote party (via signaling):

  ```cpp
  // Simplified: in practice these are overrides in your own observer subclasses.
  void webrtc::CreateSessionDescriptionObserver::OnSuccess(webrtc::SessionDescriptionInterface* desc) {
      if (this->outgoing)
          sendOffer();
      else
          sendAnswer();
  }

  // ICE candidates are reported through the PeerConnectionObserver.
  void webrtc::PeerConnectionObserver::OnIceCandidate(const webrtc::IceCandidateInterface* candidate) {
      sendIceCandidateInfo(candidate);
  }
  ```

- Wait for ICE candidate info from the remote peer and, when it arrives, pass it to the Peer Connection:

  ```cpp
  peerConnection->AddIceCandidate(candidate);
  ```

- After a successful ICE exchange (if everything is OK), the connection/call is established.
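The ordering of these steps is easy to get wrong, so here is a small, self-contained sketch of it. It does not link against libwebrtc: `FakePeerConnection` is a hypothetical stand-in that only records the calls it receives, and the helper names (`startOutgoingCall`, `onRemoteAnswer`, `acceptIncomingCall`, `onRemoteCandidate`) are made up for illustration.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for a peer connection: it records calls, handles no media.
struct FakePeerConnection {
    std::vector<std::string> log;
    bool hasRemoteDescription = false;

    void AddStream()   { log.push_back("AddStream"); }
    void CreateOffer() { log.push_back("CreateOffer"); }
    void CreateAnswer() {
        // An answer can only be produced once the remote offer has been applied.
        assert(hasRemoteDescription && "set the remote offer before CreateAnswer");
        log.push_back("CreateAnswer");
    }
    void SetRemoteDescription(const std::string& /*sdp*/) {
        hasRemoteDescription = true;
        log.push_back("SetRemoteDescription");
    }
    void AddIceCandidate(const std::string& /*candidate*/) {
        log.push_back("AddIceCandidate");
    }
};

// Outbound call: add local media, then create the offer.
// The resulting SDP would be sent to the remote party via signaling.
void startOutgoingCall(FakePeerConnection& pc) {
    pc.AddStream();
    pc.CreateOffer();
}

// Outbound call, later: the remote answer arrives via signaling.
void onRemoteAnswer(FakePeerConnection& pc, const std::string& sdp) {
    pc.SetRemoteDescription(sdp);
}

// Inbound call: apply the remote offer first, then create the answer.
void acceptIncomingCall(FakePeerConnection& pc, const std::string& remoteOffer) {
    pc.AddStream();
    pc.SetRemoteDescription(remoteOffer);
    pc.CreateAnswer();
}

// Either direction: remote ICE candidates are passed on as they arrive.
void onRemoteCandidate(FakePeerConnection& pc, const std::string& candidate) {
    pc.AddIceCandidate(candidate);
}
```

In a real module, each helper would call the corresponding libwebrtc method and the signaling layer would invoke `onRemoteAnswer` and `onRemoteCandidate` when messages arrive from the other side.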

Integration with React Native

First of all, we need to create a React Native module (https://facebook.github.io/react-native/docs/native-modules-ios.html), where we describe the API and implement audio/video calling using WebRTC (Objective-C, iOS):

```objc
@interface YourVoipModule () {
}
@end

@implementation YourVoipModule

RCT_EXPORT_MODULE();

RCT_EXPORT_METHOD(createCall:(NSString *)to
                  withVideo:(BOOL)video
                  ResponseCallback:(RCTResponseSenderBlock)callback)
{
  // createVoipCall:withVideo: is your own method that starts the WebRTC call.
  NSString *callId = [self createVoipCall:to withVideo:video];
  callback(@[callId]);
}

@end
```

If we want to support video calling, we will need an additional component to show the local camera (Preview) or the remote video stream (RemoteView):

```objc
@interface YourRendererView : RCTView
@end
```

Initialization and deinitialization can be implemented in the following methods:

```objc
- (void)removeFromSuperview
{
  [videoTrack removeRenderer:self];
  [super removeFromSuperview];
}

- (void)didMoveToSuperview
{
  [super didMoveToSuperview];
  [videoTrack addRenderer:self];
}
```

You can find the code examples on our GitHub page – just swap the references to our signaling with your own. We found examples very useful while developing the module, so hopefully they will help you to understand the whole idea much faster.

Demo

The end result can look like this:

Closing Thoughts

When the WebRTC community started working on the standard, one of the main ideas was to make real-time communications simpler for web developers by giving them a convenient JavaScript API. React Native has a similar goal: it lets web developers build native apps using JavaScript. In our opinion, adding WebRTC to the set of available React Native APIs makes a lot of sense, because web app developers will be able to build their RTC apps for mobile platforms. The team behind React Native has just released it for Android at the Scale conference, so we will update this article or write a new one about building an Android-compatible module as soon as we know all the details.

{“author”, “Alexey Aylarov”}

Nice article! This would speed up the process of building mobile versions of existing web apps. Thanks Alexey!

Hi, I am expecting the article about Android. When will you publish it?

If I write in React Native, can I create an SDK so the app can be embedded into other apps?

Hi,

I have implemented WebRTC for video recording. I am facing an issue: when I start the camera for video recording, the device’s flashlight turns off.

isn’t there a react native webRTC library?