Many in the industry, including myself, reference Amazon’s Kindle Fire HDX Mayday button as using WebRTC or at least as something that is WebRTC-like. The Kindle Fire HDX is not available everywhere, so if you have not seen Mayday in action, Android Authority has a good video of the feature here.

First let’s think about how we tell if an app is using WebRTC. If the app is a webpage it is fairly simple – just look for the use of the getUserMedia and RTCPeerConnection APIs in the site’s JavaScript using your browser’s developer console. It is a little more complex if WebRTC is embedded inside a native application. We could start with a debate about “What makes an app a WebRTC app?” If it uses part of the WebRTC source code and not the W3C APIs, does it count? Since this blog is for developers, not philosophers, let’s start by figuring out what Mayday actually does by looking at a Wireshark trace.
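The browser-side check can also be scripted if you have saved a page’s JavaScript to disk. This is only a rough sketch in Python: the function name and the list of identifiers (including the vendor-prefixed variants browsers have shipped) are my own choices for illustration, and a plain text match says nothing about whether the code path is ever executed.

```python
import re

# Identifiers that hint at WebRTC use in a page's JavaScript. The prefixed
# names are included as an assumption about what older browsers exposed.
WEBRTC_API_PATTERN = re.compile(
    r"\b(getUserMedia|webkitGetUserMedia|mozGetUserMedia|"
    r"RTCPeerConnection|webkitRTCPeerConnection|mozRTCPeerConnection)\b"
)


def find_webrtc_apis(js_source: str) -> set:
    """Return the set of WebRTC-related identifiers found in js_source."""
    return set(WEBRTC_API_PATTERN.findall(js_source))


if __name__ == "__main__":
    sample = "var pc = new webkitRTCPeerConnection(config);"
    print(find_webrtc_apis(sample))
```

An empty result does not prove the absence of WebRTC, since scripts can be minified, loaded later, or hidden inside a native app, which is exactly why the rest of this post looks at the wire instead.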

Cracking Mayday

We started by considering 2 main approaches:

- Root the Kindle and search for signs of the WebRTC code

- Use Wireshark and examine what the app is sending over the wire to see if it does WebRTC-like things

The first approach is tricky and may not yield any useful information depending on how the app was built. The second approach is relatively simple, but yields less information since WebRTC streams should be encrypted and therefore difficult to decode unless you are the NSA.

I convinced an old friend of mine who recently purchased an Amazon Kindle Fire HDX to help investigate the Mayday button. We looked into rooting it for approach #1 but he was not willing to take the risk of bricking his brand new device. Therefore we went with approach #2.

You can find the complete capture here. He also took a video to help synchronize what happens on the screen with the trace (we removed the audio to help keep my friend anonymous). If you don’t know Wireshark and want to follow along you should probably look at some tutorials first.

What are we looking for?

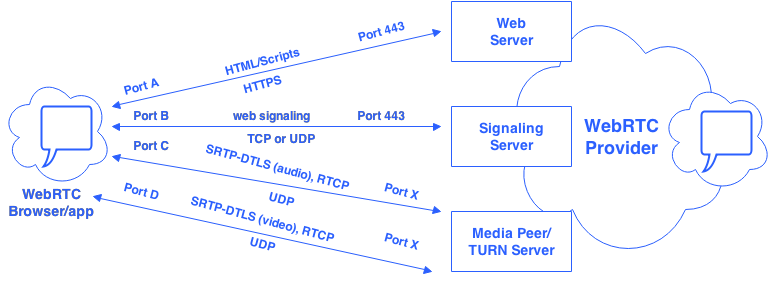

As mentioned above, WebRTC uses encryption so we shouldn’t be able to see the signaling contents or hear/see the RTP media. However, there are a bunch of things that happen over the wire in a typical WebRTC session, such as a Chrome-to-Chrome browser session:

- Some kind of HTTP/Javascript pushed from a webserver – this may not exist in an app

- Some kind of signaling to a signaling server – this could be Victor’s RFC7118 or any other web-based signaling

- ICE negotiations with STUN & TURN – other VoIP technologies are much more likely to use hosted NAT traversal from an SBC

- SRTP – 4 SRTP streams communicating to a media peer or TURN server (assuming bi-directional audio & video) – one in each direction for voice and one in each direction for video; this should be the majority of traffic

- WebRTC mandates DTLS for SRTP, so we should see DTLS negotiations on the same ports as our media

- RTP Bundling – the WebRTC RTP usage draft requires this, stating “Implementations are REQUIRED to support transport of all RTP media streams, independent of media type, in a single RTP session according to [I-D.ietf-avtcore-multi-media-rtp-session]”, meaning each of the above RTP streams should be on the same IP address, port, and transport (UDP or TCP)

- RTCP multiplexing – RTP and RTCP traffic should share the same ports according to the latest WebRTC RTP specs: “support for multiplexing RTP data packets and RTCP control packets on a single port for each RTP session is REQUIRED, as specified in [RFC5761]”

If you put this all together, this should look something like:

Resolving Our Topology

I am not a Wireshark decoding expert (maybe I am now), but I noticed a few things on my initial scans:

- Lots of STUN and TURN traffic

- A couple Amazon servers involved in RTP traffic

- HTML, CSS and Javascript content being pushed to the Kindle

- TLS negotiations to encrypt traffic

It took me several iterations to figure out the topology, some of which I will walk through below. Wireshark has several tools to help identify the entities in the capture, including:

- Statistics->Conversations

- Statistics->Endpoints

- Telephony->RTP

These tools show various stats to help figure out what is going on.

Here is a quick summary of what I found by digging deeper into the conversations identified above:

| User | User Port | Network | Network Port | Direction | Contents |
| --- | --- | --- | --- | --- | --- |
| Kindle | 46011 | Kconnect-us.amazon.com | 443 | <-> | HTTPS |
| Kindle | 40787 | Edge Server | 443 | <-> | TLS |
| Kindle | 45563 | Edge Server | 29548 | <-> | RTP-G.711 |
| Kindle | 45563 | Edge Server | 29549 | -> | RTCP |
| Kindle | 59522 | TURN Server | 3478 | <-> | RTP Dynamic 100 & 116, RTCP |
| Kindle | ? | ? | ? | <- | Screen control |

Keep in mind we actually took a couple of separate traces – I am only referring to the final clean one we did in this post but we noticed the same patterns in both.

Signaling

It is hard to hide any traffic that may be happening in the background unrelated to the Mayday button, but the trace reveals a regular flow of traffic from www.amazon.com and Kconnect-us.amazon.com. Kconnect-us.amazon.com has variable body sizes with a minimum size of 60 bytes. I am guessing this is some kind of Kindle control channel that is always running.

There is also a bi-directional TLS stream that is set up between the Kindle and the edge server at the beginning of the call.

RTP Streams

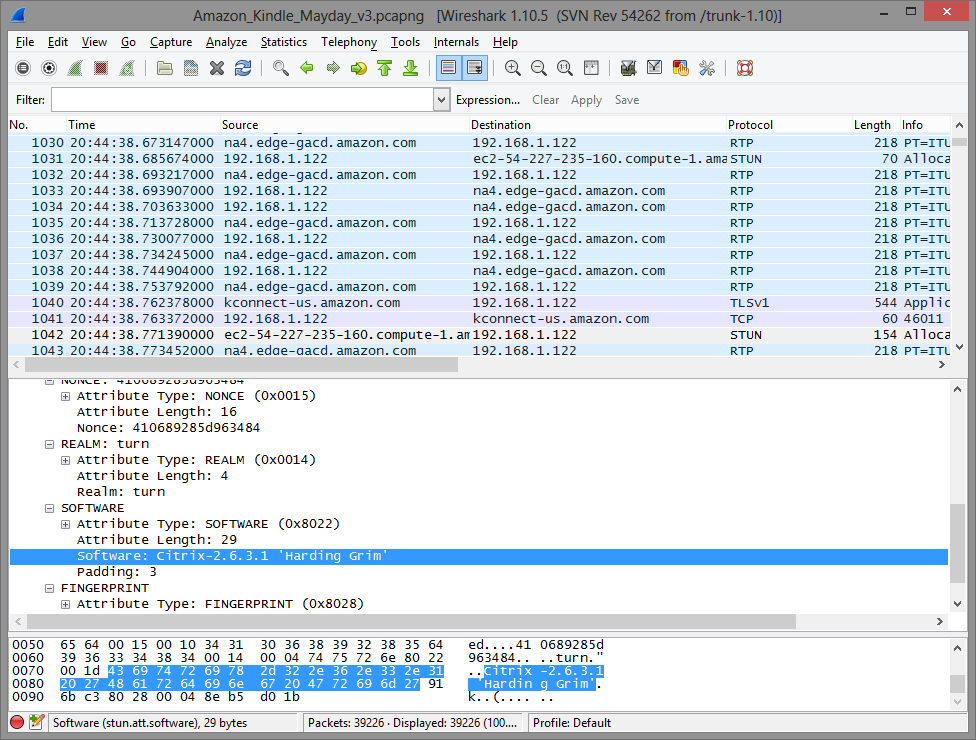

Let’s start with RTP since that should be the vast majority of traffic. We know audio was sent and received from the agent and that the agent was sending a video stream, so I expected at least 3 RTP streams – probably 4 since we also know the agent can see the screen and a common approach is to encapsulate this with a video codec. There is also a lot of UDP traffic going through this TURN server that is RTP. I did a few tricks to get all the RTP streams to show correctly:

- Setting protocol preferences for RTP to “Try to decode RTP outside of conversations” and treating RTP version 0 packets as STUN packets

- Manually decoding all traffic on port 29548 as RTP

- Turning off RTP-EVENT decoding – I am not sure why, but Wireshark was showing a lot of RFC2833 events coming from the TURN server; the full range of telephony tones were clearly not being sent to the Kindle so I turned this decoding off and this filled in the missing packets in the stream
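The “RTP version 0” preference makes more sense once you look at how packets sharing one UDP port are told apart. The top two bits of an RTP packet carry the version (2 for real RTP), while a STUN message always starts with two zero bits, so an “RTP version 0” packet is really STUN. Here is a minimal sketch in Python, assuming the first-byte ranges from RFC 7983; the function name is made up for illustration and this is not how Wireshark implements its dissectors.

```python
def classify_first_byte(packet: bytes) -> str:
    """Guess the protocol of a UDP payload from its first byte.

    The ranges follow the demultiplexing scheme in RFC 7983. This is only a
    first guess; a real dissector validates the rest of the packet as well.
    """
    if not packet:
        return "empty"
    first = packet[0]
    if first <= 3:
        return "STUN"              # top two bits are zero
    if 20 <= first <= 63:
        return "DTLS"              # DTLS record content types
    if 64 <= first <= 79:
        return "TURN ChannelData"  # TURN channel numbers start at 0x4000
    if 128 <= first <= 191:
        return "RTP/RTCP"          # version field (top two bits) equals 2
    return "unknown"


if __name__ == "__main__":
    print(classify_first_byte(bytes([0x80, 0x00])))  # an RTP-style first byte
```

The same check is a quick way to look for DTLS handshakes on a media port: if nothing in the 20 to 63 range ever shows up there, DTLS-SRTP is unlikely to be in use on that flow.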

After doing this I could see 4 distinct RTP streams under Telephony->RTP->Show All Streams:

Clicking on the Analyze button reveals more useful information. There are 2 G.711 streams – one from our Kindle to an “edge” server labeled na4.edge-gacd.amazon.com and one in the reverse direction. These each last 188 seconds, which corresponds to our call time.

The other two are labeled RTPType-100 and RTPType-116. Payload types from 96 through 127 are dynamic payload types. These are typically used for video, so this should be no surprise. The Analyze button indicates that these streams last 183 seconds. If you watch the video you will notice there is a 4-5 second lag before the video starts, so this makes sense.
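To make the payload type labels concrete, here is a small sketch of reading the fixed 12-byte RTP header (RFC 3550) and describing the payload type. The class and function names are hypothetical, and the lookup table only covers the two G.711 variants; treat it as an illustration rather than a full RTP parser.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int


# Static assignments from the RTP audio/video profile (only G.711 listed here).
STATIC_TYPES = {0: "PCMU (G.711 mu-law)", 8: "PCMA (G.711 A-law)"}


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the fixed part of an RTP header; extensions and CSRCs are ignored."""
    if len(packet) < 12:
        raise ValueError("RTP header needs at least 12 bytes")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    # b0: version in the top two bits; b1: marker bit plus 7-bit payload type.
    return RtpHeader(b0 >> 6, bool(b1 & 0x80), b1 & 0x7F, seq, ts, ssrc)


def describe_payload_type(payload_type: int) -> str:
    """Give a rough label for a 7-bit RTP payload type."""
    if payload_type in STATIC_TYPES:
        return STATIC_TYPES[payload_type]
    if 96 <= payload_type <= 127:
        return "dynamic"
    return "other static or reserved"
```

Because the payload type field is only 7 bits wide, its value can never exceed 127. A dynamic type on its own does not say which codec is in use; that mapping is normally agreed in the signaling, which we cannot read here.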

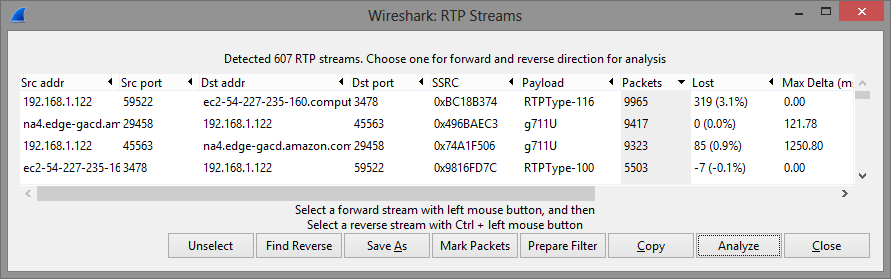

Mayday uses rfc5766-turn-server

As you remember from Reid’s posts on NAT Traversal and Emil’s Trickle ICE review, WebRTC sessions use the ICE framework with STUN and TURN to traverse NATs. These packets show a lot of useful information.

Wireshark labels STUN and TURN packets the same, but if you search on “TURN” it will bring you to Frame # 1042. Examining this packet revealed it was the Citrix-2.6.3.1 ‘Harding Grim’ TURN server:

A quick search on this reveals a header file containing this as a #define value in the source code for the popular rfc5766-turn-server by Oleg Moskalenko (see here for our Q&A with Oleg). Oleg – rfc5766-turn-server is awesome, and you deserve some credit for being part of Amazon’s Mayday service!
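The server name comes from the STUN SOFTWARE attribute, which is sent in the clear. If you would rather pull it out of raw packet bytes than click through Wireshark, a minimal sketch looks like the following. It assumes the message layout from RFC 5389 (20-byte header, magic cookie 0x2112A442, attributes padded to 4 bytes, SOFTWARE attribute type 0x8022); the function name is my own.

```python
import struct
from typing import Optional

STUN_MAGIC_COOKIE = 0x2112A442
ATTR_SOFTWARE = 0x8022


def extract_stun_software(message: bytes) -> Optional[str]:
    """Return the SOFTWARE attribute of a STUN/TURN message, or None."""
    if len(message) < 20:
        return None
    _msg_type, msg_len, cookie = struct.unpack("!HHI", message[:8])
    if cookie != STUN_MAGIC_COOKIE:
        return None  # not an RFC 5389 style STUN message
    offset = 20  # attributes start after the header and transaction ID
    end = min(20 + msg_len, len(message))
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", message[offset:offset + 4])
        value = message[offset + 4:offset + 4 + attr_len]
        if attr_type == ATTR_SOFTWARE:
            return value.decode("utf-8", errors="replace")
        # Each attribute value is padded to a multiple of 4 bytes.
        offset += 4 + attr_len + (-attr_len % 4)
    return None
```

Feeding this the UDP payload of a TURN response from the capture should return the same string Wireshark displays, assuming the message is not wrapped inside another encapsulation.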

RTCP multiplexing

As mentioned above, WebRTC multiplexes RTCP on the same port as RTP. Wireshark does not have great handling of this. Sorting our RTP stream list (you can’t see the many individual RTCP packets in the graphic above) reveals the 2 video streams use RTCP multiplexing – look for all the “Reserved for RTCP Conflict Avoidance” packets.
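The “Reserved for RTCP Conflict Avoidance” label is a side effect of decoding an RTCP packet as if it were RTP. RFC 5761 keeps RTP payload types 64 to 95 unused so that the second byte of a packet is enough to tell the two apart. A rough sketch of that rule, with hypothetical function names:

```python
def is_rtcp(packet: bytes) -> bool:
    """Apply the RFC 5761 rule for RTP and RTCP sharing one port.

    RTP payload types 64-95 are not used when multiplexing, so a second byte
    whose low seven bits fall in that range is treated as RTCP.
    """
    if len(packet) < 2 or packet[0] >> 6 != 2:
        return False  # too short, or not RTP/RTCP version 2
    return 64 <= (packet[1] & 0x7F) <= 95


def rtcp_type_as_rtp_payload_type(rtcp_packet_type: int) -> int:
    """Payload type an RTP decoder would report for a given RTCP packet type."""
    return rtcp_packet_type & 0x7F  # the top bit is read as the RTP marker bit


if __name__ == "__main__":
    # RTCP sender reports use packet type 200, receiver reports use 201.
    print(rtcp_type_as_rtp_payload_type(200), rtcp_type_as_rtp_payload_type(201))
```

So a sender report (type 200) read as RTP appears as payload type 72 with the marker bit set, which is presumably why those packets end up listed under the conflict-avoidance label instead of as RTCP.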

RTCP on the 2 G.711 streams needed a bit more tweaking to show correctly. When it is not multiplexed, RTCP is usually sent on the port one higher than the RTP port, and it looks like that is the situation with ports 29548 and 29549. I did a “decode as” for RTCP on 29549 to show this.

It looks as if RTCP is multiplexed with RTP on the Kindle end, so there was not much I could do there to force Wireshark to resolve all these packets as one RTCP stream.

Screen control?

The agent drew on the screen between 20:46:26 and 20:46:31. I could not find any obvious candidate flows for this – many possibilities exist. If you watch the video, you can see the drawing appears in sections – not as flowing brush strokes. This implies the image may be sent in several bundles as opposed to a continuous stream of individual pixels to draw.

What does it all mean?

Why are the video streams going through a TURN server but not the audio? Why aren’t all the streams going to the same location? Why isn’t RTCP multiplexing used on the edge server when it is on the Kindle? What is controlling the signaling?

This would all have me confused, except I have a customer with a very similar topology. This customer is using WebRTC to video enable an existing contact center. They already had an SBC to:

- control SIP signaling,

- manage the audio streams,

- help with NAT traversal, and

- connect to the existing contact center infrastructure.

WebRTC is just being used to add video between the end points and the agents there. I believe that is probably the case here too:

Amazon runs a massive call center based on normal audio telephony. The infrastructure for agent Work Force Optimization (WFO) is critical for skills-based routing, performance monitoring, and logging. This infrastructure is generally tightly integrated with the existing audio systems. It is reasonable to think that Amazon would not want to rebuild all of this from scratch for WebRTC and would prefer to leverage as much existing infrastructure as possible. Connecting the audio to these existing systems and using WebRTC to add video would allow them to do that. This also explains the lag between the video and audio. WFO systems are usually triggered off the start of audio – it would make sense that Amazon would start that as soon as possible.

So is this WebRTC?

So let’s refer back to our original list to see what WebRTC-like traits are present:

- Web-based signaling – very plausible but very hard to confirm without decrypting

- ICE negotiations with STUN & TURN – yes, lots of that along with an rfc5766-turn-server implementation

- SRTP – check

- DTLS-SRTP – nope; it is possible Amazon is using SDES. This would not be surprising since, as Victor covered in this post, DTLS was only mandated last August

- RTP bundle – not applicable here; the audio and video are going to separate places so there is not really an opportunity to combine them in a single IP:Port

- RTCP multiplexing – yep, everywhere but the Edge server/SBC which is not surprising

I am not going to impose a definition of WebRTC on you here, but I think there is a very high probability that this is WebRTC for the video with a lower probability on the audio. I think the more interesting, and perhaps unexpected, result is the layering of what looks very much like a WebRTC video flow on top of a more traditional VoIP audio connection. I was also pleasantly surprised to see the use of Oleg’s rfc5766-turn-server. It will be interesting to follow how/if this service changes as WebRTC matures.

Got Wireshark skillz? Please take a look at the trace yourself and comment below.

I’ll be at Enterprise Connect in Orlando next week if you happen to be there.

{“author”, “chad“}

That’s a very interesting investigation… Lots of “forensic” information. Thanks, Victor, for the analysis !

Oleg

Oleg, it’s great to see real-world services using your software — as I mentioned several times, I have a number of Tier1 customers also playing with your server to run some WebRTC-related trials 😉

For the record, I’ve tried to help but this has been mostly Chad’s (evening/night) work!

Sorry, I meant, “thanks, Chad”, of course !

Oleg

Google did their own forensics and confirms our Mayday findings and adds there is high likelihood that VP8 is used:

“Well, it’s true. We did our own forensics a few months back and determined that Mayday is completely WebRTC-based, using the Chrome WebRTC stack, the G.711 audio codec, and most likely, the VP8 video codec. There are a number of hints in the Wireshark trace that give this away, even though the media is encrypted with SDES-SRTP; we are pretty sure the video is VP8 because the observed packetization exactly matches how WebRTC handles VP8.”

https://plus.google.com/103619602351433955946/posts/d7W3VuYJcFJ

Now we should try to learn what’s happening at the signaling level

Is the WebRTC Screen sharing used for sharing of the users screen?

Yes, the trace indicates a video stream is being sent from the Kindle to the network. Since the service does not utilize a video camera on the device this stream must be for encoding the screen capture.

Does anyone know of a company that can implement a turnkey “Mayday” type service. We do a lot of work in schools helping teachers implement technology and having a way for our coaches to provide on-demand support to teachers would be very interesting. Thanks!

I met with Oracle salesmen recently. They told me Amazon is using the Oracle Session Controller for their Mayday service. Were they lying to me?

They did, at least at the time when this blog post was written.

See https://youtu.be/Gr7PJAyMJdU?t=2m1s — this shows the STUN software attribute from one of the packets in the dump. Googling for this ‘net-net os-e’ leads to an Oracle session border controller manual.