It is always exciting to have not one, but two WebRTC gurus teaming up on a WebRTC analysis. This time, regulars Gustavo and Fippo take a look at Cloudflare’s WebRTC implementation, how they use the new WebRTC-based streaming standards – WHIP and WHEP – and Cloudflare’s bold pronouncement that they can be a replacement for open source solutions like mediasoup and Janus.

Read on for the latest in our blackbox reverse engineering series below.

{“editor”, “chad hart“}

Sidenote: speaking of Fippo, mediasoup, and Janus – see all three and hear about WHIP/WHEP at the Kranky Geek WebRTC show, Nov 17th.

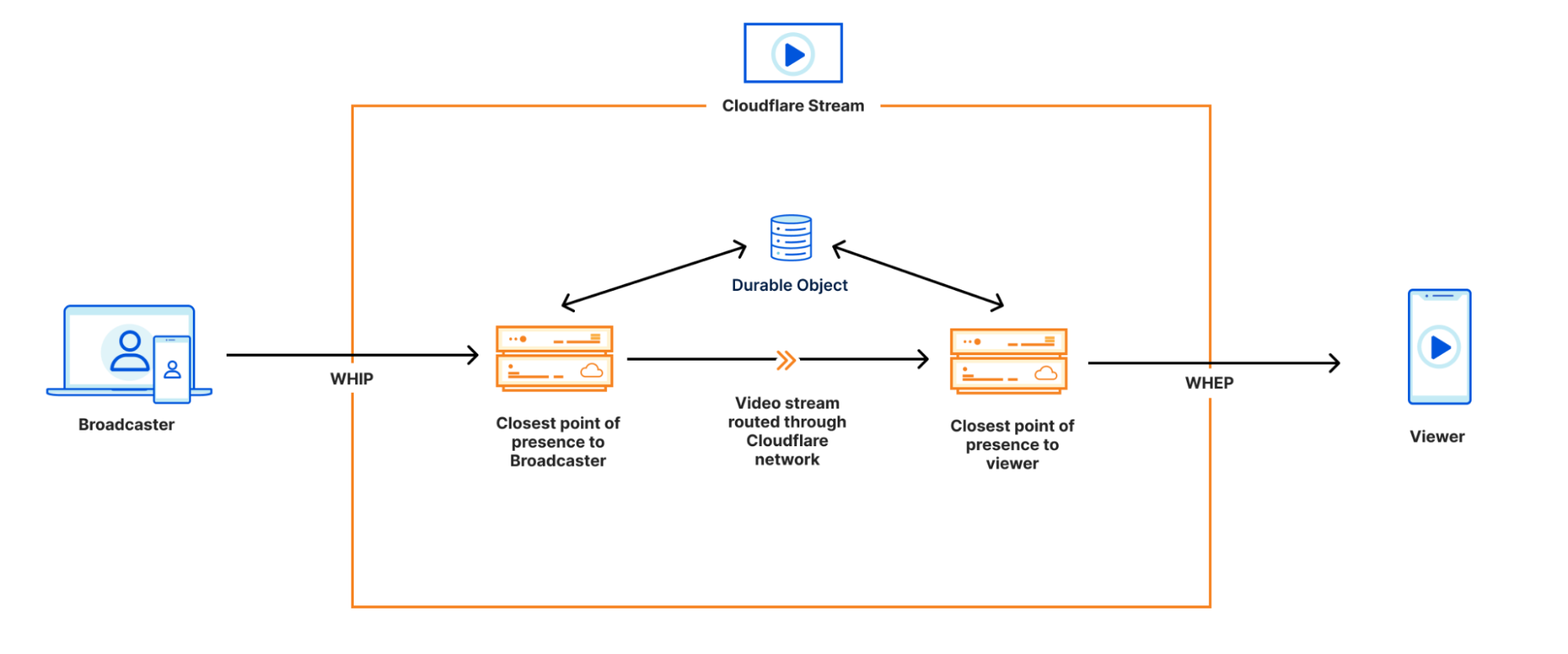

When one of the world’s largest Content Delivery Network (CDN) providers gets into the WebRTC game, it gets our attention. About a year ago, Cloudflare started with a TURN service. Last month they added a WebRTC live streaming option to their Stream service, alongside Cloudflare Calls, which provides multiparty audio and video capabilities. Cloudflare tries to differentiate itself by leveraging its global network and Anycast routing for low latency and resiliency.

We really wanted to test the RTC service. While we were waiting, Renan Dincer, one of their system engineers, mentioned on Twitter that the service is powered by the same infrastructure and technology as Cloudflare Stream. So, we made a basic sample app and grabbed a webrtc-internals dump and Wireshark capture to analyze how they use WebRTC technology to power this new infrastructure and look for interesting ideas that we can reuse…

Summary

| Area | Observation |
|---|---|
| PeerConnection | Single PeerConnection (but we only looked at the streaming use case with a single stream) |
| ICE / TURN | Only STUN servers and UDP candidates |
| SRTP encryption | SRTP with the AES CM cipher suite |
| Audio transmission | Opus with inband FEC but without DTX; no other audio codecs are supported in the SDP |
| Video transmission | VP8 without simulcast |
| DataChannels | Not used |
| RTP header extensions | Only transport-cc |
| RTCP | transport-wide-cc bandwidth estimation, NACK without RTX retransmissions |
| Interfaces | WHIP & WHEP with an open-source SDK |

Review

For the review, we checked out both WHIP and WHEP on Cloudflare Stream:

- WebRTC-HTTP Ingress Protocol (WHIP) for sending a WebRTC stream INTO Cloudflare’s network as defined by IETF draft-ietf-wish-whip

- WebRTC-HTTP Egress Protocol (WHEP) for receiving a WebRTC stream FROM Cloudflare’s network as defined in IETF draft-murillo-whep

These are two new specifications that define simple HTTP-based signaling interactions for WebRTC media streams, aimed at the real-time streaming/broadcasting industry. While these specs define WebRTC’s signaling and some media parameters, many details are still left up to the implementation. In addition, WHIP and WHEP likely won’t be used for Cloudflare Calls, so we looked for insights into what their multi-party conferencing implementation might look like.
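At their core, both drafts boil down to one HTTP exchange: the client POSTs its SDP offer with `Content-Type: application/sdp`, and the server replies with `201 Created`, the SDP answer in the body, and a `Location` header pointing at the session resource, which the client later DELETEs to end the session. The TypeScript sketch below illustrates that exchange under those assumptions. `postOffer`, `WhipSession` and the `FetchLike` type are hypothetical names, the optional bearer token follows the WHIP draft's authentication option, and the fetch function is injected so the sketch also runs outside a browser. It is not Cloudflare’s WHIPClient.js.

```typescript
// Minimal shape of the parts of fetch() this sketch relies on (hypothetical type).
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body?: string },
) => Promise<{
  status: number;
  headers: { get(name: string): string | null };
  text(): Promise<string>;
}>;

interface WhipSession {
  answerSdp: string; // SDP answer returned by the server
  resourceUrl: string | null; // Location header; DELETE this URL to tear down the session
}

// Sketch of the WHIP/WHEP offer/answer exchange: POST the offer, expect 201 Created.
async function postOffer(
  endpoint: string,
  offerSdp: string,
  fetchFn: FetchLike,
  bearerToken?: string,
): Promise<WhipSession> {
  const headers: Record<string, string> = { "Content-Type": "application/sdp" };
  if (bearerToken) {
    headers["Authorization"] = `Bearer ${bearerToken}`;
  }
  const res = await fetchFn(endpoint, { method: "POST", headers, body: offerSdp });
  if (res.status !== 201) {
    throw new Error(`unexpected status ${res.status}`);
  }
  return { answerSdp: await res.text(), resourceUrl: res.headers.get("Location") };
}
```

In a browser, `window.fetch` can be passed as `fetchFn` and the offer taken from `pc.createOffer()`; note that the `Location` value may be relative to the endpoint URL.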

Code & Developer Experience

In terms of developer experience, using Cloudflare Stream was very good. Their dashboards and documentation are very polished and we got support and answers from their community in the forums and Discord channels when we needed them.

Cloudflare provides a very simple JS SDK (WHIPClient.js and WHEPClient.js). It is just 100 lines of code, which customers can modify and tune, for example to choose the video source and resolution.

You can see their sample on stackblitz:

See their instructions here on where to get the credentials to use that.

PeerConnection

First, we looked at the peer connections created. As expected for a WebRTC service, Cloudflare Stream creates an RTCPeerConnection for sending video and a separate one for receiving video. We will need to check back to see whether Calls uses one or multiple peer connections for each participant.

The offerer is always the browser, both for sending and receiving. Using that approach for receiving a stream you want to view is not that common, but not unreasonable. This is probably related to the use of WHEP as described in the section below.

There is no special configuration passed to the PeerConnection constructor. All the settings are the default ones and we will review the iceServers configuration in the next section.

| Configuration: “https://cloudflare-templates-nd6fvy–8788.local.webcontainer.io/, { iceServers: [stun:stun.cloudflare.com:3478], iceTransportPolicy: all, bundlePolicy: max-bundle, rtcpMuxPolicy: require, iceCandidatePoolSize: 0, sdpSemantics: “unified-plan”, extmapAllowMixed: true }” |

ICE / TURN connectivity

Cloudflare configures STUN but not TURN servers. Given that Cloudflare servers have a public IP, that is good enough for NAT traversal. However, it is not enough for networks that block UDP or non-TLS traffic. There are other services like Google Meet that do not include TURN servers, but in those cases, their media servers include ICE-TCP and pseudo-TLS ICE candidates for restrictive firewalls. Those techniques are not available in this case.

Their servers use the ice-lite variation of ICE, like most of today’s SFUs. You can see the candidates in the answer:

```
a=candidate:2796553419 1 udp 2130706431 172.65.29.121 1473 typ host
a=candidate:2796553419 2 udp 2130706431 172.65.29.121 1473 typ host
```

We see UDP candidates only for IPv4 on port 1473 (plus separate candidates for RTCP, marked as component 2, which are unnecessary when rtcp-mux is negotiated). As mentioned before, there are no ICE-TCP or (nonstandard) ssl-tcp candidates.
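To make those fields concrete, here is a small TypeScript sketch that splits a candidate line into its mandatory RFC 5245 fields (foundation, component, transport, priority, address, port, type). `parseCandidate` and `rtpCandidates` are hypothetical helpers; optional attributes after the type (such as `raddr`/`rport`) are ignored. Filtering on component 1 drops the redundant RTCP candidates once rtcp-mux is in use.

```typescript
interface IceCandidate {
  foundation: string;
  component: number; // 1 = RTP, 2 = RTCP (redundant when rtcp-mux is negotiated)
  transport: string;
  priority: number;
  address: string;
  port: number;
  type: string; // host, srflx, prflx or relay
}

// Parses the mandatory fields of "a=candidate:..." (or "candidate:...") lines.
function parseCandidate(line: string): IceCandidate {
  const fields = line.trim().replace(/^(a=)?candidate:/, "").split(/\s+/);
  if (fields.length < 8 || fields[6] !== "typ") {
    throw new Error(`not a candidate line: ${line}`);
  }
  return {
    foundation: fields[0],
    component: Number(fields[1]),
    transport: fields[2].toLowerCase(),
    priority: Number(fields[3]),
    address: fields[4],
    port: Number(fields[5]),
    type: fields[7],
  };
}

// With rtcp-mux, only the component-1 (RTP) candidates are needed.
function rtpCandidates(lines: string[]): IceCandidate[] {
  return lines.map(parseCandidate).filter((c) => c.component === 1);
}
```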

The offer is posted after a one-second timeout (or as soon as gathering finishes), together with whichever local ICE candidates have been gathered by then. We found that the list of candidates must include a public IP (i.e., a server-reflexive candidate); otherwise, the offer is rejected. This is somewhat surprising since local ICE candidates aren’t typically necessary when talking to a server, which discovers the client’s addresses as peer-reflexive candidates. We have seen this requirement to “whitelist” source addresses in the past, however.
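The client-side flow we observed (wait up to one second for ICE gathering, then post the offer) and the server’s apparent requirement for a server-reflexive candidate can be sketched in TypeScript as follows. `waitForGathering`, `hasServerReflexive` and the `GatheringTarget` interface are hypothetical names; in a browser, an `RTCPeerConnection` should satisfy the interface. This illustrates the observed behavior and is not Cloudflare’s code.

```typescript
// The subset of RTCPeerConnection this sketch needs (hypothetical interface).
interface GatheringTarget {
  iceGatheringState: string;
  addEventListener(type: "icegatheringstatechange", listener: () => void): void;
  removeEventListener(type: "icegatheringstatechange", listener: () => void): void;
}

// Resolves when ICE gathering completes or after timeoutMs, whichever comes first.
function waitForGathering(pc: GatheringTarget, timeoutMs = 1000): Promise<void> {
  return new Promise<void>((resolve) => {
    if (pc.iceGatheringState === "complete") {
      resolve();
      return;
    }
    const finish = () => {
      clearTimeout(timer);
      pc.removeEventListener("icegatheringstatechange", onChange);
      resolve();
    };
    const onChange = () => {
      if (pc.iceGatheringState === "complete") finish();
    };
    const timer = setTimeout(finish, timeoutMs);
    pc.addEventListener("icegatheringstatechange", onChange);
  });
}

// True if any candidate line in the SDP is server-reflexive (i.e. carries a public IP).
function hasServerReflexive(sdp: string): boolean {
  return sdp
    .split(/\r?\n/)
    .some((line) => line.startsWith("a=candidate:") && / typ srflx( |$)/.test(line));
}
```

A browser client would call `await waitForGathering(pc)` and could check `hasServerReflexive(pc.localDescription.sdp)` before posting, since an offer without a public address appears to be rejected.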

The answer from the server always contains a single candidate address, and in some cases it is not the correct one. Specifically, if the offer contained both IPv4 and IPv6 candidates, the server would return a single IPv6 candidate, even when that IPv6 address was link-local and not useful for internet communication, making the connection impossible.
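One possible client-side workaround, which we have not tested against Cloudflare, is to drop link-local IPv6 candidates (fe80::/10) from the offer before posting it. The TypeScript sketch below is hypothetical: `isLinkLocalIPv6` and `stripLinkLocalCandidates` are illustrative names, and the sketch simply removes matching candidate lines from the SDP text.

```typescript
// fe80::/10 covers addresses whose first group starts with fe8, fe9, fea or feb.
function isLinkLocalIPv6(address: string): boolean {
  return /^fe[89ab][0-9a-f]:/i.test(address);
}

// Removes a=candidate lines whose connection address is IPv6 link-local.
function stripLinkLocalCandidates(sdp: string): string {
  const kept = sdp.split("\r\n").filter((line) => {
    if (!line.startsWith("a=candidate:")) return true;
    // Field order: foundation, component, transport, priority, address, port, "typ", type
    const address = line.split(" ")[4];
    return !(address !== undefined && isLinkLocalIPv6(address));
  });
  return kept.join("\r\n");
}
```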

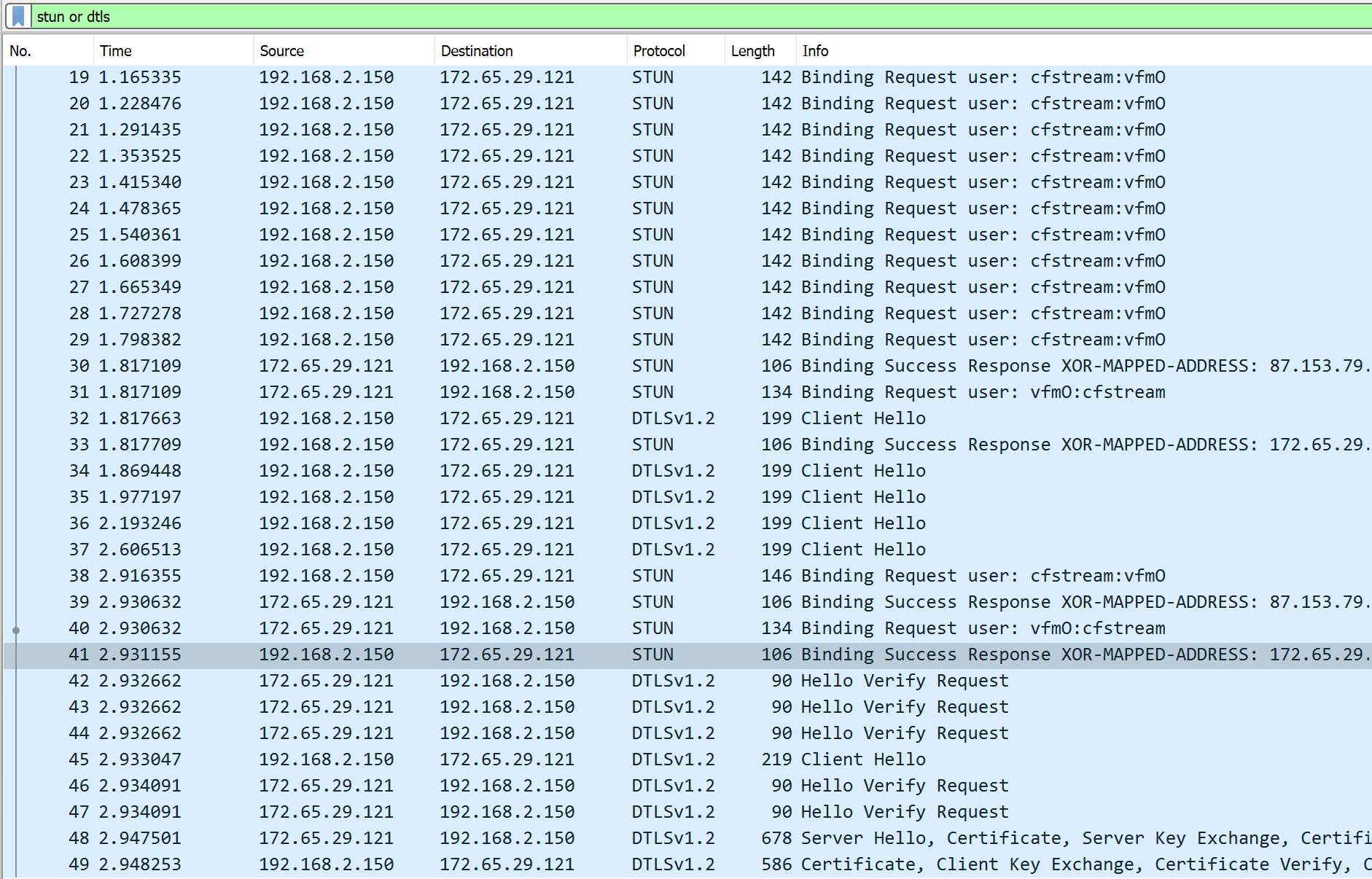

Looking at the Wireshark dumps, we see a surprisingly long time pass between the first STUN request and the first STUN response – 1.8 seconds in the screenshot below.

In other tests, it was shorter, but still 600ms long.

After that, the DTLS packets do not get an immediate response, requiring multiple attempts. This ultimately leads to a call setup time of almost three seconds – way above the global averages Fippo has measured previously (800ms for the complete handshake, 200ms for the DTLS handshake). Given Cloudflare’s extensive network, we expected this to be well below that average.

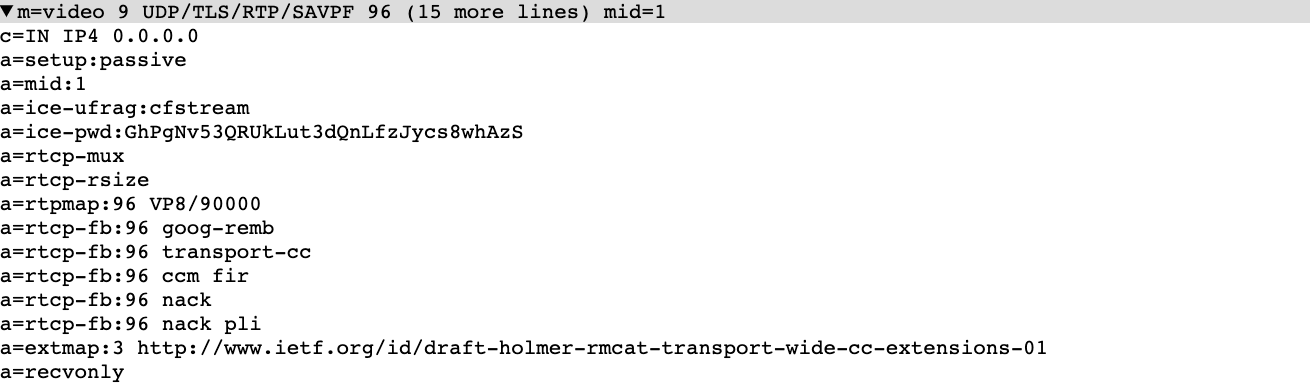

ICE credentials and demultiplexing implications

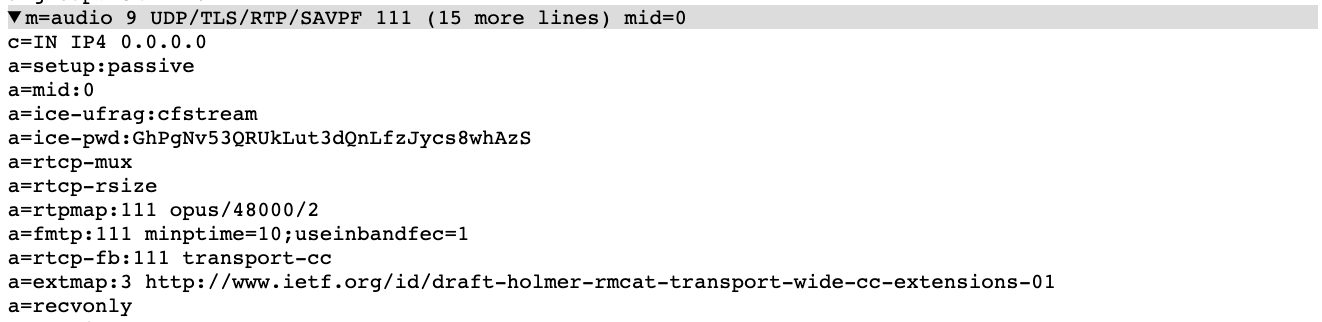

Cloudflare uses a hardcoded ice-ufrag in the SDP:

```
a=ice-ufrag:cfstream
a=ice-pwd:GhPgNv53QRUkLut3dQnLfzJycs8whAzS
```

After digging some more, we noticed that the same ice-pwd is used to generate the message integrity in both sessions. More concerning, the same ice-pwd was still being used a couple of days later. While it is hard to come up with a way to exploit this, it is bad practice, since the ice-pwd is used for the STUN short-term credential authentication of the messages sent by ICE (described in https://www.rfc-editor.org/rfc/rfc5389#section-10.1).
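To see why the password matters: with ICE’s short-term credentials, the key for the STUN MESSAGE-INTEGRITY attribute is simply the ice-pwd (after SASLprep), fed into HMAC-SHA1 (RFC 5389, Section 15.4). A reused ice-pwd therefore means the same key across sessions. The TypeScript sketch below uses Node’s `node:crypto`; `stunIntegrity` is a hypothetical helper and deliberately simplified, since a real STUN stack hashes the header and the attributes preceding MESSAGE-INTEGRITY with the length field adjusted.

```typescript
import { createHmac } from "node:crypto";

// Simplified MESSAGE-INTEGRITY: HMAC-SHA1 keyed with the ice-pwd (short-term credentials).
// SASLprep is skipped here because it leaves alphanumeric passwords like these unchanged.
function stunIntegrity(messageBytes: Uint8Array, icePwd: string): Buffer {
  return createHmac("sha1", icePwd).update(messageBytes).digest();
}
```

Because the key depends only on the password, generating a fresh random ice-pwd per session gives every session its own key.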

We reported this privately and a fix is underway, although it might take a while.

Other than this, the 32 characters in the ice-pwd attribute gave us a hint at the implementation. RFC 5245 only requires this attribute to have at least 22 characters (https://www.rfc-editor.org/rfc/rfc5245#section-15.4).

One library that uses 32 is Pion’s ICE implementation: https://github.com/pion/ice/blob/28d40c9a2700166dc42d1a2e399fd19424e701ea/rand.go#L11
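For comparison, the TypeScript sketch below generates per-session ICE credentials from the RFC 5245 `ice-char` alphabet (letters, digits, `+` and `/`) and checks the length limits of Section 15.4 (ufrag 4 to 256 characters, password 22 to 256). The function names and the default lengths of 16 and 32 are arbitrary choices for illustration; `randomInt` from Node’s `node:crypto` is used to avoid modulo bias.

```typescript
import { randomInt } from "node:crypto";

// ice-char = ALPHA / DIGIT / "+" / "/" (RFC 5245)
const ICE_CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Random string of ice-chars drawn uniformly with a CSPRNG.
function randomIceString(length: number): string {
  let out = "";
  for (let i = 0; i < length; i++) {
    out += ICE_CHARS[randomInt(ICE_CHARS.length)];
  }
  return out;
}

// Alphabet and length checks (min 4 for ufrag, 22 for password, max 256 for both).
function isValidIceCredential(value: string, minLength: number): boolean {
  return value.length >= minLength && value.length <= 256 && /^[A-Za-z0-9+\/]+$/.test(value);
}

// Fresh credentials for every session (hypothetical defaults of 16 and 32 characters).
function newIceCredentials(ufragLength = 16, pwdLength = 32): { ufrag: string; pwd: string } {
  return { ufrag: randomIceString(ufragLength), pwd: randomIceString(pwdLength) };
}
```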

Audio and Video getUserMedia constraints

These are not very relevant, given that audio and video acquisition is handled inside the very simple WHIP JS library, which each application can change, and it may be different in the upcoming Calls product.

Audio coding adjustments

SDP audio answers from the server are not very complicated:

Stream only responds with Opus as the audio codec, specifying the use of inband forward error correction (FEC) but not discontinuous transmission (DTX), and using transport-cc for bandwidth estimation.
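The FEC and DTX settings live in the Opus `a=fmtp` line as `useinbandfec=1` and `usedtx=1` (RFC 7587); both default to off. The TypeScript sketch below parses such a line; `parseFmtp` and `opusFeatures` are hypothetical helpers, and the example line with payload type 111 is illustrative rather than copied from Cloudflare’s answer.

```typescript
// Parses "a=fmtp:<pt> key=value;key=value" into the payload type and a parameter map.
function parseFmtp(line: string): { payloadType: number; params: Map<string, string> } {
  const match = /^a=fmtp:(\d+) (.*)$/.exec(line.trim());
  if (!match) throw new Error(`not an fmtp line: ${line}`);
  const params = new Map<string, string>();
  for (const part of match[2].split(";")) {
    const [key, value = ""] = part.trim().split("=");
    if (key) params.set(key.toLowerCase(), value);
  }
  return { payloadType: Number(match[1]), params };
}

// Inband FEC is on only with useinbandfec=1, DTX only with usedtx=1 (both default to 0).
function opusFeatures(line: string): { fec: boolean; dtx: boolean } {
  const { params } = parseFmtp(line);
  return { fec: params.get("useinbandfec") === "1", dtx: params.get("usedtx") === "1" };
}
```

For example, `opusFeatures("a=fmtp:111 minptime=10;useinbandfec=1")` reports FEC on and DTX off, matching what Stream negotiates.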

We also observe the usage of the legacy mslabel and label ssrc-specific attributes. These have been removed from Chrome a few releases back (after being a “TODO remove” for almost a decade). The standard a=msid attribute is not used; instead, only the legacy ssrc-specific variant of it is used. This is not going to work well in Firefox…
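This matters because receivers map incoming tracks to streams via the msid. The TypeScript sketch below reads a media section, preferring the standard `a=msid:<stream> <track>` line (RFC 8830) and falling back to the legacy `a=ssrc:<ssrc> msid:<stream> <track>` form seen in Cloudflare’s answers. `findMsid` is a hypothetical helper; a receiver that only implemented the first branch would find no stream association at all in such an answer.

```typescript
interface Msid {
  streamId: string;
  trackId: string; // empty if the optional track id is absent
  source: "a=msid" | "a=ssrc";
}

// Returns the stream/track association of one media section, or null if none is present.
function findMsid(mediaSection: string): Msid | null {
  const lines = mediaSection.split(/\r?\n/);
  // Standard form: a=msid:<stream-id> [<track-id>]
  for (const line of lines) {
    const m = /^a=msid:(\S+)(?: (\S+))?/.exec(line);
    if (m) return { streamId: m[1], trackId: m[2] ?? "", source: "a=msid" };
  }
  // Legacy ssrc-specific form: a=ssrc:<ssrc> msid:<stream-id> [<track-id>]
  for (const line of lines) {
    const m = /^a=ssrc:\d+ msid:(\S+)(?: (\S+))?/.exec(line);
    if (m) return { streamId: m[1], trackId: m[2] ?? "", source: "a=ssrc" };
  }
  return null;
}
```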

Surprisingly, the cname attribute, which should be the same for audio and video from the same source, is the same as the track identifier. Even though WebRTC generally does not synchronize based on the CNAME, that is a bit odd.

Video Coding

For video, we see only VP8 being negotiated, with the standard options.

Notably missing is support for RTX, the dedicated retransmission format. While RTX was not supported in Firefox for a long time, it is generally available across browsers these days and is “cleaner” than resending the original packets as-is.
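The difference lies in the packet format. With RTX (RFC 4588), a retransmission travels on its own SSRC and payload type, and its payload starts with the two-byte original sequence number (OSN), which keeps retransmissions separate from the original stream, for example in RTCP receiver statistics. The TypeScript sketch below builds and unwraps such a payload; the function names are hypothetical and RTP header handling is left out.

```typescript
// RTX payload = 2-byte original sequence number (network byte order) + original payload.
function buildRtxPayload(originalSeq: number, originalPayload: Uint8Array): Uint8Array {
  const out = new Uint8Array(2 + originalPayload.length);
  out[0] = (originalSeq >> 8) & 0xff;
  out[1] = originalSeq & 0xff;
  out.set(originalPayload, 2);
  return out;
}

// Receiver side: recover the original sequence number and payload.
function parseRtxPayload(rtxPayload: Uint8Array): { originalSeq: number; payload: Uint8Array } {
  if (rtxPayload.length < 2) throw new Error("RTX payload too short");
  return {
    originalSeq: (rtxPayload[0] << 8) | rtxPayload[1],
    payload: rtxPayload.subarray(2),
  };
}
```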

We don’t see simulcast enabled but that might be different for the Calls product – time will tell…

Security

Cloudflare relies on the standard WebRTC encryption based on DTLS for the key negotiation and SRTP for the media encryption.

The server negotiates a “passive” setup attribute, which means the browser is the DTLS client. This is typically done to let the server choose the more advanced (and less CPU-heavy) GCM SRTP cipher suites; however, we still see the AES_CM_128_HMAC_SHA1_80 cipher.
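In DTLS-SRTP, the client lists the SRTP protection profiles it supports and the server picks one (RFC 5764), so whoever is the DTLS server decides whether a GCM profile (RFC 7714) gets used. The TypeScript sketch below models the setup-attribute role mapping and a server that ranks profiles by its own preference; the helper names and preference lists are hypothetical.

```typescript
type Setup = "active" | "passive" | "actpass";

// The endpoint whose role is "active" initiates the handshake, i.e. is the DTLS client.
function offererIsDtlsClient(answerSetup: Setup): boolean {
  if (answerSetup === "actpass") throw new Error("an answer must not use actpass");
  return answerSetup === "passive";
}

// The DTLS server selects a profile from the client's list; here it ranks by its own preference.
function chooseSrtpProfile(serverPreference: string[], clientOffered: string[]): string | null {
  return serverPreference.find((p) => clientOffered.includes(p)) ?? null;
}
```

With the browser offering both `SRTP_AEAD_AES_128_GCM` and `SRTP_AES128_CM_HMAC_SHA1_80`, a server that prefers GCM would pick it, so ending up with AES_CM suggests the server side ranks it first or does not support GCM.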

RTP header extensions

The small number of header extensions is noticeable. In this case, Cloudflare only used the minimum required to get bandwidth estimation working (the transport-wide-cc header extension).
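The transport-wide-cc extension carries a 16-bit, transport-wide sequence number that the receiver echoes back in its feedback. The TypeScript sketch below walks an RFC 8285 one-byte-header extension block (the `0xBEDE` profile) and returns the value for a given extension ID; `readOneByteExtension` and `transportSequenceNumber` are hypothetical helpers, and the ID is whatever the SDP `a=extmap` line negotiated.

```typescript
// Finds the value of extension `id` in an RFC 8285 one-byte-header block
// (starting at the 0xBEDE profile word). Returns null if the ID is absent.
function readOneByteExtension(block: Uint8Array, id: number): Uint8Array | null {
  if (block.length < 4 || block[0] !== 0xbe || block[1] !== 0xde) return null;
  const end = Math.min(block.length, 4 + ((block[2] << 8) | block[3]) * 4); // length in 32-bit words
  let pos = 4;
  while (pos < end) {
    const extId = block[pos] >> 4;
    if (extId === 0) {
      pos += 1; // padding byte
      continue;
    }
    if (extId === 15) break; // reserved ID: stop parsing
    const len = (block[pos] & 0x0f) + 1; // L field stores length - 1
    if (pos + 1 + len > end) break;
    if (extId === id) return block.subarray(pos + 1, pos + 1 + len);
    pos += 1 + len;
  }
  return null;
}

// transport-wide-cc: two-byte, big-endian transport sequence number.
function transportSequenceNumber(block: Uint8Array, id: number): number | null {
  const value = readOneByteExtension(block, id);
  return value && value.length === 2 ? (value[0] << 8) | value[1] : null;
}
```

For example, with the extension negotiated as ID 3, the block `be de 00 01 31 01 2c 00` yields transport sequence number 300.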

The ssrc-audio-level extension, which is commonly used for active speaker detection, is not used. However, this is a streaming use case where it isn’t needed. We expect to see it in Calls for multiparty calling when that is available.

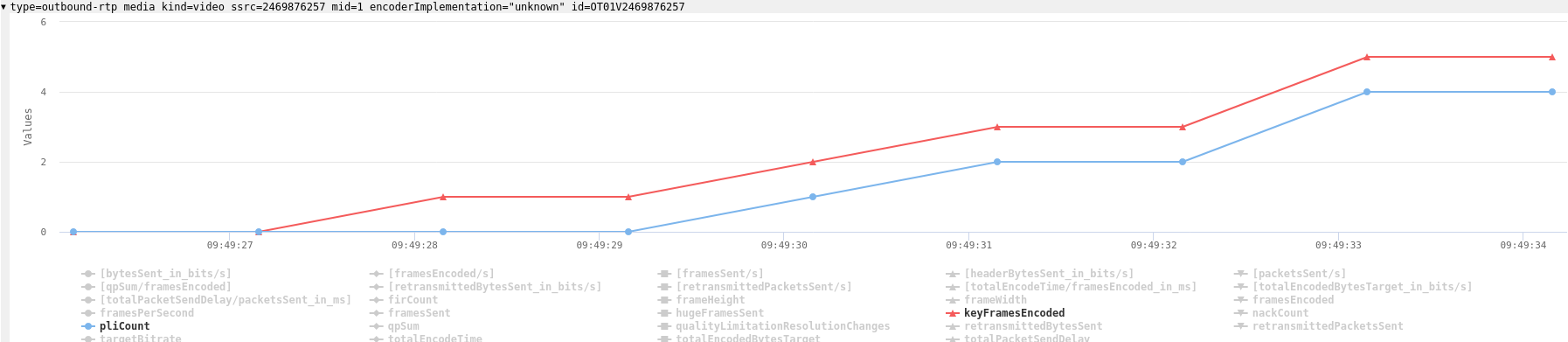

RTCP

In terms of RTCP, there is nothing major to note. Stream uses transport-wide congestion control messages for bandwidth estimation, NACKs for video retransmissions, and PLI for keyframe requests. The service is not currently using RTX for retransmissions; instead, it resends the original packets with the same SSRC as the original transmission.
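Each generic NACK (RFC 4585, Section 6.2.1) carries a packet ID (PID) plus a 16-bit bitmask of following lost packets (BLP); without RTX, the sender simply resends those sequence numbers on the original SSRC. The TypeScript sketch below expands one PID/BLP pair into the list of requested sequence numbers; `expandNack` is a hypothetical name.

```typescript
// Bit i of BLP (least significant bit = 0) reports loss of sequence number PID + i + 1.
function expandNack(pid: number, blp: number): number[] {
  const lost = [pid & 0xffff];
  for (let i = 0; i < 16; i++) {
    if (blp & (1 << i)) lost.push((pid + i + 1) & 0xffff); // sequence numbers wrap at 2^16
  }
  return lost;
}
```

For example, PID 100 with BLP `0b101` requests packets 100, 101 and 103.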

The rtcp-rsize extension is negotiated for audio even though this has no impact. In general, all WebRTC implementations deal quite well with the behavior specified in RFC 5506 https://datatracker.ietf.org/doc/html/rfc5506 even when not negotiated.

We see a periodic picture loss indication (PLI) and a resulting keyframe every three seconds.

We will need to see if that also happens in the Calls product. Static keyframe rates typically lead to lower video quality compared to on-demand keyframes.

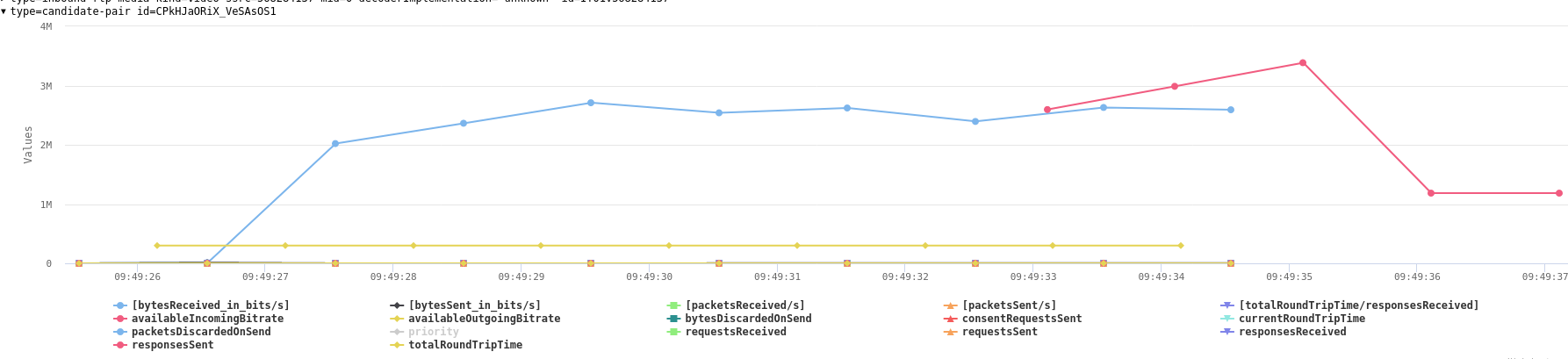

The inbound stream shows Receiver Estimated Maximum Bitrate (REMB) messages – and consequently availableIncomingBitrate stats on the candidate-pair.

This makes no sense since that stream is not going to send anything.

RTP

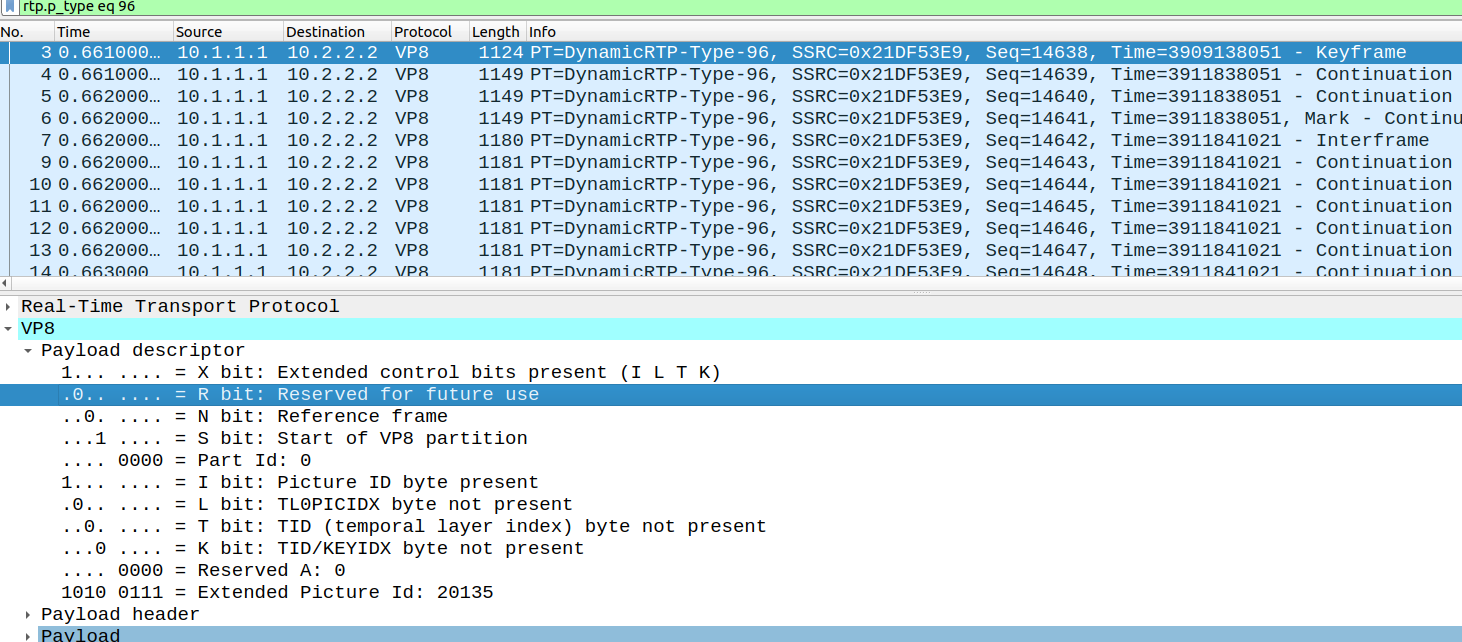

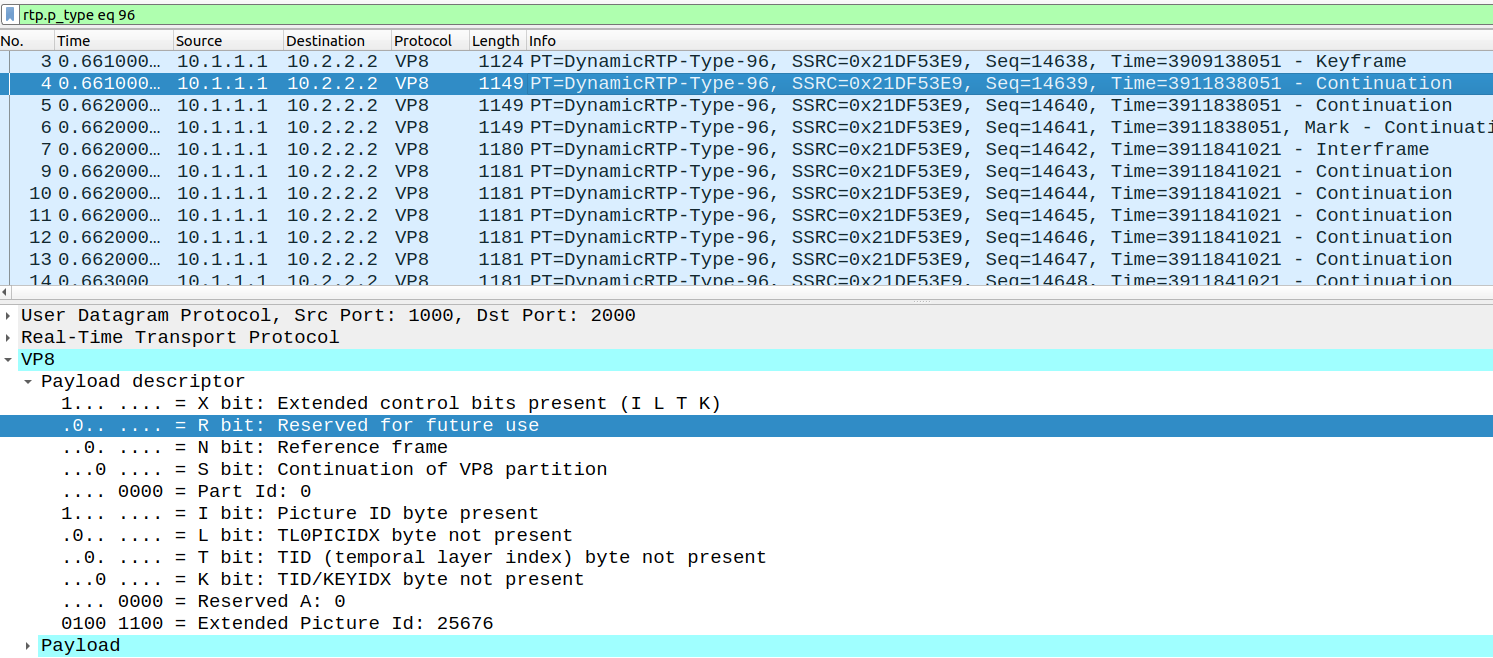

Since we think debugging VP8 is fun, we also took a quick look at the VP8 payload being sent and received in Wireshark. We found a surprising oddity – likely a bug – in the picture ids (see also this PSA: VP8 Simulcast in the SFU).

For the very first frame, the VP8 picture ID in the first packet differs from the one in the rest of the packets. The picture ID is 20135 in the first packet:

In the next packet of the same frame, it changes to 25676, and this number is incremented from then on.

It is rather surprising that libwebrtc accepts this behavior at all, since it is clearly a bug somewhere in the system.
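For reference, the picture ID sits in the VP8 payload descriptor (RFC 7741, Section 4.2): if the X bit is set, an extension byte follows, and if its I bit is set, the picture ID follows as either 7 bits or, when the M bit is set, 15 bits. Values like 20135 and 25676 need the 15-bit form. The TypeScript sketch below extracts it, so a test tool could check that all packets of a frame carry the same value; `vp8PictureId` is a hypothetical helper.

```typescript
// Returns the VP8 picture ID from an RTP payload, or null if it is not present.
function vp8PictureId(payload: Uint8Array): number | null {
  if (payload.length < 1 || (payload[0] & 0x80) === 0) return null; // X bit clear: no extension byte
  if (payload.length < 2 || (payload[1] & 0x80) === 0) return null; // I bit clear: no picture ID
  if (payload.length < 3) return null;
  const first = payload[2];
  if ((first & 0x80) === 0) return first & 0x7f; // M = 0: 7-bit picture ID
  if (payload.length < 4) return null;
  return ((first & 0x7f) << 8) | payload[3]; // M = 1: 15-bit picture ID
}
```

For example, a payload starting with the bytes `90 80 ce a7` decodes to picture ID 20135, and `90 80 e4 4c` to 25676.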

Networking and Anycast

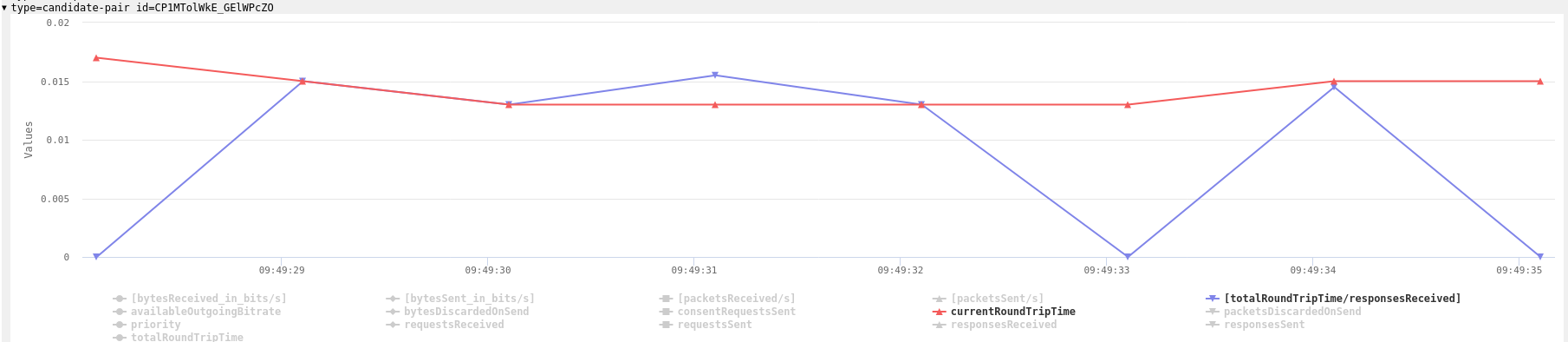

The core value proposition from Cloudflare is the use of their network infrastructure to provide very low latencies, which helps improve the user’s RTC experience. Media servers are exposed behind an anycast IP that is routed to the region with the lowest latency. Lower latencies should result in higher quality, and anycast helps with network-level redundancy. We tested the latency with a basic ping and by looking at the STUN round-trip times, and got values from 5ms to 15ms depending on the country in Europe:

This is in line with what we see from Google services like Stadia and Meet or NVIDIA’s GeforceNow.

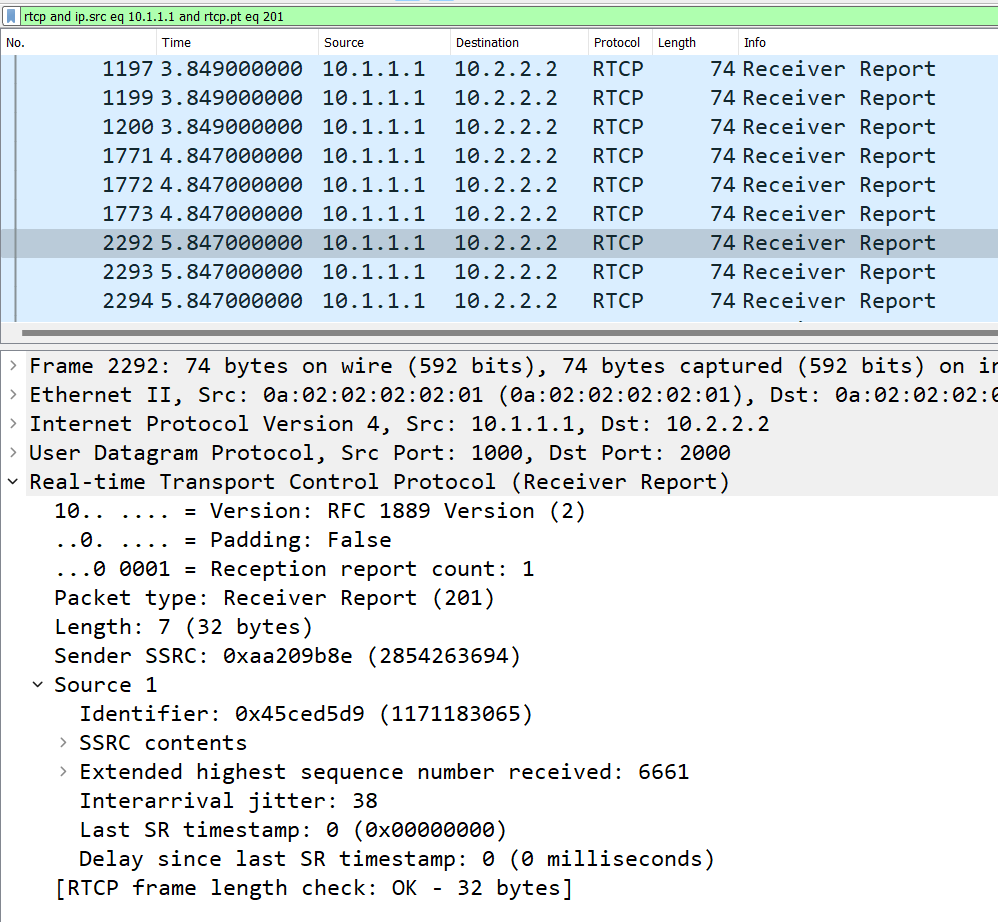

The roundTripTime values are missing from the remote-inbound-rtp statistics. Looking at the Wireshark PCAPs, it turned out that the “last sender report” (LSR) timestamp in the RTCP receiver reports, as well as the “delay since last sender report” (DLSR) field, is always set to zero. That breaks the round trip time calculation:
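The sender computes the round trip time from a receiver report as RTT = A - LSR - DLSR, where A is the report’s arrival time in the same compact NTP format (the middle 32 bits of the 64-bit NTP timestamp, in units of 1/65536 s), as described in RFC 3550, Section 6.4.1. An LSR of zero means “no sender report received”, so no RTT can be derived. The TypeScript sketch below shows the arithmetic; `rttMs` is a hypothetical helper.

```typescript
// RTT from an RTCP receiver report block (RFC 3550, Section 6.4.1).
// All inputs are compact NTP values: 1/65536 s units in 32 bits that wrap around.
function rttMs(arrivalCompactNtp: number, lsr: number, dlsr: number): number | null {
  if (lsr === 0) return null; // no sender report received yet, so RTT is undefined
  const rtt = (arrivalCompactNtp - lsr - dlsr) >>> 0; // unsigned 32-bit wraparound
  if (rtt > 0x7fffffff) return 0; // slightly negative due to rounding: treat as zero
  return (rtt * 1000) / 65536;
}
```

For example, A = 360448 (5.5 s), LSR = 327680 (5.0 s) and DLSR = 16384 (0.25 s) give 250 ms, while the all-zero fields Cloudflare sends produce no value at all.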

The implementation also generates receiver reports for RTX despite not negotiating or using it.

WHIP & WHEP Interfaces

One interesting aspect of the Stream solution is that it is based on new standard interfaces – WHIP and WHEP. These are HTTP-based interfaces being standardized for the ingestion and playback of streams. The adoption of these new standards by a company as widely used as Cloudflare is great for their advancement. It is also one less proprietary interface to worry about when converting between WebRTC and RTMP.

The solution is also supposed to provide traditional HLS/DASH interfaces, like the original version of the Cloudflare Stream product, but apparently that part is not yet fully supported. Once this is in place, we expect to be able to publish using WebRTC for low latency and browser interoperability, and consume using more typical HLS players for cheap and easy viewing by very large audiences.

Conclusions and future bets

In terms of implementation details and quality, Cloudflare’s implementation is not an alternative to mediasoup or Janus yet (we found the “Cloudflare Calls can also replace existing deployments of Janus or MediaSoup” statement in the original announcement rather odd). It is like comparing Heroku with Apache. In addition, Cloudflare has some room to improve in several areas. For example, it would be nice if they supported more codecs and quality features like simulcast and RTX.

Very positively, the existing interfaces (WHIP/WHEP) and the expected ones (HLS/DASH) provide an easy integration path for applications. Thanks to the standardization that WHIP and WHEP provide, no proprietary SDK is necessary. Cloudflare’s network (leveraged through anycast) and their massive customer base make this a promising product for both streaming and calling use cases, even if it is still in beta with some details and features to iron out.

{“authors”: [”Gustavo Garcia”, “Philipp (fippo) Hancke“]}
