WebRTC and its peer-to-peer capabilities are great for one-to-one communications. However, when I discuss use cases and services that go beyond one-to-one (namely one-to-many or many-to-many) with customers, the question arises: “OK, but what architecture should I use for this?” Some service providers want to reuse the multicast support they have in their networks (we are having fun doing some experiments with this), some are exploring simulcast-based solutions, others are considering centralised solutions like MCUs/mixers, and a bunch of them are simply willing to place the burden on the endpoint by using some variation of a mesh-based topology.

The folks at TokBox (a Telefónica Digital company) have great experience with multiparty conferencing solutions, so I thought it would be great to have my friend Gustavo Garcia Bernardo (Cloud Architect at TokBox) share his take on the topic here.

At TokBox, Gustavo is responsible for the architecture, design, and development of cloud components. This includes Mantis, the cloud-scaling infrastructure for the OpenTok platform, which is built on WebRTC. Before joining TokBox, Gustavo spent more than 10 years building VoIP products at Telefónica and driving early adoption of WebRTC in telco products. In fact, I’ve known Gustavo for 8 years now; the first time I met him, we were preparing a proposal for a European Commission-funded research project on P2PSIP. Since then we’ve been collaborating in the IETF on P2PSIP, ALTO and SIP-related activities. A couple of years ago, while I was working with Acme Packet (now Oracle), we worked together designing and launching Telefónica Digital’s TuMe and TuGo. Lately we have both shifted our focus towards WebRTC.

{“intro-by” : “victor“}

WebRTC beyond one-to-one (by Gustavo Garcia Bernardo)

Although WebRTC specifies little beyond one-to-one audio and video calls, one of its most popular uses today is multiparty video conferencing. Don’t just think about traditional meeting rooms: there are other use cases, including e-learning, customer support, and real-time broadcasting. In each case, the core capability is distributing the media streams from multiple sources to multiple destinations. So… if you are a service provider, how can you implement a multiparty topology with WebRTC endpoints?

There are several different architectures that may be suitable depending on your requirements. They basically revolve around two axes:

- Centralized vs Peer-to-Peer (P2P) and

- Mixing vs Routing.

I will describe the most popular solutions here. If you need to go deeper into the protocol implications and implementation details of these architectures, you can find all the relevant information in the IETF RTP Topologies document.

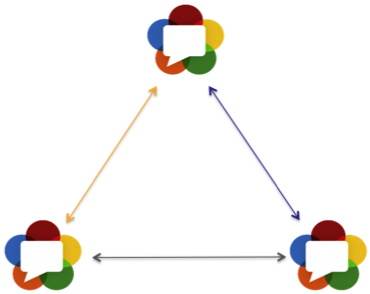

Mesh solution

The Mesh approach is the simplest solution. It has been popular among new WebRTC service providers because it requires no initial infrastructure. The architecture is based on creating multiple one-to-one streams from every sender to every possible destination.

Even though it looks like an inefficient solution, in practice it is very effective and provides the lowest possible delay, with independent, out-of-the-box bit rate adaptation for each receiver.

The “only” problem is that this solution requires a lot of uplink bandwidth to send the media to all the destinations simultaneously, and existing browser implementations require a significant amount of CPU power to encode the video multiple times in parallel.
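
To put rough numbers on that uplink cost, here is a minimal sketch assuming every participant sends a separately encoded copy of its stream to each other participant at the same bitrate. The function names and the 500 kbps figure are hypothetical, chosen only for illustration.

```python
from __future__ import annotations


def mesh_uplink(participants: int, stream_kbps: float) -> tuple[int, float]:
    """Return (encodes, uplink kbps) for one participant in a full mesh.

    Assumes every participant sends a separately encoded copy of its stream
    to each of the other participants, all at the same bitrate.
    """
    if participants < 2:
        return 0, 0.0
    copies = participants - 1
    return copies, copies * stream_kbps


def mesh_total_streams(participants: int) -> int:
    """Number of one-to-one streams in the whole mesh: N * (N - 1)."""
    return participants * (participants - 1)


if __name__ == "__main__":
    # Hypothetical 500 kbps per video stream.
    for n in (2, 4, 6, 8):
        encodes, kbps = mesh_uplink(n, 500)
        print(f"{n} participants: {encodes} encodes, {kbps:.0f} kbps up, "
              f"{mesh_total_streams(n)} streams in total")
```

With 8 participants, each browser would encode 7 copies and need 3,500 kbps of uplink under these assumptions, which is why mesh tends to be limited to small groups.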

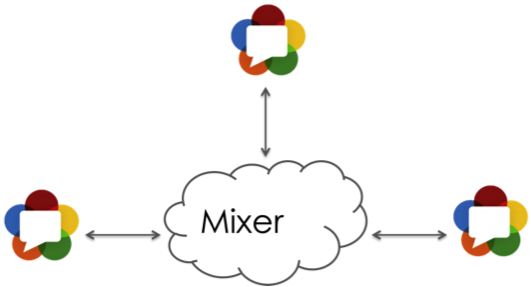

Mixer solution

The Mixer approach is the traditional solution for multiparty conferencing and has been used for years with great success, largely because it requires the least amount of intelligence in the endpoints. The architecture is based on having a central point maintain a single one-to-one stream with each participant. The central element receives and mixes each incoming audio and video stream to generate a single stream out to every participant. A common term in the videoconferencing industry for this centralized element is Multipoint Control Unit (MCU); in practice, the term MCU usually refers to a mixer solution.
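
One way to picture the audio part of the mixing step is a "mix-minus": the mixer sums every decoded stream and sends each participant the total minus their own voice. This is a common technique but an assumption here, not a description of any particular MCU; decoding, re-encoding and video composition are left out, and `mix_minus`/`clamp16` are hypothetical names.

```python
from __future__ import annotations


def clamp16(sample: int) -> int:
    """Clip a mixed sample to the signed 16-bit PCM range."""
    return max(-32768, min(32767, sample))


def mix_minus(frames: dict[str, list[int]]) -> dict[str, list[int]]:
    """Produce one mixed audio frame per participant, excluding their own voice.

    `frames` maps a participant id to one frame of decoded 16-bit PCM samples;
    all frames are assumed to have the same length and sample rate.
    """
    if not frames:
        return {}
    length = len(next(iter(frames.values())))
    # Sum everyone once...
    total = [sum(frame[i] for frame in frames.values()) for i in range(length)]
    # ...then subtract each participant's own signal so they don't hear themselves.
    return {
        pid: [clamp16(total[i] - own[i]) for i in range(length)]
        for pid, own in frames.items()
    }
```

Because each participant gets its own output, a mixer can also encode each output at a different quality, which is the per-receiver adaptation mentioned in the next paragraph.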

Mixers are a very good solution for interoperating with legacy devices. They also allow full bit rate adaptation, because the mixer can generate different output streams with different qualities for each receiver. Another advantage of a mixer solution is that it can make use of hardware decoding in the device, given that many WebRTC devices are expected to include the capability to decode a single video channel in the chipset.

The main drawback is the infrastructure cost of the MCU. Additionally, mixing requires decoding and re-encoding, which introduces extra delay and quality loss. Lastly, transcoding and composition may, in theory, result in less flexibility for the application UI (although there are workarounds for this issue).

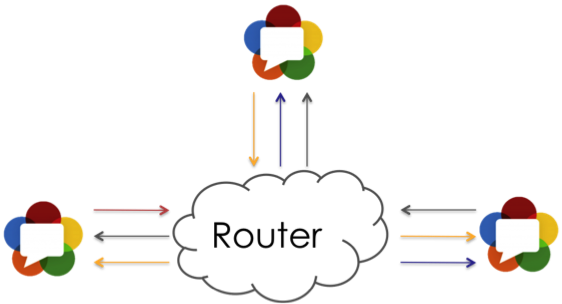

Router solution

The Router (or relay) approach was popularized by H.264 SVC infrastructures, and it is the architecture used by most of the new WebRTC platforms that started without any legacy baggage. The architecture is based on having a central point that receives a stream from every sender and forwards each of those streams to every other participant. This central point only does packet inspection and forwarding, not expensive encoding and decoding of the actual media.
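
The "inspect and forward" idea can be sketched in a few lines. This minimal example assumes plain (already decrypted) RTP packets and a hypothetical `Router` class that learns which participant owns which SSRC at join time; it reads only the SSRC from the fixed RTP header and copies the untouched bytes to everyone else.

```python
from __future__ import annotations

import struct


class Router:
    """Minimal relay sketch: inspect RTP headers, forward packets unchanged."""

    def __init__(self) -> None:
        self.ssrc_owner: dict[int, str] = {}  # SSRC -> participant sending it
        self.participants: set[str] = set()

    def join(self, participant: str, ssrcs: list[int]) -> None:
        self.participants.add(participant)
        for ssrc in ssrcs:
            self.ssrc_owner[ssrc] = participant

    def forward(self, packet: bytes) -> list[tuple[str, bytes]]:
        """Return (receiver, packet) pairs for one incoming RTP packet."""
        if len(packet) < 12:  # shorter than the fixed RTP header
            return []
        # The SSRC is the 32-bit word at byte offset 8 of the RTP header.
        (ssrc,) = struct.unpack_from("!I", packet, 8)
        sender = self.ssrc_owner.get(ssrc)
        if sender is None:
            return []  # unknown stream: drop it
        # Same bytes to every other participant; the payload is never decoded.
        return [(p, packet) for p in sorted(self.participants) if p != sender]
```

Compared with the mixer, the per-packet work here is a header lookup and a copy, which is where the router's cost and delay advantage comes from.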

Routers provide a cheap and scalable multiparty solution, with lower delay and no quality degradation compared with traditional mixer solutions.

On the other hand, there is less industry experience building these infrastructures, and adapting streams to different receivers becomes tricky. It requires support in the endpoints to generate multiple versions of the stream (e.g. with simulcast or VP8 temporal scalability) that can later be selectively forwarded by the router, depending on the capabilities of each receiver.
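
A rough sketch of the two selection decisions a router might make per receiver, assuming it already has a bandwidth estimate for that receiver. The `Encoding` type, the labels and the bitrates are hypothetical. For simulcast it picks the richest version that fits; for VP8 temporal scalability it forwards only packets whose temporal layer id (TID) is at or below the allowed layer, which lowers the frame rate without re-encoding.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Encoding:
    rid: str   # hypothetical label for one simulcast version of the stream
    kbps: int  # nominal bitrate of that version


def pick_encoding(encodings: list[Encoding], available_kbps: float) -> Encoding:
    """Highest-bitrate simulcast version that fits the receiver's estimate.

    Falls back to the lowest version when nothing fits.
    """
    ordered = sorted(encodings, key=lambda e: e.kbps)
    best = ordered[0]
    for enc in ordered:
        if enc.kbps <= available_kbps:
            best = enc
    return best


def keep_temporal_layer(tid: int, max_tid: int) -> bool:
    """VP8 temporal scalability: forward a packet only if its TID is allowed."""
    return tid <= max_tid
```

A real router would also have to wait for a keyframe before switching a receiver to another simulcast version and rewrite sequence numbers and timestamps so the receiver sees one continuous stream; that is omitted here.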

Which architecture should I use?

Unfortunately, there is no simple answer. In fact, some commercial solutions support all of them in order to optimize for different customers’ use cases. However, there are some general rules of thumb you can use.

If you are providing an audio-only service, or need interoperability with legacy devices, then the Mixer architecture is likely the most appropriate for you. It can also be a good solution when the cost of the infrastructure is not an issue and the participants have very heterogeneous connectivity.

If you are building a service to be used by users with really good connections and powerful devices (e.g. an internal corporate service), and the number of participants is limited, then you may get good results with a Mesh architecture.

In general, if you are providing a large-scale service, preference should be given to the Router approach. At the end of the day, the router solution is closest to the Internet paradigm of putting the intelligence at the edge of the network, to achieve better scalability and flexibility when building end-user applications.
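
The rules of thumb above follow from how many streams each party handles. This hypothetical helper tabulates that for N participants, assuming everyone sends one stream and wants to receive everyone else, with no simulcast. The mixer's server-side encode count is an upper bound (one output per receiver); a mixer that shares outputs would encode fewer.

```python
from __future__ import annotations


def stream_counts(topology: str, n: int) -> dict[str, int]:
    """Per-participant streams and server codec work for n participants."""
    others = n - 1
    if topology == "mesh":
        return {"up": others, "down": others, "client_encodes": others,
                "client_decodes": others, "server_decodes": 0, "server_encodes": 0}
    if topology == "mixer":
        # server_encodes is an upper bound: one tailored output per receiver.
        return {"up": 1, "down": 1, "client_encodes": 1,
                "client_decodes": 1, "server_decodes": n, "server_encodes": n}
    if topology == "router":
        return {"up": 1, "down": others, "client_encodes": 1,
                "client_decodes": others, "server_decodes": 0, "server_encodes": 0}
    raise ValueError(f"unknown topology: {topology}")


if __name__ == "__main__":
    for topo in ("mesh", "mixer", "router"):
        print(topo, stream_counts(topo, 5))
```

The table makes the trade-offs visible: mesh pushes encoding and uplink onto the clients, the mixer concentrates decoding and encoding on the server, and the router keeps the server free of codec work while clients still download and decode one stream per remote participant.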

What is missing in WebRTC?

Even though there are both commercial and free solutions that provide multiparty services on WebRTC, there are still issues that need to be addressed in the base technology to enable an even better user experience. These include:

- Improved audio processing and coding, especially in the acoustic echo cancellation and noise suppression algorithms.

- More advanced and flexible congestion control, allowing developers to modify stream parameters such as bitrate, quality, or video resolution on the fly.

- Simulcast and layered video coding support, to adapt the original video stream to the capabilities of each receiver independently without expensive transcoding. Simulcast can be done today with some workarounds; VP8 layered video coding, however, is only possible today by hacking the WebRTC codebase.

All in all, we are in a good position to start providing multiparty services to our customers based on WebRTC technology. As the standards evolve, more APIs are provided, and better implementations ship in more browsers, the future of web-based video conferencing becomes even more promising.

{“author”,”Gustavo Garcia”}

Hi guys

You have a really good blog! But I have one question about this post.

Are there any open source solutions for routing video (I mean a media server with a command protocol)? I’ve researched this issue but my results were very poor.

Thanks,

Sergey G

If you are looking for an open source video routing solution (as opposed to traditional MCU/mixing), then I would recommend Jitsi’s Videobridge. Guest webrtcHacks author Emil Ivov runs the project.

In the router solution, how do you handle RTCP packets?

Does the router act as a peer and generate RTCP packets of its own with reception reports, or does it just forward RTCP packets from the peers?

If the latter, does it need to modify the packet and replace SSRCs in the header and reception blocks?

Is there an RFC dealing with this?

Do Chrome and FF support it?

Thanks

You can implement the RTCP handling in both ways.

1) Forwarding the RTCP packets without any modification. It works and it is supported by FF and Chrome. It is simpler but less efficient, and you can end up wasting bandwidth on RTCP traffic or sending duplicate retransmission requests.

2) Generating/manipulating the RTCP packets in the router. Ideally you can aggregate the RTCP feedback from all the recipients and generate a single RTCP feedback message to the source with a single SSRC. You will save some bandwidth and gain some flexibility, but it is more complex and you have to be careful not to break algorithms like congestion control in the browsers.
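
A minimal sketch of the aggregation in option 2, for a single non-layered stream with several receivers. The policy is an assumption: take the minimum of the receivers' bandwidth estimates (e.g. from REMB) so the sender does not overshoot the slowest one, and merge NACKs so each lost packet is requested only once. `aggregate_feedback` is a hypothetical name, and serializing the result into RTCP wire format is left out.

```python
from __future__ import annotations


def aggregate_feedback(
    remb_bps: dict[str, int], nacks: dict[str, set[int]]
) -> tuple[int | None, list[int]]:
    """Merge feedback from all receivers of one stream into a single report.

    remb_bps: latest bandwidth estimate (bits/s) reported by each receiver.
    nacks: RTP sequence numbers each receiver reported as lost.
    Returns (estimate to send upstream, deduplicated NACKed sequence numbers).
    """
    # Minimum: don't let the sender exceed what the slowest receiver can take.
    estimate = min(remb_bps.values()) if remb_bps else None
    # Union: ask the sender to retransmit each lost packet only once.
    lost: set[int] = set()
    for seqs in nacks.values():
        lost |= seqs
    return estimate, sorted(lost)
```

With simulcast or temporal layers, taking the minimum would needlessly cap every receiver; there the router would rather select a version per receiver and report what the highest layer needs.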

Regarding opensource projects, Licode implements forwarding RTCP without any modification, and apparently Janus has code to do a simple modification of the SSRC before forwarding the packets.
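
For the "replace SSRCs in the header and reception blocks" case asked about above, here is a sketch of rewriting one RTCP Receiver Report using the RFC 3550 layout: the reporter's SSRC at bytes 4-7, followed by RC report blocks of 24 bytes whose first word is the SSRC of the reported source. This is not Janus's code; `rewrite_rr_ssrcs` and its mapping argument are hypothetical, and compound packets and SRTCP protection are out of scope.

```python
from __future__ import annotations

import struct

RTCP_RR = 201          # packet type of a Receiver Report (RFC 3550)
REPORT_BLOCK_LEN = 24  # bytes per reception report block


def rewrite_rr_ssrcs(packet: bytes, reporter_ssrc: int,
                     source_map: dict[int, int]) -> bytes:
    """Rewrite the SSRCs in a single (non-compound) RTCP Receiver Report."""
    buf = bytearray(packet)
    if len(buf) < 8 or buf[1] != RTCP_RR:
        raise ValueError("not an RTCP receiver report")
    count = buf[0] & 0x1F  # RC field: number of report blocks
    if len(buf) < 8 + REPORT_BLOCK_LEN * count:
        raise ValueError("truncated receiver report")
    # Bytes 4-7: SSRC of the packet sender (the reporting receiver).
    struct.pack_into("!I", buf, 4, reporter_ssrc)
    for i in range(count):
        offset = 8 + REPORT_BLOCK_LEN * i
        # First word of each block: SSRC of the source being reported on.
        (source,) = struct.unpack_from("!I", buf, offset)
        struct.pack_into("!I", buf, offset, source_map.get(source, source))
    return bytes(buf)
```

Only the SSRC words change; loss fractions, jitter and the extended highest sequence number are passed through as reported.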

I was missing the fact that Chrome and FF already support connecting to a translator that forwards RTCP packets. With your answer I’ve implemented it and it works.

Can you estimate up to how many users forwarding RTCP packets without modification is fine, and when it becomes essential to generate or manipulate RTCP packets to save bandwidth and improve performance?

For 20 users publishing audio and 4 publishing video, is it still OK to forward RTCP packets without modifications?

IMO it is not that Chrome/FF support connecting to a translator, but rather that in most cases they ignore the SSRC when they receive RTCP packets 🙂

For your use case the RTCP bandwidth is probably not an issue yet but you are going to have issues with retransmissions, keyframe requests and bandwidth estimations. If you just forward those RTCP messages (NACK, PLI, REMB…), the quality is not going to be as good as it could be with a little bit of intelligence in the router.
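
As one small example of "a little bit of intelligence in the router", keyframe requests (PLI/FIR) from many subscribers can be collapsed so the publisher is not asked for a new keyframe by every subscriber at once. This is a sketch under assumptions: the class name and the one-second interval are hypothetical, and a real router would also track which subscribers are still waiting for that keyframe.

```python
from __future__ import annotations


class KeyframeRequestThrottle:
    """Forward at most one keyframe request per publisher per interval."""

    def __init__(self, min_interval_s: float = 1.0) -> None:
        self.min_interval_s = min_interval_s
        self._last_sent: dict[int, float] = {}  # publisher SSRC -> time sent

    def should_forward(self, publisher_ssrc: int, now_s: float) -> bool:
        last = self._last_sent.get(publisher_ssrc)
        if last is not None and now_s - last < self.min_interval_s:
            return False  # a request is already in flight; drop this duplicate
        self._last_sent[publisher_ssrc] = now_s
        return True
```

Requests dropped inside the interval are covered by the keyframe that the first forwarded request triggers, since the router sends that keyframe to every subscriber.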

What about ROC synchronization?

If I have one publisher and one subscriber connected to the MCU for an hour, the ROC will probably be 3. When a new client joins the call, I need to choose a sequence number between 0 and 2^15 and roc=0. The new client will send RTCP reports with a different index than the one the sender used.

Is there a way to pass the initial ROC so the new client will use the same index as the publisher and old subscribers?

You should decrypt and re-encrypt the RTP packets in the MCU so that the ROC is always 0 for the subscribers no matter what value it has for the publisher or other subscribers.
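
The point is that each leg of the MCU has its own SRTP context, so each leg has its own rollover counter. This sketch only models the ROC bookkeeping from RFC 3711 (packet index = 2^16 × ROC + SEQ, with the sender incrementing ROC when SEQ wraps); keys and encryption are out of scope, `RocTracker` is a hypothetical name, and packets are assumed to be sent essentially in order.

```python
from __future__ import annotations

from typing import Optional


class RocTracker:
    """Sender-side rollover-counter bookkeeping for ONE SRTP leg."""

    def __init__(self, roc: int = 0) -> None:
        self.roc = roc
        self.last_seq: Optional[int] = None

    def index_for(self, seq: int) -> int:
        """Return the SRTP packet index used to protect a packet with `seq`."""
        if self.last_seq is None:
            self.last_seq = seq
        elif seq < self.last_seq and self.last_seq - seq > 2**15:
            self.roc += 1  # SEQ wrapped from ~65535 back to a small value
            self.last_seq = seq
        elif seq > self.last_seq:
            self.last_seq = seq
        # A small step backwards is treated as reordering: no ROC change.
        return (self.roc << 16) | seq


if __name__ == "__main__":
    old_leg = RocTracker(roc=3)   # leg that has been running for about an hour
    new_leg = RocTracker()        # late subscriber: fresh context, ROC 0
    print(old_leg.index_for(40000), new_leg.index_for(40000))
```

The same sequence number maps to different indexes on the two legs, which is fine because each subscriber only ever sees its own context.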

I’m decrypting and re-encrypting the RTP in the MCU and starting with ROC=0 for a new subscriber. My question is about the sequence number.

Can I use the publisher’s sequence number when sending packets to a subscriber that joined late?

If I’m supposed to use the publisher’s sequence number, isn’t there a possibility that a new subscriber will have its ROC out of sync if it joins when the sequence number is close to 2^16 and there are out-of-order packets? (That’s why a sender starts with seq=rand(2^15).)

If I should generate a new sequence number for the new subscriber, the RTCP reception reports will be wrong because info like the extended highest sequence number received will refer to a different sequence number.

Yes, you can use the publisher’s sequence number when sending packets to a subscriber that joined late.

One subscriber can receive sequence number X with ROC Z and the other subscriber can receive sequence number Y with ROC Z, because the ROC is just an SRTP concept and the SRTP context is different per subscriber.

If a client joins when the sender sequence_number=65534 but misses a few packets and the first packet it gets is sequence_number=3, the server will advance roc to 1 but the client will still think roc=0.

In the normal case we avoid this by setting the initial sequence_number to rand(2^15), but now we have to use the current sender sequence_number to be able to forward RTCP packets without having to rewrite them.

Will the WebRTC client be smart enough to try roc=1 if roc=0 fails because of missed packets?

I see what you mean, sorry. According to the spec it could be handled, but it is not clear:

“In particular, out-of-order RTP packets with sequence numbers close to 2^16 or zero must be properly handled.”
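
The receiver-side rule behind that sentence is the index estimate in RFC 3711, section 3.3.1, where s_l is the highest sequence number received so far. The sketch below transcribes that rule (function names are hypothetical) and shows why the two situations in this thread differ: a subscriber that has already seen 65534 guesses ROC 1 correctly for seq 3, but a subscriber whose very first packet is seq 3 starts from ROC 0, so its index disagrees with the sender's and SRTP authentication would fail.

```python
from __future__ import annotations


def estimate_roc(roc: int, s_l: int, seq: int) -> int:
    """Guess the ROC of an incoming packet (RFC 3711, section 3.3.1).

    roc: receiver's current rollover counter
    s_l: highest sequence number received so far
    seq: sequence number of the incoming packet
    """
    if s_l < 2**15:
        # A much larger seq is a late packet from before the last wrap.
        return roc - 1 if seq - s_l > 2**15 else roc
    # A much smaller seq means the counter has wrapped.
    return roc + 1 if s_l - 2**15 > seq else roc


def packet_index(roc: int, seq: int) -> int:
    return (roc << 16) | seq


if __name__ == "__main__":
    # Subscriber already saw 65534: the wrap is detected.
    print(estimate_roc(0, 65534, 3))                       # 1
    # Late joiner whose first packet is seq 3 (s_l starts at 3, ROC at 0),
    # while the server protected that packet with ROC 1.
    print(packet_index(estimate_roc(0, 3, 3), 3), packet_index(1, 3))  # 3 65539
```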

The other option you have is a bit of a hack: if you detect that case, wait a couple of seconds (50 packets) before you start sending packets.
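
A sketch of that workaround, assuming the MCU checks the publisher's current sequence number before it starts forwarding to a new subscriber. `safe_to_start` is a hypothetical helper and the 50-packet margin is taken from the reply above; if a burst of losses longer than the margin hits the first packets, the problem can still occur.

```python
from __future__ import annotations

SEQ_MOD = 2**16


def safe_to_start(seq: int, margin: int = 50) -> bool:
    """True if forwarding to a new subscriber can start at publisher `seq`.

    Holds off while the sequence number is within `margin` packets of
    wrapping to 0, so the subscriber's first packets come after the wrap.
    """
    return (SEQ_MOD - seq) > margin


if __name__ == "__main__":
    incoming = [65530, 65531, 65532, 65533, 65534, 65535, 0, 1]
    first = next(s for s in incoming if safe_to_start(s))
    print(first)  # 0: forwarding starts right after the wrap
```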

If you investigate/test it more keep us updated.

Hello,

We have a one-to-many broadcast system, adtlantida.tv. It works, but we need to support more listeners/viewers while saving bandwidth. Our architecture is: node.js, socket.io, STUN server, TURN server. Now we are investigating MCUs (Telepresence, Medooze, Licode, Kurento)… most of them seem to target only multiparty videoconferencing, not one-to-many broadcasting. Which one do you recommend?

Thanks in advance for your great work and information,

Beka

Hi

We are working on a WebRTC multicast implementation, and I have one question.

Does peer-to-peer distribution work across different networks? Let’s take an example:

One user has started the broadcast and N users have joined the broadcast from any country or anywhere.

So my question is: how does peer distribution work?

Does peer distribution (multicast) work across different networks, or only in a private network?

Will peer distribution be managed automatically, or will it not work in such a case?

I googled but was not able to find a clear answer.

Thanks

Gaurav Dhol