For the last year and a half I’ve been working with a number of customers, helping them understand what WebRTC is about, supporting them in the definition of new products and services, and in some cases even developing WebRTC prototypes/labs for them. I’ve spent time with Service Providers, Enterprise and OTT customers, and the very first time I demoed WebRTC to them, after the initial ‘wow moment’, almost all of them complained about the ‘call setup delay’, which in some cases amounted to tens of seconds.

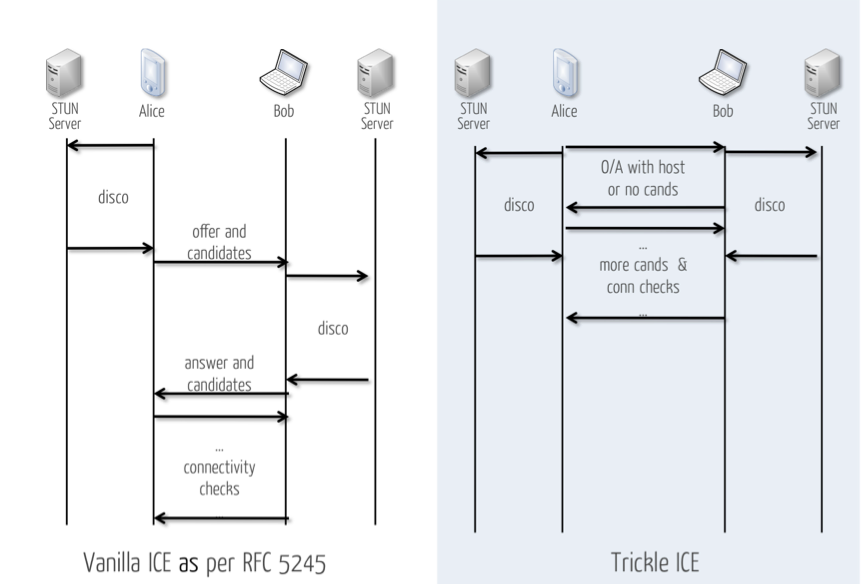

Why is there such a delay? In short, because of the ICE (Interactive Connectivity Establishment) processing. As we have mentioned in our past networking posts, an endpoint using ICE needs to gather candidates, prioritize them, choose default ones, exchange them with the remote party, pair them and order them into check lists. Once all this has been completed, and only then, the endpoints can begin a phase of connectivity checks and eventually select the pair of address candidates that will be used in the session. This process can lead to relatively lengthy session establishment times.

Fortunately, Emil Ivov (founder and current lead of the Jitsi project) and others have been working on an extension to ICE that allows agents to send and receive candidates incrementally rather than waiting to exchange a complete list. With such incremental provisioning, ICE agents can begin connectivity checks while they are still gathering candidates and considerably shorten the time necessary for ICE processing to complete, thereby reducing the ‘call setup delay’ customers were complaining about.

Also note that Emil will be discussing this topic in Paris next week during the WebRTC Conference and Exhibition. Chad and I will be there too.

Please read below for Emil’s review of the ICE trickle extension.

{“intro-by”,”victor”}

ICE always tastes better when it trickles! (by Emil Ivov)

“The one thing I love about WebRTC is how it makes all communication P2P”

This is a statement I read or hear very often when people discover WebRTC. NATs have been causing such a mess in VoIP for so long that many people have come to believe that only a brand new technology, like WebRTC, could possibly ever save us from this ordeal.

Well … this is not quite the case. Just as with RTP, SDP, DTLS/SRTP, RTCP-MUX and many others, WebRTC borrows most of its NAT traversal utilities from pre-existing mechanisms. To be fair though, there is this one bit that WebRTC helps improve: establishing a connection as quickly as possible with the help of Trickle ICE.

We will dive into the details of Trickle ICE in just a minute. First, however, let’s have:

A lil’ bit of history

The way that many VoIP protocols work is actually quite simple: whenever Arya wants to talk to her brother Bran (probably to inform him of the imminence of a particular season), she sends him a message containing her IP address. If Bran chooses to accept the call, he responds with his own IP address. From then on the actual exchange of audio and video can happen directly between them. Peer-to-peer media between parties in different domains is a key aspect of the traditional “VoIP Trapezoid” and allows us to keep VoIP lightweight. It reduces the need for infrastructure.

The model breaks as soon as you introduce NATs into the picture. If Arya were to get a private, NATed IP address from Bran she wouldn’t be able to send him audio or video. It would be pretty much the same if one day you got a letter in your box that urgently asked for a reply but where the return address said: “The house with the yellow door”.

While NATs could maybe be ignored back in the mid ‘90s when protocols like SIP were born, this was no longer the case a few years later. It became evident that they were here to stay and that VoIP needed patching. And patch we did …

First, we tried to handle things in efficient ways so that media could continue flowing (almost) directly between Arya and Bran. That’s how we came up with STUN. STUN works pretty much the same way as whatismyip.com. It allows VoIP clients to ask a server what their public address is so that Arya can then include that in her call invitation to Bran.

While STUN works in a number of cases, there are plenty of others where it doesn’t. Some NATs, you see, would allocate a different port number for every connection you made. This means that the port number you get from the STUN server would not be the one you need for your VoIP call and the process would once again fail.

We didn’t give up. Many vendors tried using things like UPnP or the Port Control Protocol (PCP), where you would ask your gateway to allocate a port for you. That worked … when it worked, and it didn’t when the above protocols were not supported by the gateway. Overall we ended up with the same partial success we had with STUN.

Even IPv6 was in that situation: it allowed for direct connections when supported by the network but one couldn’t count on it to always be there.

The only reliable techniques were those that relied on relaying all media traffic through the server. These could be transparent solutions like Hosted NAT Traversal and Latching or client-controlled ones like TURN and Jingle Relay Nodes.

The situation becomes particularly problematic because the SDP Offer/Answer process that SIP uses for media negotiation is a one-shot thing: you “offer” an address and it either passes or it fails. SDP Offer/Answer doesn’t allow you to, say, start with STUN, realise it doesn’t work, and then simply fall back to TURN.

This left everyone with the following choice: VoIP providers could either relay everything with the cost implications that came with this or insist on using unreliable solutions like STUN and just let some percentage of their users bite the bullet when that fails.

As a result NAT traversal was often a cause of great frustration and many people arrived at the conclusion that there was simply no way out of that situation.

Then we started having ICE

That’s when a new solution emerged: the concept of interactive connectivity establishment. The mechanism got standardized by the IETF as the ICE protocol and it was in use by applications like Skype and Google Talk even before that.

The idea is rather simple. It’s about acknowledging that none of the mechanisms above are perfect, so instead of insisting on our favorite, we simply try them all. Before a client starts a call, it gathers all the addresses it possibly can. Well, technically they are not only addresses but rather address:port/transport triplets, where the transport can be UDP or TCP. ICE calls these triplets “candidates” and so shall we.

The ICE specification, RFC 5245, only talks about using STUN and TURN, but in practice nothing prevents clients from also leveraging the other NAT traversal tricks mentioned above … and most clients do.

So, once all the candidates are gathered, they are ordered in a list based on priorities. Highest priorities are assigned to candidates with the least overhead: those that you get from the device itself, the IPv6 and IPv4 “host” candidates. Next come STUN candidates. The ICE specification calls them server reflexive because they do work a little bit like a mirror. This is also where you would place candidates obtained with UPnP and PCP.

Finally come the “relayed” candidates that clients obtain from TURN or Jingle Nodes servers. The prioritization happens in a way that would only result in use of relaying when no other route is available between two endpoints.
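The ordering described above can be sketched in a few lines of Python. The formula and the type preference values are the ones recommended in RFC 5245 (section 4.1.2); the `Candidate` class, its field names and the sample addresses are only illustrative assumptions, and placing UPnP or PCP candidates alongside server reflexive ones is a client choice rather than something the RFC defines.

```python
from dataclasses import dataclass

# Type preferences recommended by RFC 5245, section 4.1.2.2.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


@dataclass(frozen=True)
class Candidate:
    """Hypothetical container for an address:port/transport triplet."""
    address: str
    port: int
    transport: str                 # "udp" or "tcp"
    kind: str                      # "host", "srflx", "prflx" or "relay"
    component_id: int = 1          # 1 = RTP, 2 = RTCP
    local_preference: int = 65535  # single-interface default

    @property
    def priority(self) -> int:
        # priority = 2^24 * type pref + 2^8 * local pref + (256 - component ID)
        return ((TYPE_PREFERENCE[self.kind] << 24)
                + (self.local_preference << 8)
                + (256 - self.component_id))


# Sample candidates using documentation-only addresses (assumptions).
candidates = [
    Candidate("203.0.113.7", 50000, "udp", "relay"),
    Candidate("192.168.1.10", 40000, "udp", "host"),
    Candidate("198.51.100.4", 40000, "udp", "srflx"),
]

# Highest priority first: host, then server reflexive, then relayed.
ordered = sorted(candidates, key=lambda c: c.priority, reverse=True)
for c in ordered:
    print(c.kind, c.priority)
```

With these values a relayed candidate can never outrank a host or server reflexive one, which is why relaying only ends up being used when nothing else works.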

So is that it? Did we reach El Dorado? It sounds like with ICE we could have direct or almost direct connectivity when that’s at all possible and fall back to relaying only when that’s necessary.

Awesome! No more NAT problems … right?

Having ICE will slow you down

While ICE was percolating through the IETF, it saw a lot of criticism, a fair amount of which was directed at its complexity. The IETF’s response to this was:

“ICE is complex because we live in a complex world.”

With time more and more endpoints added ICE because of the efficiency promise it carried, but even as implementations became more and more stable, one specific problem persisted: setting up a connection through ICE could take a considerable amount of time … as in tens of seconds.

Every time a client is preparing to set up an ICE connection it needs to gather candidate addresses. In addition to addresses that appear on the local interfaces, this also includes acquiring STUN bindings, Jingle Nodes and TURN allocations or executing UPnP or PCP queries.

Once the above are completed, the client would take all the resulting candidates and send them to the remote party where the exact same process would start.

Not only that but many of these have to be executed through all the interfaces on the client endpoint. There are many ways for this process to go wrong and make you wish for a coffee while waiting for your call to connect:

- A VPN interface with no Internet connectivity. This would mean that VoIP clients will try to get in touch with STUN and TURN servers through it but never get a response. Their transactions would have to time out before the client proceeds.

- A wireless interface that appears “up” but is otherwise not connected (e.g. because it is attached to a hotspot with a captive portal).

- A STUN/TURN server that went down for some reason and is not even returning “port unreachable” ICMP errors.

In all of the above cases ICE would be peacefully dripping STUN requests while (as Chef would put it):

… you wait, and you wait, and you wait and you wait …

… and you wait, and you wait, and you wait …
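To put a number on that wait, here is a small sketch of the retransmission schedule of a single unanswered STUN request over UDP. It assumes the default timers from RFC 5389 (an initial RTO of 500 ms, 7 transmissions, and a final wait of 16 times the RTO); real ICE implementations often tune these values, so treat the result as an illustration rather than a measurement. The function name and its parameters are made up for this example.

```python
def stun_retransmission_schedule(rto_ms=500, max_requests=7, final_wait_factor=16):
    """Return (send_times_ms, timeout_ms) for one unanswered STUN transaction.

    Defaults follow RFC 5389, section 7.2.1 (RTO = 500 ms, Rc = 7, Rm = 16).
    """
    send_times = []
    now = 0
    interval = rto_ms
    for _ in range(max_requests):
        send_times.append(now)
        now += interval
        interval *= 2  # the wait doubles after every retransmission
    # After the last request the client waits Rm * RTO before giving up.
    timeout = send_times[-1] + final_wait_factor * rto_ms
    return send_times, timeout


send_times, timeout = stun_retransmission_schedule()
print(send_times)  # [0, 500, 1500, 3500, 7500, 15500, 31500]
print(timeout)     # 39500
```

Under these assumptions a single dead server or half-dead interface costs almost 40 seconds before the client can move on, which is where the “tens of seconds” come from.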

RFC 5245 recommends that, instead of waiting for the user to make a call, clients should start their candidate harvest as soon as they have a cue that a connection might be attempted soon. Picking the receiver up off the hook would be a good indication of this. This, however, only applies to hardware phones, as soft phones don’t always have a reliable indication that a call is pending. Also, that kind of optimization only solves half the problem: the time of a complete harvest would still have to be endured when the remote party receives the call.

An interesting side effect of all this waiting is that we have started seeing weird things happen, like soft phones generating long, fake DTMF sequences while dialing, only to amuse the user while rushing through the stages of ICE.

So, this is where we get to:

Trickle ICE

Trickle ICE first appeared a few years ago within the context of XMPP Jingle and the IETF is currently working on covering all cases and providing a complete Trickle ICE specification.

The idea behind the protocol is that rather than waiting for a whole bucket of candidate addresses to fill, clients would start exchanging, or trickling the newly found addresses one by one, as they discover them.

In other words, a Trickle ICE client would gather local host candidates and immediately send them to the remote party. It would then discover a TURN/STUN address and send that, then potentially UPnP and send that, etc.

Making candidate harvesting a non-blocking process has a significant impact on the speed of the entire process.

First of all, given how the initial batch of host candidates is not delayed, the call invitation can leave very early and both clients can start the discovery process almost simultaneously.

Not only that but they would also be able to immediately start verifying whether the candidates they have can work, all the while receiving new candidates and adding them to their checklists.

As soon as each client is done harvesting addresses, it sends an “end-of-candidates” signal to its peer so that the peer knows that this is all there is and, if nothing works, it is free to declare failure.

As a result both clients perform discovery and connectivity checks simultaneously and it is possible for call establishment to happen in milliseconds. Also, possibly even more importantly, it is no longer possible for half-dead interfaces and inaccessible servers to delay the process.
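The difference can be illustrated with a deliberately simplified timing model. Everything in it is an assumption made for the sake of the example: the source names, the millisecond figures, the fixed signaling and check delays, and the idea that a source which never answers costs a full 39.5 s STUN timeout. It is a back-of-the-envelope sketch, not a description of how any particular ICE stack schedules its work.

```python
from typing import Dict, Optional

STUN_TIMEOUT_MS = 39_500  # assumed cost of a source that never answers

# source name -> ms until it yields a candidate, None = never answers
Sources = Dict[str, Optional[int]]


def finish_times(sources: Sources) -> Dict[str, int]:
    """Time at which each source is done, whether it answered or timed out."""
    return {name: STUN_TIMEOUT_MS if ms is None else ms
            for name, ms in sources.items()}


def vanilla_setup_ms(offerer: Sources, answerer: Sources,
                     signaling_ms: int = 100, check_ms: int = 200) -> int:
    """Each side gathers everything before sending; checks come last."""
    offer_sent = max(finish_times(offerer).values())
    answer_sent = offer_sent + signaling_ms + max(finish_times(answerer).values())
    return answer_sent + signaling_ms + check_ms


def trickle_setup_ms(offerer: Sources, answerer: Sources, working: str,
                     signaling_ms: int = 100, check_ms: int = 200) -> int:
    """The offer leaves at t=0; candidates are sent as they are found."""
    # When the answerer learns the offerer's working candidate.
    offerer_known = finish_times(offerer)[working] + signaling_ms
    # The answerer starts gathering when the offer arrives, then trickles back.
    answerer_known = signaling_ms + finish_times(answerer)[working] + signaling_ms
    return max(offerer_known, answerer_known) + check_ms


# Hypothetical scenario: the offerer has a VPN interface that goes nowhere.
offerer = {"host": 0, "srflx": 50, "srflx-via-vpn": None}
answerer = {"host": 0, "srflx": 80}

vanilla = vanilla_setup_ms(offerer, answerer)
trickle = trickle_setup_ms(offerer, answerer, working="srflx")
print(vanilla, trickle)  # 39980 480
```

In this toy scenario the dead VPN interface dominates the vanilla setup time, while with trickling it simply never contributes a candidate and nobody waits for it.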

The best part is that there is no downside. ICE isn’t less efficient or less reliable as a result. It is a pure improvement … in every way but one: it is not backward compatible!

Trickle ICE with SIP

Lack of backward compatibility, that is, a vanilla ICE implementation not being able to successfully talk to a Trickle ICE one, is not a problem in cases where support for trickling can be predicted. XMPP, for example, has always had advanced discovery mechanisms that allow clients to know whether or not they should be using trickle before they get into the call.

WebRTC does not have discovery, but it doesn’t really need it in this case because it makes support for Trickle ICE mandatory. Any web app can hence bravely enable and use it most of the time.

Things get complicated for SIP because it has neither of the above: neither the reliable discovery mechanisms of XMPP, nor the mandatory support for trickling that WebRTC comes with. Every call-initiating SIP INVITE needs to be built in a way that can be handled by legacy endpoints. This includes legacy ICE implementations.

To circumvent the problem, Trickle ICE comes with a special mode of operation called “Half Trickle”. It basically implies that the calling side starts by performing ICE regularly: it gathers all candidates and only then sends its first INVITE request. If the callee supports Trickle ICE, the rest of the process is still optimized because the callee can send its candidates incrementally and speed up the discovery of a working one.

Once “Half Trickle” has been performed at the initiation of a call, subsequent SIP INVITEs (reINVITEs) can use “Full Trickle” and have all the benefits of Trickle ICE. Similarly, SIP clients that support Trickle ICE and that run in controlled environments can be configured to assume support for Trickle ICE in every callee and avoid use of “Half Trickle”.
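The decision a SIP client has to make before each offer can be summarised in a short sketch. The `Session` class, its fields and the mode strings are hypothetical names chosen for this illustration; how a callee actually signals Trickle ICE support is left out and represented here by a plain boolean.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    """Hypothetical per-call state used to pick an offer mode."""
    assume_trickle: bool = False          # controlled environment, support is a given
    peer_trickles: Optional[bool] = None  # unknown until the first answer arrives

    def offer_mode(self) -> str:
        # Full trickle only when support is assumed or has been confirmed.
        if self.assume_trickle or self.peer_trickles:
            return "full-trickle"
        # Otherwise send a complete candidate list that legacy ICE can handle.
        return "half-trickle"

    def on_answer(self, answer_signals_trickle: bool) -> None:
        # Remember what the first Offer/Answer exchange revealed about the peer.
        self.peer_trickles = answer_signals_trickle


session = Session()
first_invite = session.offer_mode()  # support unknown: gather everything first
session.on_answer(answer_signals_trickle=True)
re_invite = session.offer_mode()     # support confirmed: trickle from now on
print(first_invite, re_invite)       # half-trickle full-trickle
```

If the callee turns out to be a legacy ICE implementation, `peer_trickles` stays false and every later offer keeps carrying its full candidate list, which is exactly what such an endpoint expects.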

| NAT Traversal Standards | Acronym | Specification |
| --- | --- | --- |
| Session Traversal Utilities for NAT | STUN | IETF RFC 5389 |
| Universal Plug-and-Play | UPnP | UPnP.org |
| Port Control Protocol | PCP | IETF RFC 6887 |
| Hosted NAT Traversal | HNT | IETF Draft MMUSIC-Latching |
| Traversal Using Relays around NAT | TURN | IETF RFC 5766 |
| Jingle Relay Nodes | | XSF XEP-0278 |
| Interactive Connectivity Establishment | ICE | IETF RFC 5245 |
| Incremental Provisioning of Candidates for the Interactive Connectivity Establishment (ICE) Protocol | Trickle ICE | IETF Draft MMUSIC-Trickle-ICE |

Summary, or, if you only read one paragraph

Going back to where we started from: many of the technologies adopted by WebRTC, such as NAT traversal, media encryption, port multiplexing, and many others, have existed for a while now and have even gained considerable levels of adoption.

The popularity of WebRTC does bring these technologies to the forefront, however, and many of them are now seeing various improvements. Trickle ICE is one such improvement to the ICE protocol. It does not make it more or less likely for you to establish direct sessions across the Internet, but it does make the process considerably faster and less noticeable to users.

Come to think of it, many could argue that this is just as important as the actual optimization …

{“author”,”Emil Ivov”}

Thanks for such a detailed yet very enjoyable article on one of the most important technicalities of WebRTC! This is indeed going to be a determining factor for the success of Internet communication services from now on. I’ve spent quite some time trying to raise awareness on this in the Telco industry. At the end of the day, trickle vs. non-trickle will make for a handicap factor of 10: in a competition with peer-to-peer web communications (that get trickle for free from the browser), you really don’t want to be on the wrong side of the par!

The industry doesn’t seem very responsive though…

I just want to say, this is a really good article for me to get an overview of how a messaging application gets through the NAT to talk with its peer.

Thanks for this article. As far as I understand, the difference between RFCed ICE and this is that you make STUN requests to the server while starting negotiation between clients using local interfaces? Almost asynchronously? Why should you do this before the call when you can actually get it at the very beginning of the SIP session (say, registration) and keep this information?

Very educational and well written. Thanks !

Still cannot get the “half-trickle”.

If the primary problem was that the three main phases ((1) gathering, (2) sending candidates to the remote agent and (3) connectivity checks) happen consecutively, in a blocking way, which may lead to undesirable latency during session establishment,

and “half-trickle” means “that the calling side starts by performing ICE regularly”,

then we can still run into undesirable latency for the same reasons described in this article (e.g. a VPN interface, a STUN/TURN server that is down, a wireless interface that appears “up” but is otherwise not connected, etc.).

Can it be clarified how “half trickle” solves this?

Hey Kamil,

> As far as I understand the difference between RFCed ICE and this is that you
> make stun request to server while starting negotiation between clients using
> local interfaces?

I didn’t understand the part about “local interfaces” but the rest is pretty much true, with one main difference: it’s not only about STUN. It’s also about TURN and potentially other traversal methods too (e.g. UPnP, Jingle Relay Nodes).

> Why should you do this before the call when you can actually get it on the
> very beginning of the SIP session (say, registration) and keep this information?

There are two main reasons for this:

1. You don’t know what to reserve until you actually make the call.

Should a client obtain a binding for 1 port only? That may cut it in case you have a WebRTC to WebRTC call. You would however need 2 ports in case you are not using bundle (1 for audio and 1 for video) and 4 in case you don’t use rtcp-mux, as every stream would then require a separate RTCP port. What happens if you ever need to make a second call? Do you start by pre-binding 8 ports just in case? Or 16? Or maybe 32 to really be on the safe side?

Now let’s also add TURN here and you get to double all the numbers above once more. Powers of 2 go up pretty quickly. This leads to the second reason which is that:

2. This would put unbearable strain on TURN and STUN servers. Keeping all these ports alive all the time would imply huge bandwidth requirements on the server side. In the case of TURN, this could mean entire machines that remain unused just so that they would be able to keep ports open.

So when you take all that into account, pre-allocating and maintaining ports for ICE would probably be more expensive than simply relaying every single call.

Hope this answers your question!

Hey Rachel,

You are actually missing something important in your three step process. You should rather have something like this

(1) offerer gathers candidates and sends offer

(2) (new) answerer gathers candidates and sends answer

(3) connectivity checks.

With vanilla ICE all of them are blocking and strictly consecutive. Full trickle makes them all run in parallel. Half trickle keeps (1) blocking but (2) and (3) still run in parallel.

Also note that half trickle is only needed in the first Offer/Answer exchange in a session. All consecutive Offer/Answer exchanges (or re-INVITEs for SIP) in that session can use full trickle because support for it would have been confirmed in the beginning.

Does this answer your question?

Yes and No.

First, thanks for the detailed answer.

I want to refer to the first step.

If it is still blocking (as the TURN server is not replying, or Wi-Fi is up but not connected) we will still see a delay in the call setup, correct?

It is true that without Trickle ICE it will be worse, but do you agree that it can still delay the call setup by many seconds?

Super informative article! Thanks for sharing with us 🙂

Really great and detailed article explaining an often misunderstood mechanism. Thanks!

Super awesome! Cleared a lot of things.

Hi!

Thanks for the article, it’s really great.

I didn’t quite grasp what the role of SDP is here. I mean, does Trickle ICE replace it? Or does Trickle ICE just happen after SDP?

Thanks!