Slack is an über popular and fast-growing communications tool that has a ton of integrations with various WebRTC services. Slack acquired a WebRTC company a year ago and launched its own audio conferencing service earlier this year which we analyzed here and here. Earlier this week they launched video. Does this work the same? Are there any tricks we can learn from their implementation? Long time WebRTC expert and webrtcHacks guest author Gustavo Garcia takes a deeper dive into Slack’s new video conferencing feature below to see what’s going on under the hood.

{“editor”, “chad hart“}

Early this year Slack added support for audio calls using WebRTC technology. Soon after that launch Philipp Hancke wrote this blog post analyzing it. Yoshimasa Iwase followed soon after with even more detail.

This week Slack announced video support and generated some excitement in the WebRTC community again. Today some of us saw it enabled for the first time, so what was the first thing we did? We set up a meeting with our team to do sprint planning using this new feature, of course. To peek inside Slack’s WebRTC workings, we made some quick calls and looked at the SDPs and other stats available in the awesome webrtc-internals in Chrome.

No TCP or IPv6

The first thing you see with webrtc-internals is that they are still using TURN over UDP only and disabling IPv6.

Ultimately Slack is aiming to be a corporate communication tool. “Enterprise” often means customers that block any “suspicious” UDP traffic. Given this, one would expect to see support for TURN over TCP and TLS, but surprisingly this isn’t the case. The same is true for IPv6 support. Maybe there is a problem supporting it in Janus or in Slack’s signaling stack, but it is probably something easy to change in future versions.
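The TURN transports a client is allowed to use can be read from the `iceServers` URLs in the peer connection configuration that webrtc-internals displays. Here is a minimal sketch of that check, assuming the standard `turn:`/`turns:` URI forms. The helper names and the `example.com` host names are placeholders for illustration, not values taken from Slack.

```typescript
// Transports a client can use to reach a TURN server.
type TurnTransport = "udp" | "tcp" | "tls";

// Returns the transport implied by one ICE server URL, or null when the
// URL is not a TURN URL (for example a stun: URL).
// Simplification: every turns: URL is reported as "tls".
function turnTransport(url: string): TurnTransport | null {
  const match = /^(turns?):([^?]+)(?:\?transport=(udp|tcp))?$/i.exec(url.trim());
  if (match === null) return null;
  const scheme = (match[1] ?? "").toLowerCase();
  const transport = (match[3] ?? "").toLowerCase();
  if (scheme === "turns") return "tls";
  // A plain turn: URL defaults to UDP when no transport is given.
  return transport === "tcp" ? "tcp" : "udp";
}

// Collects the distinct TURN transports found in a list of ICE server URLs.
function configuredTurnTransports(urls: string[]): TurnTransport[] {
  const found: TurnTransport[] = [];
  for (const url of urls) {
    const transport = turnTransport(url);
    if (transport !== null && found.indexOf(transport) === -1) {
      found.push(transport);
    }
  }
  return found.sort();
}

// Hypothetical UDP-only configuration, similar in shape to what is described above.
const udpOnlyUrls = [
  "stun:stun.example.com:3478",
  "turn:turn.example.com:3478?transport=udp",
];
console.log(configuredTurnTransports(udpOnlyUrls));
```

A deployment that also wants to work behind UDP-blocking firewalls would typically list `turn:...?transport=tcp` and `turns:` URLs as well, in which case the function would report `tcp` and `tls` too.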

Media Server Platform

The next thing to look at is the SDP coming from the server.

As expected, we see that Slack is still using the nice open source SFU called Janus from MeetEcho (see Lorenzo from MeetEcho talk about gateways here).

Simulcast

One of the interesting things when talking about multiparty WebRTC these days is how you implement bandwidth adaptation for different participants. In the SFU world there is some agreement on simulcast being the right way to proceed. Simulcast has been available for some years, but standardization and support in WebRTC is not complete. As a result, there are many services where simulcast is still not used. It is good news that Slack is using it and that more and more people are starting to use it apart from Google Hangouts and TokBox.

Looking more closely at the Slack SDP (see below), you can see simulcast is being used by looking at the SIM group in the offer and x-google-flag:conference in the answer.
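Both markers can be found with a simple line scan of the SDP text. The sketch below assumes Chrome's legacy simulcast signaling as described above; the function name, the SDP fragments, and the SSRC values are made up for illustration and are not copied from a Slack call.

```typescript
interface SimulcastHints {
  simSsrcs: string[];      // SSRCs listed in a=ssrc-group:SIM lines
  conferenceFlag: boolean; // true when a=x-google-flag:conference is present
}

// Scans an SDP blob for the two simulcast markers discussed in the text.
function simulcastHints(sdp: string): SimulcastHints {
  const simPrefix = "a=ssrc-group:SIM ";
  let simSsrcs: string[] = [];
  let conferenceFlag = false;
  for (const rawLine of sdp.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.indexOf(simPrefix) === 0) {
      // Each SSRC in the group corresponds to one simulcast layer.
      simSsrcs = simSsrcs.concat(line.slice(simPrefix.length).split(/\s+/));
    } else if (line === "a=x-google-flag:conference") {
      conferenceFlag = true;
    }
  }
  return { simSsrcs, conferenceFlag };
}

// Illustrative fragments only (hypothetical SSRC values).
const offerFragment = [
  "m=video 9 UDP/TLS/RTP/SAVPF 100",
  "a=ssrc-group:SIM 1111 2222 3333",
].join("\r\n");
const answerFragment = [
  "m=video 9 UDP/TLS/RTP/SAVPF 100",
  "a=x-google-flag:conference",
].join("\r\n");

console.log(simulcastHints(offerFragment).simSsrcs.length); // number of layers offered
console.log(simulcastHints(answerFragment).conferenceFlag);
```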

One of the benefits of enabling simulcast is that it automatically enables temporal scalability for further granularity. I did a quick check of the framerate received under different network conditions and apparently Slack is not yet making use of this functionality. However, there has been interest in this feature from Slack employees in the WebRTC mailing lists so we should review it more before confirming it.

Another interesting point is always to check if they are using multistream peer connections. In the case of Slack (and many other services/platforms) they are using a new RTCPeerConnection for each sender and for each receiver. This is slightly inefficient because of some overhead and extra establishment time. However, it is way easier to implement, so it is a very popular choice these days, particularly because there is no way to do multistream PeerConnection in a single, cross-browser way.

Codecs

Regarding codecs, we don’t see any surprises there: Opus and VP8 are being used. This is as expected because Chrome does not support simulcast with H264 yet. It is interesting though that they are enabling discontinuous transmission in Opus to save bandwidth in the audio channel when participants are not talking. It would be good to extend this analysis to mobile devices at some point to see if they use the same codecs (I think this is probable).
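Discontinuous transmission shows up in the SDP as `usedtx=1` on the `a=fmtp` line of the Opus payload type. A small sketch of that check follows; the function name and the SDP fragment (including payload type 111) are illustrative assumptions rather than a capture from Slack.

```typescript
// Returns true when any Opus payload type in the SDP has usedtx=1 in its fmtp line.
function opusUsesDtx(sdp: string): boolean {
  const lines = sdp.split(/\r?\n/).map((l) => l.trim());

  // Step 1: find the payload types mapped to Opus.
  const opusPayloadTypes: string[] = [];
  for (const line of lines) {
    const rtpmap = /^a=rtpmap:(\d+) opus\/48000/i.exec(line);
    if (rtpmap !== null && rtpmap[1] !== undefined) {
      opusPayloadTypes.push(rtpmap[1]);
    }
  }

  // Step 2: look for usedtx=1 among the fmtp parameters of those payload types.
  for (const line of lines) {
    const fmtp = /^a=fmtp:(\d+) (.*)$/.exec(line);
    if (fmtp === null || fmtp[1] === undefined || fmtp[2] === undefined) continue;
    if (opusPayloadTypes.indexOf(fmtp[1]) === -1) continue;
    const params = fmtp[2].split(";").map((p) => p.trim().toLowerCase());
    if (params.indexOf("usedtx=1") !== -1) return true;
  }
  return false;
}

// Illustrative audio section (hypothetical values).
const audioFragment = [
  "m=audio 9 UDP/TLS/RTP/SAVPF 111",
  "a=rtpmap:111 opus/48000/2",
  "a=fmtp:111 minptime=10;useinbandfec=1;usedtx=1",
].join("\r\n");

console.log(opusUsesDtx(audioFragment));
```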

Active Speaker Detection

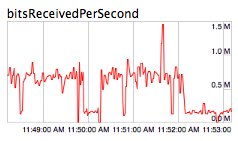

One interesting feature in Slack is the automatic video switching to show only the active speaker. Looking at the Chrome console you can see how the list of active speakers is communicated using the signaling channel. This means the active speaker is detected on the server side. The detection is probably done using the audio-levels header extension that is negotiated in the SDP. This includes a voice flag in the RTP packets. This method is very inexpensive from a media processing perspective since no audio decoding is required. It is also interesting to see how the bitrate received is reduced for the participants that are not shown on the screen, although for some reason the client still keeps receiving some packets (~100kbps). Perhaps these correspond to the lowest simulcast quality.
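To illustrate why this is cheap, here is a sketch of how a server could read the one-byte payload of the client-to-mixer audio level extension (RFC 6464): the top bit is the voice activity flag and the low seven bits are the level in -dBov. The `pickActiveSpeaker` selection rule and all names are assumptions for illustration; this is not Slack's or Janus's actual algorithm.

```typescript
interface AudioLevel {
  voiceActivity: boolean; // the V bit of the extension
  levelDbov: number;      // 0 (loudest) down to -127 (silence)
}

// Decodes the single data byte of the ssrc-audio-level header extension.
function parseAudioLevel(byte: number): AudioLevel {
  if (Math.floor(byte) !== byte || byte < 0 || byte > 255) {
    throw new RangeError("audio level must be a single byte");
  }
  return {
    voiceActivity: (byte & 0x80) !== 0,
    levelDbov: 0 - (byte & 0x7f),
  };
}

interface ParticipantLevel {
  id: string;        // hypothetical participant identifier
  levelByte: number; // latest extension byte seen for that participant
}

// Hypothetical rule: the loudest participant whose voice flag is set wins.
function pickActiveSpeaker(participants: ParticipantLevel[]): string | null {
  let bestId: string | null = null;
  let bestLevel = -128;
  for (const p of participants) {
    const level = parseAudioLevel(p.levelByte);
    if (level.voiceActivity && level.levelDbov > bestLevel) {
      bestId = p.id;
      bestLevel = level.levelDbov;
    }
  }
  return bestId;
}

console.log(parseAudioLevel(0x8a)); // voice flag set, level -10 dBov
```

No decoding of the Opus payload is involved; the server only inspects one byte per packet, which is what makes this approach attractive for an SFU.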

ICE Connectivity

For connection establishment and encryption we see standard ICE and DTLS are being used. A full ICE implementation is used instead of an ice-lite one as we often see these days in many SFUs. One curiosity is that the SFU is returning 2 different IP addresses as candidates and both of them are private. I don’t see a good reason to do that and it looks more like a configuration issue that could slow down the ICE establishment than a feature.
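A quick way to spot this situation in an SDP dump is to parse the `a=candidate` lines and test each address against the RFC 1918 private ranges. The sketch below handles IPv4 only, and the function names, addresses, and ports in the sample are invented for illustration.

```typescript
interface ParsedCandidate {
  transport: string;
  address: string;
  port: number;
  type: string; // host, srflx, prflx or relay
}

// Extracts the ICE candidates from an SDP blob.
function parseCandidates(sdp: string): ParsedCandidate[] {
  const candidates: ParsedCandidate[] = [];
  for (const rawLine of sdp.split(/\r?\n/)) {
    // foundation component transport priority address port "typ" type
    const m = /^a=candidate:(\S+) (\d+) (\S+) (\d+) (\S+) (\d+) typ (\S+)/.exec(rawLine.trim());
    if (m === null) continue;
    candidates.push({
      transport: (m[3] ?? "").toLowerCase(),
      address: m[5] ?? "",
      port: Number(m[6]),
      type: m[7] ?? "",
    });
  }
  return candidates;
}

// True for 10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16.
function isPrivateIPv4(address: string): boolean {
  const m = /^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$/.exec(address);
  if (m === null) return false;
  const first = Number(m[1]);
  const second = Number(m[2]);
  if (first === 10) return true;
  if (first === 172 && second >= 16 && second <= 31) return true;
  return first === 192 && second === 168;
}

// True when the SDP has at least one candidate and every one is a private IPv4 address.
function allCandidatesPrivate(sdp: string): boolean {
  const candidates = parseCandidates(sdp);
  if (candidates.length === 0) return false;
  for (const c of candidates) {
    if (!isPrivateIPv4(c.address)) return false;
  }
  return true;
}

// Illustrative server answer with two private host candidates (made-up values).
const serverCandidates = [
  "a=candidate:1 1 udp 2013266431 10.0.0.5 40000 typ host",
  "a=candidate:2 1 udp 2013266430 172.16.3.7 40000 typ host",
].join("\r\n");

console.log(allCandidatesPrivate(serverCandidates));
```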

Not much has changed

BUNDLE and rtcp-mux are also used as expected! Nothing really changed here from Fippo’s post.

{“author”,”Gustavo Garcia”}

Nice article, thanks for sharing! Especially since I don’t have a premium Slack account and couldn’t check this myself.

To answer Gustavo’s question, Janus does support IPv6 and TURN over TCP/TLS too (via libnice). The TURN setup they have might be a choice, while for what concerns IPv6 my guess is that either the server they’re deploying it on doesn’t support it (IIRC AWS didn’t some time ago, for instance, and not sure if that changed in the meanwhile), or they didn’t enable it in Janus (by default it’s disabled in the config).

On the private IPs as the only candidates, I seem to remember Yoshimasa explaining how this was a trick to basically force you to go over TURN. That’s probably not needed anymore, at least on Chrome, as you could do the same by passing an iceTransportPolicy:”relay” constraint. Anyway, not sure whether or not that was indeed their intention.

Even if your server doesn’t support IPv6, IMO it is a good idea to keep it enabled so that it works in networks with NAT64 routers. Thx for confirming that Janus supports IPv6.

Regarding the private IPs Slack is not forcing TURN. I think the selected candidate pair was prflx-local in my call. What they are forcing by using private addresses is the media server to send the first successful BindingRequest and that should slow down the establishment unnecessarily.

BTW @Lorenzo, if you cannot get a Premium Slack account for free I don’t know who can get it

Yeah, the irony…

Thanks for the clarifications!

Actually it’s easy to implement multistream in a single PeerConnection: just send a list of stream information from the SFU server and assemble the SDP accordingly in the browser (Plan B in Chrome and Unified Plan in Firefox).