Maximizing stream quality on an imperfect network in real time is a delicate balancing act. If you send too much data, you will cause congestion and packet loss. If you send too little, your video (or audio) quality will look like garbage. But how much can you send? One of the techniques used to find out is called “Bandwidth Probing”.

Today Kaustav Ghosh joins us to review bandwidth probing and how it fits into WebRTC’s bandwidth estimation. Kaustav is a Senior Software Engineer at Microsoft, working out of Tallinn, Estonia. Kaustav describes why probing is needed and how it works, reviews the libwebrtc implementation in Google Congestion Control (GCC), and covers tooling used to debug bandwidth estimation (BWE) issues.

Introduction

Google Congestion Control (GCC) is the advanced congestion control algorithm used in WebRTC. It plays a crucial role in optimizing network performance, particularly in real-time communication. Bandwidth probing is one of the techniques used in GCC to help estimate bandwidth.

Unfortunately, bandwidth probing is one of the less documented areas of WebRTC. In this post, I document the mysteries behind bandwidth probing based on the latest Chromium WebRTC source code at the time of writing.

I recommend this draft document for details on the GCC algorithm in WebRTC. It may be outdated, but it explains a lot of the basics.

Why is bandwidth probing needed in Congestion Control?

Congestion Control in RTC is the process of detecting network congestion so the sender can control the amount of data it sends without overloading the network and causing packet loss. To do that, the WebRTC stack needs a running estimate of how much bandwidth is available. If your bandwidth estimate (BWE) is too high, then the WebRTC stack might send too much information and cause congestion, which will lower the media quality. If the BWE is too low, WebRTC may not send enough bits to have sufficient quality.

WebRTC clients send a stream and receive RTCP feedback from the receiver that indicates whether the packets were successfully delivered and when they arrived. This feedback is used as input to the two traditional bandwidth estimators in WebRTC – (1) a delay-based estimator and (2) a loss-based estimator. The loss-based estimator uses the packet loss information and the delay-based estimator uses the information about packet delay in the network. More information on the delay-based estimator can be found here, and information on the loss-based estimator can be found here. The final bandwidth estimate is the minimum of the two estimates.

The delay-based estimator is based on the principle of additive increase and multiplicative decrease (AIMD). Because of this, when network quality is poor, it can respond quickly by sharply decreasing the bitrate sent. However, in situations such as the start of a call, the estimator is typically conservative because it does not know how much the network can handle. Similarly, after a congestion event, the delay-based estimator does not want to overwhelm the network and cause another congestion issue, so the bandwidth estimate increases only slowly. But what if there is actually plenty of bandwidth available to improve the stream quality? How do you know? This is where probing is used. Probing is a way to test the waters and see how much data the connection can handle without using “real” traffic. Probing involves sending RTP packets at a rate higher than the current bandwidth estimate for a short period, so that the estimate can be increased aggressively.

The probe packets can be disregarded without degrading media quality at the receiving end.
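To make the slow ramp-up concrete, here is a minimal Python sketch of a simplified AIMD update and of combining the two estimators by taking their minimum. The 50 kbps additive step is a hypothetical value chosen for illustration, and the 0.85 decrease factor is the value recommended in the GCC draft; libwebrtc’s real rate controller is considerably more involved.

```python
import math


def combined_estimate(delay_based_bps: float, loss_based_bps: float) -> float:
    """The final bandwidth estimate is the minimum of the two estimators."""
    return min(delay_based_bps, loss_based_bps)


def aimd_step(estimate_bps: float, overusing: bool,
              additive_step_bps: float = 50_000, beta: float = 0.85) -> float:
    """One simplified AIMD update (the step size is a hypothetical value)."""
    if overusing:
        # Multiplicative decrease: back off quickly when congestion is detected.
        return estimate_bps * beta
    # Additive increase: creep up slowly while the network looks fine.
    return estimate_bps + additive_step_bps


def intervals_to_reach(start_bps: float, target_bps: float,
                       additive_step_bps: float = 50_000) -> int:
    """Number of additive-increase intervals needed to go from start to target."""
    return max(0, math.ceil((target_bps - start_bps) / additive_step_bps))


# From the 300 kbps start estimate to the ~1.5 Mbps needed for 720p video.
print(intervals_to_reach(300_000, 1_500_000))  # 24
print(combined_estimate(1_200_000, 900_000))   # 900000
```

Under these assumed numbers, AIMD needs 24 update intervals to climb to 1.5 Mbps, while a successful probe can move the estimate in a single step.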

Probing techniques

WebRTC uses two techniques for probing – RTX probes and padding probes. Note that Forward Error Correction (FEC) packets could also be used for probing, but that approach is not implemented in WebRTC.

RTP Retransmission (RTX) probes

RTX probes are resends of previously sent RTP packets using the RTX retransmission payload format specified in RFC 4588, when RTX has been negotiated with the peer. These packets carry a new abs-send-time or transport-wide-cc sequence number and use the RTX RTP stream associated with the media RTP stream (i.e., the RTX SSRC, payload type, and sequence numbers); per RFC 4588, the RTP timestamp is copied from the original packet.

RTX probing uses recently sent RTP packets that the remote side has not yet acknowledged. If the original packet was lost, the resent copy has a small chance of arriving in time to recover it; otherwise, it does not affect RTP processing at the receiver.

Using RTX for probing is generally preferred. Unlike the RTP padding probes discussed in the next section, RTX packets carry real media payloads, so they closely resemble the actual RTP stream.
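The RFC 4588 payload format itself is simple: the RTX payload is the 2-byte original sequence number (OSN) followed by the original payload. The minimal Python sketch below wraps an RTP packet that way. It assumes plain 12-byte RTP headers with no CSRCs, header extensions, or padding; real probe packets additionally carry the abs-send-time or transport-wide-cc extension, which is omitted here. The helper names are hypothetical, and copying the marker bit is a choice of this sketch.

```python
import struct

RTP_HEADER = struct.Struct("!BBHII")  # V/P/X/CC, M/PT, sequence number, timestamp, SSRC


def build_rtp_packet(pt: int, seq: int, ts: int, ssrc: int,
                     payload: bytes, marker: bool = False) -> bytes:
    """Minimal RTP packet: version 2, no padding, no extensions, no CSRCs."""
    byte0 = 2 << 6
    byte1 = (int(marker) << 7) | (pt & 0x7F)
    return RTP_HEADER.pack(byte0, byte1, seq & 0xFFFF, ts & 0xFFFFFFFF,
                           ssrc & 0xFFFFFFFF) + payload


def build_rtx_packet(original: bytes, rtx_pt: int, rtx_seq: int, rtx_ssrc: int) -> bytes:
    """Wrap an original RTP packet in the RFC 4588 RTX payload format."""
    _, byte1, original_seq, ts, _ = RTP_HEADER.unpack_from(original)
    marker = bool(byte1 & 0x80)
    original_payload = original[RTP_HEADER.size:]
    # RTX payload = 2-byte original sequence number (OSN) + original payload.
    rtx_payload = struct.pack("!H", original_seq) + original_payload
    # New SSRC, payload type and sequence number; timestamp copied from the original.
    return build_rtp_packet(rtx_pt, rtx_seq, ts, rtx_ssrc, rtx_payload, marker)


def parse_rtx_osn(rtx_packet: bytes) -> int:
    """Recover the original sequence number from an RTX packet."""
    (osn,) = struct.unpack_from("!H", rtx_packet, RTP_HEADER.size)
    return osn


media = build_rtp_packet(96, 1000, 90_000, 0x1111, b"frame-data", marker=True)
rtx = build_rtx_packet(media, rtx_pt=97, rtx_seq=5, rtx_ssrc=0x2222)
print(parse_rtx_osn(rtx))  # 1000
```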

RTP Padding probes

Padding probes consist only of RTP padding packets that carry a header extension (either abs-send-time or the transport-wide-cc sequence number) and have the RTP P bit set. Their payload has a specific format: up to 255 bytes of zeroes, where the last byte holds the padding length, so a full-size padding packet ends with a byte of value 255. See section 5.1 of RFC 3550. Padding probes use the RTX RTP stream (i.e., payload type, sequence number, and timestamp) when RTX is negotiated, and otherwise share the same RTP stream as the media packets.
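As a minimal sketch of the RFC 3550 padding layout, the Python below builds a padding-only RTP packet and shows how a receiver strips the padding. It assumes a plain 12-byte header without the header extension real probes carry; the function names are hypothetical.

```python
import struct

RTP_HEADER = struct.Struct("!BBHII")  # V/P/X/CC, M/PT, sequence number, timestamp, SSRC
MAX_PADDING_BYTES = 255  # the padding count is stored in a single byte


def build_padding_packet(pt: int, seq: int, ts: int, ssrc: int,
                         padding_len: int = MAX_PADDING_BYTES) -> bytes:
    """RTP packet whose payload is only padding (RFC 3550, section 5.1)."""
    if not 1 <= padding_len <= MAX_PADDING_BYTES:
        raise ValueError("padding length must be between 1 and 255")
    byte0 = (2 << 6) | (1 << 5)  # version 2, P bit set
    byte1 = pt & 0x7F            # marker bit cleared
    header = RTP_HEADER.pack(byte0, byte1, seq & 0xFFFF, ts & 0xFFFFFFFF,
                             ssrc & 0xFFFFFFFF)
    # Zero bytes, with the last byte counting all padding bytes (itself included).
    return header + bytes(padding_len - 1) + bytes([padding_len])


def media_payload_len(packet: bytes) -> int:
    """Bytes of real payload left once padding is stripped, as a receiver would."""
    body = len(packet) - RTP_HEADER.size
    if packet[0] & 0x20:
        body -= packet[-1]
    return body


pkt = build_padding_packet(96, 7, 1000, 0xABCD)
print(len(pkt), pkt[-1], media_payload_len(pkt))  # 267 255 0
```

The receiver ends up with zero bytes of media, which is why these packets can be dropped without affecting playback.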

These probes are used either when:

- RTX is not negotiated (such as for audio, less commonly for video) or

- no suitable original packet is found for RTX probing.

To avoid timestamp confusion, padding probes should not be interleaved with the packets of a video frame. Probe packets may, however, follow the packet carrying the frame’s marker bit and reuse that frame’s RTP timestamp. Padding probe packets are used only for bandwidth estimation and nowhere else in the WebRTC stack.
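The frame-boundary rule can be sketched for a forwarding component such as an SFU, the same scenario as the reader question at the end of this post. In this hypothetical Python example, padding probes are inserted only after a packet with the marker bit set, reuse that frame’s timestamp, take the next sequence numbers, and shift every later sequence number. The `Pkt` type and function name are illustrative, not a libwebrtc API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pkt:
    seq: int
    ts: int
    marker: bool
    padding: bool = False


def insert_padding_at_frame_end(packets: List[Pkt], probe_count: int) -> List[Pkt]:
    """Insert probe_count padding packets right after the first frame-ending packet."""
    out: List[Pkt] = []
    offset = 0
    inserted = False
    for pkt in packets:
        seq = (pkt.seq + offset) & 0xFFFF  # rewrite to make room for the probes
        out.append(Pkt(seq, pkt.ts, pkt.marker))
        if pkt.marker and not inserted:
            # Only at a frame boundary, never between packets of the same frame.
            for i in range(1, probe_count + 1):
                out.append(Pkt((seq + i) & 0xFFFF, pkt.ts, marker=False, padding=True))
            offset += probe_count
            inserted = True
    return out


stream = [Pkt(122, 1000, False), Pkt(123, 1000, True),
          Pkt(124, 4000, False), Pkt(125, 4000, True)]
for p in insert_padding_at_frame_end(stream, 3):
    print(p.seq, p.ts, "padding" if p.padding else "media")
```

The three probes get sequence numbers 124 to 126 with timestamp 1000, and the next frame continues at 127 and 128.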

With at most 255 bytes of padding, these packets are small, so many more of them are needed to test higher bitrates. This is a disadvantage from a congestion control point of view, because packet loss may occur simply because so many packets are sent in a short amount of time.
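A quick calculation shows the difference. The sketch below assumes a hypothetical 15 ms probe window at the 1800 kbps initial probe target and a hypothetical 1200-byte RTX packet; it counts payload bytes only, so real numbers would differ slightly once RTP headers and extensions are included.

```python
import math


def packets_needed(target_bps: int, duration_ms: int, payload_bytes: int) -> int:
    """Packets needed to carry target_bps for duration_ms (payload bytes only)."""
    bytes_needed = target_bps * duration_ms / 8 / 1000
    return math.ceil(bytes_needed / payload_bytes)


print(packets_needed(1_800_000, 15, 255))   # padding probes: 14
print(packets_needed(1_800_000, 15, 1200))  # RTX probes: 3
```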

Sending probe clusters

In this section, we will walk through the algorithm. For full details, you can always reference the Chromium source here: https://source.chromium.org/chromium/chromium/src/+/main:third_party/webrtc/modules/pacing/

A probe cluster consists of several probe packets sent within a very short period to check whether the target bitrate can be achieved. Because the packets are sent over such a short duration, even high bitrates can be tested (bitrate = bits sent / (timeEnd - timeStart)). When it’s time to send a probe, a probe cluster is created by calling BitrateProber::CreateProbeCluster.
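The bitrate formula can be illustrated with a small Python sketch: packets are scheduled so that cumulative bits track the target rate, and the achieved rate is computed as bits sent over (timeEnd - timeStart). Excluding the last packet’s bits is a design choice of this sketch: that packet leaves at timeEnd, so its bits fall outside the measured interval. Function names are hypothetical.

```python
from typing import List


def probe_send_times_ms(target_bps: float, sizes_bytes: List[int]) -> List[float]:
    """Schedule packets so cumulative bits track target_bps from t = 0."""
    times, bits_so_far = [], 0
    for size in sizes_bytes:
        times.append(bits_so_far * 1000 / target_bps)
        bits_so_far += size * 8
    return times


def cluster_bitrate_bps(sizes_bytes: List[int], times_ms: List[float]) -> float:
    """bits sent / (timeEnd - timeStart), excluding the last packet's bits."""
    duration_ms = times_ms[-1] - times_ms[0]
    bits = sum(sizes_bytes[:-1]) * 8
    return bits * 1000 / duration_ms


sizes = [1200] * 5
times = probe_send_times_ms(1_800_000, sizes)
print([round(t, 2) for t in times])  # [0.0, 5.33, 10.67, 16.0, 21.33]
print(round(cluster_bitrate_bps(sizes, times)))  # 1800000
```

Five 1200-byte packets spread over roughly 21 ms are enough to exercise 1.8 Mbps.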

A new probing session is initialized if the prober is allowed to probe. In the current implementation, however, the prober is not initialized unless a packet large enough to use for probing is available. The default minimum packet size required to initialize the prober is 200 bytes, which is configurable through the WebRTC-Bwe-ProbingBehavior field trial.

At the time of writing this post, this logic is being updated – you can see progress in this WebRTC ticket.
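A toy Python model of the initialization rule described above might look like the sketch below. The class and method names are hypothetical and only loosely mirror BitrateProber; the 200-byte default comes from the text, and the `enabled` flag stands in for “allowed to probe”.

```python
class HypotheticalProber:
    """Toy model: clusters wait until a packet large enough for probing shows up."""

    def __init__(self, min_packet_size: int = 200, enabled: bool = True):
        self.min_packet_size = min_packet_size  # default from the text, configurable
        self.enabled = enabled                  # stands in for "allowed to probe"
        self.pending_clusters = []
        self.active = False

    def create_probe_cluster(self, target_bps: int) -> None:
        if self.enabled:
            self.pending_clusters.append(target_bps)

    def on_incoming_packet(self, size_bytes: int) -> None:
        # Start a probing session only with a pending cluster and a big enough packet.
        if (self.enabled and not self.active and self.pending_clusters
                and size_bytes >= self.min_packet_size):
            self.active = True


prober = HypotheticalProber()
prober.create_probe_cluster(900_000)
prober.on_incoming_packet(100)   # too small, prober stays inactive
prober.on_incoming_packet(1200)  # large enough, probing session starts
print(prober.active)  # True
```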

There is also an RTPSender::GeneratePadding function that finds suitably sized packets in the packet history and, if required, generates padding packets. At this point, the selected probe packets are not sent yet; instead, they are put into the pacer queue.

Lastly, the pacer component smooths the flow of packets sent onto the network. In PacingController::ProcessPackets, the pacer checks its queue of pending packets, including probe packets, and forwards them to the network.
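The overall idea of filling a probe with history packets first and padding second can be sketched as follows. The largest-first greedy selection is a hypothetical policy chosen for illustration, not libwebrtc’s actual selection logic; the returned plan is what would then be handed to the pacer queue.

```python
from typing import List, Tuple


def generate_probe_packets(target_bytes: int, history_sizes: List[int],
                           rtx_negotiated: bool,
                           max_padding: int = 255) -> List[Tuple[str, int]]:
    """Hypothetical policy: reuse fitting history packets as RTX, pad the rest."""
    plan: List[Tuple[str, int]] = []
    remaining = target_bytes
    if rtx_negotiated:
        # Largest-first greedy choice; each history packet is resent at most once.
        for size in sorted(history_sizes, reverse=True):
            if size <= remaining:
                plan.append(("rtx", size))
                remaining -= size
    # Fill whatever is left with padding-only packets of at most 255 bytes.
    while remaining > 0:
        size = min(max_padding, remaining)
        plan.append(("padding", size))
        remaining -= size
    return plan


print(generate_probe_packets(3000, [1200, 1100, 300], rtx_negotiated=True))
# [('rtx', 1200), ('rtx', 1100), ('rtx', 300), ('padding', 255), ('padding', 145)]
```

Without RTX, the same 3000 bytes would need 12 padding packets.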
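One common way to pace is to track a “debt” of bits already sent and let the next packet out only once that debt has been paid down at the pacing rate. The hypothetical class below sketches that approach for a queue that mixes media and probe packets; it is an illustration of pacing in general, not a reproduction of PacingController.

```python
from collections import deque
from typing import List, Optional


class HypotheticalPacer:
    """Debt-based pacer: a packet goes out only once earlier sends are paid off."""

    def __init__(self, pacing_rate_bps: float):
        self.pacing_rate_bps = pacing_rate_bps
        self.queue = deque()  # (label, size_bytes), including probe packets
        self.debt_bits = 0.0
        self.last_process_ms: Optional[float] = None
        self.sent: List[str] = []

    def enqueue(self, label: str, size_bytes: int) -> None:
        self.queue.append((label, size_bytes))

    def process_packets(self, now_ms: float) -> None:
        # Pay down debt for the time elapsed since the last call.
        if self.last_process_ms is not None:
            elapsed_ms = now_ms - self.last_process_ms
            self.debt_bits = max(0.0, self.debt_bits - self.pacing_rate_bps * elapsed_ms / 1000)
        self.last_process_ms = now_ms
        # Send while there is no outstanding debt.
        while self.queue and self.debt_bits <= 0:
            label, size = self.queue.popleft()
            self.sent.append(label)
            self.debt_bits += size * 8


pacer = HypotheticalPacer(1_000_000)  # 1 Mbps: a 1250-byte packet takes 10 ms
pacer.enqueue("media-1", 1250)
pacer.enqueue("probe-1", 1250)
for t in (0, 5, 10):
    pacer.process_packets(t)
    print(t, pacer.sent)
```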

Estimating bitrate through probes

The sender sends out the probe packets of a probe cluster. Then the receiver lets the sender know the arrival time of each packet through the transport-wide congestion control RTCP feedback message.

With sender-side bandwidth estimation, the sender knows which packets are part of a probe cluster and which are regular media packets. This extra information lets the sender better handle situations such as the loss of a probe packet. With receiver-side bandwidth estimation, the receiver doesn’t have this information.

For every probe packet the sender receives feedback about, it calls ProbeBitrateEstimator::HandleProbeAndEstimateBitrate(const PacketResult& packet_feedback). This function returns the estimated bitrate if the probe packet completes a valid probe cluster.

First, this method calculates the send interval, receive interval, send rate, and receive rate of the probe cluster. A probe cluster is successful (valid) if:

- send and receive intervals of the probe cluster are valid

- the ratio between the receive rate and send rate of the probe cluster is valid

The estimated bitrate is the minimum of the send rate and receive rate of the probe cluster. If the receive rate is significantly lower than the send rate, this suggests that the probe has found the true capacity of the link. In this case, the estimate is set slightly below the measured receive rate so as not to immediately overuse the link.
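The validation steps above can be sketched in Python. The thresholds are illustrative assumptions rather than authoritative libwebrtc values, and excluding the last-sent and first-received packets from the bit counts is a choice of this sketch (those packets sit at the edges of their intervals). `ProbeFeedback` and `estimate_from_cluster` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

# Illustrative thresholds; treat them as assumptions, not libwebrtc's exact values.
MAX_VALID_RATIO = 2.0            # receive rate may not exceed 2x the send rate
MIN_RATIO_FOR_UNSATURATED = 0.9  # below this, the link is considered saturated
TARGET_UTILIZATION = 0.95        # back-off applied to a saturated link
MAX_INTERVAL_MS = 1000.0


@dataclass
class ProbeFeedback:
    send_ms: float
    recv_ms: float
    size_bytes: int


def estimate_from_cluster(packets: List[ProbeFeedback]) -> Optional[float]:
    """Bitrate estimate (bps) for a complete probe cluster, or None if invalid."""
    if len(packets) < 2:
        return None
    by_send = sorted(packets, key=lambda p: p.send_ms)
    by_recv = sorted(packets, key=lambda p: p.recv_ms)
    send_interval = by_send[-1].send_ms - by_send[0].send_ms
    recv_interval = by_recv[-1].recv_ms - by_recv[0].recv_ms
    # Check 1: both intervals must be positive and not implausibly long.
    if not (0 < send_interval <= MAX_INTERVAL_MS and 0 < recv_interval <= MAX_INTERVAL_MS):
        return None
    total_bits = 8 * sum(p.size_bytes for p in packets)
    send_rate = (total_bits - 8 * by_send[-1].size_bytes) * 1000 / send_interval
    recv_rate = (total_bits - 8 * by_recv[0].size_bytes) * 1000 / recv_interval
    # Check 2: receiving much faster than sending points to a bad measurement.
    if recv_rate / send_rate > MAX_VALID_RATIO:
        return None
    estimate = min(send_rate, recv_rate)
    if recv_rate < MIN_RATIO_FOR_UNSATURATED * send_rate:
        # Link looks saturated: stay a little below the measured capacity.
        estimate = TARGET_UTILIZATION * recv_rate
    return estimate


ok = [ProbeFeedback(5 * i, 50 + 5 * i, 1200) for i in range(5)]
saturated = [ProbeFeedback(5 * i, 50 + 10 * i, 1200) for i in range(5)]
print(estimate_from_cluster(ok))               # 1920000.0
print(round(estimate_from_cluster(saturated)))  # 912000
```

In the saturated case, packets arrive at half the rate they were sent, so the estimate lands just under the 960 kbps receive rate.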

When to probe

This section outlines the key scenarios when probes are sent during a call.

At the start of the call

At the start of a non-simulcast call, WebRTC uses a hardcoded start bandwidth estimate of 300 kbps. Traditional estimators can take a relatively long time to ramp up to an estimate capable of carrying high-quality video, e.g., 720p video at 1.5 Mbps on a good network.

As you can see in the GCC code, ProbeController sends two probes when no probe has been triggered yet (the kInit state) and the transport is connected (i.e., the network is available). These probes ramp up the estimate exponentially. The target bitrates of the two exponential probes are 3 * start_bitrate = 900 kbps and 6 * start_bitrate = 1800 kbps, where start_bitrate is the hardcoded 300 kbps.

If the network is not yet available at that point, the two exponential probes are triggered as soon as it becomes available.
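The start-of-call behavior can be sketched as a tiny state machine. Only the kInit state name and the 3x/6x multipliers come from the text; the class, its methods, and the kWaitingForProbingResult state name are assumptions made for this illustration.

```python
from typing import List

START_BITRATE_BPS = 300_000  # hardcoded start estimate from the text


class HypotheticalProbeController:
    def __init__(self) -> None:
        self.state = "kInit"
        self.network_available = False
        self.probe_targets: List[int] = []

    def on_network_availability(self, available: bool) -> None:
        self.network_available = available
        self._maybe_initiate_exponential_probing()

    def _maybe_initiate_exponential_probing(self) -> None:
        # Only from kInit, and only once the transport is connected.
        if self.state == "kInit" and self.network_available:
            self.probe_targets = [3 * START_BITRATE_BPS, 6 * START_BITRATE_BPS]
            self.state = "kWaitingForProbingResult"


controller = HypotheticalProbeController()
controller.on_network_availability(False)  # no probes yet
controller.on_network_availability(True)   # network up: fire both probes
print(controller.probe_targets)  # [900000, 1800000]
```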

When the Max bitrate of the video channel increases

Once probing is complete, if the new max bitrate of the video channel (the same as the b=AS bandwidth attribute in SDP) is higher than both the old max bitrate and the current estimate, another probe can be sent to check whether this higher bitrate is achievable.
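The condition above reduces to a small predicate. This hypothetical helper returns the probe target, or `None` when no probe should be sent.

```python
from typing import Optional


def probe_target_on_max_bitrate_change(old_max_bps: int, new_max_bps: int,
                                       estimate_bps: int,
                                       probing_complete: bool) -> Optional[int]:
    """Probe toward a raised max bitrate only if it beats both old max and estimate."""
    if probing_complete and new_max_bps > old_max_bps and new_max_bps > estimate_bps:
        return new_max_bps
    return None


print(probe_target_on_max_bitrate_change(2_000_000, 4_000_000, 2_000_000, True))  # 4000000
print(probe_target_on_max_bitrate_change(2_000_000, 4_000_000, 5_000_000, True))  # None
```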

When probing is in progress and the channel has more capacity

While probing is in progress and ProbeController is still waiting for probing results, it may probe further if the new estimated bitrate exceeds the minimum bitrate required to probe further and the channel appears to have more capacity.
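A hedged sketch of this rule follows. The 0.7 fraction used to derive the minimum bitrate required to probe further, the 2x scale for the next target, and treating “more capacity” as “estimate below the max bitrate” are all assumptions chosen for illustration.

```python
from typing import Optional


def next_further_probe(estimate_bps: float, last_probe_target_bps: float,
                       max_bitrate_bps: float, min_fraction: float = 0.7,
                       scale: float = 2.0) -> Optional[float]:
    """Target for a further probe while waiting for results, or None."""
    min_bitrate_to_probe_further = min_fraction * last_probe_target_bps  # assumed rule
    has_more_capacity = estimate_bps < max_bitrate_bps                   # assumed meaning
    if estimate_bps > min_bitrate_to_probe_further and has_more_capacity:
        return min(scale * estimate_bps, max_bitrate_bps)
    return None


print(next_further_probe(1_500_000, 1_800_000, 5_000_000))  # 3000000.0
print(next_further_probe(1_000_000, 1_800_000, 5_000_000))  # None
```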

After a large drop in the bandwidth estimate

The bandwidth estimator may react to transient network anomalies and drop the bandwidth estimate. Ideally, the estimator should not react at all to problems that have nothing to do with congestion. However, because the estimator wants to react quickly, it doesn’t always have time to determine if the problem is persistent or not. If a transient problem causes a large bitrate drop, it can take a long time to fully recover through the normal ramp-up process.

ProbeController::RequestProbe() is called once the estimator has returned to a normal state after a large drop in the bandwidth estimate. It initiates a single probe session (if not already probing) at 0.85 * bitrate_before_last_large_drop_. If the probe session fails, the drop is assumed to be real, caused by a competing flow or a network change.
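The recovery probe can be sketched as follows. The 0.85 factor comes from the text; the 0.66 threshold that defines a “large” drop and the class itself are hypothetical.

```python
from typing import Optional


class HypotheticalDropTracker:
    """Remembers the estimate before a large drop and requests a recovery probe."""

    def __init__(self, large_drop_fraction: float = 0.66, probe_fraction: float = 0.85):
        self.large_drop_fraction = large_drop_fraction  # assumed threshold
        self.probe_fraction = probe_fraction            # 0.85 from the text
        self.estimate_bps = 0.0
        self.bitrate_before_last_large_drop = 0.0

    def on_estimate(self, new_estimate_bps: float) -> None:
        if new_estimate_bps < self.large_drop_fraction * self.estimate_bps:
            self.bitrate_before_last_large_drop = self.estimate_bps
        self.estimate_bps = new_estimate_bps

    def request_probe(self, already_probing: bool) -> Optional[float]:
        """Target for a single recovery probe, or None if no probe is needed."""
        if already_probing or self.bitrate_before_last_large_drop <= self.estimate_bps:
            return None
        return self.probe_fraction * self.bitrate_before_last_large_drop


tracker = HypotheticalDropTracker()
tracker.on_estimate(2_000_000)
tracker.on_estimate(800_000)  # large drop, e.g. from a transient network glitch
print(round(tracker.request_probe(already_probing=False)))  # 1700000
```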

When max_total_allocated_bitrate has changed

When the ProbeController::OnMaxTotalAllocatedBitrate() function is called, it indicates that max_total_allocated_bitrate (the sum of the configured bitrates for all active send streams) has changed. The algorithm then sends probes to check whether this bitrate is achievable.

This is one case in which a low-target-bitrate probe may follow a high-target-bitrate probe, which can happen when the max_total_allocated_bitrate value decreases.

Application limited region (ALR) probing

The amount of data sent to the network can be limited by the application’s ability to generate traffic rather than by bandwidth restrictions, e.g., when sharing a browser tab with static content. This situation is called the application limited region (ALR). In ALR, traditional congestion control works poorly at keeping the bandwidth estimate high because there is not enough traffic to measure the link.

In this case, the ProbeController sends periodic probes to generate enough data to maintain a high bandwidth estimate.

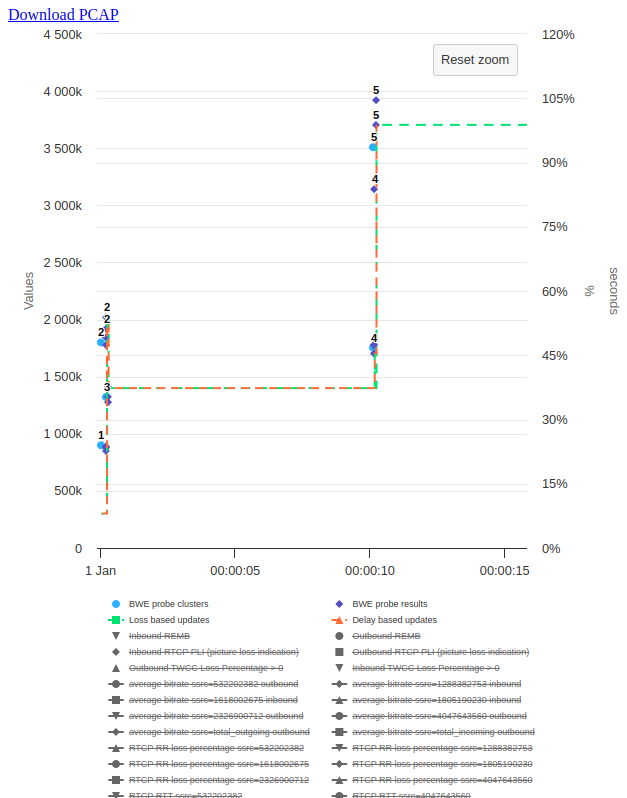

For example, the graph above is from a call with video-based screen sharing enabled. By design, video-based sharing of static content is limited by the application rather than by the network. The blue line shows that the sent bitrate was below 400 kbps most of the time, so libwebrtc sends periodic probes every 5 seconds to maintain a high bandwidth estimate.
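Periodic ALR probing can be sketched as a scheduler over send-rate samples. The 5-second period matches the graph above; defining ALR as “sending below 65% of the estimate” is a hypothetical threshold, and the function name is illustrative.

```python
from typing import List, Optional, Tuple


def schedule_alr_probes(samples: List[Tuple[int, float]], estimate_bps: float,
                        alr_fraction: float = 0.65,
                        period_ms: int = 5000) -> List[int]:
    """Return times (ms) at which periodic ALR probes would fire."""
    probe_times: List[int] = []
    reference_ms: Optional[int] = None  # ALR start time or time of the last probe
    for t_ms, send_bps in samples:
        in_alr = send_bps < alr_fraction * estimate_bps  # assumed ALR definition
        if not in_alr:
            reference_ms = None
        elif reference_ms is None:
            reference_ms = t_ms
        elif t_ms - reference_ms >= period_ms:
            probe_times.append(t_ms)
            reference_ms = t_ms
    return probe_times


# Screen share sending ~400 kbps against a 2 Mbps estimate, sampled every second.
samples = [(t, 400_000) for t in range(0, 12_001, 1000)]
print(schedule_alr_probes(samples, 2_000_000))  # [5000, 10000]
```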

Probing in action

You can quickly explore how probing works in a WebRTC call using either Fippo’s online visualizer or Google’s RTC event log visualizer. Both tools draw graphs by parsing the RTC event log, but the online visualizer has better visualization support for probe clusters. In the example below, I captured an RTC event log from a sample WebRTC video call and used the online visualizer.

How bandwidth estimate changes once probes are successful

At the start of the call, the bandwidth estimate starts at the hardcoded 300 kbps value. But once video is enabled, the sender wants to send high-quality video as soon as possible, so it sends 3 probe clusters (cluster IDs 1, 2, and 3) with different bitrates. These are marked as light blue dots. Once these probe clusters succeed (dark blue dots), the bandwidth estimate increases aggressively to more than 1 Mbps in under a second. After that, the sender sends two more probe clusters (cluster IDs 4 and 5), and once these succeed, the estimate rises to more than 3 Mbps.

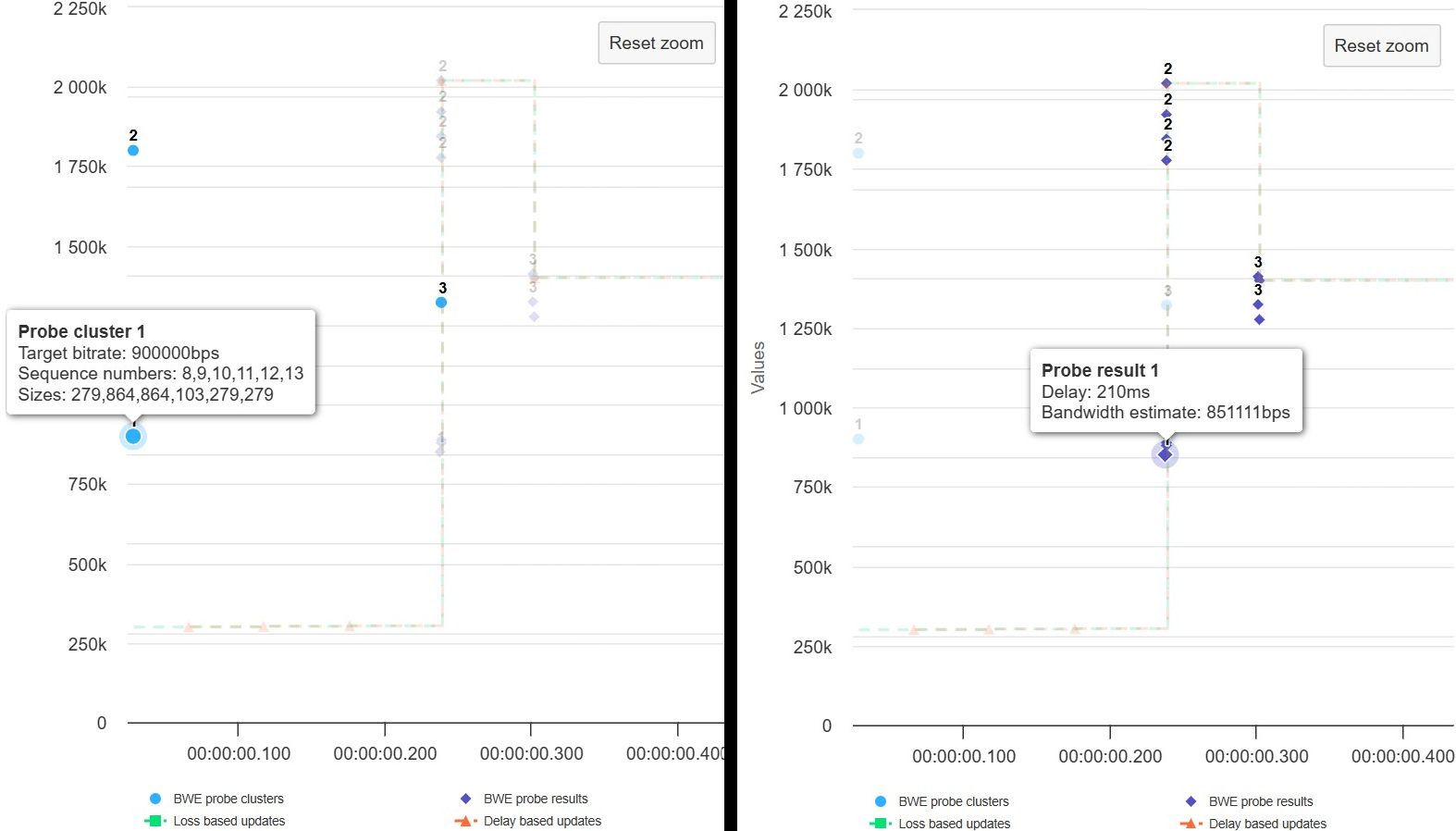

Probe cluster details

Hovering over a probe cluster in the graph shows its details, i.e., the cluster ID, the probe target bitrate, and the sequence numbers and sizes of the RTP packets in the cluster. Hovering over a probe cluster result shows the associated delay and estimated bandwidth.

It is also possible to download the RTP packet dump from this tool to inspect individual probe packets in Wireshark. At the time of writing, the tool doesn’t fully support the new event log format, so consider starting Chrome with --force-fieldtrials=WebRTC-RtcEventLogNewFormat/Disabled/ to get the full RTP packet dump and other useful features.

Conclusion

In summary, bandwidth probing works by sending extra packets to gauge the available bandwidth. Acting like a scout, probing helps ensure a smooth and enjoyable call experience.

libwebrtc may decide to send probes of different target bitrates in various situations during the call. Bandwidth probing is still undergoing further improvements and refinements as libwebrtc continues to evolve.

Author: Kaustav Ghosh

Thanks for the article! Could you please clarify the phrase: “To avoid timestamp confusion, padding probes should not be interleaved with packets of a video frame”? What does it mean from a protocol point of view? Right now I’m trying to implement a probing mechanism in our SFU, but I get an instant freeze in the browser and have no idea how to debug it. In webrtc-internals there are no NACKs or lost packets, so it might be a problem on the decoder side.

Let’s take an example. The SFU sends the last video packet with seqNum=123, ts=1000. Then the SFU receives a command to send 3 probing packets. It allocates the next 3 seqNums (124, 125, 126) and creates packets with these seqNums and the latest timestamp (in our case, 1000). Then the SFU continues forwarding video packets with rewritten seqNums (so the next ones will be 127, 128…). Is this logic correct?

Yes, the example you mentioned is correct.