We have been waiting a long time for Microsoft to add WebRTC to its browser portfolio. That day finally came last month when Microsoft announced its new Windows 10 Edge browser had ORTC. This certainly does not immediately address the Internet Explorer population and ORTC is still new to many (which is why we cover it often). On the positive side, interoperability between Edge, Chrome, and Firefox on the audio side was proven within days by multiple parties. Much of ORTC is finding its way into the WebRTC 1.0 specification and browser implementations.

I was with Bernard Aboba, Microsoft’s WebRTC lead, at the IIT Real Time Communications Conference (IIT-RTC) and asked him for an interview to cover the Edge implementation and where Microsoft is headed. The conversation below has been edited for readability and technical accuracy. The full, unedited audio recording is also available below if you would rather listen than read. Warning – we recorded our casual conversation in an open room off my notebook microphone, so please do not expect high production value.

We cover what exactly is in the Edge ORTC implementation, why ORTC in the first place, the roadmap, and much more.

You can view the IIT-RTC ORTC Update presentation slides given by Bernard, Robin Raymond of Hookflash, and Peter Thatcher of Google here.

{“editor”, “chad hart“}

Intro to Bernard

webrtcHacks: Hi Bernard. To start out, can you please describe your role at Microsoft and the projects you’ve been working on? Can you give a little bit of background about your long time involvement in WebRTC Standards, ORTC, and also your new W3C responsibilities?

Bernard: I’m a Principal Architect at Skype within Microsoft, and I work on the Edge ORTC project primarily, but also help out other groups within the company that are interested in WebRTC. I have been involved in ORTC since the very beginning as one of the co-authors of ORTC, and very recently, signed up as an Editor of WebRTC 1.0.

webrtcHacks: That’s concurrent with some of the agreement around merging more of ORTC into WebRTC going forward. Is that accurate?

Bernard: One of the reasons I signed up was that I found that I was having to file WebRTC 1.0 API issues and follow them. Because many of the remaining bugs in ORTC related to WebRTC 1.0, and of course we wanted the object models to be synced between WebRTC 1.0 and ORTC, I had to review pull requests for WebRTC 1.0 anyway, and reflect the changes within ORTC. Since I had to be aware of WebRTC 1.0 Issues and Pull Requests to manage the ORTC Issues and Pull Requests, I figured I might as well be an editor of WebRTC 1.0.

What’s in Edge

webrtcHacks: Then I guess we’ll move on to Edge. Edge and Edge Preview are out there with varying forms of WebRTC. Can you walk through a little bit of that?

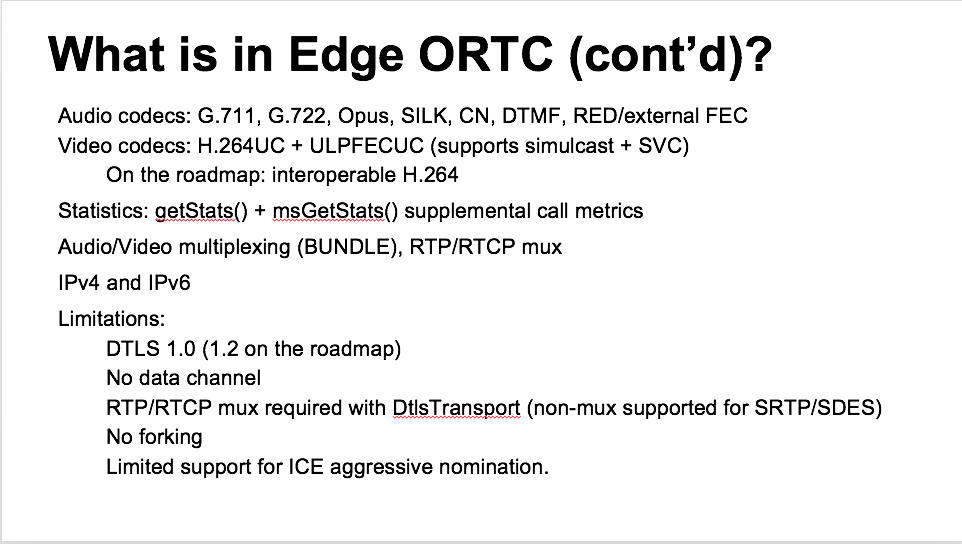

Bernard: Just also to clarify for people, Edge ORTC is in what’s called Windows Insider Preview. Windows Insider Preview builds are only available to people who specifically sign up to receive them. If you sign up for the Windows Insider Preview program and install the most recent build 10547, then you will have access to the ORTC API in Edge. In terms of what is in it, the audio is relatively complete. We have:

- G.711,

- G.722,

- Opus,

- Comfort Noise,

- DTMF, as well as the

- SILK codec.

Then on the video side, we have an implementation of H.264/SVC, known as H.264UC, which does both simulcast and scalable video coding, as well as forward error correction (FEC). I should also mention, we support RED and forward error correction for audio as well.

That’s what you will find in the Edge ORTC API within Windows Insider Preview, as well as support for “half-trickle” ICE, DTLS 1.0, etc.
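An application would typically check which of these codecs a given build exposes before configuring a sender. The sketch below assumes a simplified, hypothetical capability shape (`CodecCapability` with only `name` and `clockRate`), loosely modeled on the kind of list `RTCRtpSender.getCapabilities("audio")` returns. The sample list is illustrative and is not a dump from Edge.

```typescript
// Simplified, hypothetical capability shape; real capability objects carry more fields.
interface CodecCapability {
  name: string;      // e.g. "opus", "G722", "PCMU"
  clockRate: number; // RTP clock rate in Hz
}

// Return the codecs from `available` that appear in `preferred`,
// ordered by the application's preference. Names compare case-insensitively.
function pickCodecs(available: CodecCapability[], preferred: string[]): CodecCapability[] {
  const picked: CodecCapability[] = [];
  for (const want of preferred) {
    const matches = available.filter(c => c.name.toLowerCase() === want.toLowerCase());
    if (matches.length > 0) picked.push(matches[0]);
  }
  return picked;
}

// Illustrative list based on the audio codecs named above (values are assumptions).
const sampleAudioCaps: CodecCapability[] = [
  { name: "PCMU", clockRate: 8000 },
  { name: "G722", clockRate: 8000 },
  { name: "opus", clockRate: 48000 },
  { name: "SILK", clockRate: 16000 },
];

// The application prefers Opus, then iSAC (not offered here), then PCMU.
const chosenAudio = pickCodecs(sampleAudioCaps, ["OPUS", "iSAC", "pcmu"]);
// chosenAudio now holds the "opus" entry followed by the "PCMU" entry.
```

Keeping the selection in a pure function makes it easy to run the same preference logic against capability lists from different browsers.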

webrtcHacks: I’ll include the slide from your presentation for everyone to reference because there’s a lot of stuff to go through. I do have a couple of follow-up questions. One was about robustness support on the video side. I think you mentioned external FEC and also talked about other aspects of robustness, such as retransmission?

Bernard: Currently in Edge ORTC Insider Preview, we do not support generic NACK or re-transmission. We do support external forward error correction (FEC), both for audio and video. Within Opus as well as SILK we do not support internal FEC, but you can configure RED with FEC externally. Also, we do not support internal Discontinuous Operation (DTX) within Opus or SILK, but you can configure Comfort Noise (CN) for use with audio codecs, including Opus and SILK.

Video interoperability

webrtcHacks: Then could you explain H.264UC? The majority of people out there aren’t familiar with the old Lync, or Skype for Business as it is now called.

Bernard: Basically, H.264 UC supports spatial simulcast along with temporal scalability in H.264/SVC, handled automatically “under the covers”. These are basically the same technologies that are in Hangouts with VP8. While the ORTC API offers detailed control of things like simulcast and SVC, in many cases, the developer just basically wants the stack to do the right thing, such as figuring out how many layers it can send. That’s what H.264UC does. It can adapt to network conditions by dropping or adding simulcast streams or temporal layers, based on the bandwidth it feels is available. Currently, the H.264UC codec is only supported by Edge.
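The kind of decision Bernard describes, dropping or adding simulcast streams and temporal layers as bandwidth changes, can be pictured with a small greedy allocator. This is only a sketch under stated assumptions: the `SimulcastStream` shape, the bitrates, and the greedy policy are all hypothetical and say nothing about how H.264UC actually decides.

```typescript
// Hypothetical description of one simulcast stream and its temporal layers.
interface SimulcastStream {
  label: string;               // e.g. "360p"
  temporalLayerKbps: number[]; // cumulative bitrate when sending layers 0..i (ascending)
}

interface LayerPlan {
  label: string;
  temporalLayers: number; // how many temporal layers to send for this stream
}

// Greedy allocation. `streams` must be sorted from lowest to highest bitrate.
// Each stream gets as many temporal layers as the remaining budget allows;
// once a stream's base layer does not fit, it and all larger streams are dropped.
function planLayers(streams: SimulcastStream[], budgetKbps: number): LayerPlan[] {
  const plan: LayerPlan[] = [];
  let remaining = budgetKbps;
  for (const s of streams) {
    let layers = 0;
    for (let i = 0; i < s.temporalLayerKbps.length; i++) {
      if (s.temporalLayerKbps[i] <= remaining) layers = i + 1;
    }
    if (layers === 0) break;
    remaining -= s.temporalLayerKbps[layers - 1];
    plan.push({ label: s.label, temporalLayers: layers });
  }
  return plan;
}

// Illustrative numbers only.
const exampleStreams: SimulcastStream[] = [
  { label: "180p", temporalLayerKbps: [100, 150] },
  { label: "360p", temporalLayerKbps: [300, 450] },
  { label: "720p", temporalLayerKbps: [900, 1300] },
];

const planAt700 = planLayers(exampleStreams, 700); // 180p and 360p, two temporal layers each
const planAt400 = planLayers(exampleStreams, 400); // only 180p, two temporal layers
```

A real stack would also smooth its bandwidth estimate and avoid flapping between plans; the point here is only that the developer does not have to make these choices by hand.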

webrtcHacks: Is the base layer H.264?

Bernard: Yes, the base layer is H.264 but RFC 6190 specifies additional NAL Unit types for SVC, so that an implementation that only understands the base layer would not be able to understand extension layers. Also, our implementation of RFC 6190 sends layers using distinct SSRCs, which is known as Multiple RTP stream Single Transport (MRST). In contrast, VP8 uses Single RTP stream Single Transport (SRST).

We are going to work on an implementation of H.264/AVC in order to interoperate. As specified in RFC 6184 and RFC 6190, H.264/AVC and H.264/SVC have different codec names.

webrtcHacks: For Skype, at least, in the architecture that was published, they showed a gateway. Would you expect other people to do similar gateways?

Bernard: Once we support H.264/AVC, developers should be able to configure that codec, and use it to communicate with other browsers supporting H.264/AVC. That would be the preferred way to interoperate peer-to-peer. There might be some conferencing scenarios where it might make sense to configure H.264UC and have the SFU or mixer strip off layers to speak to H.264/AVC-only browsers, but that would require a centralized conferencing server or media relay that could handle that.
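Because each layer travels on its own SSRC in the MRST arrangement described earlier, the "strip off layers" step at an SFU can be pictured as a filter on SSRC. The sketch below assumes a hypothetical `LayerMap` that the application has signalled out of band, and it ignores everything else a real relay would need to do (payload type and codec name handling, RTCP, sequence number rewriting).

```typescript
// Minimal, hypothetical view of an RTP packet: just the fields this sketch needs.
interface RtpPacketInfo {
  ssrc: number;
  sequenceNumber: number;
}

// Hypothetical signalled mapping from SSRC to layer index (0 = base layer).
type LayerMap = { [ssrc: number]: number };

// Keep only packets whose layer is at or below `maxLayer` for a given receiver.
// Packets with an unknown SSRC are dropped rather than guessed at.
function forwardUpToLayer(
  packets: RtpPacketInfo[],
  layers: LayerMap,
  maxLayer: number
): RtpPacketInfo[] {
  return packets.filter(p => {
    const layer = layers[p.ssrc];
    return layer !== undefined && layer <= maxLayer;
  });
}

// Illustrative SSRC values.
const exampleLayers: LayerMap = { 1111: 0, 2222: 1, 3333: 2 };
const incoming: RtpPacketInfo[] = [
  { ssrc: 1111, sequenceNumber: 10 },
  { ssrc: 2222, sequenceNumber: 40 },
  { ssrc: 3333, sequenceNumber: 70 },
  { ssrc: 1111, sequenceNumber: 11 },
  { ssrc: 9999, sequenceNumber: 5 },
];

// A receiver that can only handle the base layer gets the two SSRC 1111 packets.
const baseOnly = forwardUpToLayer(incoming, exampleLayers, 0);
```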

Roadmap

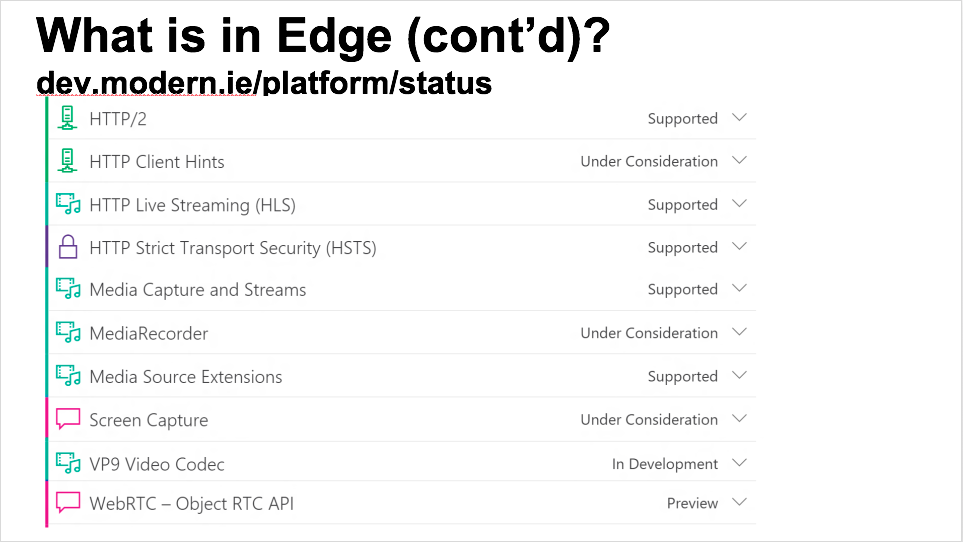

webrtcHacks: What can you say about the future roadmap? Is it basically what’s on the dev.modern.ie page?

Bernard: In general, people should look at the dev.modern.ie web page for status, because that has the most up-to-date information. In fact, I often learn about things from the page. As I mentioned, the Screen Sharing and Media Recorder specifications are now under consideration, along with features that are in preview or are under development. The website breaks down each feature. If the feature is in Preview, then you can get access to it via the Windows Insider Preview. If it is under development, this means that it is not yet in Preview. Features that are supported have already been released, so if you have Windows 10, you should already have access to them.

In terms of our roadmap, we made a roadmap announcement in October 2014 and are still executing on things such as H.264, which we have not delivered yet. Supporting interoperable H.264 is about more than just providing an encoder/decoder, which we have already delivered as part of H.264UC. The IETF RTCWEB Video specification provides guidance on what is needed to provide interoperable H.264/AVC, but that is not all that a developer needs to implement – there are aspects that are not yet specified, such as bandwidth estimation and congestion control.

Beyond the codec bitstream, RTP transport and congestion control there are other aspects as well. For example, I mentioned robustness features such as Forward Error Correction and Retransmission. A Flexible FEC draft is under development in IETF which will handle burst loss (distances greater than one). That is important for robust operation on wireless networks, for both audio and video. Today we have internal FEC within Opus, but that does not handle burst loss well.
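To see why protection "distance" matters for burst loss, consider a toy XOR parity scheme. One parity packet can repair one loss in its group, so a burst that takes out two neighbouring packets defeats parity computed over neighbours but not parity computed over packets two apart. This is a simplified sketch and an assumption-laden illustration: it is not the Flexible FEC wire format, it is not Edge's implementation, and it assumes equal-length packets and that the parity packets themselves arrive.

```typescript
type Packet = number[]; // payload bytes; all packets assumed to have equal length

// XOR all packets together bytewise to form one parity packet.
function xorParity(packets: Packet[]): Packet {
  const parity: Packet = packets[0].map(() => 0);
  for (const p of packets) {
    for (let i = 0; i < parity.length; i++) parity[i] ^= p[i];
  }
  return parity;
}

// Try to repair `received` (null marks a lost packet) using one parity packet
// per group of packet indices. A group can repair at most one loss.
function repair(
  received: (Packet | null)[],
  groups: number[][],
  parities: Packet[]
): (Packet | null)[] {
  const out = received.slice();
  groups.forEach((group, g) => {
    const missing = group.filter(i => out[i] === null);
    if (missing.length !== 1) return; // nothing lost, or too much lost
    const present = group.filter(i => out[i] !== null).map(i => out[i] as Packet);
    // XOR of the parity with every surviving packet yields the missing one.
    out[missing[0]] = xorParity(present.concat([parities[g]]));
  });
  return out;
}

const sent: Packet[] = [[1, 2], [3, 4], [5, 6], [7, 8]];
const adjacentGroups = [[0, 1], [2, 3]];    // parity over neighbouring packets
const interleavedGroups = [[0, 2], [1, 3]]; // parity over packets two apart
const parityFor = (groups: number[][]): Packet[] =>
  groups.map(g => xorParity(g.map(i => sent[i])));

// A burst wipes out packets 0 and 1.
const afterBurst: (Packet | null)[] = [null, null, sent[2], sent[3]];
const fixedAdjacent = repair(afterBurst, adjacentGroups, parityFor(adjacentGroups));
const fixedInterleaved = repair(afterBurst, interleavedGroups, parityFor(interleavedGroups));
// fixedAdjacent still has packets 0 and 1 missing; fixedInterleaved recovers both.
```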

webrtcHacks: Do you see Edge pushing the boundaries in this area?

Bernard: One of the areas where Edge ORTC has advanced the state of the art is in external forward error correction (FEC) as well as in statistics. Enabling external FEC to handle burst loss provides additional robustness for both audio and video. We also support additional statistics which provide information on burst loss and FEC operation. What we have found is that burst loss is a fact of life on wireless networks, so being able to measure this and to address it is important. The end result of this work is that Edge should be more robust than existing implementations with respect to burst loss (at least with larger RTTs where retransmission would not be available). We can also provide burst loss metrics, which other implementations cannot currently do. I should also mention that there are metrics that have been developed in the XRBLOCK WG to address issues of burst loss, concealment, error correction, etc.
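As a rough picture of what a burst loss metric captures, the sketch below summarizes a receive log into loss runs. It uses a deliberately simplified definition (a burst is any run of consecutive losses) and hypothetical field names. The XRBLOCK metrics and whatever Edge reports are defined more carefully than this.

```typescript
// Hypothetical summary shape; not the field names of any real stats API.
interface BurstStats {
  lostPackets: number;
  burstCount: number;      // runs of one or more consecutive losses
  maxBurstLength: number;
  meanBurstLength: number; // 0 when nothing was lost
}

// `received[i]` is true when packet i arrived, false when it was lost.
function burstStats(received: boolean[]): BurstStats {
  let lost = 0;
  let bursts = 0;
  let maxLen = 0;
  let run = 0; // length of the loss run currently in progress
  for (const ok of received) {
    if (ok) {
      run = 0;
      continue;
    }
    lost++;
    run++;
    if (run === 1) bursts++;
    if (run > maxLen) maxLen = run;
  }
  return {
    lostPackets: lost,
    burstCount: bursts,
    maxBurstLength: maxLen,
    meanBurstLength: bursts === 0 ? 0 : lost / bursts,
  };
}

// Eight packets: one burst of three losses and one isolated loss.
const exampleLog = [true, false, false, false, true, true, false, true];
const exampleStats = burstStats(exampleLog);
// exampleStats: 4 lost, 2 bursts, longest burst 3, mean burst length 2
```

Two links with the same 50% loss rate can look very different through this lens, which is why a plain loss percentage is not enough to decide how much FEC protection to apply.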

Why ORTC?

webrtcHacks: You have been a long time advocate for ORTC. Maybe you can summarize why ORTC was a good fit for Edge? Why did you start with that spec versus something else? What does it enable you to do now as a result?

Bernard: Some of the originally envisaged advantages of ORTC were indeed advantages, but in implementation we found there were also other advantages we didn’t think of at the time.

Interoperability

Bernard: ORTC doesn’t have SDP [like WebRTC 1.0]; the irony is ORTC allowed us to get to WebRTC 1.0 compatibility and interoperability faster than we would have otherwise. If you look at adapter.js, the adaptation code for Edge is fairly small. Why? Because we don’t generate SDP that adapter.js would have needed to parse and reformat. It also saves development work not to have to write that code within the browser, and instead to have it in JavaScript, where it can be modified in case people find bugs in it.
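To give a flavour of what "SDP in JavaScript" means in practice, here is a minimal sketch of converting between a codec description and the SDP `a=rtpmap` line that describes it. The `CodecInfo` shape and function names are hypothetical and this is not code from adapter.js; a real shim has to handle many more attributes (fmtp, ICE candidates, DTLS fingerprints, and so on).

```typescript
// Hypothetical codec description, similar in spirit to an ORTC codec entry.
interface CodecInfo {
  payloadType: number;
  name: string;
  clockRate: number;
  channels?: number; // omitted for single-channel codecs
}

// Format one codec as an SDP rtpmap attribute:
//   a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
function toRtpmap(codec: CodecInfo): string {
  const base = `a=rtpmap:${codec.payloadType} ${codec.name}/${codec.clockRate}`;
  return codec.channels && codec.channels > 1 ? `${base}/${codec.channels}` : base;
}

// Parse an rtpmap line back into a codec description; returns null if malformed.
function parseRtpmap(line: string): CodecInfo | null {
  const m = /^a=rtpmap:(\d+) ([^\/\s]+)\/(\d+)(?:\/(\d+))?$/.exec(line.trim());
  if (!m) return null;
  const codec: CodecInfo = {
    payloadType: Number(m[1]),
    name: m[2],
    clockRate: Number(m[3]),
  };
  if (m[4] !== undefined) codec.channels = Number(m[4]);
  return codec;
}

const opusLine = toRtpmap({ payloadType: 111, name: "opus", clockRate: 48000, channels: 2 });
// opusLine is "a=rtpmap:111 opus/48000/2"
const pcmuLine = toRtpmap({ payloadType: 0, name: "PCMU", clockRate: 8000 });
// pcmuLine is "a=rtpmap:0 PCMU/8000"
```

Because this translation lives in script rather than in the browser, a bug in it can be fixed by shipping a new JavaScript file.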

Connection State Details

The other thing we found about ORTC that we didn’t appreciate early on was it gives you detailed status of each of the ICE and DTLS transports. Particularly when you’re dealing with situations like multiple interfaces, you can get more information about failure conditions than is available in WebRTC 1.0 prior to addition of objects. One of the reasons people will find the objects interesting in 1.0 is to obtain that kind of diagnostic information. The current connection state in WebRTC 1.0 is not really enough – it’s not even clear what it means. With the 1.0 and ORTC object models, you will have information on the state of each ICE and DTLS transport. Details of the connection state are pretty important. That was a benefit we didn’t anticipate, one that is valuable and will be available in the WebRTC 1.0 object model as well.
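The diagnostic value of per-transport state is easiest to see with a small example. The sketch below takes a snapshot of ICE and DTLS states per transport and reports which one is in trouble, something a single aggregate connection state cannot do. The state names follow the enums in the current WebRTC 1.0 specification (the ORTC draft of the time may differ), while the `TransportSnapshot` shape, the labels, and the wording of the messages are assumptions made for illustration.

```typescript
type IceState = "new" | "checking" | "connected" | "completed" | "disconnected" | "failed" | "closed";
type DtlsState = "new" | "connecting" | "connected" | "closed" | "failed";

// Hypothetical application-level snapshot of one ICE/DTLS transport pair.
interface TransportSnapshot {
  label: string; // app-chosen name, e.g. "audio" or "video"
  ice: IceState;
  dtls: DtlsState;
}

// Report one human-readable problem per unhealthy transport.
// ICE problems are checked first because DTLS runs on top of ICE.
function describeProblems(transports: TransportSnapshot[]): string[] {
  const problems: string[] = [];
  for (const t of transports) {
    if (t.ice === "failed") {
      problems.push(`${t.label}: ICE failed`);
    } else if (t.ice === "disconnected") {
      problems.push(`${t.label}: ICE disconnected`);
    } else if (t.dtls === "failed") {
      problems.push(`${t.label}: DTLS failed`);
    }
  }
  return problems;
}

// Audio is healthy; video never got a working ICE path.
const exampleProblems = describeProblems([
  { label: "audio", ice: "connected", dtls: "connected" },
  { label: "video", ice: "failed", dtls: "new" },
]);
// exampleProblems is ["video: ICE failed"]
```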

Many simple scenarios

Bernard: Then there were the simple scenarios. People say, “I don’t need ORTC because I don’t do scalable video coding and simulcast”. In the slides, Peter [Thatcher] illustrates simple scenarios that are enabled by objects, such as hold and changing the sending codec. So there are basic scenarios that will be enabled in ORTC as well as the WebRTC 1.0 object model.

How is Edge’s Media Engine built

webrtcHacks: In building this and putting it into Edge, you had a few different media engines you could choose from. You had the Skype media engine and a Lync media engine – you could combine them or go and build a new one. Can you reveal the Edge media architecture and how you put that together?

Bernard: What we chose to do in Skype is move to a unified media engine, and we have added WebRTC capabilities to that engine, so things like RTCP MUX and BUNDLE are now there. It took a little bit longer to do it that way, but the benefit is a standards compliant browser as well as a media stack that allows us to use those technologies internally, without having different stacks that we would have to rationalize later.

right now, our focus is very much on video, and trying to get that more solid, and more interoperable

Also, the stack is both client and server capable – this isn’t just client code that couldn’t handle load. That is also true for components such as DTLS. Whether or not we use all the capabilities in Skype is another issue, but it is available.

More than Edge

webrtcHacks: Is there anything else that’s not on dev.modern.ie that is exposed that a developer would care about? Any NuGet packages with these API’s for example?

Bernard: dev.modern.ie does not cover non-browser components in the Windows platform. For example, Edge currently supports DTLS 1.0. DTLS 1.2 is important because of the additional ciphersuites (Elliptic Curves) that are implemented in other browsers such as Chrome and Mozilla. With RSA-2048, the CPU cycles required to generate certificates can be very significant, which would be a problem in particular on mobile devices. While this isn’t covered on dev.modern.ie, it is obviously very important.

There has also been thinking going on in the IETF on how ICE can perform better in mobile scenarios. Some of that work is still in progress, but there’s a new ICE working group. Robin Raymond of Hookflash has implemented a number of ICE performance improvements in ortc-lib, which is on the cutting edge. That’s something that will be of general interest for ortc-lib use on mobile.

I should mention, by the way, that the Edge Insider Preview is only for desktop. It is not available on Windows Phone 10.

webrtcHacks: Any plans for embedding the Edge ORTC engine as an IE plugin?

Bernard: An external plugin or something?

webrtcHacks: Yeah, or a Microsoft plugin for IE that would implement ORTC.

Bernard: All new features are being implemented in Edge – IE is frozen technology. For consumers, Windows 10 is a free upgrade within the first year. So hopefully, people will take advantage of that and get all the new capabilities, including Edge with ORTC.

Is there an @MSEdgeDev post on the relationship between this and InPrivate? pic.twitter.com/bbu0Mdz0Yd

— Eric Lawrence (@ericlaw) September 22, 2015

A setting discovered in Internet Explorer that appears to address the IP Address Leakage issue.

Validating ORTC

webrtcHacks: Is there anything you want to share?

Bernard: adapter.js is an important thing because it validates the theory that WebRTC 1.0 API support could be built within JavaScript on top of ORTC.

webrtcHacks: And that happened pretty quick – with Fippo‘s help. Really quick.

Bernard: Fippo has written the pull request to support Edge. He is finding bugs in Edge, as well as finding spec bugs. This helps to make sure that the compatibility we promised can be made real. So compatibility isn’t just a vague promise. It has to be demonstrated in software.

Of course the adapter.js code supporting Edge ORTC currently only can be tested with audio. Video adaptation is more complicated, particularly as more codecs are added. So Fippo’s work enabling WebRTC 1.0 audio applications to run on Edge will not be the last word. We will need to pay even more attention to interoperability for video, because it is a lot more complicated.

What does the Microsoft WebRTC team look like

webrtcHacks: Can you comment on how big the team is that’s working on ORTC in Edge? You have a lot of moving pieces in different aspects …

Bernard: There are many teams that have contributed code that is used by Edge. There are the Edge and Skype teams, as well as Windows components, such as S-Channel (which provides support for DTLS). The recently announced work on VP9 streaming video is handled in the Windows Media team. When you look at all the people whose work has touched Edge in some form, it is hundreds of people.

webrtcHacks: And you need to pull it together for purposes of WebRTC/ORTC, is that right?

Bernard: There are a lot of teams involved, and there will probably be more going forward. People ask “Why don’t you have the datachannel”? The data channel requires support for additional transport protocols which isn’t Skype’s area of expertise. So it is necessary to have a team with the expertise in that area to take ownership of that.

Feedback please

webrtcHacks: Any final comments?

Bernard: I would like to encourage people to sign up for Windows Insider Preview, run it, file bugs, and let us know what you think. You can vote on the dev.modern.ie website for new features, which is cool, and we do listen to the input. In addition to the ORTC API we support, there are many other APIs that can be useful in building WebRTC applications, and it is important to get a sense of developer priorities, because there are so many things that you could possibly focus on. Right now, the ORTC focus is on interoperable video, but the Edge team as well as other teams are working on many features at the same time that will impact Edge.

webrtcHacks: This is great and very insightful. I think it will be a big help to all the developers out there. Thanks!

{

“Q&A”:{

“interviewer”:“chad hart“,

“interviewee”:“Bernard Aboba“

}

}

Edited on Oct 13, 2015 – 2nd half edited for clarity & readability. Please see the audio recording above for the full, unedited version.

I would like to use ORTC JavaScript in our application, which makes audio calls to PSTN numbers from Microsoft Edge. Could you please suggest the required protocols and server setup? We would like to build the application the way Twilio does: https://developer.microsoft.com/en-us/microsoft-edge/testdrive/demos/twilioortc/

It’s a shame. None of the Microsoft examples work. It’s not Oct 2018!

If you want to use WebRTC, go with Chrome, Firefox, or Win32 development.