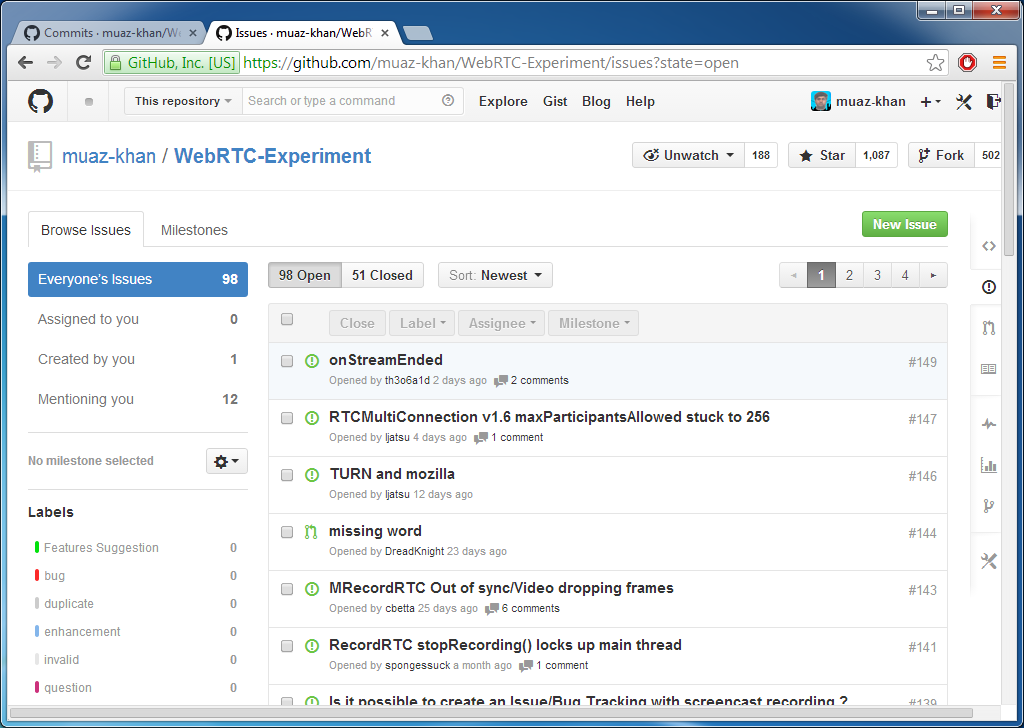

Want to try out a newly released WebRTC feature or capability? Odds are Muaz Khan has already done it. I cannot think of anyone who has contributed more open-source WebRTC application experiments to the community than Muaz has through webrtc-experiment.com. His GitHub repository boasts 44 different projects.

He did all that in less than 2 years, and he is just getting started. What is even more amazing is that Muaz has done all this with very limited resources from a remote village. In some months he doesn’t even have electricity for large portions of the day!

I was able to pull Muaz away from coding long enough to get him to tell us about his experiments and his thoughts on developing with WebRTC. I had been loosely following his projects, but during our conversation I was very surprised by how much he has done. I think you will be too.

Intro by Chad.

webrtcHacks: Can you give us an overview of webrtc-experiment.com and your WebRTC projects?

Muaz: webrtc-experiment.com is a collection of the WebRTC demos I have built over the last 19 months. I developed most of these experiments based on end users’ feedback and feature requests.

For example, I was asked how to stream a region of the screen, how to stream pre-recorded media (often used by the music industry), and how to record media streams locally. I did some experiments and added demos like RecordRTC, pre-recorded media streaming, and part-of-screen sharing. To record media in Chrome, RecordRTC relies on third-party libraries like whammy/weppy and recorderjs. On Firefox (Nightly), it uses the MediaRecorder API to record audio/video into a single WebM container.

webrtcHacks: Did you know anything about WebRTC before you started those experiments?

Muaz: I developed a few apps using Microsoft’s DirectShow technology, e.g. capturing all connected audio/video devices and recording them in an AVI container.

When Chrome announced getUserMedia support, I planned to bring the same recording app to the browser.

On the 14th of August 2012, I received my first freelancing invitation via Elance; this was before I had tried the WebRTC RTCPeerConnection API. For that project I built a one-to-one, ASP.NET-based WebRTC demo, which was my first WebRTC experiment. My major WebRTC work started on 26 December 2012, when I wrote my first multi-user tab broadcasting app.

webrtcHacks: And who are your ‘customers’? Are they open source people, companies, the general WebRTC community?

Muaz: My customers are mainly “small” VoIP companies and groups.

In the open-source/MIT context, RecordRTC and RTCMultiConnection are used by some online school networks, the music industry, and healthcare systems. Usually these are people migrating from Flash. I’m rarely able to track all such companies because they host my libraries and code on their own servers.

RecordRTC-to-PHP is used in many big projects, but I can’t list such names here.

Implementers of new social networks (those trying to bypass existing social networks or offer something better than them) are also using RTCMultiConnection.

I received a lot of complaints that a popular commercial WebRTC API provider fails on low-quality networks and systems. That’s why I added application-specific bandwidth and resolution customizations to RTCMultiConnection, as well as runtime feature filtering and automatic renegotiation.
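RTCMultiConnection’s own bandwidth API isn’t shown in this interview. As a minimal sketch of one technique that was commonly used for application-level bandwidth limits, an application can edit the SDP and write a `b=AS:` line (in kbps) into a media section before applying it. The helper name `setMediaBandwidth` is hypothetical, and how strictly a browser honours `b=AS` is implementation-dependent.

```typescript
// Media sections this sketch knows how to target.
type MediaKind = "audio" | "video";

// Hypothetical helper (not RTCMultiConnection's API): caps the bandwidth of one
// kind of media section by writing a "b=AS:<kbps>" line into an SDP string.
// b=AS is expressed in kilobits per second (RFC 4566).
function setMediaBandwidth(sdp: string, kind: MediaKind, kbps: number): string {
  const out: string[] = [];
  let inTarget = false;

  for (const line of sdp.split("\r\n")) {
    if (line.startsWith("m=")) {
      // A new media section starts; remember whether it is the one we want.
      inTarget = line.startsWith(`m=${kind} `);
      out.push(line);
      continue;
    }
    // Drop any existing b=AS line in the target section; ours replaces it.
    if (inTarget && line.startsWith("b=AS:")) continue;

    out.push(line);
    // RFC 4566 places "b=" lines right after the "c=" line of a media section,
    // so this sketch assumes every targeted section has a "c=" line.
    if (inTarget && line.startsWith("c=")) out.push(`b=AS:${Math.round(kbps)}`);
  }
  return out.join("\r\n");
}
```

In a browser the string would typically be the `sdp` field of the remote answer, patched before `setRemoteDescription()`, because an SDP bandwidth line describes what its author is willing to receive. Newer browsers also offer `RTCRtpSender.setParameters()` with `encodings[].maxBitrate`, which avoids string munging altogether.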

webrtcHacks: What other experiments do you have there?

Muaz: There are many other experiments and libraries available on my website like:

- RTCMultiConnection.js – a comprehensive wrapper library for RTCWeb APIs; fits in any environment!

- RecordRTC – A library for cross-browser audio/video/gif recording

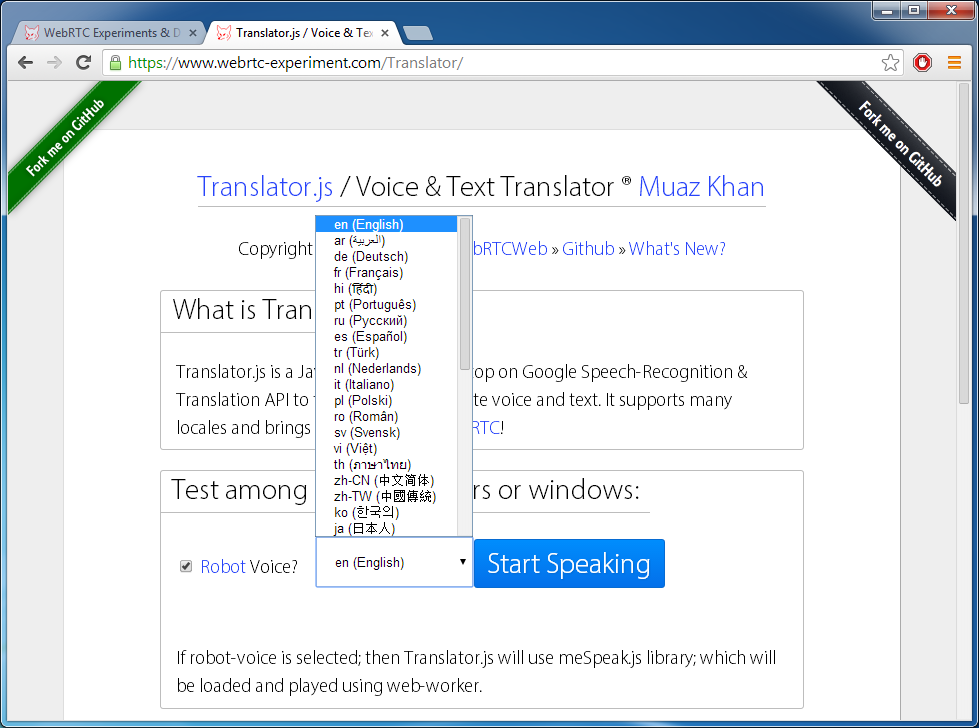

- Translator.js – A library to translate voice and text messages in about 41 languages

- Meeting.js – multi-party video conference using mesh-networking

- File.js – A reusable file-sharing library that can be used with WebSockets/Socket.io or WebRTC data channels to share automatically fragmented files concurrently.

- DataChannel.js – JavaScript library for data/file/text sharing; automatically falls back to a signaling gateway for non-WebRTC browsers

- RTCPeerConnection.js – A simple wrapper library for RTCPeerConnection and RTCDataChannel API

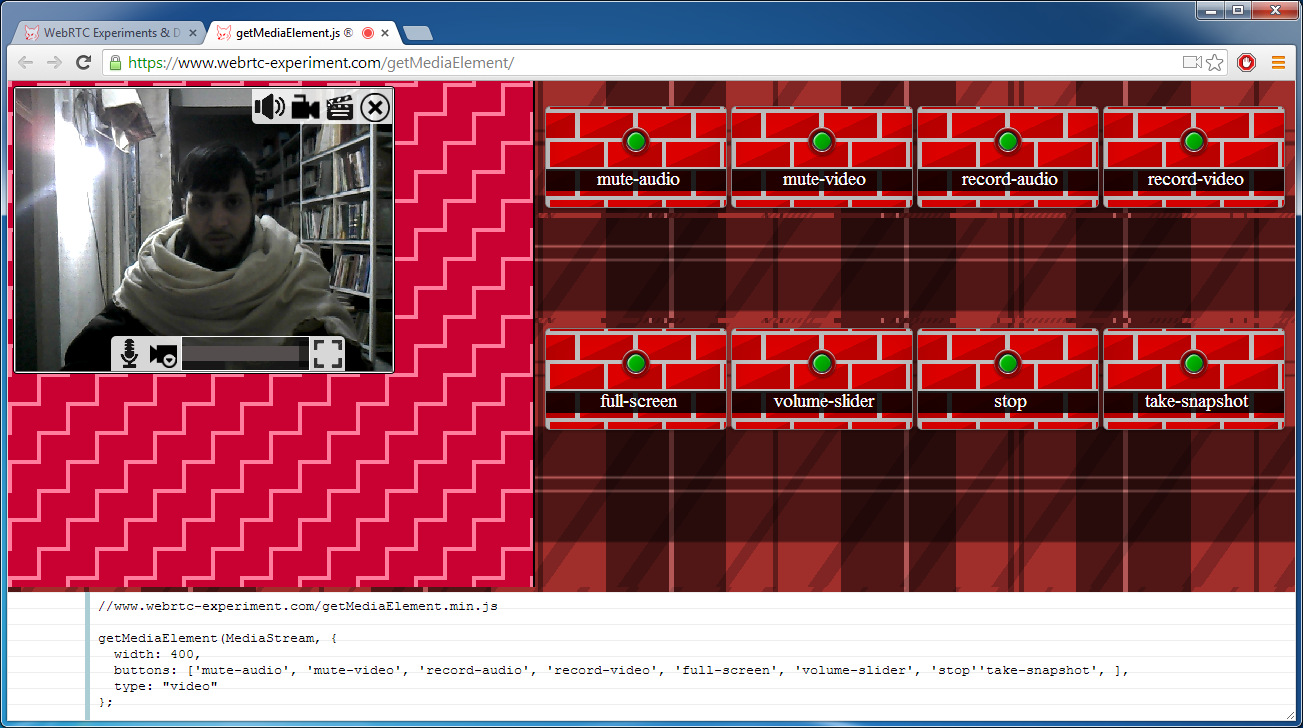

- getMediaElement.js – a library for the layout of audio & video elements

- tabCapture – capture a tab in Chrome using the tabCapture APIs

- desktopCapture – capture the screen of any active/running application using the desktopCapture APIs

- Pre-recorded media streaming – Uses the MediaSource API to play VP8/WebM chunks as soon as they are received.

- Part of screen sharing – Uses the html2canvas library to take and share snapshots of HTML elements

- Ffmpeg-asm.js – a collection of demos using an in-browser FFmpeg implementation to transcode and merge media files

- many others…
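File.js’s source isn’t reproduced here. As a hedged sketch of what “auto fragmented” file sharing involves, a payload can be split into fixed-size chunks tagged with a file id, an index, and a total count, so the receiver can rebuild it even if chunks arrive out of order. The `FileChunk` shape, the helper names, and the 16 KiB default chunk size are assumptions for illustration.

```typescript
// Hypothetical chunk format for sending a file over a message channel
// (a WebRTC data channel or a WebSocket).
interface FileChunk {
  fileId: string;
  index: number; // position of this chunk, starting at 0
  total: number; // number of chunks in the whole file
  data: Uint8Array;
}

// Splits a payload into chunks of at most `chunkSize` bytes.
// The 16 KiB default is an assumed "safe" message size, not a spec value.
function fragment(fileId: string, bytes: Uint8Array, chunkSize = 16 * 1024): FileChunk[] {
  if (chunkSize <= 0) throw new RangeError("chunkSize must be positive");
  const total = Math.max(1, Math.ceil(bytes.length / chunkSize));
  const chunks: FileChunk[] = [];
  for (let index = 0; index < total; index++) {
    const start = index * chunkSize;
    // subarray() shares the underlying buffer instead of copying it.
    chunks.push({ fileId, index, total, data: bytes.subarray(start, start + chunkSize) });
  }
  return chunks;
}

// Rebuilds the file once every chunk has arrived, in any order.
// Returns null while any chunk is still missing.
function reassemble(chunks: FileChunk[]): Uint8Array | null {
  if (chunks.length === 0) return null;
  const total = chunks[0].total;
  const slots: (Uint8Array | undefined)[] = new Array(total).fill(undefined);
  for (const c of chunks) slots[c.index] = c.data;

  let size = 0;
  for (const s of slots) {
    if (s === undefined) return null; // still waiting for this chunk
    size += s.length;
  }
  const out = new Uint8Array(size);
  let offset = 0;
  for (const s of slots as Uint8Array[]) {
    out.set(s, offset);
    offset += s.length;
  }
  return out;
}
```

Because each chunk carries its own index, the receiver does not depend on delivery order; detecting and re-requesting lost chunks is left out of this sketch.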

I have also added many simple tutorials that explain:

- how to write audio/video or data sharing applications,

- how to share files,

- how to install a TURN server on CentOS,

- how to write a multi-user video sharing application,

- bandwidth usage in different networking models,

- STUN vs. TURN,

- signaling concepts,

- the differences between networking models such as mesh, hybrid, and one-way,

- how to control echo cancellation, and more.

All these tutorials target beginners.
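As a rough back-of-envelope version of the mesh vs. one-way bandwidth comparison these tutorials cover, the sketch below assumes each sender encodes a single stream at a fixed bitrate and sends a separate copy to every receiver, with no media server. The function and type names and the 500 kbps example figure are hypothetical.

```typescript
// Two of the topologies the tutorials compare. "hybrid" is left out because
// its load depends on how relaying peers are chosen.
type Topology = "mesh" | "oneway";

interface LoadEstimate {
  connections: number;     // peer connections in the whole session
  maxUploadKbps: number;   // upload of the busiest participant
  maxDownloadKbps: number; // download of the busiest participant
}

// Back-of-envelope estimate: every sender encodes one stream at `streamKbps`
// and sends a separate copy to each receiver (no media server involved).
function estimateLoad(topology: Topology, participants: number, streamKbps: number): LoadEstimate {
  if (!Number.isInteger(participants) || participants < 2) {
    throw new RangeError("need at least two participants");
  }
  const others = participants - 1;
  switch (topology) {
    case "mesh":
      // Everyone sends to, and receives from, everyone else.
      return {
        connections: (participants * others) / 2,
        maxUploadKbps: others * streamKbps,
        maxDownloadKbps: others * streamKbps,
      };
    case "oneway":
      // A single broadcaster uploads one copy per viewer; viewers only download.
      return {
        connections: others,
        maxUploadKbps: others * streamKbps,
        maxDownloadKbps: streamKbps,
      };
    default:
      throw new Error(`unknown topology: ${String(topology)}`);
  }
}
```

For four participants at 500 kbps, `estimateLoad("mesh", 4, 500)` gives 6 connections and 1500 kbps of upload for every participant, while `estimateLoad("oneway", 4, 500)` gives 3 connections, with only the broadcaster uploading 1500 kbps.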

webrtcHacks: Is there anything you don’t have?

Muaz: I have demos for almost every possible WebRTC scenario except peerIdentity, the getStats API, and media-server-related demos.

I haven’t open-sourced or included the following demos in my GitHub repo:

• SIP-based signaling and demos

• XMPP/Bosh-based signaling

• Native demos (for desktop and mobile devices)

My experiments target browser-based applications that don’t interact with a signaling server. Signaling servers are used by all the other vendors and projects, e.g. PeerJS, SimpleWebRTC, EasyRTC, etc. My primary focus is experimenting with the pure RTCWeb API and related VoIP technology instead of spending time on signaling.

webrtcHacks: Which project is the most popular?

Muaz: RecordRTC and RTCMultiConnection are used the most, and I receive many emails with feature requests and sometimes bug reports.

In terms of visits, video conferencing and plugin-free screen sharing are the two most visited experiments on my website.

RTCMultiConnection’s admin/guest demo is also visited often and liked the most.

Meeting.js is another commonly used library, although I haven’t made any significant updates to it in the last 6 months.

The Ffmpeg-asm.js-based video conversion experiments are also useful, although this implementation is missing VP8, MP3, Ogg, and many other encoders.

webrtcHacks: Do you have any personal favorites?

Muaz: Last week I added Translator.js, which has been popular. I used existing services like Google’s speech recognition and translation APIs to recognize and translate text, and meSpeak.js to play the translated text in a robotic voice.

RecordRTC and RTCMultiConnection are also my favorites.

webrtcHacks: You clearly have ninja-like skills with WebRTC. Was there any project you really struggled with?

Muaz: Well, RecordRTC was always harder because I can’t easily test and fix things on XP Service Pack 2. It would be nice to have Mac, Linux/Ubuntu, and Android platforms to play with.

Whammy.js, a library that encodes WebP images into a WebM container, isn’t well written and needs many things to be fixed carefully. The first cluster works perfectly; the second one often fails on Mac/Linux/XP SP2. Manually adding frames and using slow encoders is currently the only way to get reliable clustering. I’m not using this slow encoding option, though.

webrtcHacks: Wait, so you are doing all of this on an old Windows computer?

Muaz: I use both Windows 7 (Toshiba notebook) and Windows 8 (HP notebook). I test on Windows XP SP2 (desktop) as well.

I have an Android S1 device on which I use Firefox Beta to test my experiments. Since I live in a remote village, it is really hard to travel and buy new devices.

Usually other people help me test on mobile devices, Macs, etc.

webrtcHacks: That is amazing. What else has challenged you?

Muaz: Recording remote audio and streaming it through a peer connection is still a feature that is changing a lot, though it is expected to be fixed by the end of the second quarter of this year.

I tried SdpSerializer.js a while ago to serialize SDP attributes; however, this part is always tough to implement and maintain because UA implementations change too often in both Firefox and Chrome. I’ve been following the ORTC work since the beginning.

webrtcHacks: Have you played with the DataChannel?

Muaz: File streaming is also a big challenge because the SCTP data connection unexpectedly fails in Chrome stable on some systems. Firefox also sometimes fails to transmit data if the sending interval is too short. Fragmentation and chunk retransmission are also hard to implement at the application level, so currently we need to rely on ordered transmission.
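One general way to avoid sending “too fast” is to pace sends on the channel’s `bufferedAmount` rather than on a fixed timer. `RTCDataChannel` exposes `bufferedAmount`, `bufferedAmountLowThreshold`, and a `bufferedamountlow` event in the current spec, though support in browsers of this era may have differed. The sketch below is an assumption, not how Muaz’s libraries work: it depends only on a minimal interface so the pacing logic can run outside a browser, and the name `pumpChunks` and the 1 MiB high-water mark are hypothetical.

```typescript
// Minimal slice of RTCDataChannel that the pacing logic needs.
interface BufferedSink {
  readonly bufferedAmount: number;
  send(data: string): void;
}

// Sends queued messages, in order, until the sink's buffer reaches the
// high-water mark. Returns the index of the first unsent message so the
// caller can resume later (e.g. from a "bufferedamountlow" handler).
function pumpChunks(
  sink: BufferedSink,
  queue: readonly string[],
  start: number,
  highWaterMark = 1024 * 1024, // assumed limit; tune per application
): number {
  let next = start;
  while (next < queue.length && sink.bufferedAmount < highWaterMark) {
    sink.send(queue[next]);
    next++;
  }
  return next;
}
```

In a browser one might set `channel.bufferedAmountLowThreshold` and call `pumpChunks` again from `channel.onbufferedamountlow` until it returns `queue.length`. Because messages go out strictly in queue order, this still relies on an ordered channel, as described above.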

I was surprised to see that many developers are still using Chrome 31, and this old version is unable to auto-upgrade. It fails even when users open the “About” page to check for updates. I asked many users to manually uninstall M31 and install the latest build. SCTP data channels don’t seem to work on Chrome M31 when using the new RTCDataChannel syntax (i.e. the unidirectional streaming syntax).

webrtcHacks: As a developer, what changes would you like to see in WebRTC? What do you want from the browser vendors and the W3C/IETF?

Muaz: I have a long list:

- I think Firefox should implement attaching multiple streams and renegotiation. Also, both Firefox and Chrome should allow fake and multiple media sources (i.e. “APM”), so we can invoke “captureStreamUntilEnded” to stream pre-recorded media, or generate media streams on the fly and share them with WebRTC peers. Chrome M32 and later support WebAudio streams as media sources.

- I want to see full-HD and super-HD/ultra-HD media constraints support both on Firefox and Chrome.

- Applications must be able to fully control bandwidth regardless of media resolution (and of retransmissions caused by RTP/RTCP packet loss), since both also affect CPU and bandwidth usage.

- There is no concrete solution for managing CPU usage, nor any best-practice information on it. For example, I found that disabling media stream tracks increases CPU usage but hold/unhold does not.

- Currently the maximum bandwidth limit for each media track is 1MB. This may sound good; however, I don’t think there should be a limit on “maximum” bandwidth usage.

- The minimum video bitrate should be 20 kbps instead of 50 kbps, so we can support modems and systems with lower Internet speeds and less RAM.

- As far as I know, we can simultaneously cast a maximum of four streams (maybe 32 is the extreme limit). Again, this is usually a good limit; however, I would prefer the “maximum” to be a value that is more than enough.

- Opus supports both stereo and mono audio, so there should be a way to detect whether incoming remote media is streamed in mono or stereo. People usually prefer mono audio to overcome echo issues.

- On the DataChannel: I’m not totally sure, but it seems that the maximum bandwidth usage is 30 kbps; maybe that is the default or minimum limit. I don’t know why Chrome doesn’t allow us to manage bandwidth usage for SCTP channels.

- The Firefox team should support application-level bandwidth control because it is really hard to rely on auto-detected available bandwidth.

- The Firefox team should fix and land their screen capturing modules; at least in Firefox Nightly.

- Chrome may be using DirectShow modules to capture the screen, as Windows does. One should be able to stream an HD screen from Linux as well. Local screen recording isn’t as high-definition as the remote screen; that should be fixed.
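On the mono/stereo point: for Opus, stereo is signalled in SDP through the `stereo` and `sprop-stereo` fmtp parameters defined in RFC 7587, where `sprop-stereo=1` means the sender is likely to send stereo. A minimal sketch that inspects a remote description follows. It only reports what the remote side signalled, not whether the decoded channels actually differ, and the function name is hypothetical.

```typescript
// Hypothetical helper: does the remote SDP say its Opus stream is likely stereo?
// 1. Collect Opus payload types from "a=rtpmap:<pt> opus/48000/2" lines.
// 2. Look for "sprop-stereo=1" in the matching "a=fmtp:<pt> ..." line.
// Per RFC 7587, an absent or 0 sprop-stereo means mono is expected.
function remoteOpusLikelyStereo(remoteSdp: string): boolean {
  const lines = remoteSdp.split(/\r?\n/);

  const opusPayloadTypes = new Set<string>();
  for (const line of lines) {
    const m = /^a=rtpmap:(\d+) opus\/48000/i.exec(line);
    if (m) opusPayloadTypes.add(m[1]);
  }

  for (const line of lines) {
    const m = /^a=fmtp:(\d+) (.*)$/.exec(line);
    if (!m || !opusPayloadTypes.has(m[1])) continue;
    // fmtp parameters are ";"-separated "name=value" pairs.
    const params = m[2].split(";").map((p) => p.trim().toLowerCase());
    if (params.includes("sprop-stereo=1")) return true;
  }
  return false;
}
```

In a browser this would be called with `pc.remoteDescription.sdp` after negotiation completes.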

webrtcHacks: You’ve done some projects in C# & ASP.NET – what are your thoughts on WebRTC from a .NET developer perspective? Is there a place for .NET developers in WebRTC?

Muaz: I have only tried WebSync and SignalR, just as signaling gateways, though I also tried XHR-based signaling a while ago. However, I think the .NET Framework has many signaling implementations built in, whether long polling, WebSockets, etc.

.NET developers have a more productive tool in WebMatrix, and iisnode brings socket.io streaming, which is easy to use and deploys to Azure hosting with a single click.

webrtcHacks: What new projects do you have in the works or would you like to do?

Muaz: Basically, I work as a freelance WebRTC developer. I have projects like multi-user stream broadcasting with dynamic selection of the broadcaster. I am also working on a server-to-server WebRTC connection to get around some SIP vendors that usually block certain SIP ports.

A direct data connection to a media server would be awesome.

I’m planning to try native plugins using node-webkit-hipster-seed, although, as far as I know, Chromium’s screen sharing API doesn’t work there.

I’m trying icecast2 via Node.js; however, I haven’t yet succeeded in streaming Opus to Node.js, transcoding it to Ogg, and relaying the packets over icecast2. A Node.js stack for WebRTC may solve this issue, but it is not supported on Windows yet. I’m also trying Licode to make it work with icecast2/SHOUTcast.

webrtcHacks: Where can our readers go to find out more about your projects and follow your work?

Muaz: Please follow me on GitHub or simply go to webrtc-experiment.com.
