Update: Philipp continues to reverse engineer Hangouts using chrome://webrtc-internals. Please see the bottom section for new analysis he just put together in the past couple of days based on Chrome 38.

As initiators and major drivers of WebRTC, Google was often given a hard time for not supporting WebRTC in its core collaboration product. This recently changed when WebRTC support for Hangouts was added with Chrome 36.

So obviously we wanted to check out how this worked. We also were curious to see how a non-Googler could make some practical use of chrome://webrtc-internals. Soon thereafter I came across a message from Philipp Hancke (aka Hornsby Cornflower) saying he had already started looking at the new WebRTC Hangouts with webrtc-internals. Fortunately I was able to convince him to share his findings and thorough analysis.

Philipp has been a real-time communications master for more than 10 years. He currently works for simpleWebRTC and talky.io creator &yet. In addition, he is a major contributor to the Jitsi Meet project. As a long-time member of the XMPP Standards Foundation (XSF), Philipp has contributed to a number of key XEPs (XMPP speak for an RFC). Incidentally, he is currently serving on the XSF council (which is XMPP speak for the circle of the initiated XMPP gurus that control the world).

You’ll see how the combo of his vast WebRTC knowledge and XMPP/Jingle background comes together in the analysis below.

{“intro-by”: “chad“}

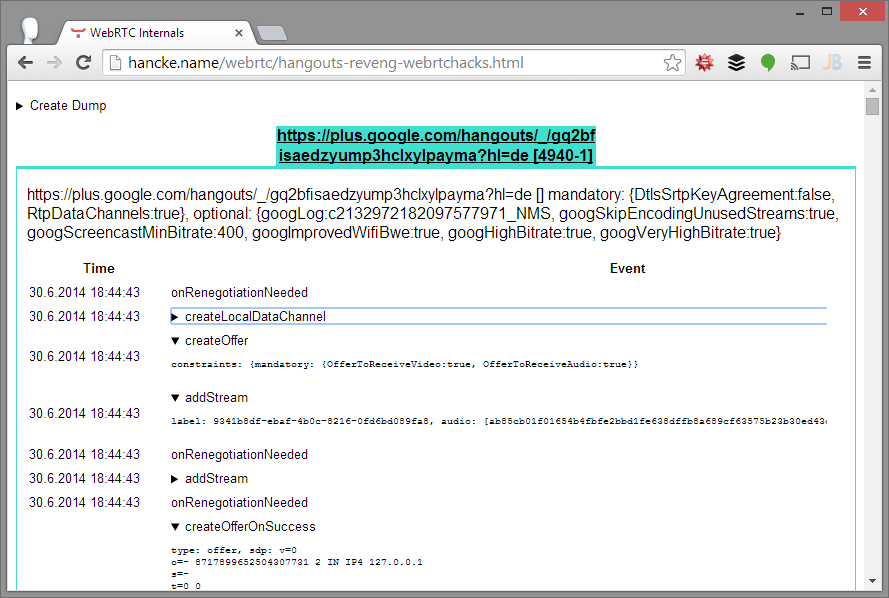

Google announced in late June that their Hangouts now supports WebRTC. At a technical level, this is a very good chance to analyze how Hangouts works using Chrome’s built-in WebRTC diagnostics tool – chrome://webrtc-internals.

The webrtc-internals page is an extremely useful tool for debugging WebRTC issues in Chrome. It shows all API calls of all PeerConnection objects along with additional statistics like bandwidth consumption in a very nice way. This allows us to observe what PeerConnection API calls are used by WebRTC without digging into the source code at all.

Findings

Non-standardized features

As my webrtc-internals analysis below reveals, plugin-free Hangouts relies on some of the non-standard features that Chrome has implemented since it first added WebRTC support. These features are:

- the use of security descriptions (SDES) for encryption

- RTP-based unreliable data channels

- per-candidate ICE parameters (and google-ice, an old variant of the ICE standard)

None of these are part of the official WebRTC spec. This also explains why Firefox does not work with the WebRTC version of Hangouts.

Plan B multiplexing

Another reason for the lack of Firefox support is that Hangouts sends multiple audio and video streams over a single PeerConnection. While Firefox does not support this at all, Chrome has used a variant called Plan B (which was not adopted by the IETF's RTCWEB working group) for a while. Basically, Plan B calls setRemoteDescription/setLocalDescription repeatedly over the lifetime of the stream, adding or removing source-specific media attribute lines (as described in RFC 5576) in the SDP. The setRemoteDescription calls trigger an onaddstream or onremovestream callback respectively.

Simulcast

This is not the first step Google has made to upgrade Hangouts to WebRTC. In August 2013, the video codec used changed from H.264 (presumably H.264 SVC, the scalable variant) to VP8.

As we will see later, the new WebRTC-hangouts make use of a technique called Simulcast which is similar to SVC. Possibly Google had already introduced this technique back then, making small, well defined steps in the upgrade process.

In simulcast, several video streams are sent, each with different resolutions and framerates. Conversely, SVC does this using a single video stream. When simulcast is used to send multiple streams over the same connection there is not much difference, even though SVC is slightly more efficient. One of the most important features of both SVC and simulcast is that a Selective Forwarding Unit (such as the Jitsi Videobridge or the VidyoRouter that is presumably still used by Hangouts) can forward low-bandwidth versions of the stream to certain clients without having to decode and re-encode the video in the process.
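To make the forwarding decision concrete, here is a minimal sketch of how a Selective Forwarding Unit could choose which simulcast layer to forward to a given receiver. It is not how Hangouts or any particular bridge does it; the `SimulcastLayer` type, the `pick_layer` helper, and the bitrates are all invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class SimulcastLayer:
    name: str          # human-readable label for the layer
    bitrate_kbps: int  # assumed average bitrate of this encoding


def pick_layer(layers: List[SimulcastLayer], available_kbps: int) -> Optional[SimulcastLayer]:
    """Pick the highest-bitrate layer that fits the receiver's bandwidth.

    Falls back to the smallest layer when nothing fits, so the receiver
    still gets some video. No decoding or re-encoding is involved: the
    SFU only decides which of the incoming streams to pass on.
    """
    if not layers:
        return None
    fitting = [layer for layer in layers if layer.bitrate_kbps <= available_kbps]
    if fitting:
        return max(fitting, key=lambda layer: layer.bitrate_kbps)
    return min(layers, key=lambda layer: layer.bitrate_kbps)


# Illustrative layers only; these bitrates are invented for the example.
LAYERS = [
    SimulcastLayer("small", 150),
    SimulcastLayer("360p", 500),
    SimulcastLayer("HD", 2000),
]

for bandwidth in (100, 600, 5000):
    chosen = pick_layer(LAYERS, bandwidth)
    print(bandwidth, "kbps ->", chosen.name)
```

A real bridge would also have to handle keyframe requests when switching layers, which this sketch ignores.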

NaCl

Another issue that was quoted back in August 2013 as a reason why Hangouts had not switched to WebRTC yet was the hats feature. Now all people in the screenshots are wearing hats, and the Chrome Native Client (NaCl) is required. This might mean that the hats feature (which requires facial recognition) needs more performance than is currently available at the JavaScript layer to work well.

The goal of this step seems to have been rolling out compatibility with Chrome without making additional changes on the server-side infrastructure. Removing the non-standard elements like SDES and RTP-based data channels might happen in the future, but will not impact users in any way. Firefox compatibility will then mostly depend on how fast Mozilla is able to add multiple streams per PeerConnection.

Screen Sharing

Even though the use of Chrome Extensions for screensharing has been advocated by the WebRTC team, Hangouts does not require an extension to use the chrome.desktopCapture.chooseDesktopMedia API.

Hangouts is mostly WebRTC?

As a summary, let us compare where what Chrome uses for Hangouts differs from the WebRTC standard (either the W3C or the various IETF drafts):

| Feature | WebRTC/RTCWeb Specifications | Chrome |
| --- | --- | --- |
| SDES | MUST NOT offer SDES | uses SDES |
| data channels | DTLS/SCTP-based | unreliable RTP-based |
| ICE | RFC 5245 | google-ice |
| Audio codec | Opus or G.711 | ISAC |
| Multiple streams | undecided yet | Plan B |
| Simulcast | undecided yet | proprietary SDP extension |

If you don’t believe me, or want to learn how to use webrtc-internals to extract this kind of information and more from your WebRTC session, then please review the detailed analysis section below.

Analysis

We are now going to take a quick look at a session we dumped. The full dump is available here, saved directly from webrtc-internals.

Beware, the following sections use quite a lot of SDP terminology, so reading the SDP anatomy blogpost is highly recommended.

RTCPeerConnection Constraints

The constraints used to create the RTCPeerConnection object:

    https://plus.google.com/hangouts/_/gq2bfisaedzyump3hclxylpayma?hl=de []
    mandatory: {DtlsSrtpKeyAgreement:false, RtpDataChannels:true}, optional: {googLog:c2132972182097577971_NMS, googSkipEncodingUnusedStreams:true, googScreencastMinBitrate:400, googImprovedWifiBwe:true, googHighBitrate:true, googVeryHighBitrate:true}

Mandatory constraints

This shows two mandatory constraints:

DtlsSrtpKeyAgreement: false

Setting this to false disables DTLS-SRTP and re-enables the older SDES encryption. As Victor previously covered in this post, at IETF 87 in Berlin last July, it was decided that WebRTC is not going to use SDES anymore. Chrome recently disabled support for this by default. Technically this means that any claims that Hangouts now uses WebRTC are incorrect.

In terms of security not much has changed, however, since Hangouts are not peer-to-peer. The bridge is acting as a man-in-the-middle, so DTLS-SRTP does not offer much advantage here. As mentioned earlier, this is one of the reasons that Hangouts does not work in Firefox, which does not support SDES at all.

RtpDataChannels: true

Before deciding to use SCTP over DTLS for WebRTC data channels, Chrome first implemented unreliable data channels over RTP. This is what the RtpDataChannels constraint enables. It seems the video router Google uses for this does not support DTLS at all yet. This does not work in Firefox either.

Optional constraints

There are a couple of optional constraints used too:

googLog:…

The name implies that it enables some sort of logging.

googSkipEncodingUnusedStreams: true

This is an undocumented flag, but seems quite interesting. Not encoding unused streams saves CPU obviously, but it’s not clear how “unused” is defined. Quite likely this relates to the use of simulcast which is described below.

googScreencastMinBitrate: 400

Another undocumented flag. It seems to set the minimum bitrate for screensharing.

googImprovedWifiBwe: true

This enables an improved bandwidth estimation algorithm which was mentioned by Justin and Serge in their WebRTC Update at the KrankyGeek event.

googHighBitrate:true, googVeryHighBitrate: true

Also mentioned in the update, this uses a higher initial bitrate. Without this, getting high-quality HD pictures would take quite long.
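If you want to check constraints like these across several dumps, a small parser helps. The following is a rough sketch, assuming the constraints appear exactly in the `mandatory: {...}, optional: {...}` form shown above; `parse_constraints` and `_coerce` are hypothetical helper names, not part of any WebRTC tooling.

```python
import re
from typing import Dict, Union

Value = Union[bool, int, str]


def _coerce(raw: str) -> Value:
    """Turn 'true'/'false' and digit strings into bool/int, keep the rest."""
    if raw in ("true", "false"):
        return raw == "true"
    if raw.isdigit():
        return int(raw)
    return raw


def parse_constraints(dump: str) -> Dict[str, Dict[str, Value]]:
    """Parse 'mandatory: {...}, optional: {...}' as printed in the dump."""
    result: Dict[str, Dict[str, Value]] = {}
    for section, body in re.findall(r"(mandatory|optional):\s*\{([^}]*)\}", dump):
        entries: Dict[str, Value] = {}
        for item in body.split(","):
            key, sep, raw = item.partition(":")
            if sep:
                entries[key.strip()] = _coerce(raw.strip())
        result[section] = entries
    return result


# Constraint string copied from the dump above.
DUMP = (
    "mandatory: {DtlsSrtpKeyAgreement:false, RtpDataChannels:true}, "
    "optional: {googLog:c2132972182097577971_NMS, "
    "googSkipEncodingUnusedStreams:true, googScreencastMinBitrate:400, "
    "googImprovedWifiBwe:true, googHighBitrate:true, googVeryHighBitrate:true}"
)

constraints = parse_constraints(DUMP)
mandatory = constraints["mandatory"]
print("SDES instead of DTLS-SRTP:", mandatory.get("DtlsSrtpKeyAgreement") is False)
print("RTP data channels:", mandatory.get("RtpDataChannels") is True)
```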

The RTCPeerConnection API calls

The dump shows a createLocalDataChannel call followed by a createOffer and two addStream calls, one for audio, one for video:

    onRenegotiationNeeded

    createLocalDataChannel
    label: sendDataChannel, reliable: false

    createOffer
    constraints: {mandatory: {OfferToReceiveVideo:true, OfferToReceiveAudio:true}}

    addStream
    label: 9341b8df-ebaf-4b0c-8216-0fd6bd089fa8, audio:[ab85cb01f01654b4fbfe2bbd1fe638dffb8a689cf63575b23b30ed43d174d683]

    onRenegotiationNeeded

    addStream
    label: 1199011b-f25f-497f-8e77-5a10484d71ce, video: [9a857d3bb07de50b2a7f58f4ad6edc2e44b7ca7e0fd7b41de0e2261ac9ef4385]

    onRenegotiationNeeded

    createOfferOnSuccess
    type: offer, sdp: v=0
    o=- 8717899652504307731 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=group:BUNDLE audio video data
    a=msid-semantic: WMS
    m=audio 1 RTP/SAVPF 111 103 104 0 8 106 105 13 126
    c=IN IP4 0.0.0.0
    a=rtcp:1 IN IP4 0.0.0.0
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU
    a=ice-options:google-ice
    a=mid:audio
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=recvonly
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:LQP8XKpMskWEeWCsByH2ZXUwEoRZ3wdA68Pkxerb
    a=rtpmap:111 opus/48000/2
    a=fmtp:111 minptime=10
    a=rtpmap:103 ISAC/16000
    a=rtpmap:104 ISAC/32000
    a=rtpmap:0 PCMU/8000
    a=rtpmap:8 PCMA/8000
    a=rtpmap:106 CN/32000
    a=rtpmap:105 CN/16000
    a=rtpmap:13 CN/8000
    a=rtpmap:126 telephone-event/8000
    a=maxptime:60
    m=video 1 RTP/SAVPF 100 116 117 96
    c=IN IP4 0.0.0.0
    a=rtcp:1 IN IP4 0.0.0.0
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU
    a=ice-options:google-ice
    a=mid:video
    a=extmap:2 urn:ietf:params:rtp-hdrext:toffset
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=recvonly
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:LQP8XKpMskWEeWCsByH2ZXUwEoRZ3wdA68Pkxerb
    a=rtpmap:100 VP8/90000
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 nack pli
    a=rtcp-fb:100 goog-remb
    a=rtpmap:116 red/90000
    a=rtpmap:117 ulpfec/90000
    a=rtpmap:96 rtx/90000
    a=fmtp:96 apt=100
    m=application 1 RTP/SAVPF 101
    c=IN IP4 0.0.0.0
    a=rtcp:1 IN IP4 0.0.0.0
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU
    a=ice-options:google-ice
    a=mid:data
    a=sendrecv
    b=AS:30
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:LQP8XKpMskWEeWCsByH2ZXUwEoRZ3wdA68Pkxerb
    a=rtpmap:101 google-data/90000
    a=ssrc:1092095154 cname:YuFlsTZUYKl5tFHm
    a=ssrc:1092095154 msid:sendDataChannel sendDataChannel
    a=ssrc:1092095154 mslabel:sendDataChannel
    a=ssrc:1092095154 label:sendDataChannel

The order above is likely a bug: calling createOffer before adding the streams generates a receive-only stream, which we can see in the createOfferOnSuccess callback. That callback shows an SDP that is the result of some of the constraints mentioned above:

a=recvonly

This is caused by calling createOffer before the addStream calls.

a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:…

Since DtlsSrtpKeyAgreement is set to false SDES is used. There is no fingerprint attribute here either.

m=application 1 RTP/SAVPF 101

As mentioned above, RtpDataChannels are used.
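The three observations above (direction, key agreement, and transport of the data section) can be pulled out of an SDP mechanically. Here is a small sketch that does so per media section; `split_sections` and `summarize` are hypothetical helpers, the input is an abbreviated copy of the offer above, and the sketch only looks for fingerprint attributes inside media sections, not at session level.

```python
from typing import Dict, List

DIRECTIONS = ("sendrecv", "sendonly", "recvonly", "inactive")


def split_sections(sdp_lines: List[str]) -> List[List[str]]:
    """Group SDP lines into media sections, dropping the session-level part."""
    sections: List[List[str]] = []
    for line in sdp_lines:
        if line.startswith("m="):
            sections.append([line])
        elif sections:
            sections[-1].append(line)
    return sections


def summarize(section: List[str]) -> Dict[str, str]:
    """Report media type, transport profile, direction and key agreement."""
    media, _port, proto = section[0][2:].split()[:3]
    attrs = [line[2:] for line in section if line.startswith("a=")]
    # sendrecv is the default when no direction attribute is present.
    direction = next((a for a in attrs if a in DIRECTIONS), "sendrecv")
    if any(a.startswith("crypto:") for a in attrs):
        keying = "SDES"
    elif any(a.startswith("fingerprint:") for a in attrs):
        keying = "DTLS-SRTP"
    else:
        keying = "unknown"
    return {"media": media, "proto": proto, "direction": direction, "keying": keying}


# Abbreviated lines from the createOfferOnSuccess SDP above.
OFFER = [
    "v=0",
    "m=audio 1 RTP/SAVPF 111 103 104 0 8 106 105 13 126",
    "a=mid:audio",
    "a=recvonly",
    "a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:LQP8XKpMskWEeWCsByH2ZXUwEoRZ3wdA68Pkxerb",
    "m=application 1 RTP/SAVPF 101",
    "a=mid:data",
    "a=sendrecv",
    "a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:LQP8XKpMskWEeWCsByH2ZXUwEoRZ3wdA68Pkxerb",
    "a=rtpmap:101 google-data/90000",
]

summaries = [summarize(section) for section in split_sections(OFFER)]
for summary in summaries:
    print(summary)
```

An m=application section with an RTP/SAVPF profile, as reported for the second section here, is the telltale sign of RTP-based data channels.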

setLocalDescription

Next, we see a setLocalDescription call:

    setLocalDescription
    type: offer, sdp: v=0
    o=- 0 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=ice-options:google-ice
    a=msid-semantic:WMS
    m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126
    a=sendrecv
    a=mid:audio
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:vZAXS5BVC+6ZBRHeiHTcPKmgFWBOsQ8EaP2X3Vj9
    a=rtpmap:103 ISAC/16000/1
    a=rtpmap:111 opus/48000/2
    a=rtpmap:0 PCMU/8000/1
    a=rtpmap:8 PCMA/8000/1
    a=rtpmap:106 CN/32000/1
    a=rtpmap:105 CN/16000/1
    a=rtpmap:13 CN/8000/1
    a=rtpmap:126 telephone-event/8000/1
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=ssrc:1251026671 cname:localCname
    a=ssrc:1251026671 msid:9341b8df-ebaf-4b0c-8216-0fd6bd089fa8 d4c9fcf5-07ce-490e-bf6c-a33eca5006f0
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU
    m=video 1 RTP/SAVPF 100
    a=sendrecv
    a=mid:video
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:GWerQs2ulGMdBrsK7Vy0D45YPCfZFRYKcXPP9IQ3
    a=rtpmap:100 VP8/90000
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 goog-remb
    a=extmap:2 urn:ietf:params:rtp-hdrext:toffset
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=ssrc-group:SIM 3110133607 4062881588 2610516569
    a=ssrc:3110133607 cname:localCname
    a=ssrc:3110133607 msid:1199011b-f25f-497f-8e77-5a10484d71ce fdc66525-2a0d-42c9-b564-7bfeaba3b11d
    a=ssrc:4062881588 cname:localCname
    a=ssrc:4062881588 msid:1199011b-f25f-497f-8e77-5a10484d71ce fdc66525-2a0d-42c9-b564-7bfeaba3b11d
    a=ssrc:2610516569 cname:localCname
    a=ssrc:2610516569 msid:1199011b-f25f-497f-8e77-5a10484d71ce fdc66525-2a0d-42c9-b564-7bfeaba3b11d
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU
    m=application 1 RTP/SAVPF 101
    a=sendrecv
    a=mid:data
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:10ueh73FJapY0LZoZJGGx5SmnaNxlC7BnODnPJP2
    a=rtpmap:101 google-data/90000
    a=ssrc:2810066131 cname:localCname
    a=ssrc:2810066131 msid:sendDataChannel sendDataChannel
    a=ice-ufrag:4t22CxThPocZSIBa
    a=ice-pwd:wKJ0LyPB8DAD+r8bNpSS1riU

Usually, one would not expect much difference between the SDP in the createOfferOnSuccess callback and the one used in setLocalDescription. Here, the changes are quite significant, so let’s take a closer look.

There is an a=ice-options:google-ice line at session level, moved there from the individual m-lines.

This means that google-ice, an old variant that predates RFC 5245, is used. This can be easily verified with tools like Wireshark. Compared to standard ICE, the two username fragments that form the STUN username are not separated by a colon.
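The username difference is easy to illustrate. The sketch below builds the STUN USERNAME for a connectivity check in both flavours. RFC 5245 puts the remote fragment first, followed by a colon and the local fragment; that google-ice uses the same order without the colon is my assumption here, and the fragment values are made up.

```python
def stun_username(remote_ufrag: str, local_ufrag: str, google_ice: bool) -> str:
    """Build the STUN USERNAME sent in a connectivity check.

    Standard ICE (RFC 5245) joins the fragments with a colon. For
    google-ice the fragments are simply concatenated (ordering assumed
    to match standard ICE).
    """
    separator = "" if google_ice else ":"
    return remote_ufrag + separator + local_ufrag


# Hypothetical fragments, not taken from the dump.
print(stun_username("remoteFrag", "localFrag", google_ice=False))
print(stun_username("remoteFrag", "localFrag", google_ice=True))
```

In a Wireshark capture, the absence of the colon in the USERNAME attribute of the STUN binding requests is what gives google-ice away.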

m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126

This changes the order of the audio codecs described in the a=rtpmap lines. Basically, this means that the ISAC codec (at 16 kHz) is used.
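To see the effect of the reordering, one can look up the first payload type on the m-line in the rtpmap attributes, since the first format listed is the preferred one in SDP offer/answer. A minimal sketch, with `preferred_codec` as a hypothetical helper and abbreviated audio sections from the two SDPs above:

```python
from typing import Dict, List


def preferred_codec(section: List[str]) -> str:
    """Return the rtpmap entry for the first payload type on the m-line."""
    payload_types = section[0].split()[3:]
    rtpmap: Dict[str, str] = {}
    for line in section[1:]:
        if line.startswith("a=rtpmap:"):
            payload_type, encoding = line[len("a=rtpmap:"):].split(" ", 1)
            rtpmap[payload_type] = encoding
    first = payload_types[0]
    # Static payload types (such as 0 for PCMU) may come without an rtpmap.
    return rtpmap.get(first, "static payload type " + first)


# Audio section as generated by createOffer (abbreviated).
CREATED = [
    "m=audio 1 RTP/SAVPF 111 103 104 0 8 106 105 13 126",
    "a=rtpmap:111 opus/48000/2",
    "a=rtpmap:103 ISAC/16000",
]

# Audio section as passed to setLocalDescription (abbreviated).
MUNGED = [
    "m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126",
    "a=rtpmap:103 ISAC/16000/1",
    "a=rtpmap:111 opus/48000/2",
]

print("createOffer prefers:", preferred_codec(CREATED))
print("setLocalDescription prefers:", preferred_codec(MUNGED))
```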

a=sendrecv

This makes the streams bidirectional, working around the wrong order of the createOffer / addStream calls.

a=ssrc:…

The number of a=ssrc lines is lower than what Chrome generates by default. It turns out, however, that the two additional lines Chrome generates are redundant and will be removed at some point.

a=ssrc-group:SIM

This defines a group of synchronisation sources (as described in RFC 5576) with a “SIM” semantics. While this semantics is not registered with the IANA, the name SIM implies simulcast, i.e. sending different resolutions or framerates on the same connection. We can see three different SSRCs in this group, probably HD, 360p and a small resolution stream. It recently became possible to achieve similar results with getUserMedia, but this way to enable simulcast might be easier to optimize for the video encoder.
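Extracting such groups from an SDP is straightforward. This sketch collects the SSRCs of every SIM group in a media section; `simulcast_groups` is a hypothetical helper, and which SSRC corresponds to which resolution cannot be read from the SDP itself.

```python
from typing import List


def simulcast_groups(section: List[str]) -> List[List[int]]:
    """Collect the SSRCs listed in each a=ssrc-group:SIM line."""
    groups: List[List[int]] = []
    for line in section:
        if line.startswith("a=ssrc-group:SIM "):
            groups.append([int(ssrc) for ssrc in line.split()[1:]])
    return groups


# Abbreviated video section from the setLocalDescription SDP above.
VIDEO = [
    "m=video 1 RTP/SAVPF 100",
    "a=rtpmap:100 VP8/90000",
    "a=ssrc-group:SIM 3110133607 4062881588 2610516569",
    "a=ssrc:3110133607 cname:localCname",
    "a=ssrc:4062881588 cname:localCname",
    "a=ssrc:2610516569 cname:localCname",
]

groups = simulcast_groups(VIDEO)
print(len(groups[0]), "simulcast encodings:", groups[0])
```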

setRemoteDescription

The next thing we see is a setRemoteDescription call:

    setRemoteDescription
    type: answer, sdp: v=0
    o=- 0 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=ice-options:google-ice
    a=msid-semantic:WMS
    m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126
    a=sendrecv
    a=mid:audio
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Tn3m25xXNDC6NZjZ7WNcJCJzfN+a8mylmfpBBibR
    a=rtpmap:103 ISAC/16000/1
    a=rtpmap:111 opus/48000/2
    a=rtpmap:0 PCMU/8000/1
    a=rtpmap:8 PCMA/8000/1
    a=rtpmap:106 CN/32000/1
    a=rtpmap:105 CN/16000/1
    a=rtpmap:13 CN/8000/1
    a=rtpmap:126 telephone-event/8000/1
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=x-google-flag:conference
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=video 1 RTP/SAVPF 100
    a=sendrecv
    a=mid:video
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:bTrwvSTNGNJoBWFBNFGDnhOawByReYr4j35i0FHp
    a=rtpmap:100 VP8/90000
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 goog-remb
    a=extmap:2 urn:ietf:params:rtp-hdrext:toffset
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=x-google-flag:conference
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=application 1 RTP/SAVPF 101
    a=sendrecv
    a=mid:data
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Ow7DkCVQnxaqVsj8T7a9kfQF42dg0R6XpWYnmkrc
    a=rtpmap:101 google-data/90000
    a=x-google-flag:conference
    a=ssrc:0 cname:localCname
    a=ssrc:0 msid:sendDataChannel sendDataChannel
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234

This shows the SDP coming from the video router. Again, a number of things are worth pointing out here:

a=ice-ufrag:1234567890123456

a=ice-pwd:123456789012345678901234

These are the router’s ICE ufrag and pwd attributes. At first glance this looks like a really bad hack. However, it turns out later that these parameters are never used. They are just here to make Chrome happy, which enforces the presence of these attributes in a setRemoteDescription call.

a=x-google-flag:conference

It is not documented anywhere what this actually does. Probably it’s a hint for the encoder that there might be multiple audio streams to enable better echo cancellation.

Another setRemoteDescription?

This is immediately followed by another setRemoteDescription call, without waiting for the setRemoteDescription to even succeed:

    setRemoteDescription
    type: offer, sdp: v=0
    o=- 0 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=ice-options:google-ice
    a=msid-semantic:WMS
    m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126
    a=sendrecv
    a=mid:audio
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Tn3m25xXNDC6NZjZ7WNcJCJzfN+a8mylmfpBBibR
    a=rtpmap:103 ISAC/16000/1
    a=rtpmap:111 opus/48000/2
    a=rtpmap:0 PCMU/8000/1
    a=rtpmap:8 PCMA/8000/1
    a=rtpmap:106 CN/32000/1
    a=rtpmap:105 CN/16000/1
    a=rtpmap:13 CN/8000/1
    a=rtpmap:126 telephone-event/8000/1
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=x-google-flag:conference
    a=ssrc:1251026671 cname:/c2132972182097577971_NMS%2F1
    a=ssrc:1251026671 msid:/c2132972182097577971_NMS%2F1 /c2132972182097577971_NMS%2F1
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=video 1 RTP/SAVPF 100
    a=sendrecv
    a=mid:video
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:bTrwvSTNGNJoBWFBNFGDnhOawByReYr4j35i0FHp
    a=rtpmap:100 VP8/90000
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 goog-remb
    a=extmap:2 urn:ietf:params:rtp-hdrext:toffset
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=x-google-flag:conference
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=application 1 RTP/SAVPF 101
    a=sendrecv
    a=mid:data
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Ow7DkCVQnxaqVsj8T7a9kfQF42dg0R6XpWYnmkrc
    a=rtpmap:101 google-data/90000
    a=x-google-flag:conference
    a=ssrc:0 cname:localCname
    a=ssrc:0 msid:sendDataChannel sendDataChannel
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234

The new setRemoteDescription call adds two a=ssrc lines in the audio section, which triggers an onAddStream callback. This might be a mixed audio stream for the conference. Another setLocalDescription call (no changes) brings the signaling state back to stable. As a rule of thumb, you always want your signaling state to be stable.
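The signaling state changes can be followed with a tiny state machine. The sketch below replays the four description calls seen so far against the basic offer/answer transitions of RTCPeerConnection. It leaves out provisional answers and rollback, applies the calls strictly one after another, and assumes the final setLocalDescription carries an answer, which the text above does not state.

```python
from typing import Dict, List, Tuple

# (current state, method, description type) -> next state
TRANSITIONS: Dict[Tuple[str, str, str], str] = {
    ("stable", "setLocalDescription", "offer"): "have-local-offer",
    ("have-local-offer", "setRemoteDescription", "answer"): "stable",
    ("stable", "setRemoteDescription", "offer"): "have-remote-offer",
    ("have-remote-offer", "setLocalDescription", "answer"): "stable",
}


def replay(calls: List[Tuple[str, str]], state: str = "stable") -> List[str]:
    """Return the signaling state after each call, raising on invalid ones."""
    states: List[str] = []
    for method, sdp_type in calls:
        key = (state, method, sdp_type)
        if key not in TRANSITIONS:
            raise ValueError("%s(%s) is not valid in state %s" % (method, sdp_type, state))
        state = TRANSITIONS[key]
        states.append(state)
    return states


CALLS = [
    ("setLocalDescription", "offer"),
    ("setRemoteDescription", "answer"),
    ("setRemoteDescription", "offer"),
    ("setLocalDescription", "answer"),  # description type assumed
]

for call, state in zip(CALLS, replay(CALLS)):
    print(call[0], call[1], "->", state)
```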

ICE candidates

Next, the video router sends a couple of ICE candidates. Let’s look at the first one:

    addIceCandidate
    mid: audio, candidate: a=candidate:0 1 udp 0 173.194.65.127 19305 typ host generation 0 username aEaa0tkkdyIRRqzZ password xRIKRBlyiT7syBDkVs44q3Tv

This line includes a username and password at the end, which is an extension to the normal ICE candidate syntax that is only supported by Chrome. It seems that Hangouts might still use Jingle, the signaling protocol Google originally came up with for XMPP and Google Talk. Jingle allows an individual ufrag and pwd for each transport-info message, which are used to do trickle ICE. More on that later.

Looking at the other candidates is interesting as well, in particular the third one:

    addIceCandidate
    mid: audio, candidate: a=candidate:0 1 ssltcp 0 173.194.65.127 443 typ host generation 0 username aEaa0tkkdyIRRqzZ password xRIKRBlyiT7syBDkVs44q3Tv

The transport field of this candidate is ssltcp and the port is 443. This means it is running ICE-TCP prepended by something that looks like a TLS handshake on port 443. Port 443 is typically used for HTTPS traffic which will be open even in environments that block UDP traffic.

Further, it will work well with HTTPS proxy servers that support the CONNECT method. This method is similar to running TURN over TLS, with slightly less overhead for the server.
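For anyone who wants to inspect such candidates programmatically, here is a rough parsing sketch. It reads the six fixed fields and then treats everything after them as name/value pairs, which picks up the Chrome-specific username and password extension alongside typ and generation. `parse_candidate` is a hypothetical helper and does no validation beyond the prefix check.

```python
from typing import Dict, Union


def parse_candidate(line: str) -> Dict[str, Union[str, int]]:
    """Split a candidate line into its fixed fields and name/value extras."""
    if line.startswith("a="):
        line = line[2:]
    if not line.startswith("candidate:"):
        raise ValueError("not a candidate line: " + line)
    fields = line[len("candidate:"):].split()
    candidate: Dict[str, Union[str, int]] = {
        "foundation": fields[0],
        "component": int(fields[1]),
        "transport": fields[2],
        "priority": int(fields[3]),
        "address": fields[4],
        "port": int(fields[5]),
    }
    # Remaining tokens come in pairs: typ host, generation 0, username ..., password ...
    extras = fields[6:]
    for name, value in zip(extras[0::2], extras[1::2]):
        candidate[name] = value
    return candidate


# The two candidates shown above.
UDP = ("a=candidate:0 1 udp 0 173.194.65.127 19305 typ host generation 0 "
       "username aEaa0tkkdyIRRqzZ password xRIKRBlyiT7syBDkVs44q3Tv")
SSLTCP = ("a=candidate:0 1 ssltcp 0 173.194.65.127 443 typ host generation 0 "
          "username aEaa0tkkdyIRRqzZ password xRIKRBlyiT7syBDkVs44q3Tv")

for raw in (UDP, SSLTCP):
    parsed = parse_candidate(raw)
    print(parsed["transport"], parsed["address"], parsed["port"], parsed["username"])
```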

Plan B multi-streams

The next setRemoteDescription call adds a video stream:

    setRemoteDescription
    type: offer, sdp: v=0
    o=- 0 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=ice-options:google-ice
    a=msid-semantic:WMS
    m=audio 1 RTP/SAVPF 103 111 0 8 106 105 13 126
    a=sendrecv
    a=mid:audio
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Tn3m25xXNDC6NZjZ7WNcJCJzfN+a8mylmfpBBibR
    a=rtpmap:103 ISAC/16000/1
    a=rtpmap:111 opus/48000/2
    a=rtpmap:0 PCMU/8000/1
    a=rtpmap:8 PCMA/8000/1
    a=rtpmap:106 CN/32000/1
    a=rtpmap:105 CN/16000/1
    a=rtpmap:13 CN/8000/1
    a=rtpmap:126 telephone-event/8000/1
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=x-google-flag:conference
    a=ssrc:1251026671 cname:/c2132972182097577971_NMS%2F1
    a=ssrc:1251026671 msid:/c2132972182097577971_NMS%2F1 /c2132972182097577971_NMS%2F1
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=video 1 RTP/SAVPF 100
    a=sendrecv
    a=mid:video
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:bTrwvSTNGNJoBWFBNFGDnhOawByReYr4j35i0FHp
    a=rtpmap:100 VP8/90000
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 goog-remb
    a=extmap:2 urn:ietf:params:rtp-hdrext:toffset
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=x-google-flag:conference
    a=ssrc:1690549932 cname:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932
    a=ssrc:1690549932 msid:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932 hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=application 1 RTP/SAVPF 101
    a=sendrecv
    a=mid:data
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Ow7DkCVQnxaqVsj8T7a9kfQF42dg0R6XpWYnmkrc
    a=rtpmap:101 google-data/90000
    a=x-google-flag:conference
    a=ssrc:2810066131 cname:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131
    a=ssrc:2810066131 msid:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131 hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131
    a=ssrc:0 cname:localCname
    a=ssrc:0 msid:sendDataChannel sendDataChannel
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234

    onRemoteDataChannel
    label: hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131, reliable: false
    30.6.2014 18:44:43

    onAddStream
    label: hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932, video: [hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932]

Adding multiple audio/video streams this way is called “Plan B” and described here. It was actually decided at the IETF in Berlin last year that this is not the SDP way to do this, but we also use it a lot in Jitsi Meet and it works pretty well.

At that point, the ICE connection is established and we see an unreliable data channel added with an onRemoteDataChannel callback. Note how the label of the channel correlates with the a=ssrc msid line in the SDP.

    setRemoteDescription
    type: offer, sdp: v=0
    o=- 0 2 IN IP4 127.0.0.1
    s=-
    t=0 0
    a=ice-options:google-ice
    a=msid-semantic:WMS
    m=audio 1 RTP/SAVPF 103 0 111 105 13 106
    a=sendrecv
    a=mid:audio
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Tn3m25xXNDC6NZjZ7WNcJCJzfN+a8mylmfpBBibR
    a=rtpmap:103 ISAC/16000/1
    a=rtpmap:0 PCMU/8000/1
    a=rtpmap:111 OPUS/48000/2
    a=rtpmap:105 CN/16000/1
    a=rtpmap:13 CN/8000/1
    a=rtpmap:106 CN/32000/1
    a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level
    a=x-google-flag:conference
    a=ssrc:1251026671 cname:/c2132972182097577971_NMS%2F1
    a=ssrc:1251026671 msid:/c2132972182097577971_NMS%2F1 /c2132972182097577971_NMS%2F1
    a=ssrc:1409438262 cname:hangout0F9108B9_ephemeral.id.google.com%5Efaf46371f0/1409438262
    a=ssrc:1409438262 msid:hangout0F9108B9_ephemeral.id.google.com%5Efaf46371f0/1409438262 hangout0F9108B9_ephemeral.id.google.com%5Efaf46371f0/1409438262
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=video 1 RTP/SAVPF 100
    a=sendrecv
    a=mid:video
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:bTrwvSTNGNJoBWFBNFGDnhOawByReYr4j35i0FHp
    a=rtpmap:100 VP8/90000
    a=fmtp:100 width=640
    a=fmtp:100 height=360
    a=fmtp:100 framerate=30
    a=rtcp-fb:100 ccm fir
    a=rtcp-fb:100 nack
    a=rtcp-fb:100 goog-remb
    a=extmap:3 http://www.webrtc.org/experiments/rtp-hdrext/abs-send-time
    a=x-google-flag:conference
    a=ssrc:1690549932 cname:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932
    a=ssrc:1690549932 msid:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932 hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234
    m=application 1 RTP/SAVPF 101
    a=sendrecv
    a=mid:data
    a=rtcp-mux
    a=crypto:1 AES_CM_128_HMAC_SHA1_80 inline:Ow7DkCVQnxaqVsj8T7a9kfQF42dg0R6XpWYnmkrc
    a=rtpmap:101 google-data/90000
    a=x-google-flag:conference
    a=ssrc:2810066131 cname:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131
    a=ssrc:2810066131 msid:hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131 hangout57996CC2_ephemeral.id.google.com%5E28085a9400/2810066131
    a=ssrc:0 cname:localCname
    a=ssrc:0 msid:sendDataChannel sendDataChannel
    a=ice-ufrag:1234567890123456
    a=ice-pwd:123456789012345678901234

When another person joins, we see another setRemoteDescription call. This one adds the following lines in the video section:

a=fmtp:100 width=640

a=fmtp:100 height=360

a=fmtp:100 framerate=30

While Chrome does not parse this (and does not need to), it is very interesting because this is equivalent to a description-info action in Jingle as described here. As this is the only thing that changes here, I take it as another hint that Hangouts still uses Jingle.
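Even though Chrome ignores these lines, a tool analysing the dump can read them. A short sketch, with `fmtp_params` as a hypothetical helper that merges all a=fmtp lines for one payload type into a dictionary:

```python
from typing import Dict, List


def fmtp_params(section: List[str], payload_type: int) -> Dict[str, str]:
    """Merge the key=value pairs of all a=fmtp lines for one payload type."""
    params: Dict[str, str] = {}
    prefix = "a=fmtp:%d " % payload_type
    for line in section:
        if line.startswith(prefix):
            # Parameters may share one line (separated by ';') or be split
            # across several lines, as in the SDP above.
            for pair in line[len(prefix):].split(";"):
                key, sep, value = pair.strip().partition("=")
                if sep:
                    params[key] = value
    return params


# The video lines added by the setRemoteDescription call above.
VIDEO = [
    "m=video 1 RTP/SAVPF 100",
    "a=rtpmap:100 VP8/90000",
    "a=fmtp:100 width=640",
    "a=fmtp:100 height=360",
    "a=fmtp:100 framerate=30",
]

print(fmtp_params(VIDEO, 100))
```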

Next we see another two setRemoteDescription calls, adding an audio and a video stream respectively, and a third one which adds an RTP data channel.

While not shown in the dump, the same thing happens when a participant leaves. The a=ssrc lines are removed and onRemoveStream is called.
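The Plan B bookkeeping boils down to comparing the a=ssrc msid lines of two successive remote descriptions. Here is a minimal sketch of that comparison, using labels from the dumps above; `remote_streams` and `diff_streams` are hypothetical helpers, and the real logic inside Chrome is certainly more involved.

```python
from typing import Dict, List, Set, Tuple


def remote_streams(sdp_lines: List[str]) -> Dict[str, Set[int]]:
    """Map each msid stream label to the SSRCs that announce it."""
    streams: Dict[str, Set[int]] = {}
    for line in sdp_lines:
        if line.startswith("a=ssrc:") and " msid:" in line:
            ssrc, rest = line[len("a=ssrc:"):].split(" ", 1)
            label = rest[len("msid:"):].split()[0]
            streams.setdefault(label, set()).add(int(ssrc))
    return streams


def diff_streams(old: List[str], new: List[str]) -> Tuple[List[str], List[str]]:
    """Return (added, removed) stream labels between two descriptions."""
    before, after = remote_streams(old), remote_streams(new)
    added = sorted(after.keys() - before.keys())
    removed = sorted(before.keys() - after.keys())
    return added, removed


MIXED = "/c2132972182097577971_NMS%2F1"
VIDEO = "hangout57996CC2_ephemeral.id.google.com%5E28085a9400/1690549932"

# Only the a=ssrc msid lines matter for this comparison.
FIRST = ["a=ssrc:1251026671 msid:%s %s" % (MIXED, MIXED)]
SECOND = FIRST + ["a=ssrc:1690549932 msid:%s %s" % (VIDEO, VIDEO)]

print("participant video appears:", diff_streams(FIRST, SECOND))
print("participant video disappears:", diff_streams(SECOND, FIRST))
```

Labels in the first list correspond to onAddStream callbacks, labels in the second to onRemoveStream.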

New Chrome 38 Analysis

In Chrome 38 (currently canary channel), the chrome://webrtc-internals page also shows any getUserMedia calls and the constraints used. Again, what has been tweaked is quite interesting:

    Audio Constraints
    optional: {googEchoCancellation:true, googAutoGainControl:true, googNoiseSuppression:true, googHighpassFilter:true, googAudioMirroring:false, googNoiseSuppression2:true, googEchoCancellation2:true, googAutoGainControl2:true, googDucking:false, sourceId:ab85cb01f01654b4fbfe2bbd1fe638dffb8a689cf63575b23b30ed43d174d683, chromeRenderToAssociatedSink:true}
    Video Constraints
    optional: {minFrameRate:30, minHeight:720, minWidth:1280, maxFrameRate:30, maxWidth:1280, maxHeight:720, googLeakyBucket:true, googNoiseReduction:true, sourceId:9a857d3bb07de50b2a7f58f4ad6edc2e44b7ca7e0fd7b41de0e2261ac9ef4385}

Common constraints

sourceId

The sourceId constraint selects a specific device, identified by this id which can be stored in a cookie or localStorage setting.

The API for enumerating available devices is currently somewhat unstable but already allows changing the microphone and camera to be used in Chrome. It was originally MediaStreamTrack.getSources, but was renamed to Navigator.getMediaDevices and is soon going to be available as MediaDevices.enumerateDevices.

Audio constraints

There are quite a number of audio constraints here. The first set consists of:

    googEchoCancellation: true
    googEchoCancellation2: true
    googAutoGainControl: true
    googAutoGainControl2: true
    googNoiseSuppression: true
    googNoiseSuppression2: true
    googHighpassFilter: true

This probably gives you the best echo cancellation available.

googAudioMirroring

This seems to allow swapping the left and right channels of the local audio (which is usually disabled), similar to how the local video is displayed mirrored by means of CSS.

googDucking: false

Ducking is described in this Chromium issue as using the default communications device on Windows. This is particularly important for users with headsets. It seems to be broken currently and is therefore deactivated.

chromeRenderToAssociatedSink: true

Quite likely, this sends audio output to the same device that is used for capturing audio. Again, this seems useful when using a headset.

Video constraints

minFrameRate: 30, minHeight: 720, minWidth: 1280, maxFrameRate: 30, maxWidth: 1280, maxHeight: 720

Acquires a 720p HD video stream at 30 frames per second.

googLeakyBucket: true

As explained in this bug report, this changes the way the video encoding adapts to the available bandwidth, especially when sending large keyframes, as happens when sharing a full-HD screen.

googNoiseReduction: true

Likely removes the noise in the captured video stream at the expense of computational effort.
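Because the minimum and maximum values in the video constraints are identical, only a single capture format matches them. The sketch below makes that explicit by checking a few formats against the min/max pairs. Keep in mind that the dump lists these as optional constraints, so Chrome treats them as preferences rather than hard requirements; `CaptureFormat` and `satisfies` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class CaptureFormat:
    width: int
    height: int
    frame_rate: int


# The min/max video constraints from the dump above.
CONSTRAINTS: Dict[str, int] = {
    "minFrameRate": 30, "minHeight": 720, "minWidth": 1280,
    "maxFrameRate": 30, "maxWidth": 1280, "maxHeight": 720,
}


def satisfies(fmt: CaptureFormat, constraints: Dict[str, int]) -> bool:
    """Check a capture format against every min/max pair that is present."""
    checks = (("Width", fmt.width), ("Height", fmt.height), ("FrameRate", fmt.frame_rate))
    for name, value in checks:
        low = constraints.get("min" + name, value)
        high = constraints.get("max" + name, value)
        if not low <= value <= high:
            return False
    return True


for fmt in (CaptureFormat(640, 480, 30), CaptureFormat(1280, 720, 30), CaptureFormat(1920, 1080, 30)):
    print(fmt, "matches" if satisfies(fmt, CONSTRAINTS) else "does not match")
```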

{“author”: “Philipp Hancke“}

Very interesting read, thanks for sharing!

I find it a little annoying that they allow Hangouts to go around the mandatory extension for chooseDesktopMedia, but considering the number of undocumented features they exploit that’s hardly surprising.

Lorenzo: I did not find jubertis https://twitter.com/HCornflower/status/486828629977235456 convincing either :-/

+1

Really nice article. Thx!!

Very nice article !! thanks

Hi,

great article, but i still have a few questions 🙂

– you say that chrome use additional (non-webrtc) modules to talk to hangouts. Are these modules included in the open-source version of chrome: chromium ?

– if Yes, do they need Google API keys

– are audio-only hangouts based on the same architecture that video hangouts ?

Thanks a lot

Eric

Can these undocumented getuserMedia constraints, Plan B, and chooseDesktopMedia be used outside of hangouts when communicating with a custom MCU?

Thanks for this very detailed article! Appreciate the effort that went into this.

I am a big fan of Adobe Flash Player and their streaming services. Since the Flash plugin is available in almost every browser, it is easier to build RTC apps. When do you think WebRTC will be available in every browser? Enlighten us.

Depending on how you count and who you care about, WebRTC already covers most of the market with Chrome and Firefox. Internet Explorer has been the big question mark, but Microsoft has made it clear (https://webrtchacks.com/ortc-edge-microsoft-qa/) that they are pushing their users to Edge (which has WebRTC) as soon as possible. That leaves Apple’s Safari: there are indicators that it is a work in progress, but of course Apple will never officially comment on anything they haven’t launched yet.

Flash is on a path to deprecation. The better question might be when do you have to use WebRTC because Flash no longer works reliably (some may say that is already true).

I have set up conference calls with Jitsi Meet and created server instances with load balancing as well as Hazelcast on an Openfire server. The problem is that whenever I try to switch servers, the video freezes while the audio stays available. For example, I connect three desktops A, B and C and everything works fine. Once I stop the current server, the load balancer switches to another server and A and B work properly, but C still shows the video frames from the previous server session, so the video looks frozen while the audio continues. In some cases the video comes back after 5 to 10 minutes.

This sounds like a jitsi related question. I recommend asking this at community.jitsi.org.

Do they use any WebRTC gateway?