It has been more than a year since Apple first added WebRTC support to Safari. My original post reviewing the implementation continues to be popular here, but it does not reflect some of the updates since the first limited release. More importantly, given its differences and limitations, many questions still remain on how best to develop WebRTC applications for Safari.

I ran into Chad Phillips at ClueCon (again) this year, and we ended up talking about his arduous experience making WebRTC work on Safari. He had a great, up-to-date list of tips and tricks, so I asked him to share it here.

Chad is a long-time open source guy and a contributor to the FreeSWITCH product. He has been involved with WebRTC development since 2015. He recently launched MoxieMeet, a videoconferencing platform for online experiential events, where he is CTO and where he developed many of the insights for this post.

{“editor”: “chad hart”}

In June of 2017, Apple became the last major vendor to release support for WebRTC, paving the (still bumpy) road for platform interoperability.

And yet, more than a year later, I continue to be surprised by the lack of guidance available for developers integrating their WebRTC apps with Safari/iOS. Outside of a couple of posts by the Webkit team, some scattered StackOverflow questions, the knowledge to be gleaned from scouring the Webkit bug reports for WebRTC, and a few posts on this very website, I really haven’t seen much support available. This post is an attempt to begin closing that gap.

I have spent many months integrating WebRTC in Safari for a very complex videoconferencing application. Most of my time went into getting iOS working, although some of the pointers below also apply to Safari on MacOS.

This post assumes you have some level of experience with implementing WebRTC. It’s not meant to be a beginner’s how-to, but a guide for experienced developers to smooth the process of integrating their apps with Safari/iOS. Where appropriate, I’ll point to related issues filed in the Webkit bug tracker so that you can add your voice to those discussions, as well as to some other informative posts.

I did an awful lot of bushwhacking in order to claim iOS support in my app; hopefully the knowledge below will make for a smoother journey for you!

Some good news first

First, the good news:

- Apple’s current implementation is fairly solid

- For something simple like a 1-1 audio/video call, the integration is quite easy

Let’s have a look at some requirements and trouble areas.

General Guidelines and Annoyances

Use the current WebRTC spec

If you’re building your application from scratch, I recommend using the current WebRTC API spec (it’s undergone several iterations). The following resources are great in this regard:

For those of you running apps with older WebRTC implementations, I’d recommend you upgrade to the latest spec if you can, as the next release of iOS disables the legacy APIs by default. In particular, it’s best to avoid the legacy addStream APIs, which make it more difficult to manipulate tracks in a stream.
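To illustrate why the track-based APIs are easier to work with, here is a minimal sketch that swaps the outgoing track of one kind (say, after the user switches cameras) using `RTCPeerConnection.getSenders()` and `RTCRtpSender.replaceTrack()` from the current spec. The helper name `replaceOutgoingTrack` is hypothetical, the sketch assumes the connection was set up with `addTrack()`, and you should confirm that the Safari versions you target implement `replaceTrack()`.

```javascript
// Hypothetical helper: swap the outgoing track of the same kind ('audio' or
// 'video') on an existing RTCPeerConnection via RTCRtpSender.replaceTrack(),
// which changes the media being sent without an SDP renegotiation.
function replaceOutgoingTrack(peerConnection, newTrack) {
  const sender = peerConnection.getSenders().find(function (s) {
    return s.track && s.track.kind === newTrack.kind;
  });
  if (!sender) {
    return Promise.reject(new Error('No sender found for kind: ' + newTrack.kind));
  }
  return sender.replaceTrack(newTrack);
}
```

In a page this would be called as `replaceOutgoingTrack(pc, newStream.getVideoTracks()[0])`. Note that the helper looks senders up by the kind of their current track, so a sender whose track was previously replaced with `null` will not be found.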

More background on this here: https://blog.mozilla.org/webrtc/the-evolution-of-webrtc/

iPhone and iPad have unique rules – test both

Since the iPhone and iPad have different rules and limitations, particularly around video, I’d strongly recommend that you test your app on both devices. It’s probably smarter to start by getting it working fully on the iPhone, which seems to have more limitations than the iPad.

More background on this here: https://webkit.org/blog/6784/new-video-policies-for-ios

Let the iOS madness begin

It’s possible that this is all you need to get your app working on iOS. If not, here comes the bad news: the iOS implementation has some rather maddening bugs/restrictions, especially in more complex scenarios like multiparty conference calls.

Other browsers on iOS missing WebRTC integration

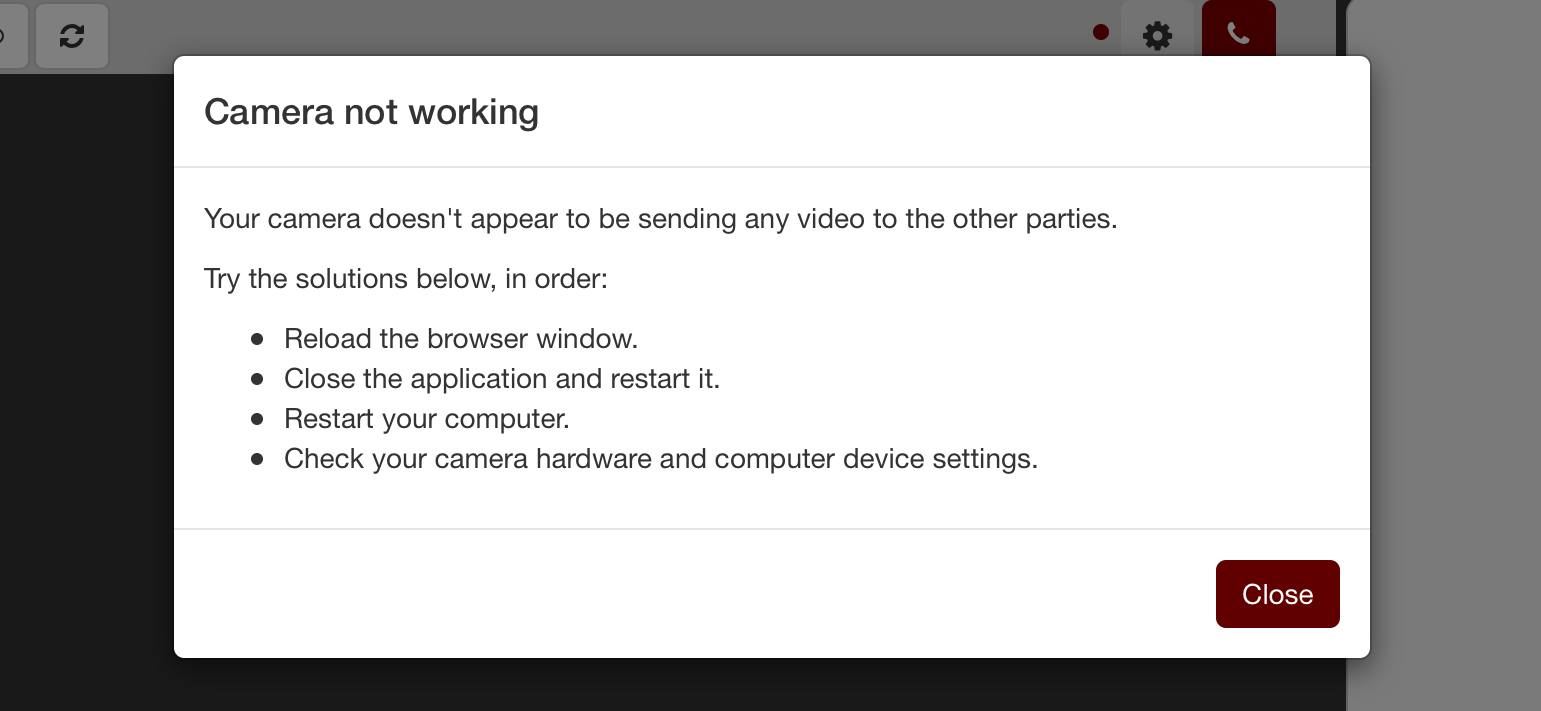

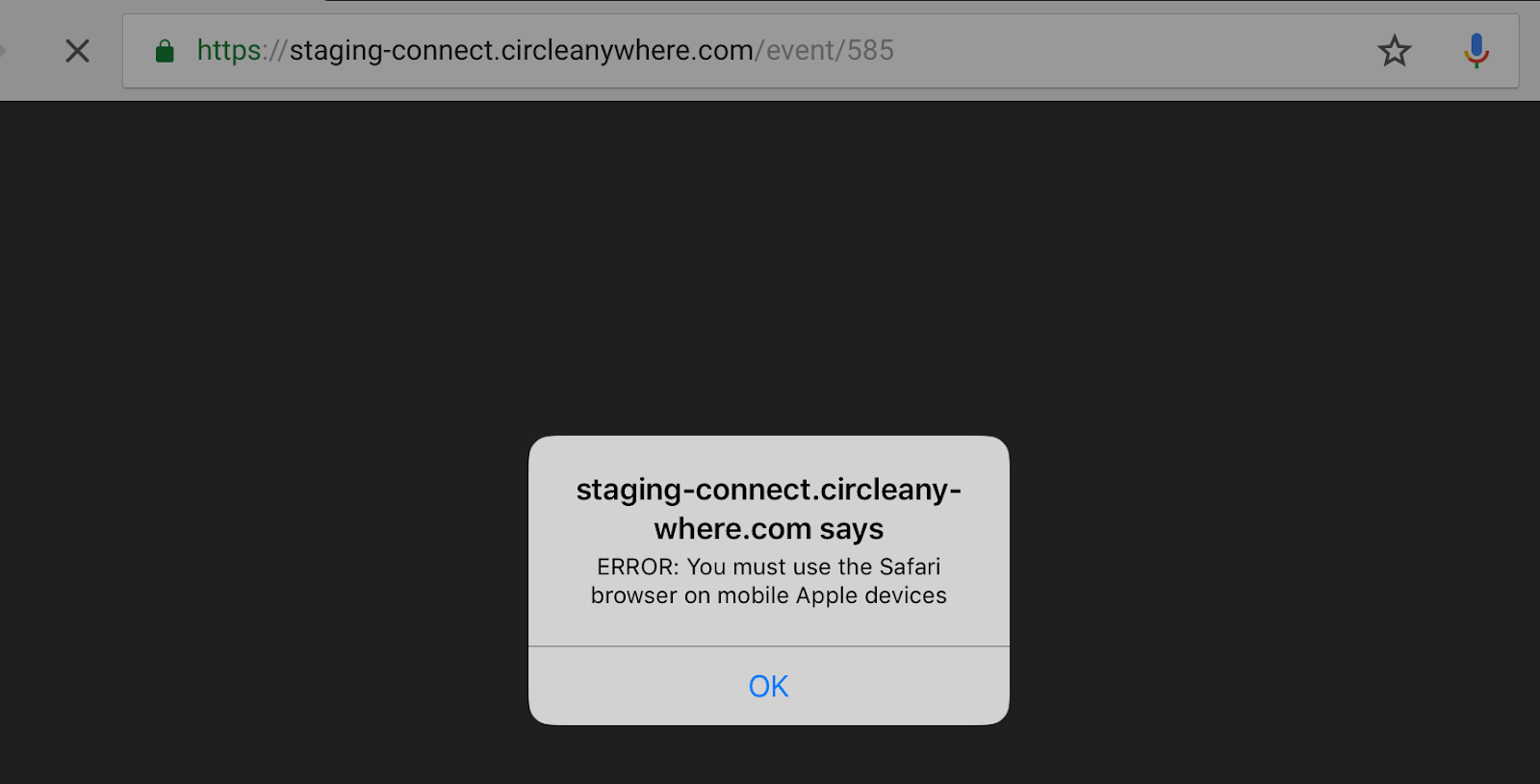

The WebRTC APIs have not yet been exposed to iOS browsers using WKWebView. In practice, this means that your web-based WebRTC application will only work in Safari on iOS, and not in any other browser the user may have installed (Chrome, for example), nor in an ‘in-app’ version of Safari.

To avoid user confusion, you’ll probably want to show a helpful error message if users try to open your app in another browser/environment besides Safari proper.
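Since the problem is that the APIs are simply missing in those environments, feature detection is one way to decide when to show that message. The sketch below assumes that "enough WebRTC" for a typical call app means `RTCPeerConnection` plus `navigator.mediaDevices.getUserMedia`; the helper name `hasWebRTCSupport` is hypothetical, and the environment is passed in (normally `window`) so the check can be exercised outside a browser.

```javascript
// Hypothetical helper: returns true only if the environment exposes the
// WebRTC pieces a typical call app needs. Pass `window` in a browser.
function hasWebRTCSupport(env) {
  const nav = env.navigator;
  return Boolean(
    typeof env.RTCPeerConnection === 'function' &&
      nav &&
      nav.mediaDevices &&
      typeof nav.mediaDevices.getUserMedia === 'function'
  );
}
```

At startup you would call `hasWebRTCSupport(window)` and, if it returns false on iOS, ask the user to reopen the link in Safari itself.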

Related issues:

- https://bugs.webkit.org/show_bug.cgi?id=183201

- https://bugs.chromium.org/p/chromium/issues/detail?id=752458

No beforeunload event, use pagehide

According to this Safari event documentation, the unload event has been deprecated, and the beforeunload event has been completely removed in Safari. So if you’re using these events, for example, to handle call cleanup, you’ll want to refactor your code to use the pagehide event on Safari instead.

```javascript
/**
 * iOS doesn't support beforeunload, use pagehide instead.
 * NOTE: I tried doing this detection via examining the window object
 * for onbeforeunload/onpagehide, but they both exist in iOS, even
 * though beforeunload is never fired.
 */
var iOS = ['iPad', 'iPhone', 'iPod'].indexOf(navigator.platform) >= 0;
var eventName = iOS ? 'pagehide' : 'beforeunload';

window.addEventListener(eventName, function (event) {
  // Do the work...
});
```

source: https://gist.github.com/thehunmonkgroup/6bee8941a49b86be31a787fe8f4b8cfe
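An alternative that avoids platform sniffing altogether (a sketch, not what the gist above does) is to register the same cleanup on both events and guard it so it runs at most once. The helper names `once` and `registerCallCleanup` are hypothetical. One caveat: `pagehide` also fires when a page enters the back/forward cache (`event.persisted` is then `true`), so the call would be torn down even if the user later navigates back to the page.

```javascript
// Hypothetical helper: wrap a function so it runs at most once.
function once(fn) {
  let called = false;
  return function (...args) {
    if (called) return undefined;
    called = true;
    return fn.apply(this, args);
  };
}

// Hypothetical helper: attach one run-once cleanup to both pagehide and
// beforeunload, so whichever event the browser actually fires triggers it.
function registerCallCleanup(target, cleanup) {
  const handler = once(cleanup);
  ['pagehide', 'beforeunload'].forEach(function (eventName) {
    target.addEventListener(eventName, handler);
  });
  return handler;
}
```

In a page: `registerCallCleanup(window, function () { pc.close(); });`.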

Getting & Playing Media

playsinline attribute

Step one is to add the required playsinline attribute to your video tags, which allows the video to start playing on iOS. So this:

```html
<video id="video-tag" autoplay></video>
```

Becomes this:

```html
<video id="video-tag" autoplay playsinline></video>
```

playsinline was originally only a requirement for Safari on iOS, but now you might need to use it in some cases in Chrome too; see Dag-Inge’s post for more on that.

See the thread here for details on this requirement: https://github.com/webrtc/samples/issues/929
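If your app creates video elements from JavaScript rather than in markup, the same attributes need to be set programmatically. A minimal sketch with a hypothetical helper name: `playsInline` is the IDL property that reflects the `playsinline` attribute, and the attribute is also set explicitly in case an older WebKit build lacks the property. The `muted` option is an assumption aimed at a local self-view, where muting avoids echo.

```javascript
// Hypothetical helper: configure a <video> element for inline WebRTC playback.
function prepareVideoElement(video, stream, options) {
  const opts = options || {};
  video.autoplay = true;
  video.playsInline = true; // reflects the playsinline attribute where supported
  video.setAttribute('playsinline', ''); // explicit attribute for older builds
  video.muted = Boolean(opts.muted); // e.g. true for a local self-view
  video.srcObject = stream;
  return video;
}
```

For example: `document.body.appendChild(prepareVideoElement(document.createElement('video'), remoteStream));`.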

Autoplay rules

Next you’ll need to be aware of the Webkit WebRTC rules on autoplaying audio/video. The main rules are:

- MediaStream-backed media will autoplay if the web page is already capturing.

- MediaStream-backed media will autoplay if the web page is already playing audio.

- A user gesture is required to initiate any audio playback – WebRTC or otherwise.

This is good news for the common use case of a video call, since you’ve most likely already gotten permission from the user to use their microphone/camera, which satisfies the first rule. Note that these rules work alongside the base autoplay rules for MacOS and iOS, so it’s good to be aware of them as well.

Related webkit posts:

- https://webkit.org/blog/7763/a-closer-look-into-webrtc

- https://webkit.org/blog/7734/auto-play-policy-changes-for-macos

- https://webkit.org/blog/6784/new-video-policies-for-ios
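When none of those rules apply, `HTMLMediaElement.play()` rejects, typically with a `NotAllowedError`. A hedged sketch of handling that, where the helper name and the `onBlocked` callback are hypothetical: try to play, and if the autoplay policy blocks it, hand the UI a retry function to call from a tap or click handler, which counts as a user gesture.

```javascript
// Hypothetical helper: try to start playback; if the autoplay policy blocks it,
// pass a retry function to onBlocked so the UI can replay on a user gesture.
// Resolves to true if playback started, false if it was blocked.
function playOrRequestGesture(media, onBlocked) {
  const result = media.play();
  // Older implementations may return undefined instead of a Promise.
  if (!result || typeof result.then !== 'function') {
    return Promise.resolve(true);
  }
  return result.then(
    function () { return true; },
    function (error) {
      if (error && error.name === 'NotAllowedError') {
        onBlocked(function retry() { return media.play(); });
        return false;
      }
      throw error; // some other failure, e.g. an unsupported source
    }
  );
}
```

For example, `onBlocked` could reveal a "Tap to start audio" button whose click handler calls `retry()`.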

No low/limited video resolutions

UPDATE 2019-08-18:

Unfortunately, this bug has only gotten worse in iOS 12, as Apple’s attempt to fix it broke the sending of video to peer connections for non-standard resolutions. On the positive side, the issue does seem to be fully fixed in the latest iOS 13 Beta: https://bugs.webkit.org/show_bug.cgi?id=195868

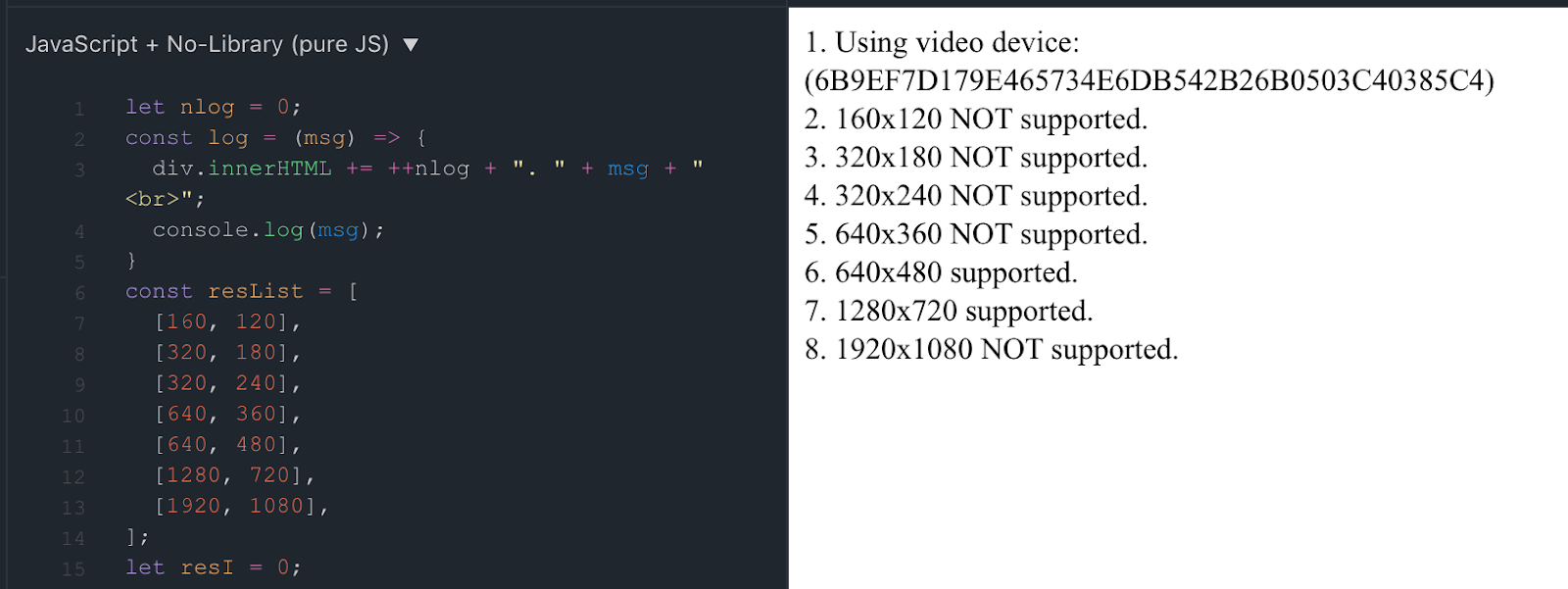

Visiting https://jsfiddle.net/thehunmonkgroup/kmgebrfz/15/ (or the webrtcHacks WebRTC-Camera-Resolution project) in a WebRTC-compatible browser will give you a quick analysis of common resolutions supported by the tested device/browser combination. You’ll notice that in Safari on both MacOS and iOS, there aren’t any low video resolutions available, such as the industry-standard QQVGA, or 160×120 pixels. These small resolutions are pretty useful for serving thumbnail-sized videos; think of the filmstrip of users in a Google Hangouts call, for example.

Now, you could just send whatever the lowest available native resolution is along the peer connection and let the receiver’s browser downscale the video, but you’ll run the risk of saturating the download bandwidth of users with slower internet connections in mesh/SFU scenarios.

I’ve worked around this issue by restricting the bitrate of the sent video, which is a fairly quick and dirty compromise. Another solution that would take a bit more work is to handle downscaling the video stream in your app before passing it to the peer connection, although that will result in the client’s device spending some CPU cycles.

Example code:
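A minimal sketch of the bitrate cap, assuming it is done by SDP munging (one way to do it, not necessarily the exact code used in production here). The hypothetical helper inserts a `b=AS:<kbps>` line (application-specific maximum, in kilobits per second) right after the `c=` line of each video m-section, replaces any existing one, and leaves audio sections alone. Some browsers (Firefox in particular) honour `b=TIAS` rather than `b=AS`, so test against your targets.

```javascript
// Hypothetical helper: cap video bandwidth by munging SDP.
// Adds "b=AS:<kbps>" right after the c= line of every m=video section and
// drops any b=AS line already present there. Audio sections are untouched.
function setVideoBitrate(sdp, kbps) {
  const lines = sdp.split('\r\n');
  const out = [];
  let inVideoSection = false;
  for (const line of lines) {
    if (line.startsWith('m=')) {
      inVideoSection = line.startsWith('m=video');
    }
    if (inVideoSection && line.startsWith('b=AS:')) {
      continue; // re-added below, right after the c= line
    }
    out.push(line);
    if (inVideoSection && line.startsWith('c=')) {
      out.push('b=AS:' + kbps);
    }
  }
  return out.join('\r\n');
}
```

A common pattern (used, for example, in the webrtc/samples bandwidth demo) is to apply this kind of rewrite to the remote description before `setRemoteDescription()`, which limits what the local side sends. Where `RTCRtpSender.setParameters()` is supported, setting `encodings[0].maxBitrate` is an alternative that avoids touching the SDP.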

New getUserMedia() request kills existing stream track

If your application grabs media streams from multiple getUserMedia() requests, you are likely in for problems with iOS. From my testing, the issue can be summarized as follows: if getUserMedia() requests a media type already requested in a previous getUserMedia() call, the previously requested media track’s muted property is set to true, and there is no way to programmatically unmute it. Data will still be sent along a peer connection, but it’s not of much use to the other party with the track muted! This limitation is currently expected behavior on iOS.

I was able to successfully work around it by:

- Grabbing a global audio/video stream early on in my application’s lifecycle

- Using MediaStream.clone(), MediaStream.addTrack(), and MediaStream.removeTrack() to create/manipulate additional streams from the global stream without calling getUserMedia() again.

```javascript
/**
 * Illustrates how to clone and manipulate MediaStream objects.
 */
function makeAudioOnlyStreamFromExistingStream(stream) {
  var audioStream = stream.clone();
  var videoTracks = audioStream.getVideoTracks();
  for (var i = 0, len = videoTracks.length; i < len; i++) {
    audioStream.removeTrack(videoTracks[i]);
  }
  console.log('created audio only stream, original stream tracks: ', stream.getTracks());
  console.log('created audio only stream, new stream tracks: ', audioStream.getTracks());
  return audioStream;
}

function makeVideoOnlyStreamFromExistingStream(stream) {
  var videoStream = stream.clone();
  var audioTracks = videoStream.getAudioTracks();
  for (var i = 0, len = audioTracks.length; i < len; i++) {
    videoStream.removeTrack(audioTracks[i]);
  }
  console.log('created video only stream, original stream tracks: ', stream.getTracks());
  console.log('created video only stream, new stream tracks: ', videoStream.getTracks());
  return videoStream;
}

function handleSuccess(stream) {
  var audioOnlyStream = makeAudioOnlyStreamFromExistingStream(stream);
  var videoOnlyStream = makeVideoOnlyStreamFromExistingStream(stream);
  // Do stuff with all the streams...
}

function handleError(error) {
  console.error('getUserMedia() error: ', error);
}

var constraints = {
  audio: true,
  video: true,
};
navigator.mediaDevices.getUserMedia(constraints)
  .then(handleSuccess)
  .catch(handleError);
```

source: https://gist.github.com/thehunmonkgroup/2c3be48a751f6b306f473d14eaa796a0

See this post for more: https://developer.mozilla.org/en-US/docs/Web/API/MediaStream, and this related issue: https://bugs.webkit.org/show_bug.cgi?id=179363
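One way to enforce the "call getUserMedia() once" rule across a larger codebase, sketched under the assumption that every consumer can work with a clone: memoize the request and hand out `MediaStream.clone()` copies. The factory name `createSharedMediaSource` is hypothetical, and `getUserMedia` is passed in so the logic can be tested without a browser. A failed request clears the cache so the user can retry, for example after granting permission.

```javascript
// Hypothetical factory: returns a function that resolves to a clone of one
// shared getUserMedia() stream. getUserMedia is only invoked once, unless the
// request fails, in which case the next call tries again.
function createSharedMediaSource(getUserMedia, constraints) {
  let pending = null;
  return function getClonedStream() {
    if (!pending) {
      pending = getUserMedia(constraints).catch(function (err) {
        pending = null; // allow a retry after a failed request
        throw err;
      });
    }
    return pending.then(function (stream) {
      return stream.clone();
    });
  };
}
```

In a page: `const getStream = createSharedMediaSource(function (c) { return navigator.mediaDevices.getUserMedia(c); }, { audio: true, video: true });`, then `getStream()` wherever a stream is needed. The wrapper function avoids calling `getUserMedia` detached from `navigator.mediaDevices`.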

Managing Media Devices

Media device IDs change on page reload

UPDATE 2019-08-18:

This has been improved as of iOS 12.2, where device IDs are now stable across browsing sessions after getUserMedia() has been called once. However, device IDs are still not preserved across browser sessions, so this improvement isn’t really helpful for storing a user’s device preferences longer term. For more info, see https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

Many applications include support for user selection of audio/video devices. This eventually boils down to passing the deviceId to getUserMedia() as a constraint.

Unfortunately for you as a developer, as part of Webkit’s security protocols, random deviceIds are generated for all devices on each new page load. This means that, unlike on every other platform, you can’t simply stuff the user’s selected deviceId into persistent storage for future reuse.

The cleanest workaround I’ve found for this issue is:

- Store both device.deviceId and device.label for the device the user selects

- For any code workflow which eventually passes a deviceId to getUserMedia():

  - Try using the saved deviceId

  - If that fails, enumerate the devices again, and try looking up the deviceId from the saved device label.

On a related note: Webkit further prevents fingerprinting by only exposing a user’s actual available devices after the user has granted device access. In practice, this means you need to make a getUserMedia() call before you call enumerateDevices().

```javascript
/**
 * Illustrates how to handle getting the correct deviceId for
 * a user's stored preference, while accounting for Safari's
 * security protocol of serving a random deviceId per page load.
 */

// These would be pulled from some persistent storage...
var storedVideoDeviceId = '1234';
var storedVideoDeviceLabel = 'Front camera';

function getDeviceId(devices) {
  var videoDeviceId;
  var i, device;
  // Try matching by ID first.
  for (i = 0; i < devices.length; ++i) {
    device = devices[i];
    console.log(device.kind + ': ' + device.label + ' id = ' + device.deviceId);
    if (device.kind === 'videoinput' && device.deviceId === storedVideoDeviceId) {
      videoDeviceId = device.deviceId;
      break;
    }
  }
  if (!videoDeviceId) {
    // Next try matching by label.
    for (i = 0; i < devices.length; ++i) {
      device = devices[i];
      if (device.kind === 'videoinput' && device.label === storedVideoDeviceLabel) {
        videoDeviceId = device.deviceId;
        break;
      }
    }
  }
  if (!videoDeviceId) {
    // Sensible default: the first video input (devices[0] may be a microphone).
    for (i = 0; i < devices.length; ++i) {
      if (devices[i].kind === 'videoinput') {
        videoDeviceId = devices[i].deviceId;
        break;
      }
    }
  }
  // Now, the discovered deviceId can be used in getUserMedia() requests.
  var constraints = {
    audio: true,
    video: {
      deviceId: {
        exact: videoDeviceId,
      },
    },
  };
  navigator.mediaDevices.getUserMedia(constraints)
    .then(function (stream) {
      // Do something with the stream...
    })
    .catch(function (error) {
      console.error('getUserMedia() error: ', error);
    });
}

function handleSuccess(stream) {
  // Release the permission-probe tracks before enumerating devices.
  stream.getTracks().forEach(function (track) {
    track.stop();
  });
  navigator.mediaDevices.enumerateDevices()
    .then(getDeviceId)
    .catch(function (error) {
      console.error('enumerateDevices() error: ', error);
    });
}

// Safari requires the user to grant device access before providing
// all necessary device info, so do that first.
var constraints = {
  audio: true,
  video: true,
};
navigator.mediaDevices.getUserMedia(constraints)
  .then(handleSuccess)
  .catch(function (error) {
    console.error('getUserMedia() error: ', error);
  });
```

source: https://gist.github.com/thehunmonkgroup/197983bc111677c496bbcc502daeec56

Related issue: https://bugs.webkit.org/show_bug.cgi?id=179220

Related post: https://webkit.org/blog/7763/a-closer-look-into-webrtc

Speaker selection not supported

Webkit does not yet support HTMLMediaElement.setSinkId(), which is the API method used for assigning audio output to a specific device. If your application includes support for this, you’ll need to make sure it can handle cases where the underlying API support is missing.

```javascript
/**
 * Illustrates methods for testing for the existence of support
 * for setting a speaker device.
 */

// Check for the setSinkId() method on HTMLMediaElement.
if ('setSinkId' in HTMLMediaElement.prototype) {
  // Do the work.
}

// ...or...

// Check for the sinkId property on an HTMLMediaElement instance.
var element = document.querySelector('video'); // any <audio>/<video> element
if (typeof element.sinkId !== 'undefined') {
  // Do the work.
}
```

source: https://gist.github.com/thehunmonkgroup/1e687259167e3a48a55cd0f3260deb70

Related issue: https://bugs.webkit.org/show_bug.cgi?id=179415
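Building on that check, a small wrapper can make speaker selection degrade gracefully. This is a sketch with a hypothetical helper name: it calls `setSinkId()` when the element has it and otherwise resolves to `false`, so the UI can hide or disable its output-device picker.

```javascript
// Hypothetical helper: route audio to the chosen output device when
// HTMLMediaElement.setSinkId() exists; otherwise resolve to false.
function setAudioOutput(mediaElement, deviceId) {
  if (typeof mediaElement.setSinkId !== 'function') {
    return Promise.resolve(false); // e.g. Safari: keep the default output
  }
  return mediaElement.setSinkId(deviceId).then(function () {
    return true;
  });
}
```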

PeerConnections & Calling

Beware, no VP8 support

UPDATE 2019-08-18:

Support for VP8 has now been added as of iOS 12.2. See https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

While the W3C spec clearly states that support for the VP8 video codec (along with the H.264 codec) is to be implemented, Apple has thus far chosen to not support it. Sadly, this is anything but a technical issue, as libwebrtc includes VP8 support, and Webkit actively disables it.

So at this time, my advice to achieve the best interoperability in various scenarios is:

- Multiparty MCU – make sure that H.264 is a supported codec

- Multiparty SFU – use H.264

- Multiparty Mesh and peer to peer – pray everyone can negotiate a common codec

I say best interop because while this gets you a long way, it won’t be all the way. For example, Chrome for Android does not support software H.264 encoding yet. In my testing, many (but not all) Android phones have hardware H.264 encoding, but those that are missing hardware encoding will not work in Chrome for Android.
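For the SFU/MCU cases, one way to steer negotiation toward H.264 is `RTCRtpTransceiver.setCodecPreferences()`, fed with the codecs from `RTCRtpSender.getCapabilities('video')` reordered so H.264 comes first. The reordering helper below is a hypothetical sketch. Support for `setCodecPreferences()` varies between browser versions, so feature-detect it and fall back to server-side codec selection where it is missing.

```javascript
// Hypothetical helper: stable reorder so codecs with the given mimeType come
// first. Other entries (including rtx/red/ulpfec) are kept in their original order.
function preferCodec(codecs, mimeType) {
  const wanted = mimeType.toLowerCase();
  const matches = function (codec) {
    return codec.mimeType.toLowerCase() === wanted;
  };
  const preferred = codecs.filter(matches);
  const others = codecs.filter(function (codec) {
    return !matches(codec);
  });
  return preferred.concat(others);
}
```

In a page this would be used roughly as `transceiver.setCodecPreferences(preferCodec(RTCRtpSender.getCapabilities('video').codecs, 'video/H264'))`, guarded by `'setCodecPreferences' in RTCRtpTransceiver.prototype`.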

Associated bug reports:

- https://bugs.webkit.org/show_bug.cgi?id=167257

- https://bugs.webkit.org/show_bug.cgi?id=173141

- https://bugs.chromium.org/p/chromium/issues/detail?id=719023

Send/receive only streams

As previously mentioned, iOS doesn’t support the legacy WebRTC APIs. However, not all browser implementations fully support the current specification either.

As of this writing, a good example is creating a send-only audio/video peer connection. iOS doesn’t support the legacy RTCPeerConnection.createOffer() options offerToReceiveAudio/offerToReceiveVideo, and the current stable Chrome doesn’t support the RTCRtpTransceiver spec by default.
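With the current spec, the send-only case is expressed with transceivers rather than offer options. A hedged sketch with a hypothetical helper name: it uses `RTCPeerConnection.addTransceiver()` with `direction: 'sendonly'` when that works and falls back to `addTrack()` otherwise. The fallback negotiates `sendrecv`, so the remote side simply has to ignore the unused receive direction. The `try`/`catch` is there because some older Chrome builds reportedly exposed `addTransceiver()` but threw when running with Plan B SDP semantics.

```javascript
// Hypothetical helper: add every track of `stream` as send-only where possible.
function addSendOnlyTracks(peerConnection, stream) {
  return stream.getTracks().map(function (track) {
    if (typeof peerConnection.addTransceiver === 'function') {
      try {
        return peerConnection.addTransceiver(track, {
          direction: 'sendonly',
          streams: [stream],
        });
      } catch (err) {
        // addTransceiver() exists but is unusable here; fall through.
      }
    }
    // Fallback: negotiates sendrecv rather than sendonly.
    return peerConnection.addTrack(track, stream);
  });
}
```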

Other more esoteric bugs and limitations

There are certainly other corner cases you can hit that seem a bit out of scope for this post. However, an excellent resource should you run aground is the Webkit issue queue, which you can filter just for WebRTC-related issues: https://bugs.webkit.org/buglist.cgi?component=WebRTC&list_id=4034671&product=WebKit&resolution=—

Remember, Webkit/Apple’s implementation is young

It’s still missing some features (like the speaker selection mentioned above), and in my testing isn’t as stable as the more mature implementation in Google Chrome.

There have also been some major bugs — capturing audio was completely broken for the majority of the iOS 12 Beta release cycle (thankfully they finally fixed that in Beta 8).

Apple’s long-term commitment to WebRTC as a platform isn’t clear, particularly because they haven’t released much information about it beyond basic support. As an example, the previously mentioned lack of VP8 support is troubling with respect to their intention to honor the agreed-upon W3C specifications.

These are things worth thinking about when considering a browser-native implementation versus a native app. For now, I’m cautiously optimistic, and hopeful that their support of WebRTC will continue, and extend into other non-Safari browsers on iOS.

{“author”: “Chad Phillips”}

One of the most detailed posts I’ve seen on the subject; thank you Chad, for sharing.

Please also note that Safari does not support data channels.

@JSmitty, all of the ‘RTCDataChannel’ examples at https://webrtc.github.io/samples/ do work in Safari on MacOS, but do not currently work in Safari on iOS 11/12. I’ve filed https://bugs.webkit.org/show_bug.cgi?id=189503 and https://github.com/webrtc/samples/issues/1123 — would like to get some feedback on those before I incorporate this info into the post. Thanks for the heads up!

OK, so I’ve confirmed data channels DO work in Safari on iOS, but there’s a caveat: iOS does not include local ICE candidates by default, and many of the data channel examples I’ve seen depend on that, as they’re merely sending data between two peer connections on the same device.

See https://bugs.webkit.org/show_bug.cgi?id=189503#c2 for how to temporarily enable local ICE on iOS.

Great article. Thanks Chad & Chad for sharing your expertise.

As to DataChannel support. Looks like Safari officially still doesn’t support it according to the support matrix.

https://developer.mozilla.org/en-US/docs/Web/API/RTCDataChannel

My own testing shows that DataChannel works between two Safari browser windows. However at this time (Jan 2020) it does not work between Chrome and Safari windows. Also fails between Safari and aiortc (Python WebRTC provider). DataChannel works fine between Chrome and aiortc.

A quick way to test this problem is via sharedrop.io

Transferring files works fine between same brand browser windows, but not across brands.

Hope Apple is working on the compatibility issues with Chrome.

Ivelin

Nice summary, Chad. Thanks for this!

Very good post, Chad. Just what I was looking for. Thanks for sharing this knowledge. 🙂

Thanks for this, Chad. I’m currently struggling with this myself, where a portable ‘web’ app is being written.

I’m hopeful it will creep into WKWebView soon!

Thanks for detailing the issues.

One suggestion for any future article would be to include the iOS Safari limitation on simultaneous playback of multiple media elements with audio.

This means refactoring so that multiple (remote) audio sources are rendered by a single element.

There’s a good bit of detail/discussion about this limitation here: https://bugs.webkit.org/show_bug.cgi?id=176282

Media servers that mix the audio are a good solution.
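As a sketch of the single-element approach described above, the Web Audio API can mix several remote streams into one `MediaStream` via `AudioContext.createMediaStreamSource()` and `createMediaStreamDestination()`, and that one stream can then be played by a single `<audio>` element. The helper name is hypothetical, and whether Web Audio handles remote WebRTC audio reliably depends on the browser version, so treat this as something to verify on real devices.

```javascript
// Hypothetical helper: mix the audio of several MediaStreams into one stream.
// Note: createMediaStreamSource() throws if a stream has no audio track.
function mixRemoteAudio(audioContext, streams) {
  const destination = audioContext.createMediaStreamDestination();
  const sources = streams.map(function (stream) {
    const source = audioContext.createMediaStreamSource(stream);
    source.connect(destination);
    return source;
  });
  // Keep `sources` so a departing participant can be disconnected later.
  return { stream: destination.stream, sources: sources };
}
```

In a page you might call `mixRemoteAudio(new AudioContext(), remoteStreams)` and assign the returned `stream` to one `<audio>` element’s `srcObject`; on iOS the `AudioContext` may also need `resume()` called from a user gesture.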

I’m facing the same issue, “New getUserMedia() request kills existing stream track”. Let’s see whether this helps me or not.

On iOS, calling getUserMedia() again kills the video display from the first getUserMedia() call. That’s the issue I’m facing, but I need to pass the stream from one peer to another.

Thank you, Chad, for sharing this. I was struggling with the resolution issue on iOS and was not sure why I was not getting a Full HD stream. I hope this gets supported soon.

VP8 is a nightmare. I work on a platform where we publish user-generated content, including video, and the lack of support for VP8 forces us to do expensive transcoding on these videos. I wonder why vendors won’t just settle on a universal codec for mobile video.

VP8 is supported as of iOS 12.2: https://webkit.org/blog/8672/on-the-road-to-webrtc-1-0-including-vp8/

Great Post!

Chad, I am facing an issue with iOS Safari. The issue is described below.

I am using the KMS library for room server handling and calling. There wasn’t any support for Safari/iOS Safari in it, so I added adapter.js (shim) to make my application run on Safari and iOS (Safari). After adding it, everything worked perfectly on Safari and iOS, but when more than two people join the call, the most recently added remote stream works fine while the existing remote stream(s) get stuck/disconnected. In other words, only peer-to-peer calls work, not multiple remote streams. Can you please advise on how to handle multiple remote streams in iOS (Safari)?

Thanks

Your best bet is probably to search the webkit bugtracker, and/or post a bug there.

No low/limited video resolutions: 1920×1080 not supported

-> Are you talking about iOS 12?

Because I’m doing 4K on iOS 12.3.1 with the Janus echo test on an iPhone XS Max (the only one with a 4K front camera).

Of course, if I run your script on my MBP it will say Full HD is not supported, because the camera is only 720p.

That may be a standard camera resolution on that particular iPhone. The larger issue has been that only resolutions natively supported by the camera have been available, leading to difficulty in reliably selecting resolutions in apps, especially lower resolutions like those used in thumbnails.

Thankfully, this appears to be fully addressed in the latest beta of iOS 13.

I lost so many days of work before finding this article. It’s amazing and explains the reasons behind a lot of the strange bugs in iOS. Thank you so much.

Hi, I’m having issues with Safari on iOS. In the video tag, adding autoplay and playsinline doesn’t work with our WebRTC implementation.

Obviously it works fine in any browser on any other platform.

I need to add the controls attribute, then manually go to full screen and press play.

Is there a way to play the video inside the web page?

Thanks

First of all, thanks for detailing the issues.

This article is unique in providing so many insights into WebRTC/Safari-related issues. I learned a lot and applied some of the techniques in our production application.

But I have a very unusual case that I am struggling with right now, with Safari, as you might guess. I would be very grateful if you could help me, or at least point me in the right direction.

The case:

We have a WebRTC-based one-to-one video chat: one side is always a mobile app (the host), which is the initiator, and the other side is always a browser, either desktop or mobile. Making the app work across different networks was a pain in the neck until recently, but we managed to fix it by changing some configurations. The issue was that across different networks, WebRTC was not generating relay candidates, and most of the time not server reflexive candidates either; as you know, without at least STUN-provided candidates the parties cannot establish any connection. The solution was simple, although it took a lot of searching on Google (https://github.com/pion/webrtc/issues/810): we found out that mobile data providers mostly assign IPv6 addresses to mobile users, and when users were on a mobile data plan instead of local Wi-Fi, they could not connect to each other.

By the way, we are using a cloud provider for STUN/TURN servers (Xirsys). When we asked their technical support team, they said their servers should handle IPv6-based requests, but in practice it did not work. So we updated the RTCPeerConnection configuration by adding optional constraints (these optional constraints are not officially documented either; we found them in other, unofficial sources). The change simply disabled IPv6 on both the mobile app (iOS and Android) and the browser. After this change, everything worked perfectly, until we found out that Safari was not working at all. So we reverted the change for Safari and kept IPv6 disabled for the other cases (Chrome, Firefox, Android browsers):

```javascript
const iceServers = [
  { urls: "stun:" },
  {
    urls: ["turn:", "turn:", /* … */],
    credential: "secret",
    username: "secret",
  },
];

let RTCConfig;

// just dirty browser detection
const ua = navigator.userAgent.toLocaleLowerCase();
const isSafari = ua.includes("safari") && !ua.includes("chrome");

if (isSafari) {
  RTCConfig = iceServers;
} else {
  RTCConfig = {
    iceServers,
    constraints: { optional: [{ googIPv6: false }] },
  };
}
```

If I wrap the iceServers array inside an envelope object together with the optional constraints and pass it to new RTCPeerConnection(RTCConfig), it throws an error saying:

“Attempted to assign readonly property”, pointing into safari_shim.js:255.

Can you please help with this issue? Our main customers use iPhones, so making our app work in Safari across different networks is critical to our business. If you provide some kind of paid consultation, that is also OK for us.

Looking forward to hearing from you

Thanks for the great summary regarding Safari/IOS.

The workaround for the low-bandwidth issue is very interesting. I played with the sample, and it worked as expected. But it plays on the same device, doesn’t it?

When I tried adding a similar “b=AS:500\r\n” line to the SDP and tested it across different devices (one a Windows laptop running Chrome, the other an iPad running Safari), it didn’t seem to work.

The symptom was that the stream was neither received nor sent. In short, the media connection was never established.

I checked the SDP; it looks like this:

```
"sdp": {
  "type": "offer",
  "sdp": "
v=0\r\n
o=- 3369656808988177967 2 IN IP4 127.0.0.1\r\n
s=-\r\n
t=0 0\r\n
a=group:BUNDLE 0 1 2\r\n
a=extmap-allow-mixed\r\n
a=msid-semantic: WMS 7BLOSVujr811EZHSiFZI2t8yMML8LpOgo0in\r\n
m=audio 9 UDP/TLS/RTP/SAVPF 111 63 103 104 9 0 8 106 105 13 110 112 113 126\r\n
c=IN IP4 0.0.0.0\r\n
b=AS:500\r\n
…
}
```

Also I didn’t quite understand the statement in the article.

“I’ve worked around this issue by restricting the bitrate of the sent video, which is a fairly quick and dirty compromise. Another solution that would take a bit more work is to handle downscaling the video stream in your app before passing it to the peer connection.” Don’t both approaches operate on the sending side?

Great insights, Chad! It’s fascinating to see how WebRTC is evolving, especially with Safari. Your tips on navigating its limitations are incredibly helpful. Looking forward to implementing some of these strategies in my projects!