Losing customers because of issues with your network service is a bad thing. Sure, you can gather data and try to fix problems after the fact, but isn’t it better to prevent issues in the first place? What are the most common pitfalls to look out for? What’s a good benchmark? What WebRTC-specific user experience elements should you spend your limited resources focusing on? No service can be perfect, so what is a reasonable error rate? These are all tough questions to answer without decent industry data.

Fortunately Lasse Lumiaho and Varun Singh from callstats.io have agreed to share some stats from their WebRTC monitoring service to help you answer these questions. Their service does not monitor every WebRTC service, but their 100+ customer base does provide a statistically meaningful sample to help give you some practical metrics for planning and comparison.

Fellow WebRTC data nerds, please enjoy…

{“editor”, “chad hart“}

callstats.io operates a service that monitors and analyses WebRTC media sessions in real-time. We measure every aspect of a WebRTC session from session startup until the session termination. We diagnose session set up issues, media disruptions, media quality, and other important annoyances. In this post, we will go through some usage metrics aggregated over the last year (Jan 2015 – Feb 2016), corresponding to billions of minutes.

Typical WebRTC applications

We classify our customers into 3 service categories:

- Communication apps

- Marketplaces (e-health, e-learning etc.)

- Customer-service apps

Communication apps tend to serve across all platforms, i.e., web and mobile. Marketplace apps typically serve two-sided markets, e.g., a doctor and a patient, or a teacher and a student. A customer-service app mostly connects two parties: a customer and a customer service agent. Typically, the customer connects to an agent through a WebRTC gateway, or vice versa.

WebRTC usage in numbers

Browsers

To give an overview of WebRTC, we’ll go through some basic usage numbers. Most WebRTC users rely on Chrome. A whopping 95% of the participants use Chrome for WebRTC sessions, and the remaining 5% use Firefox. We encourage our customers to use adapter.js to overcome browser interoperability issues.

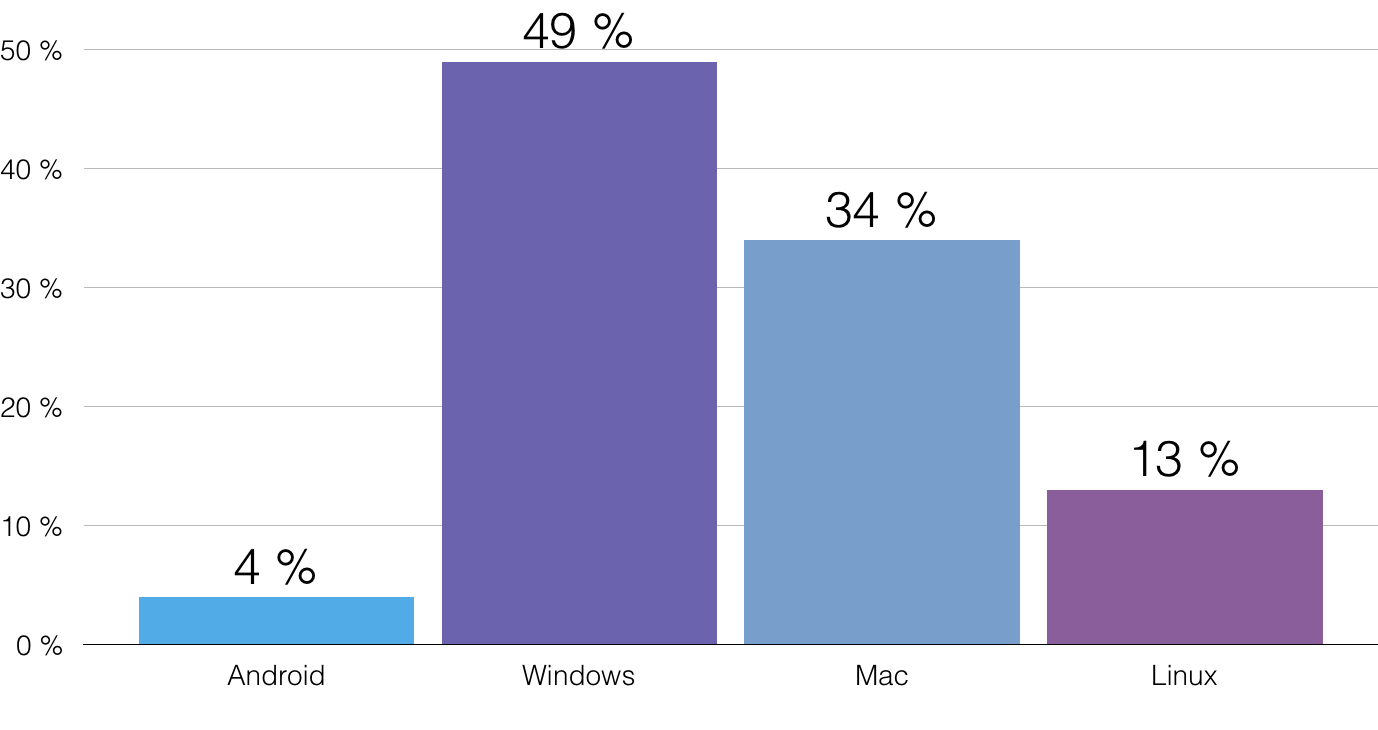

Operating Systems

Another sign that it is early days for WebRTC is the distribution of operating systems. Windows leads with 49% (against an 85% global desktop share according to StatCounter), but OSX trails closely with 34% (9%). Linux accounts for 13% of WebRTC users, which is roughly nine times its estimated share of desktop users (1.5%).
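
The comparison above boils down to dividing each operating system's share of WebRTC users by its share of all desktop users. A minimal sketch of that arithmetic, using only the percentages quoted in the text (the type and function names are illustrative, not part of any callstats.io API):

```typescript
// Share of WebRTC users vs. share of all desktop users, in percent.
// The figures are the ones quoted in the paragraph above.
interface OsShare {
  os: string;
  webrtcPct: number;
  desktopPct: number;
}

const shares: OsShare[] = [
  { os: "Windows", webrtcPct: 49, desktopPct: 85 },
  { os: "OSX", webrtcPct: 34, desktopPct: 9 },
  { os: "Linux", webrtcPct: 13, desktopPct: 1.5 },
];

// A ratio above 1 means the OS is over-represented among WebRTC users.
function representationRatio(s: OsShare): number {
  return s.webrtcPct / s.desktopPct;
}

const ratios = shares.map((s) => ({ os: s.os, ratio: representationRatio(s) }));
```

The ratios come out at roughly 0.6 for Windows, 3.8 for OSX and 8.7 for Linux.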

Multi-party communication: are we there, yet?

More than half of the WebRTC sessions (65%) monitored by callstats.io have three participants (note that this level of multi-party usage is probably specific to our customer base rather than the general WebRTC population). Roughly a third of WebRTC sessions have two participants. Meanwhile, sessions with more than eight participants account for only 0.3%. The biggest session ever recorded on callstats.io, from an interactive learning company, had 118 participants, and we’ve seen several instances of sessions with 30+ participants. These are all encouraging observations because we believe the ecosystem is ready for its growth phase!

Performance Metrics

Just like any other software product or service, a WebRTC application requires analytics so that developers know how users are using the application. A more traditional web analytics solution looks at pageviews and clicks as metrics to measure engagement. For a WebRTC application, the analytics concentrate on two levels:

- the service level

- the conference level

The service level metrics indicate the engagement of the end-users with the service. The conference level metrics are based on the media quality of the individual participants in a WebRTC conference session. For example, they capture whether a user intermittently loses internet connectivity, fails to connect, or has low audio/video rendering quality.

Timeliness and disruptions

Audio and video quality degrades when packets arrive late and the dejitter buffer skips, discards or drops the frames. Hence, more than throughput, WebRTC quality is about network jitter, end-to-end latency, and the timeliness of playout of the audio and video frames. Media packets or frames are dropped during playback either because they arrived in the dejitter buffer too early or too late due to network issues, or to maintain the audio/video playback rate.
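
To make "network jitter" concrete, here is a minimal sketch of the interarrival jitter estimator defined in RFC 3550 (the RTP specification). It is shown for illustration only: the text does not say how callstats.io computes jitter, the `PacketTiming` type is hypothetical, and milliseconds are used instead of RTP timestamp units for readability.

```typescript
// Timing of one received media packet (hypothetical shape for this sketch).
interface PacketTiming {
  sentMs: number; // sender timestamp of the packet, in ms
  arrivedMs: number; // arrival time at the receiver, in ms
}

// Running interarrival jitter estimate over a sequence of packets.
function interarrivalJitterMs(packets: PacketTiming[]): number {
  let jitter = 0;
  for (let i = 1; i < packets.length; i++) {
    const prev = packets[i - 1];
    const cur = packets[i];
    // Change in transit time between two consecutive packets.
    // A constant clock offset between sender and receiver cancels out.
    const d = (cur.arrivedMs - prev.arrivedMs) - (cur.sentMs - prev.sentMs);
    // Exponential smoothing with gain 1/16, as in RFC 3550.
    jitter += (Math.abs(d) - jitter) / 16;
  }
  return jitter;
}
```

Packets that arrive with the same spacing they were sent with yield a jitter of zero; the estimate grows as the spacing at the receiver starts to deviate from the spacing at the sender.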

If media is not played back in a timely manner for an extended period of time, it causes a disruption in communication. Disruption may occur due to a lack of available capacity, high delay, or a loss of network connectivity caused by high packet loss or a change in network interfaces.

We observe that some users self-diagnose, i.e., they react to disruptions by either turning on and off media, or reloading the page, which is a cause of churn. Let’s dig in.

Failures

The following metrics are as observed by callstats.io. They are primarily gathered from browsers, including Firefox and Chrome for Android.

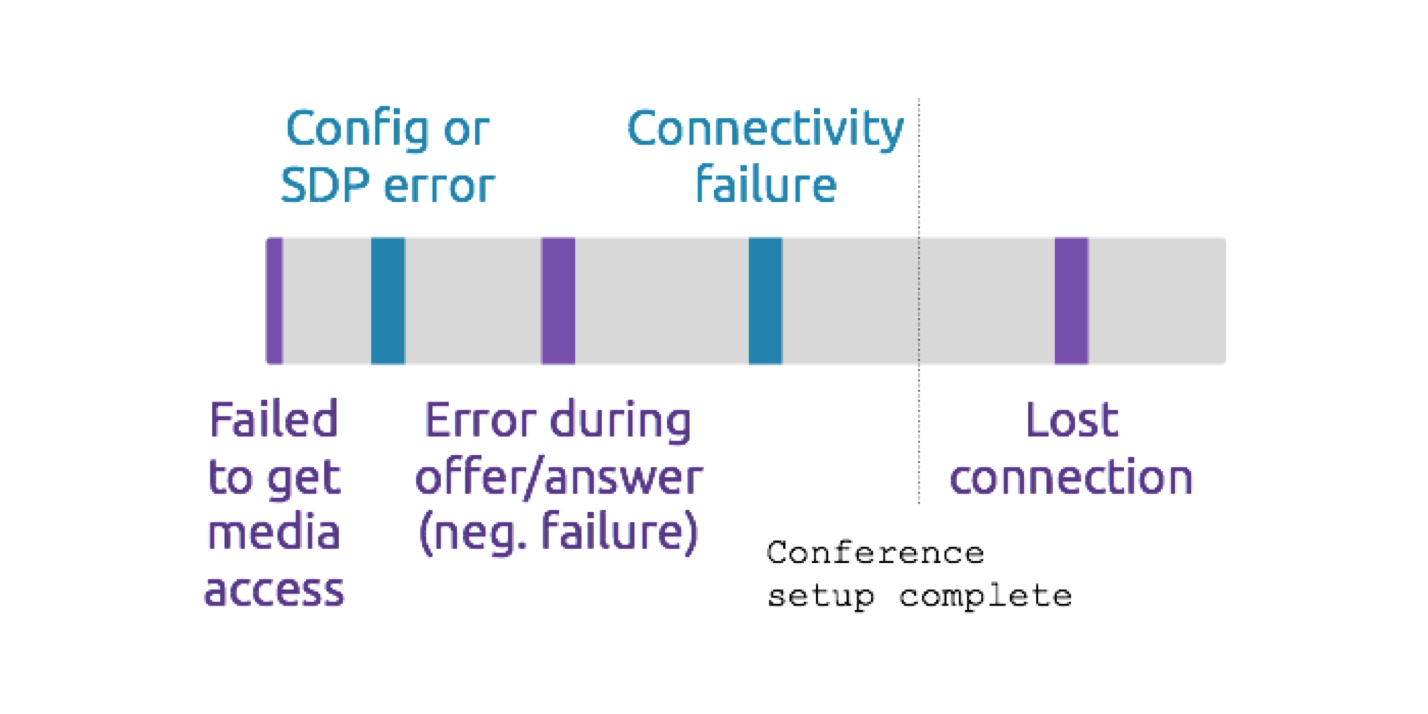

In the WebRTC services that we observe, the failure rate is on average 12%, i.e., roughly 1 in 8 sessions is never set up. Most failures (85%) come from the inability of an endpoint to traverse NATs or firewalls successfully. That problem can usually be fixed by deploying relay servers, and we already observe that 22% of the conferences need some kind of TURN relay server. About 9% of the conferences required TCP, which may not be the best transport for media quality but is better than failing to establish the session. However, it would be remiss not to point out that not all our customers use TURN/TCP or TURN/TLS. Ergo, the failure rate may still be indicative of not having the complete suite of relays in place.
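
As a rough illustration of what "the complete suite of relays" can look like on the client side, the sketch below builds an ICE server list that offers STUN plus TURN over UDP, TCP and TLS. The host name, credentials and the choice of port 443 for TLS are placeholders assumed for this example; the local `IceServer` type only mirrors the fields of the browser's `RTCIceServer` that are used here.

```typescript
// Minimal local type mirroring the browser's RTCIceServer fields used below.
interface IceServer {
  urls: string[];
  username?: string;
  credential?: string;
}

// Builds a server list covering STUN and TURN over UDP, TCP and TLS.
// Host, ports and credentials are assumptions for illustration only.
function buildIceServers(host: string, username: string, credential: string): IceServer[] {
  return [
    { urls: ["stun:" + host + ":3478"] },
    {
      urls: [
        "turn:" + host + ":3478?transport=udp", // preferred for media quality
        "turn:" + host + ":3478?transport=tcp", // fallback when UDP is blocked
        "turns:" + host + ":443?transport=tcp", // TLS, for restrictive firewalls
      ],
      username: username,
      credential: credential,
    },
  ];
}

const iceServers = buildIceServers("turn.example.com", "demo-user", "demo-secret");
// In a browser, the list would be passed as: new RTCPeerConnection({ iceServers })
```

Listing all three TURN transports lets ICE fall back from UDP to TCP and then to TLS, instead of failing the session outright.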

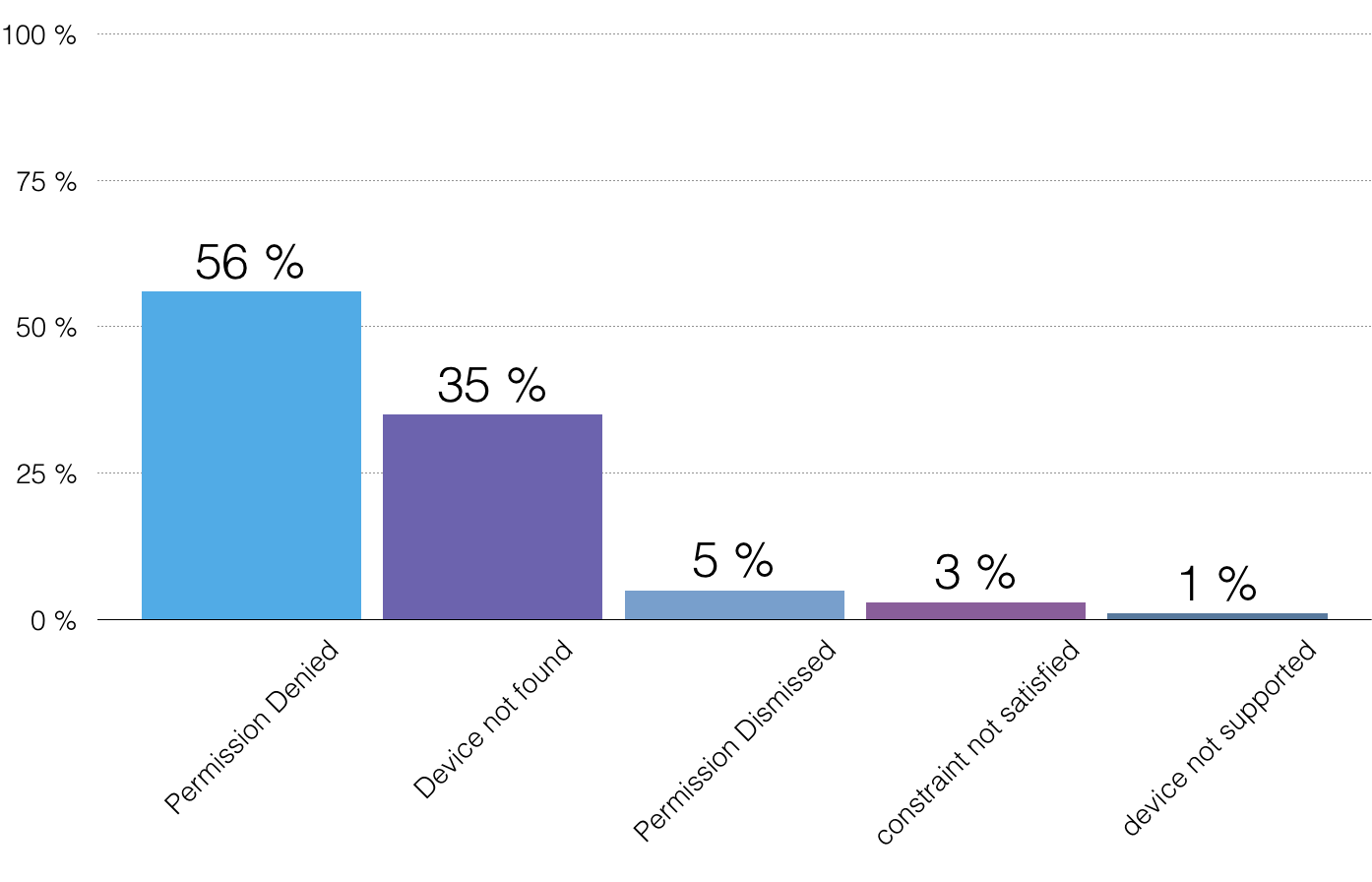

getUserMedia Errors

Another distinct failure reason (14%) is getUserMedia (gUM) errors, the inability to get access to users’ camera or microphone. The two main reasons for gUM errors are: permission denied by the user (56%) and device not found (35%).
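
To track these two causes separately in your own application, the error name reported by a rejected `getUserMedia()` promise can be mapped to a small set of categories. The sketch below is one possible mapping, not the one callstats.io uses; the category labels are invented for this example, and the legacy names are those reported by older Chrome versions.

```typescript
// Categories are labels made up for this sketch.
type GumFailure =
  | "permission-denied"
  | "device-not-found"
  | "device-in-use"
  | "constraints"
  | "other";

// Maps the `name` of a getUserMedia error to a category.
function classifyGumError(errorName: string): GumFailure {
  switch (errorName) {
    case "NotAllowedError":
    case "PermissionDeniedError": // legacy name
      return "permission-denied";
    case "NotFoundError":
    case "DevicesNotFoundError": // legacy name
      return "device-not-found";
    case "NotReadableError":
    case "TrackStartError": // legacy name
      return "device-in-use";
    case "OverconstrainedError":
    case "ConstraintNotSatisfiedError": // legacy name
      return "constraints";
    default:
      return "other";
  }
}

// In a browser, usage could look like:
// navigator.mediaDevices.getUserMedia({ audio: true })
//   .catch((err) => report(classifyGumError(err.name)));
// where `report` is a hypothetical logging function.
```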

Dropped Session

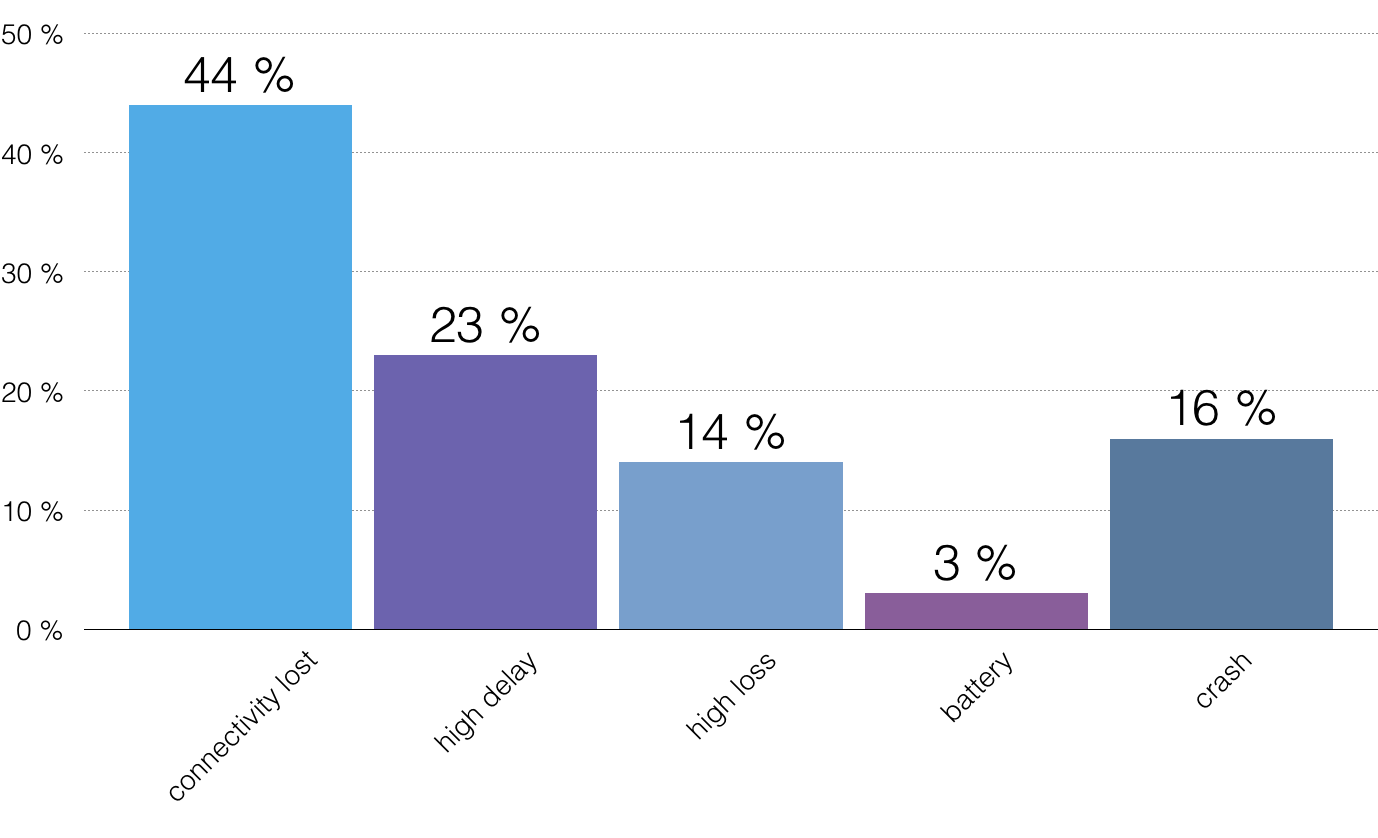

About 20% of the sessions drop after the conference is set up. The reasons for dropped sessions are different from those for failures during the conference setup phase. Forty-four percent of the dropped sessions occur because of a loss of Internet connection, which typically happens either when the connectivity is poor (i.e., high delays or high losses) or when the available connection disappears (especially in mobile environments). Crashes of screen sharing plugins or of the media pipeline (one-way audio/video) are another major reason for calls being dropped.

Session Churn

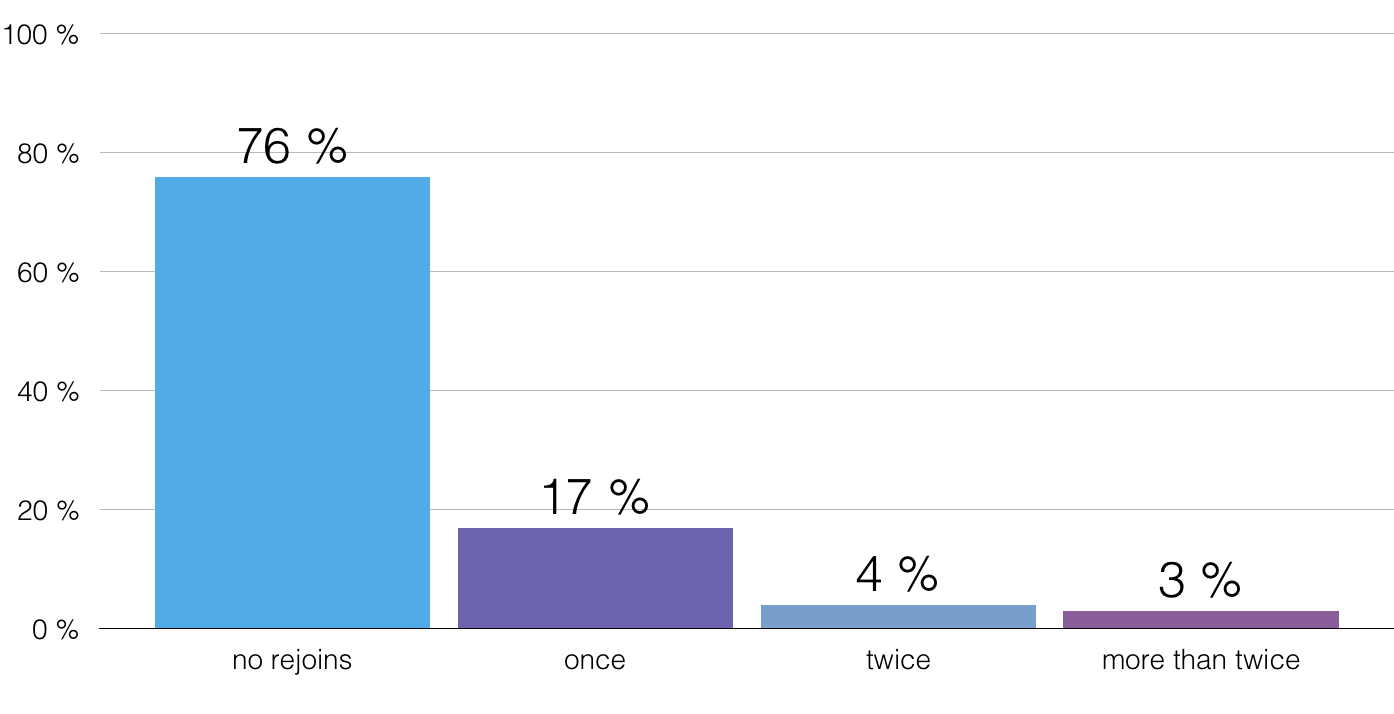

Churn occurs when a participant leaves and rejoins a conference within a short period of time. The major causes of session churn are a page reload, plugin failure, one-way audio or video, and loss of network connectivity. These sessions are distinct from conference drops because, in this case, the participant rejoins after a brief disruption. In 25% of sessions, at least one participant rejoins the conference.
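
One simple way to spot churn in your own logs is to look for a "leave" event followed by a "join" from the same participant within a time window. The sketch below assumes a hypothetical `PresenceEvent` log format and leaves the window length to the caller, since the text only speaks of "a short period of time".

```typescript
// One entry in a conference presence log (hypothetical format).
interface PresenceEvent {
  userId: string;
  type: "join" | "leave";
  atMs: number; // event timestamp, in ms
}

// Returns the IDs of participants who left and rejoined within windowMs.
function findChurners(events: PresenceEvent[], windowMs: number): string[] {
  const sorted = events.slice().sort((a, b) => a.atMs - b.atMs);
  const lastLeave: { [userId: string]: number } = {};
  const churners: string[] = [];
  for (let i = 0; i < sorted.length; i++) {
    const e = sorted[i];
    if (e.type === "leave") {
      lastLeave[e.userId] = e.atMs;
    } else if (Object.prototype.hasOwnProperty.call(lastLeave, e.userId)) {
      // A join that follows an earlier leave by the same participant.
      const gapMs = e.atMs - lastLeave[e.userId];
      if (gapMs <= windowMs && churners.indexOf(e.userId) === -1) {
        churners.push(e.userId);
      }
      delete lastLeave[e.userId];
    }
  }
  return churners;
}
```

A participant who leaves and never comes back, or who returns only after the window has passed, is not counted; that case corresponds to a dropped session rather than churn.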

We also often see that people start fixing media quality issues by pausing and unpausing video. Sometimes they also leave and rejoin a conference to try and fix any issues they might encounter when using WebRTC.

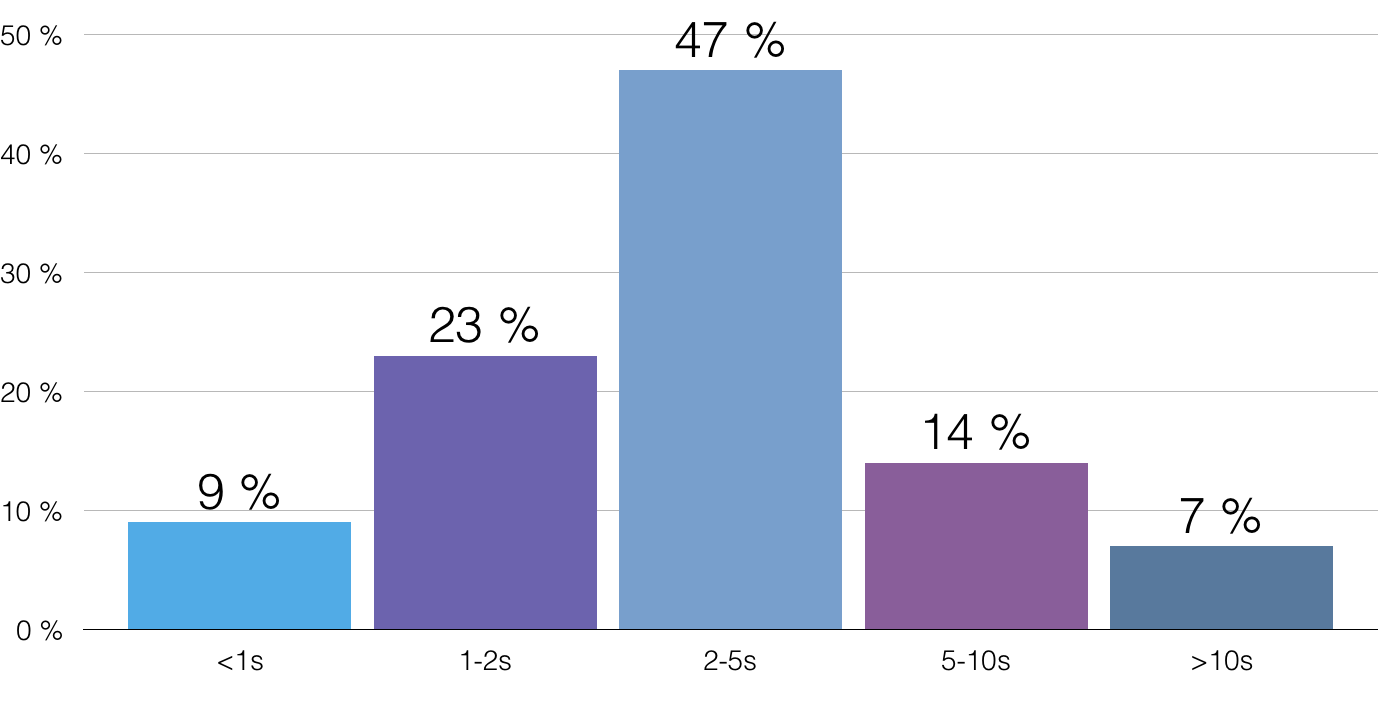

Setup times

Session establishment is the most critical part of a conference. While 88% of sessions are set up, the time it takes to set up a session is also an important metric. With the setup times sorted in decreasing order, the 80th percentile is less than 2 seconds and the 50th percentile is less than 4 seconds; in other words, the fastest 20% of sessions are set up in under 2 seconds and half of them in under 4 seconds. Lastly, we observe that 80% of the sessions are set up in 5 seconds or less.
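
If you want to compute comparable numbers for your own service, a nearest-rank percentile over the measured setup times is enough. Note that the sketch below uses the conventional ascending order (the p-th percentile is the value that p% of the samples do not exceed), which is an assumption of this example and not necessarily how the figures above were derived.

```typescript
// Nearest-rank percentile over samples sorted in ascending order.
// p is a percentage between 0 and 100.
function percentile(values: number[], p: number): number {
  if (values.length === 0) {
    throw new Error("percentile needs at least one sample");
  }
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Fraction of samples that are less than or equal to the limit.
function shareWithin(values: number[], limit: number): number {
  let count = 0;
  for (let i = 0; i < values.length; i++) {
    if (values[i] <= limit) {
      count++;
    }
  }
  return count / values.length;
}

// Made-up setup times in seconds, for illustration only.
const setupTimesSec = [1.2, 0.8, 3.5, 4.9, 2.1, 6.0, 1.7, 3.9, 2.8, 9.5];
const medianSetupSec = percentile(setupTimesSec, 50);
const shareUnderFiveSec = shareWithin(setupTimesSec, 5);
```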

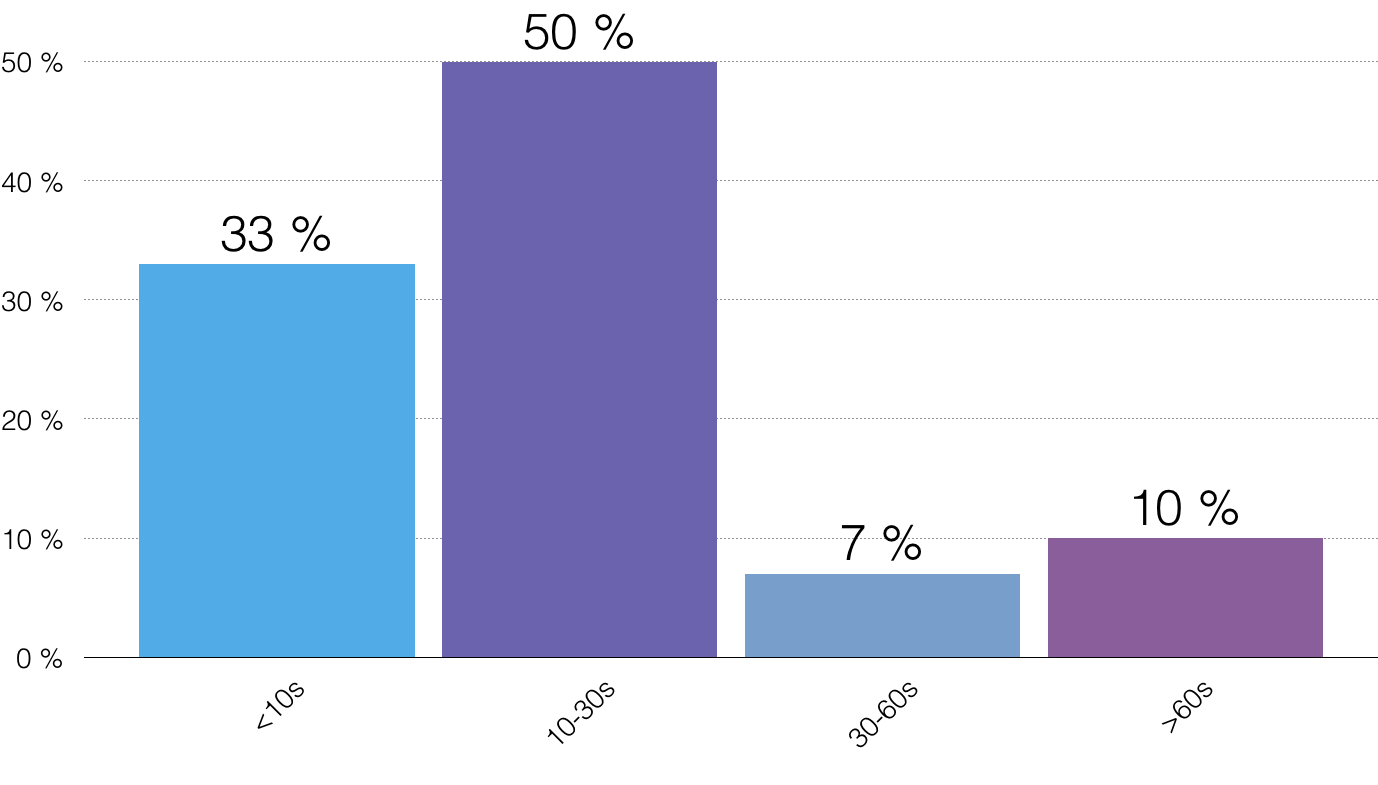

Failure times

As stated earlier, 12% of conferences fail, and most setup failures occur within the first 30 seconds of the individual session. Normally, a session starts when the user clicks the call button or on a page load. Sixty-seven percent of the failed sessions fail only after ten seconds, which suggests that people are very patient with WebRTC conference setup times. Usability expert Jakob Nielsen states that people tend to start looking for other things to do after ten seconds of waiting.

Also, these metrics only include data from browsers, and on a desktop it is perhaps easy to leave the conference tab open in the background and do something else in the meantime.

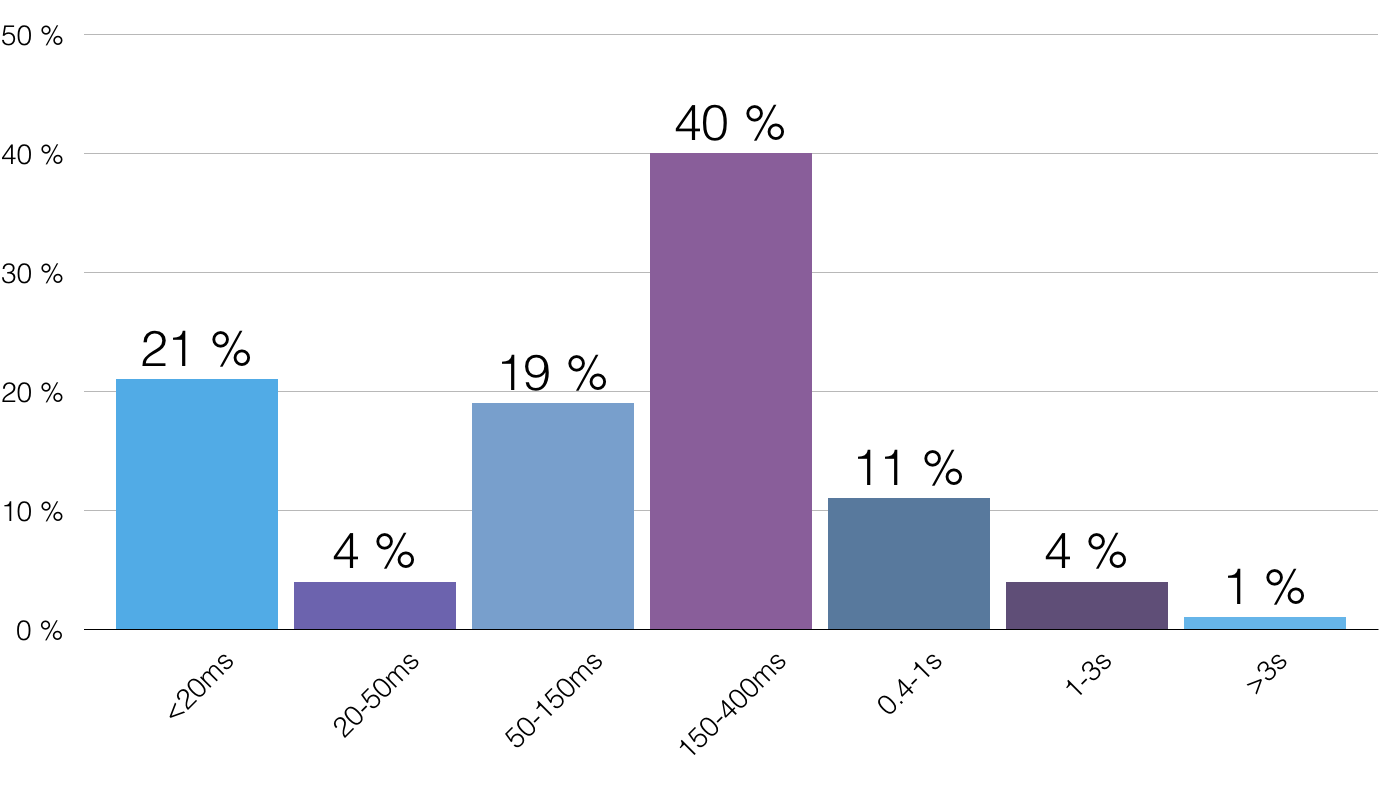

Network Latency

For real-time communication, users expect the end-to-end latency to be low, and we see a strong correlation between RTT values and objective quality that a user might report. Typically, round-trip time (RTT) is twice the one-way latency, assuming path symmetry. The ITU-T targets a one-way delay of less than 150 ms for conversational audio (RTT ≈ 300 ms) and less than 200 ms for video (RTT ≈ 400 ms). For example, latency of less than 150 ms tends to score close to “Excellent”, and latency greater than 500 ms close to “Poor” quality.

Forty-four percent of our WebRTC sessions have an RTT of less than 400 ms, which is good. We also observe that a fifth of the sessions have an average RTT of less than 20 ms, which indicates that the participants in these sessions are most likely located near each other.
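
The relationship between RTT and the delay targets can be checked with a few lines of code. This sketch simply halves the RTT (the path symmetry assumption from the text) and compares the result with the 150 ms and 200 ms targets quoted above; the names are illustrative.

```typescript
type MediaKind = "audio" | "video";

// One-way delay targets quoted in the text, in milliseconds.
const ONE_WAY_TARGET_MS: { audio: number; video: number } = {
  audio: 150,
  video: 200,
};

// Estimates one-way delay from RTT, assuming a symmetric path.
function oneWayDelayMs(rttMs: number): number {
  return rttMs / 2;
}

// True when the estimated one-way delay is below the target for the media kind.
function meetsDelayTarget(rttMs: number, kind: MediaKind): boolean {
  return oneWayDelayMs(rttMs) < ONE_WAY_TARGET_MS[kind];
}
```

For example, an RTT of 320 ms corresponds to an estimated one-way delay of 160 ms, which misses the audio target but is still within the video target.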

Lessons learned

After two years of analyzing WebRTC traffic and talking to our customers we have found three easy ways to enhance the user experience of a WebRTC application:

- First, ask for audio and video device permissions separately. Sometimes people get scared if the application requests more permissions than are needed, for example, asking for camera permission when the application can only do audio calls.

- Second, deploy TURN servers with UDP and TCP support. As stated above, most failures happen because of issues with NAT or firewalls, and setting up a TURN server is a simple fix to most failures.

- Third, make sure your WebRTC application can detect crashes and re-establish connections when a crash happens. In particular, keep an eye on media pipeline and screen sharing plugin crashes.
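
The first and third points can be sketched as two small helpers: one that requests only the devices a call type needs, and one that decides how to react to a change in ICE connection state. The call types and the mapping from state to action are assumptions made for this example; a real application would tune them to its own needs.

```typescript
type CallType = "audio-only" | "audio-video";

// Shape matches the audio/video flags accepted by getUserMedia().
interface CallConstraints {
  audio: boolean;
  video: boolean;
}

// Request the camera only when the call actually uses video.
function constraintsFor(callType: CallType): CallConstraints {
  return { audio: true, video: callType === "audio-video" };
}

// Values of RTCPeerConnection.iceConnectionState.
type IceState =
  | "new"
  | "checking"
  | "connected"
  | "completed"
  | "disconnected"
  | "failed"
  | "closed";

type RecoveryAction = "none" | "wait" | "restart-ice" | "rejoin";

// One possible recovery policy (an assumption of this sketch).
function recoveryAction(state: IceState): RecoveryAction {
  switch (state) {
    case "disconnected":
      return "wait"; // may recover on its own after a short outage
    case "failed":
      return "restart-ice"; // e.g. createOffer({ iceRestart: true })
    case "closed":
      return "rejoin"; // the connection is gone; set up a new one
    default:
      return "none";
  }
}
```

In a browser, `recoveryAction` would typically be called from an `iceconnectionstatechange` handler on the peer connection.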

WebRTC is still growing. With new browsers joining the fray, constantly evolving standards, and an increasing diversity of applications, one might expect to see more errors. However, our data actually indicates that the metrics are getting better over time. We hope the regular updates and proposals to improve ICE and WebRTC objects will continue to improve these averages.

{“authors”, [“Lasse Lumiaho“, “Varun Singh“]}
