I was working on a project where I needed to save a bunch of webcam images over time in the background using JavaScript. I searched around for some code I could quickly copy and found they all have the same general approach – attach a getUserMedia stream to a video element, copy the image from that element to a canvas, and then use one of the many canvas methods to save the image. I didn’t like the reliance on a canvas element. These approaches all also assumed you were displaying a stream, which I didn’t necessarily want to do.

So, I set out to experiment with some more modern approaches, including:

- createImageBitmap

- OffscreenCanvas

- ImageCapture

- MediaStreamTrackProcessor with ReadableStreams

The good news is there are a bunch of ways to save an image from your webcam in JavaScript. Let’s see which method is best.

Use cases

Remember early in the pandemic when you thought you could avoid Covid by washing your hands and by not touching your face? I was reviewing my “Stop touching your face using a browser and TensorFlow.js” post (facetouchmonitor.com) and thinking of other non-pandemic use cases for webcam face meshes. One is this WebcamEyeContact experiment I use during presentations to remind me to look at the camera, because you should look at your webcam, not your monitor, during Zoom calls and livestreams if you don’t want to appear shady. If you want to develop your own machine learning model then you need to collect training images. This got me investigating how easy it is to periodically save webcam images during a video call. What if I wanted to put this capability into a Chrome extension and have it run in the background (with the user’s knowledge)? Would I need to have an active self-view? I found the typical approaches did not cover these cases.

More typical use cases include user profiles where you can use your webcam to snap an image as an alternative to uploading a picture. Some sites send a snapshot as a “knock” before the host lets you into a call. There are some video calling services that let you take a snapshot of a screen share for notes. Webcam-based photo booths are another use case.

Comparing approaches

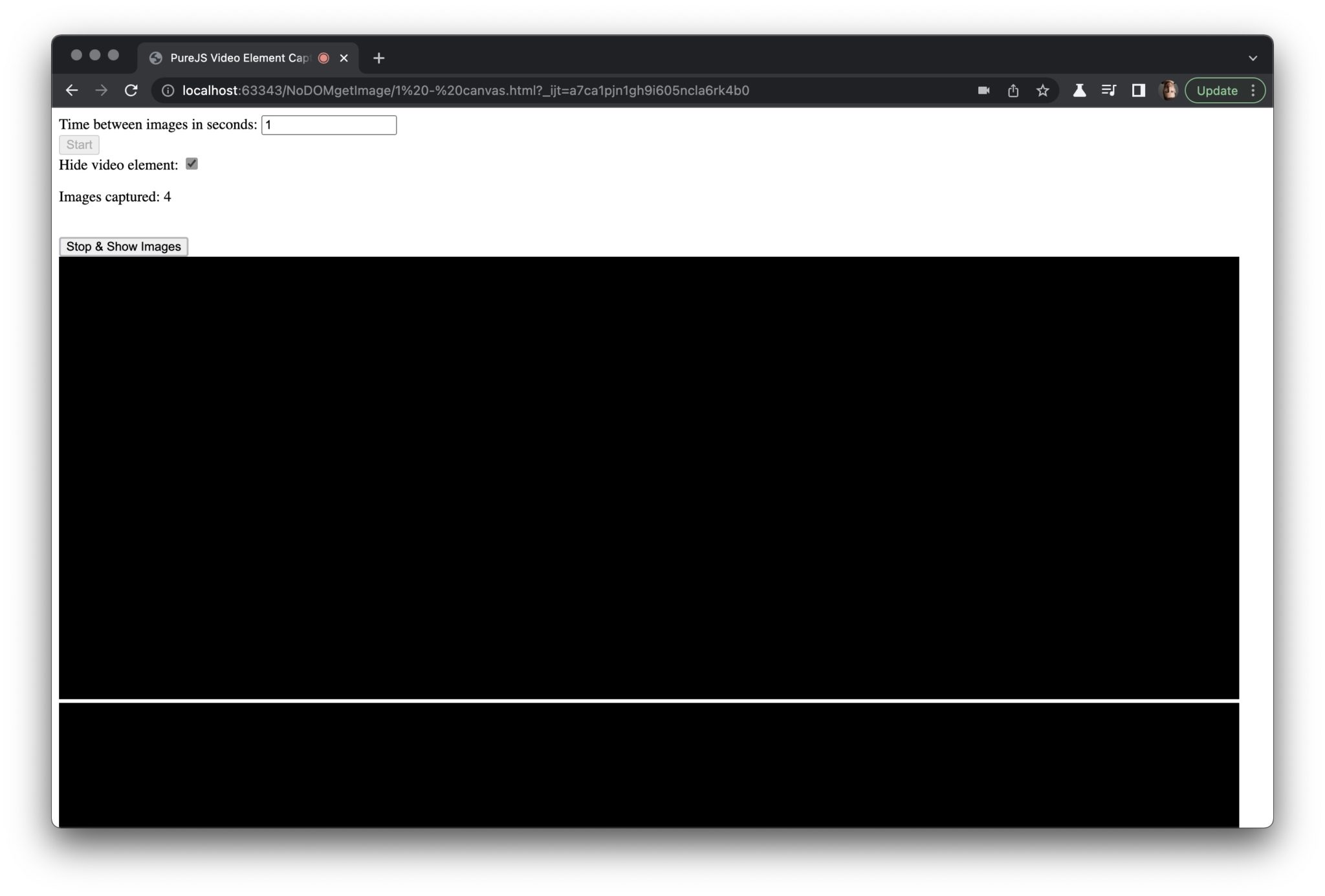

I built a basic single-page template to compare the approaches. A few things to note about my examples:

- My focus was on saving images from the webcam without having to view them right away

- I am saving the images to an array because this was quick – in a real app you would want to send them to a database or save them locally to indexedDB

- In most cases I am grabbing the image at regular intervals using a setInterval – be careful of throttling with this approach in real apps

- I have a separate loop to display images for verification – I’m not going to describe that below but you can see it in the code

- I call out the main code highlights in each section below – see the codepen examples or the repo for the full code

- Click on the “Hide video element” checkbox to see what happens when the webcam stream isn’t displayed
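For the note above about storing images somewhere more durable than an array, here is a minimal sketch of writing Blobs to IndexedDB. The database name `webcam-snapshots`, the store name `snapshots`, and both helper functions are hypothetical and not part of the example code; the `idb` parameter exists only so the functions can be exercised outside a browser.

```javascript
// Hypothetical names, not taken from the example code
const DB_NAME = "webcam-snapshots";
const STORE_NAME = "snapshots";

// Open (or create) the database; resolves with an IDBDatabase
function openSnapshotDb(idb = indexedDB) {
  return new Promise((resolve, reject) => {
    const request = idb.open(DB_NAME, 1);
    // Runs on first open: create a store with auto-generated keys
    request.onupgradeneeded = () => {
      request.result.createObjectStore(STORE_NAME, { autoIncrement: true });
    };
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}

// Store one Blob along with its capture time
function saveSnapshot(db, blob, capturedAt = Date.now()) {
  return new Promise((resolve, reject) => {
    const tx = db.transaction(STORE_NAME, "readwrite");
    tx.objectStore(STORE_NAME).add({ blob, capturedAt });
    tx.oncomplete = () => resolve();
    tx.onerror = () => reject(tx.error);
  });
}
```

With something like this in place, `storage.push(blob)` could become `saveSnapshot(db, blob)` after a one-time `const db = await openSnapshotDb()`. This sketch assumes Blobs; as far as I know, ImageBitmap objects cannot be written to IndexedDB directly and would need to be encoded first.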

Each example requires the webcam stream – if you’re new to WebRTC you can get that with:

```javascript
stream = await navigator.mediaDevices.getUserMedia({video: true});
```

Old-school way – canvas.toBlob

The more traditional method of saving an image looks something like this:

```javascript
videoElem.srcObject = stream;

const canvas = document.createElement("canvas");
const ctx = canvas.getContext("2d");

// (data check on the interval value), then convert that value from seconds
const interval = (parseInt(intervalSec.value) >= 1 ? intervalSec.value * 1 : 1) * 1000;

captureInterval = setInterval(async () => {
    // I am not assuming the source video has fixed dimensions
    canvas.height = videoElem.videoHeight;
    canvas.width = videoElem.videoWidth;
    ctx.drawImage(videoElem, 0, 0);

    canvas.toBlob(blob => {
        console.log(blob);
        storage.push(blob);
    });
}, interval);
```

After you get the getUserMedia stream, just attach it to a video element. Then you can use a canvas to grab that video element image and the canvas.toBlob() method to convert it to a format you can send/save.
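Two pieces of that snippet can be pulled into small helpers. The sketch below is one possible way to do it, not the code from the example: `intervalMsFromInput` and `canvasToBlob` are hypothetical names, and the JPEG type and 0.9 quality are assumed defaults (the snippet above uses the browser default, PNG).

```javascript
// Hypothetical helper: turn the interval input (seconds) into milliseconds,
// falling back to 1 second for empty, non-numeric, or sub-second values
function intervalMsFromInput(rawValue) {
  const seconds = Number(rawValue);
  return (Number.isFinite(seconds) && seconds >= 1 ? seconds : 1) * 1000;
}

// Hypothetical helper: promise wrapper around the callback-based canvas.toBlob()
function canvasToBlob(canvas, type = "image/jpeg", quality = 0.9) {
  return new Promise((resolve, reject) => {
    canvas.toBlob(
      // toBlob passes null when the image could not be created
      blob => (blob ? resolve(blob) : reject(new Error("toBlob produced no image"))),
      type,
      quality
    );
  });
}
```

Inside the interval callback this would read `storage.push(await canvasToBlob(canvas));`, which keeps the capture and the save in one async flow.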

Full example

Good

- The good news is this works on every browser.

Bad

- The bad side of this approach is that you need to display the element as you want to capture it. If you don’t display the element you’ll just get a black frame.

If your GUI design doesn’t allow you to display what you want to capture, then you are out of luck. The visible canvas is also subject to throttling when you remove focus from the tab (e.g. to open another app). In my use case, my minimum interval between pictures is 1 second, so throttling doesn’t matter. However, if you were trying to grab images more frequently than that, then it probably would.

OffscreenCanvas

To avoid throttling you could move the canvas shown above to an OffscreenCanvas on Chromium browsers:

```javascript
const canvas = new OffscreenCanvas(1,1); // needs an initial size
const ctx = canvas.getContext("2d");
//...
canvas.height = videoElem.videoHeight;
canvas.width = videoElem.videoWidth;
ctx.drawImage(videoElem, 0, 0);
const bitmap = canvas.transferToImageBitmap();
storage.push(bitmap);
```

OffscreenCanvas has slightly different methods than the normal canvas element. convertToBlob() does the same job as canvas.toBlob() in my original example, though it returns a Promise instead of taking a callback. In the example above, I used transferToImageBitmap(), which is the equivalent of calling createImageBitmap() on the canvas. Working with ImageBitmaps is slightly more performant:

“An ImageBitmap object represents a bitmap image that can be painted to a canvas without undue latency.”

source: https://html.spec.whatwg.org/multipage/imagebitmap-and-animations.html#imagebitmap
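If you would rather end up with a Blob than an ImageBitmap, convertToBlob() can take the place of the transfer step. A minimal sketch, assuming JPEG output at 0.9 quality; the function name and the `CanvasClass` parameter are hypothetical, and the latter is there only so the function can be run without a browser.

```javascript
// Hypothetical helper: draw the current frame to an OffscreenCanvas and
// encode it as a compressed Blob
async function captureFrameAsBlob(source, width, height, CanvasClass = OffscreenCanvas) {
  const canvas = new CanvasClass(width, height);
  const ctx = canvas.getContext("2d");
  ctx.drawImage(source, 0, 0, width, height);
  // convertToBlob() returns a Promise, unlike the callback-based toBlob()
  return canvas.convertToBlob({ type: "image/jpeg", quality: 0.9 });
}
```

In the interval callback this could be called as `storage.push(await captureFrameAsBlob(videoElem, videoElem.videoWidth, videoElem.videoHeight));`.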

Full Example

Good

- More performant if you are doing something complex with the canvas (not what I am doing here)

- OffscreenCanvas works with Web Workers

Bad

- You need to display the video as you want to capture it in a DOM element

- Only works on Chromium browsers; it has been perpetually behind a flag on Firefox and has bugs

createImageBitmap

The above method requires creating a canvas. If you are manipulating that image this approach makes sense, but what if you want to just capture the image and send it off as soon as possible? The createImageBitmap method will let you do that without all the extra canvas logic. All you need to do is assign your stream to a video element and:

```javascript
const bitmap = await createImageBitmap(videoElem);
storage.push(bitmap);
```
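createImageBitmap also accepts an options object, which can be handy if you are keeping many frames in memory and only need thumbnails. The sketch below is an illustration under assumptions: `thumbnailOptions` and `captureThumbnail` are hypothetical helpers, the 320-pixel default is arbitrary, and support for the resize options may vary between browsers.

```javascript
// Hypothetical helper: compute createImageBitmap() resize options that fit
// the frame inside maxWidth while preserving the aspect ratio
function thumbnailOptions(srcWidth, srcHeight, maxWidth = 320) {
  // Unknown size or already small enough: no resizing
  if (!srcWidth || !srcHeight || srcWidth <= maxWidth) return {};
  const scale = maxWidth / srcWidth;
  return {
    resizeWidth: maxWidth,
    resizeHeight: Math.round(srcHeight * scale),
    resizeQuality: "medium",
  };
}

// Capture a downscaled bitmap from the video element
async function captureThumbnail(videoElem, maxWidth = 320) {
  const options = thumbnailOptions(videoElem.videoWidth, videoElem.videoHeight, maxWidth);
  return createImageBitmap(videoElem, options);
}
```

When a stored bitmap is no longer needed, calling `bitmap.close()` releases its memory without waiting for garbage collection.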

Full Example

Good

- This also works on every browser

- Fewer lines of code than the above

- This can be used in a worker

Bad

- DOM element needed

imageCapture

iOS and Android have sophisticated camera capture APIs that take advantage of the phone’s hardware. It turns out there is an API for the web that does that too – the Image Capture API. It works with any webcam too.

The API requires a getUserMedia call first, then you pass the track to the ImageCapture API to grabFrame() or takePhoto():

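```javascript
// Assumes `stream` comes from the getUserMedia call shown earlier
const [track] = stream.getVideoTracks();
const imageCapture = new ImageCapture(track);

const blob = await imageCapture.takePhoto()
    .catch(err => console.error(err));
// It took a few seconds for the camera to get ready
if (blob) {
    storage.push(blob);
}
```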

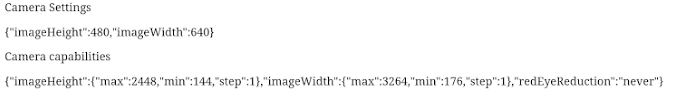

grabFrame will return an image based on the current MediaStreamTrack settings – just like the canvas method earlier. takePhoto uses a different process that requests a still image from the camera hardware with its own separate photoSettings configuration. My example code below includes an output of the default settings and a list of capabilities by calling imageCapture.getPhotoSettings() and imageCapture.getPhotoCapabilities() respectively. Beyond the capture constraint options you set on the initial getUserMedia call, these capabilities can include resolution, red-eye reduction, and flash options.
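To illustrate how the capabilities and photoSettings fit together, here is a hedged sketch that requests a still at roughly a given width, kept within the range the camera reports. `clampToRange` and `takePhotoAtWidth` are hypothetical helpers, and `ImageCaptureClass` is injectable only so the logic can run outside a browser.

```javascript
// Hypothetical helper: snap a requested value onto a {min, max, step} range
// as reported by getPhotoCapabilities()
function clampToRange(requested, range) {
  const { min, max, step } = range;
  const clamped = Math.min(Math.max(requested, min), max);
  if (!step) return clamped; // no step reported: any value in range is fine
  return Math.min(min + Math.round((clamped - min) / step) * step, max);
}

// Take a still photo at (roughly) the requested width
async function takePhotoAtWidth(track, requestedWidth, ImageCaptureClass = ImageCapture) {
  const imageCapture = new ImageCaptureClass(track);
  const capabilities = await imageCapture.getPhotoCapabilities();
  const settings = {};
  if (capabilities.imageWidth) {
    settings.imageWidth = clampToRange(requestedWidth, capabilities.imageWidth);
  }
  // takePhoto() resolves with a Blob
  return imageCapture.takePhoto(settings);
}
```

Leaving `settings` empty falls back to the camera defaults, which is what a plain `takePhoto()` call does.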

Full Example

Good

- Higher quality images since it is using a still capture mode (with takePhoto)

- Does not require any video DOM element, so you can show your user whatever you like or nothing

- The takePhoto method also allows you to capture an image at a different resolution than your getUserMedia stream. By default, this will take an image using the camera’s maximum still image capture resolution, which may be higher than its video capture resolution.

- Red-eye and flash settings

Bad

- Chromium browsers only

- takePhoto reconfigures the camera, which could interrupt the stream for several seconds

To the last point – this doesn’t seem to be a very practical method if you need a short interval between snapshots. In my test on a new Pixel 6XL, the video stream was very slow when set to 1 capture per second.

In addition, I find it kind of strange a still image API uses a video stream track to start, but maybe that was the cleanest way for the browser to handle user permissions and get access to the camera hardware.

Considerations

I got a strange DOMException: platform error when I tried to make repeated takePhoto captures before the camera was ready in my 1-second setInterval. It looks like you could look for track unmute events to monitor for when the image capture is done and the normal video stream mode has started.
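One way to avoid overlapping captures is sketched below. This is an untested idea rather than something from the example code: `createGuardedCapture` and `waitForUnmute` are hypothetical helpers, and whether the track actually fires mute and unmute around a takePhoto call is an assumption based on the observation above.

```javascript
// Hypothetical wrapper: skip a capture while the previous one is still running
function createGuardedCapture(takePhoto) {
  let busy = false;
  return async function capture() {
    if (busy) return null; // previous takePhoto() has not settled yet
    busy = true;
    try {
      return await takePhoto();
    } catch (err) {
      console.error(err);
      return null;
    } finally {
      busy = false;
    }
  };
}

// Hypothetical helper: resolve once the track is delivering frames again
function waitForUnmute(track) {
  if (!track.muted) return Promise.resolve();
  return new Promise(resolve => {
    track.addEventListener("unmute", () => resolve(), { once: true });
  });
}
```

The wrapper would be created once with `const capture = createGuardedCapture(() => imageCapture.takePhoto());` and then called from the setInterval callback, storing the result only when it is not null. Awaiting `waitForUnmute(track)` before each call is an optional extra check.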

The getPhotoSettings defaults return the video stream defaults, not the actual higher resolution of the still image capture (when you don’t specify any settings).

The grabFrame method returns an ImageBitmap instead of a blob. They may be better or worse depending on how you want to store and display the image. Speaking of bitmaps, let’s look at another way to create those with createImageBitmap.
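If you have an ImageBitmap but need a Blob, for example to upload or store it, one option is a bitmaprenderer context, which avoids a 2D draw call. A minimal sketch, assuming a browser with OffscreenCanvas; `bitmapToBlob` is a hypothetical helper and `CanvasClass` is injectable only so it can be run without a browser.

```javascript
// Hypothetical helper: encode an ImageBitmap as a Blob.
// Note: transferFromImageBitmap() hands the pixels to the canvas, so the
// bitmap is detached (unusable) afterwards.
async function bitmapToBlob(bitmap, type = "image/png", CanvasClass = OffscreenCanvas) {
  const canvas = new CanvasClass(bitmap.width, bitmap.height);
  const ctx = canvas.getContext("bitmaprenderer");
  ctx.transferFromImageBitmap(bitmap);
  return canvas.convertToBlob({ type });
}
```

If a base64 string is what you need, the resulting Blob can be passed to `FileReader.readAsDataURL()`.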

MediaStreamTrackProcessor / ReadableStreams

This last approach uses MediaStreamTrack Insertable Media Processing using Streams. That is a mouthful, but it leverages some new W3C draft APIs made for WebRTC Insertable Streams / Breakout Box / WebRTC Encoded Transform / whatever they are calling the mechanisms today that let you read, manipulate, and write raw video frame data. We have covered Insertable Streams a few times because they let you do interesting things with your media in a performant way. They can be leveraged here for grabbing an image from the camera.

I actually started out making a TransformStream. I didn’t want to transform anything, but I thought I needed a sink, so I ended up making a WritableStream that reads every frame and saves them every so often here: 6-writableCreateImageBitmap.html. However, in this use case, I don’t need to process every frame and I shouldn’t have to create a stream sink anywhere (like you do with a TransformStream). After reviewing ReadableStream more closely, I found a way to do this just using the ReadableStream and nothing else.

First we get our stream, grab the video track, then turn it into a readable stream using the MediaStreamTrackProcessor:

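```javascript
stream = await navigator.mediaDevices.getUserMedia({video: true});
const [track] = stream.getVideoTracks();
// The spec'd constructor takes an init dictionary rather than a bare track
const processor = new MediaStreamTrackProcessor({track});
// getReader() is synchronous, so no await is needed
const reader = processor.readable.getReader();
```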

Then we make a function that reads the frame and saves it.

```javascript
async function readFrame() {
    const {value: frame, done} = await reader.read();

    // value is the frame object
    if (frame) {
        const bitmap = await createImageBitmap(frame);
        console.log(bitmap);
        storage.push(bitmap);
        imageCountSpan.innerText++;
        frame.close();
    }
    if (done)
        clearInterval(captureInterval);
}
```

Then all we need is a simple setInterval to read those frames every so often:

```javascript
const interval = (parseInt(intervalSec.value) >= 1 ? intervalSec.value * 1 : 1) * 1000;
captureInterval = setInterval(async () => await readFrame(), interval);
```

Unlike the other approaches where the browser was doing some level of rendering, this approach takes the raw video frame right from the video decoder. However, there are some caveats with this since the frame is treated as a protected element. I found you can’t read more than 3 frames without the reader.read() freezing. The Streams API handles queuing backpressure to make sure the stream buffer doesn’t overflow. There are some ways to adjust this, but for this use case we don’t ever need more than one frame at a time. To keep our buffer empty, we call frame.close() when we’re done with the frame to clear the queue.
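Since an unclosed frame can stall the reader, it may be worth making sure frame.close() runs even when the conversion fails. The sketch below is a variation on the read function, not the code from the example: `grabBitmap` is a hypothetical name and the `toBitmap` parameter is there only so the logic can be run without a browser.

```javascript
// Hypothetical variant of the read step: always release the frame, even if
// the bitmap conversion throws
async function grabBitmap(reader, toBitmap = createImageBitmap) {
  const { value: frame, done } = await reader.read();
  if (done || !frame) return null; // stream ended, nothing to convert
  try {
    return await toBitmap(frame);
  } finally {
    frame.close(); // frees the frame so the next read() is not blocked
  }
}
```

The caller would store the result when it is not null, and could clear the interval when it is.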

Also, frames aren’t serializable objects. You can’t save the frame anywhere else. That’s why we need to convert it to a new bitmap object.

Full Example

Good

- No DOM element or canvas needed

- Designed to run in a worker

- Resource-efficient

Bad

- MediaStreamTrackProcessor is only supported in Chromium, though all the browsers do support the Streams API

Which should you use?

If you want to use the same code on every browser then your options are limited: canvas.toBlob if you need to display a canvas anyway, or the createImageBitmap approach if you don’t. Either way, you are going to need to render the video before you capture it.

As of January 18, 2022, capturing from a rendered video element is the only method that is universally supported, but make sure to check for browser updates.

If you are ok with Chromium-only for now and want the best image quality, then ImageCapture’s takePhoto() makes the most sense. Just be aware of the interruption it causes and have the UI deal with it if you are displaying a video stream from the camera. If that is a problem, then use the grabFrame method instead.

Personally, I like the ReadableStreams approach over grabFrame. I like the idea of getting the raw video frames and being able to do what you want with them. That is overkill for this application, but this approach is important to understand if you want to get into WebCodecs.
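The decision above can be expressed as simple feature detection. This is a sketch of one possible ordering that follows the preferences in this section; `pickCaptureMethod` and the strings it returns are hypothetical, and `scope` defaults to the global object so it can also be called from a worker.

```javascript
// Hypothetical helper: pick a capture approach based on what the current
// global scope exposes. `needStill` prefers ImageCapture for still quality.
function pickCaptureMethod(scope = globalThis, needStill = false) {
  if (needStill && "ImageCapture" in scope) return "ImageCapture.takePhoto";
  if ("MediaStreamTrackProcessor" in scope) return "MediaStreamTrackProcessor";
  if ("ImageCapture" in scope) return "ImageCapture.grabFrame";
  if ("createImageBitmap" in scope) return "createImageBitmap";
  return "canvas.toBlob"; // works everywhere, but needs a rendered video element
}
```

Detection only says an API exists, not that it behaves well on a given device, so the takePhoto caveats above still apply.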

{“author”: “chad hart“}

Is there a way to send the bitmap image data as a blob without drawing it on a canvas again?

Hi Przemyslaw – can you clarify what you are trying to do? You want the data saved as a blob, but you don’t want to render it as a canvas during the initial frame capture?

Love it! Tho, I can’t really use this as im not able to convert the ImageBitmap to something useful like base64 😀

How do I eg. do this

const bitmap = canvas.toDataURL();

instead of this:’

const bitmap = canvas.transferToImageBitmap();