New coturn project leads Gustavo Garcia and Pavel Punsky give an update on the popular TURN server project, what’s new in STUN and TURN standards, and the roadmap for the project

Search Results for: turn

coTURN: the open-source multi-tenant TURN/STUN server you were looking for

Last year we interviewed Oleg Moskalenko and presented the rfc5766-turn-server project, which is a free, open-source, and extremely popular implementation of a TURN and STUN server. A few months later we even discovered Amazon is using this project to power its Mayday service. Since then, a number of features beyond the original RFC 5766 have been […]

The Open Source rfc5766-turn-server Project – Interview with Oleg Moskalenko

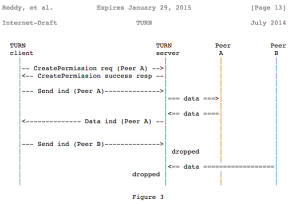

As Reid previously introduced in his An Intro to WebRTC’s NAT/Firewall Problem post, NAT traversal is often one of the more mysterious areas of WebRTC for those without a VoIP background. When two endpoints/applications behind NAT wish to exchange media or data with each other, they use “hole punching” techniques in order to discover a direct communication […]

Power-up getStats for Client Monitoring

WebRTC’s peer connection includes a getStats method that provides a variety of low-level statistics. Basic apps don’t really need to worry about these stats but many more advanced WebRTC apps use getStats for passive monitoring and even to make active changes. Extracting meaning from the getStats data is not all that straightforward. Luckily return author […]

WebRTC Plumbing with GStreamer

GStreamer is one of the oldest and most established libraries for handling media. As a core media handling element in Linux and WebKit that was launched near the turn of the century, it is not surprising that many early WebRTC projects use various pieces of it. Today, GStreamer has expanded options for helping developers plumb […]